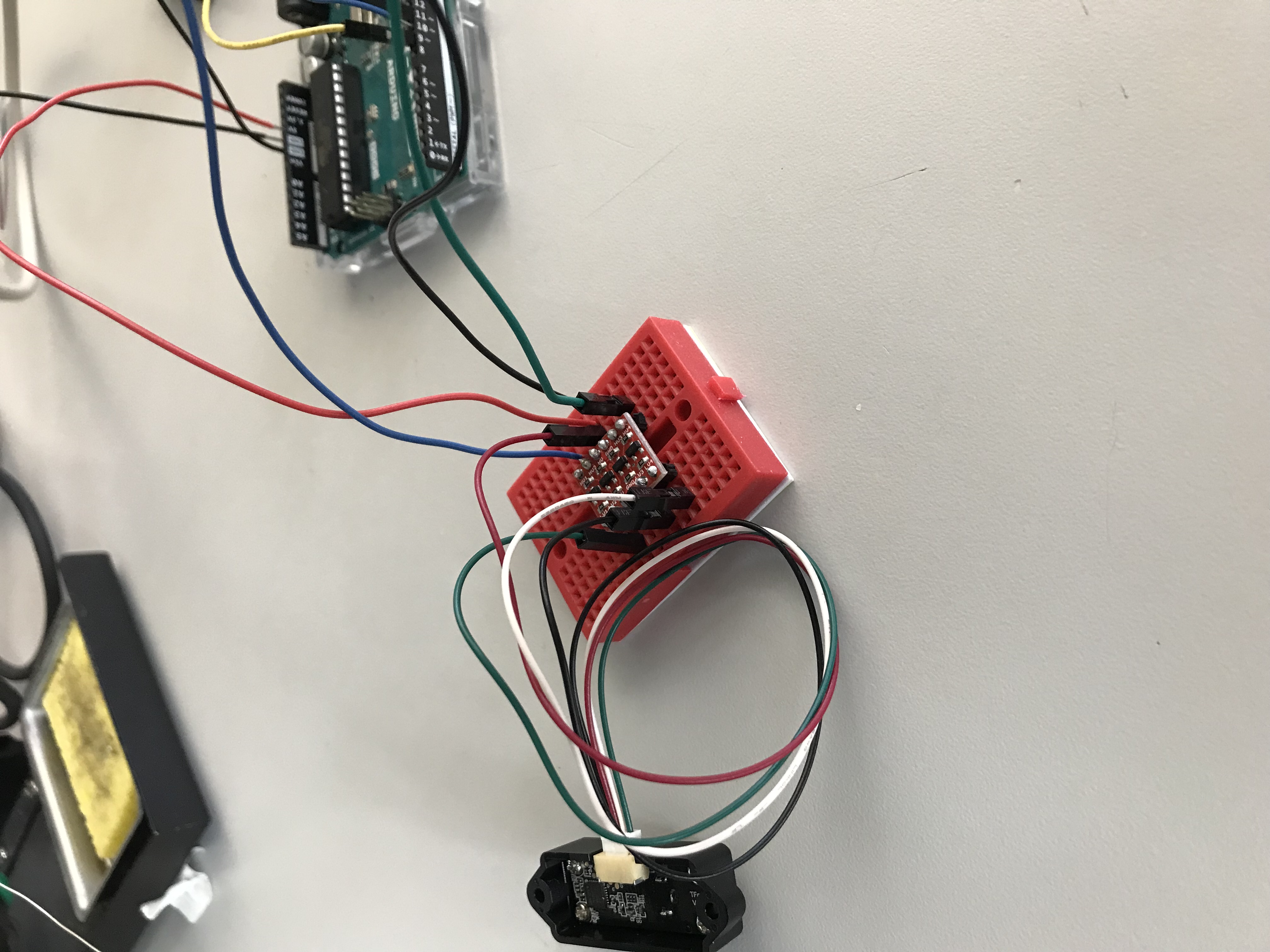

This week, I couldn’t be there in person for the demo due to COVID, but I was still able to work on the project remotely thanks to my Arduino at home. I could also join Zoom during lab time, so I was able to help my teammates with running the full pipeline. I’ve been mostly debugging with our team and helping come up with new ideas to improve our pipeline. I was considering setting up some sort of sound system to guide the user through the locking and pairing procedure. Also, I was able to solder and build the lidar circuit when I got out of quarantine on Friday, and got it running with some test Arduino code from this repository: https://github.com/TFmini/TFmini-Arduino/blob/master/TFmini_Arduino_SoftwareSerial/TFmini_Arduino_SoftwareSerial.ino. Using that sketch fixed a checksum issue I was having with the basic reading code that shipped with the TFmini library.
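For context on the checksum issue, here is a minimal sketch of how a TFmini serial frame can be validated. It is not the code from the linked sketch or from the TFmini library. It assumes the standard 9-byte frame: two `0x59` header bytes, distance low/high, strength low/high, two further bytes, and a final checksum equal to the low 8 bits of the sum of the first eight bytes. The names `TFminiReading` and `parseTFminiFrame` are hypothetical, and the centimeter unit is an assumption based on the sensor's default output mode.

```cpp
#include <cstddef>
#include <cstdint>

// One decoded measurement (hypothetical struct, for illustration only).
struct TFminiReading {
    uint16_t distance_cm;  // assumed to be centimeters (default output mode)
    uint16_t strength;     // signal strength reported by the sensor
};

// Returns true and fills `out` only if the 9-byte frame starts with the
// 0x59 0x59 header and its last byte matches the computed checksum.
bool parseTFminiFrame(const uint8_t frame[9], TFminiReading &out) {
    if (frame[0] != 0x59 || frame[1] != 0x59) {
        return false;  // not aligned on a frame boundary
    }

    // Checksum: low 8 bits of the sum of bytes 0..7.
    uint8_t sum = 0;
    for (size_t i = 0; i < 8; ++i) {
        sum = static_cast<uint8_t>(sum + frame[i]);
    }
    if (sum != frame[8]) {
        return false;  // corrupted or misaligned frame, discard it
    }

    // Both values are sent little-endian: low byte first, then high byte.
    out.distance_cm = static_cast<uint16_t>(frame[2] | (frame[3] << 8));
    out.strength = static_cast<uint16_t>(frame[4] | (frame[5] << 8));
    return true;
}
```

On the Arduino, this kind of function would be called after reading nine bytes from the serial port. A frame that fails the check is dropped and the reader resynchronizes on the next `0x59 0x59` pair.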

With this lidar setup, I’m almost back on schedule. I hope to finish integrating the code by Monday. At that point I will be completely caught up, and we’ll be fully in the refinement stage for this last week.

Over the next week, I will complete any debugging or improvement patches to our calibration code, finish integrating the lidar, and help out with the user study we will be conducting in the second half of the week. I will also help with the completion of the final presentation slides.