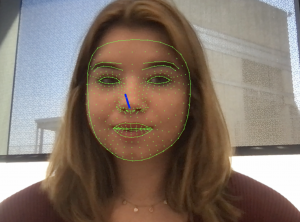

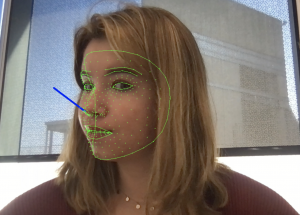

At the beginning of the week, I finalized an initial gaze estimation program. The two photos above show me moving my face around. The green face mesh marks the facial landmarks that MediaPipe identifies, and the blue line extending from my nose shows my estimated head pose, which the projection should eventually line up with. The estimated head pose is described by a rotation vector and a translation vector.

The head pose estimation script can also connect to the Arduino and send data to it. Currently, we only send the head's rotation about the y-axis (yaw) to the Arduino. Once this works with the motor and projector, we intend to send and integrate the rest of the pose data.
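As a rough illustration of how a rotation vector turns into the single yaw value sent to the Arduino, here is a minimal Python sketch. It assumes the rotation vector is in OpenCV's axis-angle form (as returned by `cv2.solvePnP`) and reimplements the Rodrigues conversion with the standard library so it runs without OpenCV. The Z-Y-X Euler decomposition, the function names, and the one-line ASCII message format are assumptions for illustration, not the exact code or protocol in our script.

```python
import math

def rodrigues(rvec):
    """Convert an axis-angle rotation vector into a 3x3 rotation matrix.

    Same math as cv2.Rodrigues, reimplemented so the sketch has no
    dependencies: R = cos(t) I + (1 - cos(t)) k k^T + sin(t) [k]_x.
    """
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        # No rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def yaw_degrees_from_rvec(rvec):
    """Rotation about the y-axis, assuming R = Rz * Ry * Rx (Z-Y-X Euler order).

    With that order R[2][0] = -sin(yaw), so atan2 recovers yaw in [-90, 90] degrees.
    """
    r = rodrigues(rvec)
    yaw = math.atan2(-r[2][0], math.hypot(r[0][0], r[1][0]))
    return math.degrees(yaw)

def format_yaw_message(yaw_deg):
    """Hypothetical wire format: yaw as ASCII text with one decimal, newline-terminated."""
    return f"{yaw_deg:.1f}\n".encode("ascii")

if __name__ == "__main__":
    rvec = [0.0, math.radians(30.0), 0.0]  # a pure 30-degree turn about y
    yaw = yaw_degrees_from_rvec(rvec)
    print(yaw, format_yaw_message(yaw))
```

With pySerial installed, the message could be written with something like `serial.Serial("/dev/ttyACM0", 9600).write(format_yaw_message(yaw))`, where the port name and baud rate are placeholders. On the Arduino side, `Serial.parseFloat()` is one way to read each value back. The sign of the yaw depends on the camera's axis convention (OpenCV's y-axis points down), so it is worth checking against a known head turn.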

My personal goal for next week is to refine the angle estimation and make it less sensitive to small head movements. I also ordered a camera that should handle facial recognition in dim lighting, and I plan to integrate it into the system next week as well.
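One common way to make a head-angle estimate less sensitive to small movements, sketched here as a possibility rather than what is currently implemented, is to smooth the raw yaw with an exponential moving average and only pass a new value on when it has moved past a small deadband. The class name `YawFilter` and the default `alpha` and `deadband_deg` values are hypothetical and would need tuning against real data.

```python
class YawFilter:
    """Exponential moving average plus a deadband (hypothetical tuning values)."""

    def __init__(self, alpha=0.3, deadband_deg=2.0):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha                # weight given to each new sample
        self.deadband_deg = deadband_deg  # minimum change before output updates
        self._smoothed = None             # running EMA of the raw yaw
        self._output = None               # last value passed on to the motor

    def update(self, raw_yaw_deg):
        """Feed one raw yaw sample (degrees) and return the filtered output."""
        if self._smoothed is None:
            # First sample: initialize both the EMA and the output.
            self._smoothed = raw_yaw_deg
            self._output = raw_yaw_deg
            return self._output
        # EMA step: move a fraction alpha of the way toward the new sample.
        self._smoothed += self.alpha * (raw_yaw_deg - self._smoothed)
        # Only update the output once the smoothed angle leaves the deadband,
        # so tiny head movements do not make the motor twitch.
        if abs(self._smoothed - self._output) >= self.deadband_deg:
            self._output = self._smoothed
        return self._output
```

A smaller `alpha` gives smoother but laggier tracking, while a larger `deadband_deg` suppresses more jitter at the cost of ignoring small intentional turns. In practice, each frame's yaw would go through `update()` before being formatted and sent to the Arduino.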