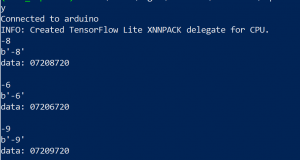

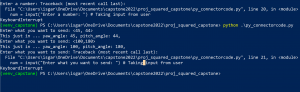

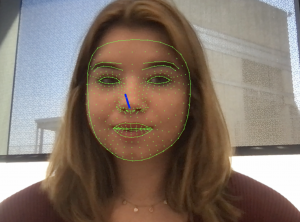

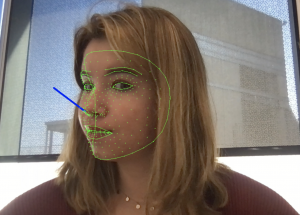

This past week, I have been refining the head pose estimation program. I have also begun implementing eye blink detection. Our idea is that three eye blinks within three seconds will act as a lock/unlock gesture: “locking” pauses the motor, while “unlocking” lets the motor follow the movement of the person’s head again.
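As a rough illustration of the gesture logic, here is a minimal sketch in Python. It assumes the blink detector (not shown) already reports a timestamp in seconds for each detected blink. The class name `BlinkLockToggle` and its parameters are hypothetical and not taken from our actual code.

```python
from collections import deque


class BlinkLockToggle:
    """Toggle a locked flag when enough blinks land inside a time window."""

    def __init__(self, blinks_required=3, window_seconds=3.0):
        self.blinks_required = blinks_required
        self.window_seconds = window_seconds
        self.locked = False
        self._blink_times = deque()

    def register_blink(self, timestamp):
        """Record one blink (timestamp in seconds); return True if the state toggled."""
        self._blink_times.append(timestamp)
        # Drop blinks that fall outside the window ending at this blink.
        while timestamp - self._blink_times[0] > self.window_seconds:
            self._blink_times.popleft()
        if len(self._blink_times) >= self.blinks_required:
            self.locked = not self.locked
            # Clear the history so one burst of blinks cannot trigger twice.
            self._blink_times.clear()
            return True
        return False
```

In the main loop, the motor update would simply be skipped while `locked` is true. Clearing the history after each toggle is a design choice in this sketch: a fourth quick blink then starts a new count instead of immediately undoing the gesture.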

Aside from that, I’ve run into quite a few non-technical difficulties. My laptop and iPad both broke, which left me unable to work on the program for several days. (Thank goodness all my code was backed up on GitHub.) Additionally, I was told the camera I ordered had been delivered, and my plan this week was to connect it to the rest of the system. However, when I picked up the package, there was a diode in it instead of the camera. I discussed the issue with ECE Receiving and was told the camera should be here soon. My plan for next week is to connect the camera and the head pose estimation program to the rest of the project and have an initial prototype complete. This way, we can begin user testing! I also plan to finish the eye blink detection program this coming week.