This week our team worked on the individual parts of our project to prepare for integration. On the software side, we completed the recording and data collection pipeline for real-time data collection. On the neural network side, we ran preliminary tests and decided which networks to use. On the game logic side, we designed and implemented some changes to the previous version of the game. Next week we plan to continue on these fronts and work on the connections between the parts.

Chris’s Status Report for Apr 3

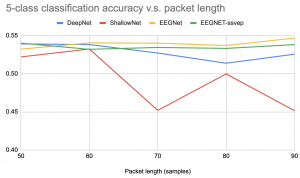

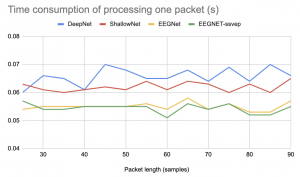

I implemented and tested the 4 neural networks we will be using. I made some small changes to the architectures so that they perform well on smaller packet sizes. The networks were originally designed for packet sizes of 256-512, so I reduced the pooling layers from length 16 to length 3 and the convolution filters from 16-32 to 3-5; these two changes are meant to address the change in our sampling rate. I then tested each network on packet sizes of 50-90 and obtained test results from a held-out group of data. From the plot, we can see that DeepNet and ShallowNet perform relatively worse, and their performance doesn’t change much with packet size. EEGNet and EEGNet-SSVEP, a variant of EEGNet, perform better and improve slightly with larger packet sizes. We will thus likely use these two for our future tests.

Note that the plot shows a 5-class classification problem from our pre-training dataset, for which chance level would be 20% if the classes are balanced. Although none of the networks exceeds 60% accuracy, this is a significantly harder task than our final goal of 2-3 class classification.
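To illustrate why the pooling and filter sizes had to shrink, here is a minimal sketch that counts how many time steps survive one convolution plus pooling stage. It assumes the quoted numbers are kernel and pooling lengths, that the convolution is unpadded, and that pooling does not overlap; the real networks have more stages and may pad differently, and the function names are hypothetical.

```python
def conv_out_len(n, kernel):
    """Length after an unpadded ('valid') 1-D convolution with stride 1."""
    return max(n - kernel + 1, 0)


def pool_out_len(n, pool):
    """Length after non-overlapping pooling (stride equal to the pool length)."""
    return n // pool


def remaining_steps(packet, kernel, pool):
    """Time steps left after one convolution followed by one pooling layer."""
    return pool_out_len(conv_out_len(packet, kernel), pool)


# Original settings on the original packet sizes.
print(remaining_steps(256, kernel=16, pool=16))
print(remaining_steps(512, kernel=32, pool=16))
# Original settings on a short packet: almost nothing is left.
print(remaining_steps(50, kernel=16, pool=16))
# Reduced settings on short packets.
print(remaining_steps(50, kernel=3, pool=3))
print(remaining_steps(90, kernel=5, pool=3))
```

Under these assumptions, the reduced settings keep roughly as many time steps for a 50-90 sample packet as the original settings kept for a 256-512 sample packet.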

Lavender’s Status Report for Apr 3

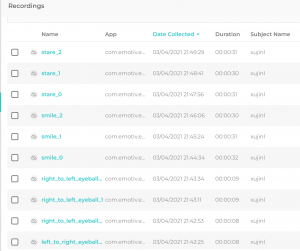

This week I recorded the dataset for training and testing with Chris. For each activity we recorded 3 episodes of 15-30 seconds each. The activities are: closed-eyes neutral, open-eyes neutral, thinking about lifting the left arm, thinking about lifting the right arm, moving the eyes from left to right, moving the eyes from right to left, smiling, and staring.

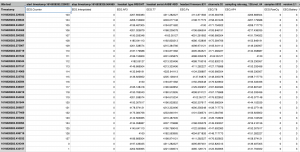

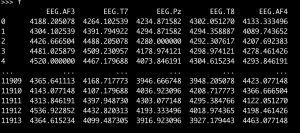

I also exported the recorded dataset to CSV files and wrote a Python script that automatically converts the batch of CSV files into a pandas dataset, which can be used directly for learning with PyTorch.
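As a rough illustration of the batch conversion step, the sketch below reads every CSV file in a folder into one list of labeled samples. It uses only the standard library `csv` module rather than pandas, and it assumes a hypothetical layout: one file per episode, named like `smile_1.csv`, with a single header row of channel names. Real exports may carry extra metadata rows that would need to be skipped first.

```python
import csv
from pathlib import Path


def load_recordings(folder):
    """Read every CSV in `folder` into one list of labeled samples.

    Assumes one file per episode, named '<activity>_<episode>.csv',
    with a header row of channel names (a hypothetical layout).
    """
    samples = []
    for path in sorted(Path(folder).glob("*.csv")):
        label = path.stem.rsplit("_", 1)[0]  # 'smile_1' -> 'smile'
        with path.open(newline="") as f:
            for row in csv.DictReader(f):
                values = {channel: float(v) for channel, v in row.items()}
                samples.append({"label": label, **values})
    return samples
```

Each returned sample is a dictionary with the activity label and one value per channel, which is straightforward to turn into a table or tensor afterwards.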

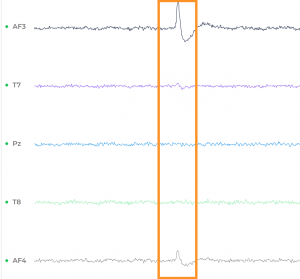

One challenge in preprocessing the raw data is eye-blink removal. The artifact is visually obvious in the frontal lobe sensors AF3 and AF4. Using the MNE-Python neurophysiological data processing package, I was able to remove the eye-blink artifact with fast independent component analysis (FastICA). This method separates the additive components of a mixed signal; in our case, the eye blink is an additive component on top of the source brain signal. FastICA first pre-whitens the input signal (centering each component to have zero expected value, then applying a linear transformation that makes the components uncorrelated with unit variance). It then uses an iterative method to find an orthogonal projection that rotates the whitened signal so that non-Gaussianity is maximized.

While MNE-Python works well for preprocessing our recorded data, its performance is subpar on real-time packets of short duration. I am therefore implementing software that applies morphological component analysis to the short-time Fourier transform to remove the blinking noise in real time, following the method suggested in “Real time eye blink noise removal from EEG signals using morphological component analysis” by Matiko et al.
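The pre-whitening step described above can be sketched for two channels in plain Python. This is only an illustration of centering and whitening, not the MNE-Python implementation: it uses a Cholesky factor of the 2x2 covariance matrix for brevity, whereas FastICA implementations typically whiten through an eigendecomposition, and it omits the iterative rotation that maximizes non-Gaussianity. The function name is hypothetical.

```python
import math


def whiten_two_channels(x1, x2):
    """Center two equal-length channels, then decorrelate and rescale them
    so the result has zero mean and identity covariance."""
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n
    c1 = [v - m1 for v in x1]  # centering
    c2 = [v - m2 for v in x2]
    # Entries of the 2x2 covariance matrix [[a, b], [b, c]].
    a = sum(v * v for v in c1) / n
    b = sum(u * v for u, v in zip(c1, c2)) / n
    c = sum(v * v for v in c2) / n
    # Cholesky factor L of the covariance; applying L^-1 whitens the data.
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(c - l21 * l21)
    z1 = [v / l11 for v in c1]
    z2 = [(v - l21 * u) / l22 for u, v in zip(z1, c2)]
    return z1, z2
```

After this step both outputs have unit variance and zero correlation, so the remaining work of ICA reduces to finding a rotation of the whitened channels.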

Eryn’s Status Report for Apr 3

I proposed and implemented two major changes to the game design. First, to improve the accuracy of control signal interpretation, we decided to reduce the previous 3-level controls to 2-level controls: just on and off. Second, to accommodate the significant number of false positives, we want to limit the impact of each command. In our previous design, if the user thinks “left”, the cart moves left immediately, so the action is fully executed on a single command that could have been falsely detected. Now we plan to design something like the peashooter in Plants vs. Zombies, in which the user must shoot a sequence of peas to eliminate a zombie. Instead of giving a single command, the user needs to keep thinking “shoot, shoot, shoot, shoot …”, and the zombie is only killed after being shot ten times. Every command thus achieves only 10% of the target, which reduces the impact of false positives. We’ll also limit the number of available peas so that users don’t waste peas when there are no zombies; otherwise users could cheat by never switching intentions and shooting throughout the game. The implementation of the change is underway, and I expect to have a working prototype next week.
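A minimal sketch of the proposed rules, assuming a hypothetical pea budget of 30 and hypothetical class names; the ten hits per zombie come from the design above, and everything else is illustrative rather than the actual game code.

```python
class Zombie:
    """Target that needs several hits, so one false positive does little."""

    def __init__(self, hits_to_kill=10):
        self.hits_left = hits_to_kill

    @property
    def alive(self):
        return self.hits_left > 0


class PeaShooter:
    """Turns decoded on/off commands into shots while peas remain."""

    def __init__(self, peas=30):  # hypothetical budget
        self.peas = peas

    def on_command(self, shoot, zombie=None):
        """Handle one decoded command; return True if a pea was fired."""
        if not shoot or self.peas == 0:
            return False
        self.peas -= 1  # a pea is spent even when no zombie is on screen
        if zombie is not None and zombie.alive:
            zombie.hits_left -= 1
        return True
```

Because a pea is spent whether or not a zombie is present, a player who keeps thinking "shoot" all the time runs out of peas, which is the anti-cheating effect the limited budget is meant to provide.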

Team Status Report for Mar 13

The team finished the preliminary hardware test and found a way to ensure good contact quality. We confirmed that all of our end-to-end neural network models can operate at the desired latency. The game design needs to be modified to accommodate the intrinsic low accuracy of brainwave analysis. Next week we will independently test the data pipeline, denoiser and controller.

Eryn’s Status Report for March 27

This week I worked with Lavender and Chris on the remote control game tests. The game is part of the Emotiv software and lets users move a dice by mind control. Supposedly, this game provided by Emotiv represents the highest standard we can achieve from processing raw signals. Even so, during the tests we found that the processing results are highly unstable and that it is very difficult to control the dice effectively. This poses a huge challenge to the usability of our obstacle-dodging game, which essentially involves the same set of control directives as the Emotiv dice game. The challenge was unexpected, and next week I’ll try to modify the game design to accommodate the intrinsic low accuracy of brainwave analysis.

Chris’s Status Report for March 27

This week, apart from working with Lavender and Eryn to explore the hardware that we just received, I’ve mostly been running tests on the neural networks that we selected as candidates for processing. We found that the single-run processing time of the EEG image method is higher than expected and might not work well for our near real-time control purpose, so we decided to focus solely on the end-to-end CNN-based approaches. I tweaked the architectures, which usually expect larger input sequences, so that they can work with shorter sequences in near real time. I then ran preliminary tests to measure the run time for different packet sizes. Since our game will run on CPU, we are doing the inference on CPU as well. These are the results I got from running the experiments on my own laptop. The run times are acceptable for real-time control in games, although they cover control signal extraction only.
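A run-time test of this kind can be sketched as follows. The timing helper is generic; `dummy_predict`, the packet sizes, and the 14-channel packet shape are placeholders assumed for illustration, and the real measurement would call each network's forward pass instead.

```python
import statistics
import time


def time_inference(predict, packet, repeats=50):
    """Return the median wall-clock time in milliseconds of predict(packet)."""
    predict(packet)  # warm-up call, not timed
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(packet)
        times.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(times)


def dummy_predict(packet):
    """Stand-in for a network's forward pass (hypothetical)."""
    return sum(sum(channel) for channel in packet) > 0


for size in (50, 70, 90):  # assumed packet sizes
    packet = [[0.0] * size for _ in range(14)]  # 14 channels assumed
    print(size, round(time_inference(dummy_predict, packet), 4), "ms")
```

Reporting the median rather than the mean keeps occasional scheduling hiccups on a laptop from dominating the result.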

Next week I will try running these models back to back on both the open-access dataset and the real data collected from the headgear, provided the data collection pipeline is complete by then.

Lavender’s Status Report for March 27

This week, I organized two hardware tests. The first test revealed an unexpected contact quality issue. I subsequently contacted Emotiv customer support about the contact quality, training, and raw data stream API issues. The second test confirmed that wet hair with plenty of glycerin can stabilize the contact quality at 100%. Next week I will record real data with good contact quality and start testing our denoising algorithm on it.

We tried multiple ways of differentiating the brainwave signals. In the picture above, we tested whether seeing different colors would produce a visible difference. We also tested temperature, pain, movement, and sound. So far, movement seems to be the most effective cue. We are still looking into more training procedures and signal processing techniques to improve our detection.

Chris’s Status Report for March 13

I created a GitHub repository and started working on the software infrastructure for neural network training, testing, and inference. I’ve integrated the existing repositories that we plan to use for generating control signals from raw EEG signals. Although we can’t yet see real data from our particular device, I’ve selected a large open-access dataset for pretraining; its electrode configuration includes the electrodes provided by our device, and its sampling rate is similar. Next week I plan to keep working on the software infrastructure and on the design review report.

Lavender’s Status Report for March 13

I created an Overleaf project for the team to collaborate on our status report. For the report, I will work on the implementation section for signal processing, design tradeoffs and related work. In our design review, someone suggested that we could include the frequency component of EEG signals in our preprocessing step to potentially reduce individual calibration time. I thought this was a good idea and I looked into integrating this step into our preprocessing. Next week I plan to start testing our controller implementation with synthetic data from the Emotiv App.
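One simple way to expose frequency content to the preprocessing step is band power, sketched below with a naive discrete Fourier transform in plain Python. The 128 Hz sampling rate and the 8-12 Hz and 13-30 Hz bands in the usage example are assumptions for illustration, not settings confirmed for our pipeline, and a real implementation would use an FFT library.

```python
import cmath
import math


def band_power(signal, fs, low, high):
    """Mean squared DFT magnitude of `signal` for frequencies in [low, high] Hz."""
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if low <= freq <= high:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            powers.append(abs(coeff) ** 2 / n ** 2)
    return sum(powers) / len(powers) if powers else 0.0


# Usage: a synthetic 10 Hz sine sampled at an assumed 128 Hz for one second.
fs = 128
wave = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
alpha = band_power(wave, fs, 8, 12)
beta = band_power(wave, fs, 13, 30)
print(alpha > beta)
```

For the synthetic sine, nearly all of the power falls in the 8-12 Hz band, which is the kind of per-band feature that could be fed to the classifier alongside the raw samples.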