This week I made the final presentation and prepared to deliver it. Tomorrow I will test our software with Tianyi Zhu to observe the effect of our detection algorithm on a different individual. In the coming week, I will keep practicing for the final presentation and write part of our final report.

Lavender’s Status Report for Apr 24

This week I implemented the low-pass filter for short data packets (32 Hz) and changed the eye-blink removal algorithm to Empirical Mode Decomposition (EMD), which is faster than ICA or STFT+MCA. I also tried playing the game as a test subject, but my hair is too thick to ensure good contact quality. With the team, I helped test the entire pipeline (calibration and gameplay) with Chris as the test subject.

Team’s Status Report for Apr 24

This week our team finished integrating the entire pipeline.

On the software side, we added a calibration phase that records user data to fine tune the pretrained model.

On the neural network side, we implemented a fast API that wraps the training routine and integrates with the software part.

On the game logic side, we implemented the denoising algorithm, which uses a band-pass filter and EMD.

Chris’s Status Report for Apr 24

This week I implemented a fast API that encapsulates the training routine, to integrate our NN part with the software part. It takes the processed data of two classes and trains a network on them. The training settings were chosen based on previous training sessions: the default learning rate, the DeepConvNet, and 10 epochs of training. The API trains the model and saves it with the user’s profile.

Eryn’s Status Report for Apr 24

I implemented the calibration phase in the game that runs prior to the game start and collects user brainwave data under both control modes – 1 for fire and 0 for stop (neutral state). The pretrained model will be trained again with new data specific to the current player. Instructions on how to do calibration will be displayed on the screen to guide the player. Next week I’ll continue refining the game UI and also help with the integration effort.

Interim Report Gantt Chart Update

Team’s Status Report for Apr 10

This week our team continued to work on the individual parts of our project to prepare for integration. On the software side, we have completed the implementation of the denoiser. On the neural network side, we have determined the network architecture that works best for our task and the thought-action mapping that shows the clearest class boundaries. On the game logic side, we are ready to deliver a working prototype. Next week we will focus on integrating the individual parts and putting together a demo that runs the entire pipeline.

Eryn’s Status Report for Apr 10

I worked on the updated game design and finished the implementation. We now have a fully working prototype in which the user can control the peashooter to fire peas, and each zombie is eliminated after being hit 10 times. The game can be played with either the keyboard or EEG. The only open issue is how to handle the continuous stream of incoming EEG data: What refresh rate (frames per second) keeps our data segments from becoming too choppy? How many time steps should we pack into each frame/packet so that there is enough information for effective detection? Next week I will look into that and write a port for receiving the EEG data synchronously.
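One common way to frame the packet-size question is as a sliding window over the incoming samples. The sketch below is only an illustration of that idea: the function name `sliding_windows` and the `window` and `hop` values are hypothetical, not settings from our game, and it treats the stream as single-channel numbers for simplicity.

```python
from collections import deque
from typing import Iterable, Iterator, List


def sliding_windows(samples: Iterable[float], window: int, hop: int) -> Iterator[List[float]]:
    """Yield windows of `window` samples, advancing by `hop` samples each time.

    Hypothetical helper: `window` sets how many time steps go into one packet,
    `hop` sets how often a new packet is handed to the detector.
    """
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    buffer = deque(maxlen=window)  # keeps only the most recent `window` samples
    since_last = 0                 # samples received since the last emitted window
    for sample in samples:
        buffer.append(sample)
        since_last += 1
        # Emit once the buffer is full and at least `hop` new samples arrived.
        if len(buffer) == window and since_last >= hop:
            yield list(buffer)
            since_last = 0


# Usage with a toy stream of ten samples (placeholder data, not EEG).
frames = list(sliding_windows(range(10), window=4, hop=2))
```

A larger `window` gives the detector more context per packet but adds latency; a smaller `hop` refreshes the control signal more often at the cost of more computation.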

Lavender’s Status Report for Apr 10

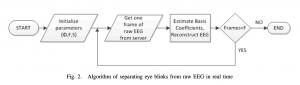

I worked on real-time denoising of the input data and on data I/O this week. We confirmed the feasibility of denoising with ICA last week, but it is not fast enough, so we decided to try denoising in real time using a heuristic. We avoided data-driven approaches for this particular problem because we do not have sufficient time to record and annotate data for training a denoiser. The approach we take exploits the sparsity of blinking signals.

The idea is to find two vector spaces (known as the “dictionaries”), one in which the blinking artifacts are sparse and one in which the original signals are sparse (meaning that most of the coefficients are zero). We then find the projection coefficients of the mixed signal on both spaces and reconstruct the clean signal using only the coefficients from the “clean” vector space.

(block diagram by Maitiko et al.)

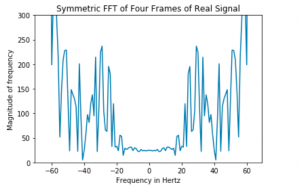

While the idea seems simple, the implementation involves many design choices. For instance, we build the dictionaries using the Short-Time Fourier Transform, one with a long Hann window and one with a short Hann window. Because of the time-frequency trade-off, the basis vectors of the two dictionaries have different frequency resolutions. To keep the basis vectors the same length, we zero-pad the windowed segments, which also gives a finer frequency grid. Also, to save storage space, we decided to keep only the Fourier coefficients at nonnegative frequencies during basis matching, because the Fourier transform of a real signal is conjugate-symmetric around zero frequency.
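The zero-padding and the symmetry argument can be checked with a few lines of NumPy. This is a minimal sketch under assumed sizes: the window lengths (128 and 32) and the common FFT length `n_fft = 256` are placeholders, not the values used in our denoiser, and the input is random data rather than EEG.

```python
import numpy as np

n_fft = 256                      # assumed common FFT length for both windows
long_win = np.hanning(128)       # long Hann window (placeholder length)
short_win = np.hanning(32)       # short Hann window (placeholder length)

rng = np.random.default_rng(0)
x = rng.standard_normal(128)     # stand-in for one real-valued signal segment

# `n=n_fft` zero-pads each windowed segment, so both spectra share one
# frequency grid even though the windows have different lengths.
long_spec = np.fft.rfft(x * long_win, n=n_fft)
short_spec = np.fft.rfft(x[:32] * short_win, n=n_fft)

# rfft keeps only the n_fft // 2 + 1 nonnegative-frequency bins.
# The full FFT of a real signal satisfies X[k] == conj(X[N - k]),
# so the negative-frequency half carries no extra information.
full_spec = np.fft.fft(x * long_win, n=n_fft)
mirrored = np.conj(full_spec[-1:n_fft // 2:-1])   # bins N-1 down to N/2+1
is_conjugate_symmetric = np.allclose(full_spec[1:n_fft // 2], mirrored)
```

Keeping only the `rfft` output roughly halves the storage per basis vector without losing information for real-valued signals.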

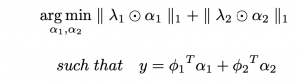

The last example is the choice of optimizer. We chose the Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) from the PyLops library because it solves an unconstrained problem with L1 regularization and (as the name suggests) it is faster than ISTA.

(objective function with L1 regularization)
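To make the two-dictionary idea concrete, here is a toy sketch written in plain NumPy rather than with the library used in the project. It minimizes 0.5 * ||y - A x||^2 + lam * ||x||_1 with a hand-written FISTA loop, where A stacks a "clean" dictionary and an "artifact" dictionary. Everything specific here is an assumption for illustration: the dictionaries are a cosine basis and the identity (not our STFT dictionaries), the signal is synthetic, and `lam` and the iteration count are arbitrary.

```python
import numpy as np


def soft_threshold(v, thresh):
    """Proximal operator of the L1 norm."""
    return np.sign(v) * np.maximum(np.abs(v) - thresh, 0.0)


def fista(A, y, lam, n_iter=300):
    """Minimize 0.5 * ||y - A x||^2 + lam * ||x||_1 with FISTA."""
    lipschitz = np.linalg.norm(A, 2) ** 2      # Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    z = x.copy()                               # extrapolated point
    t = 1.0
    for _ in range(n_iter):
        grad = A.T @ (A @ z - y)
        x_new = soft_threshold(z - grad / lipschitz, lam / lipschitz)
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = x_new + ((t - 1.0) / t_new) * (x_new - x)   # momentum step
        x, t = x_new, t_new
    return x


n = 128
time = np.arange(n)
freq = np.arange(n)[:, None]
# Orthonormal DCT-II basis: smooth oscillations are sparse here (toy "clean" dictionary).
C = np.sqrt(2.0 / n) * np.cos(np.pi * (time + 0.5) * freq / n)
C[0] /= np.sqrt(2.0)
A_clean = C.T
A_artifact = np.eye(n)                          # spikes are sparse here (toy "artifact" dictionary)
A = np.hstack([A_clean, A_artifact])

clean = 2.0 * A_clean[:, 5] + 1.5 * A_clean[:, 12]   # synthetic clean signal
artifact = np.zeros(n)
artifact[40], artifact[90] = 3.0, -2.0               # synthetic spike artifacts
mixed = clean + artifact

coeffs = fista(A, mixed, lam=0.05)
clean_est = A_clean @ coeffs[:n]     # rebuild using only the "clean" coefficients

err_before = np.linalg.norm(mixed - clean)
err_after = np.linalg.norm(clean_est - clean)
```

The separation works in this toy case because each component is sparse in its own dictionary and not in the other; how well that holds for real blink artifacts depends on the dictionaries chosen.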

While the code for the denoiser is complete, I am still debugging it, visually inspecting its output, and testing it with previously recorded data to make sure it works. Once that is done, we will test with real-time data frames this week.

Chris’s Status Report for Apr 10

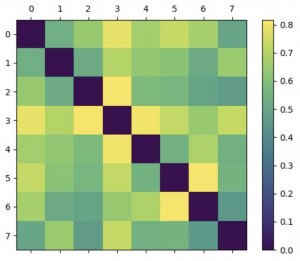

I’ve been working on two things this week. The first is finding out how our neural networks perform on different combinations of tasks. Since our current game requires discriminating between two types of action, we collected data from 8 different actions and compared the performance of each neural network on every possible pair of actions. We found that similar actions are hard to distinguish and very different actions are easy to distinguish. For example, thinking about lifting the right arm and thinking about lifting the left arm are hard to tell apart, but not thinking about anything and moving the eyeball from right to left are easy to tell apart. We found that the DeepConvNet performs the best overall, so here we will only include the results from this network.

The actions are numbered from 0 to 7, and the color of each cell in the matrix shows how well the network distinguishes the corresponding two actions. From 0 to 7, the actions are respectively:

- ‘smile’,

- ‘stare’,

- ‘open-eyes-neutral’,

- ‘closed-eyes-neutral’,

- ‘left-to-right-eyeball’,

- ‘right-to-left-eyeball’,

- ‘think-lifting-left-arm’,

- ‘think-lifting-right-arm’
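The pairwise comparison over these eight actions can be sketched as follows. This is an illustrative outline, not our evaluation code: `pairwise_score_matrix` and `evaluate_pair` are hypothetical names, and the dummy scorer below returns a constant placeholder instead of a real accuracy.

```python
from itertools import combinations

import numpy as np

ACTIONS = [
    "smile",
    "stare",
    "open-eyes-neutral",
    "closed-eyes-neutral",
    "left-to-right-eyeball",
    "right-to-left-eyeball",
    "think-lifting-left-arm",
    "think-lifting-right-arm",
]


def pairwise_score_matrix(actions, evaluate_pair):
    """Fill a symmetric matrix with one score per unordered pair of actions.

    `evaluate_pair(a, b)` is a hypothetical callable that would train and test
    a two-class network on actions `a` and `b` and return its accuracy.
    """
    n = len(actions)
    scores = np.full((n, n), np.nan)           # diagonal stays NaN (no self-pairs)
    for i, j in combinations(range(n), 2):
        score = evaluate_pair(actions[i], actions[j])
        scores[i, j] = scores[j, i] = score
    return scores


# Placeholder scorer: a constant, NOT a measured result.
evaluated_pairs = []


def dummy_evaluate_pair(a, b):
    evaluated_pairs.append((a, b))
    return 0.5


matrix = pairwise_score_matrix(ACTIONS, dummy_evaluate_pair)
```

With 8 actions there are 8 × 7 / 2 = 28 unordered pairs, so each network is trained and evaluated 28 times.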

And as evident in the plot, the action pairs that are most easily distinguishable are:

- Eyeball moving right to left and think lifting left arm

- Closed eye neutral and left to right eyeball

- Open eyes neutral and closed eyes neutral

- Smile and closed eyes neutral

As we can see from these pairs, the algorithm almost always has to rely on some eyeball information to operate. This could be due to multiple factors, one of them being that we are not cleaning the data at the present stage. The data cleaning pipeline is still in progress, and all the training and testing here are done on raw signals. We will continue testing and keep track of how these results change. It is worth noting, however, that even if we were to rely on eyeball movement signals for our game’s control signal, the technology we are using is still significantly different from plain eye tracking.

The second thing I’ve been doing is adding multi-threaded processing to the main game logic. Starting from the main files Eryn has been working on, I’m writing a multi-process producer-consumer system that reads in signals, processes them, and then sends the control signals to the game logic.
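The producer-consumer structure can be sketched with the standard library. To keep the example short and runnable anywhere, it uses threads and `queue.Queue`; the same shape carries over to `multiprocessing.Process` and `multiprocessing.Queue`. All names (`producer`, `consumer`, `run_pipeline`) and the threshold "classifier" are hypothetical stand-ins for the headset reader and the neural network.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def producer(packets, raw_queue):
    """Read signal packets (here: from a list) and hand them to the consumer."""
    for packet in packets:
        raw_queue.put(packet)
    raw_queue.put(SENTINEL)


def consumer(raw_queue, control_queue, classify):
    """Turn each raw packet into a control signal for the game logic."""
    while True:
        packet = raw_queue.get()
        if packet is SENTINEL:
            control_queue.put(SENTINEL)
            break
        control_queue.put(classify(packet))


def run_pipeline(packets, classify):
    raw_queue = queue.Queue(maxsize=8)   # bounded, so a slow consumer applies back-pressure
    control_queue = queue.Queue()
    workers = [
        threading.Thread(target=producer, args=(packets, raw_queue)),
        threading.Thread(target=consumer, args=(raw_queue, control_queue, classify)),
    ]
    for worker in workers:
        worker.start()
    controls = []
    while True:                           # the game loop would read control signals here
        control = control_queue.get()
        if control is SENTINEL:
            break
        controls.append(control)
    for worker in workers:
        worker.join()
    return controls


# Placeholder classifier: 1 ("fire") if the packet mean exceeds 0.5, else 0 ("stop").
def threshold_classifier(packet):
    return int(sum(packet) / len(packet) > 0.5)


controls = run_pipeline([[0.1, 0.2], [0.9, 0.8], [0.4, 0.3]], threshold_classifier)
```

Decoupling reading from processing this way means the game loop never blocks on the signal source, only on the queue of finished control signals.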