Chris’s Status Report for May 8

This week I worked on improving the reliability and stability of our neural network to reduce the false positive rate in our game. From comprehensive testing with our newly acquired test subject Eryn, we found that a 40% threshold for firing gives the best fire control, as confirmed by visual examination. I also worked with Lavender and Eryn to obtain our last test video.
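As a minimal sketch of how such a firing threshold might be applied, the snippet below assumes the network outputs a per-packet probability for the "fire" class and that the game fires when this probability reaches 40%. The function name `should_fire` and the sample probabilities are hypothetical and are not taken from our code or data.

```python
FIRE_THRESHOLD = 0.40  # the 40% threshold reported above


def should_fire(fire_probability: float, threshold: float = FIRE_THRESHOLD) -> bool:
    """Return True when the 'fire' probability reaches the threshold.

    Assumption: the network emits a probability in [0, 1] for the fire class.
    """
    return fire_probability >= threshold


# Hypothetical per-packet probabilities, for illustration only.
probabilities = [0.12, 0.38, 0.41, 0.75, 0.05]
decisions = [should_fire(p) for p in probabilities]
print(decisions)
```

Raising the threshold trades missed fire commands for fewer false positives, which is why it is worth tuning per test subject.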

Team’s Status Report for May 1

This week our group is working on preparing for the final presentation.

On the software side, we made necessary changes to the code for effective latency tests under running conditions.

On the game logic side, we are improving the user interface and creating a more user-friendly game experience by adding necessary components such as a score display and start-over buttons.

Chris’s Status Report for May 1

This week I helped Lavender prepare for her final presentation. I also wrote code in our program to test the latency of each stage of our algorithm. Currently, we can profile every stage (signal processing, NN inference, rendering) except data gathering. We tried to synchronize the data collection with a timer, but we have not found a way to time it reliably. Tomorrow we will test each stage in conjunction with the profiler and see whether the latency at each stage changes significantly.
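The per-stage profiling described above could look roughly like the sketch below. It is not our actual profiler: the `StageProfiler` class and the three placeholder stage functions are hypothetical stand-ins, and only the stage names come from this report. It uses `time.perf_counter` from the standard library to time each stage.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageProfiler:
    """Collects wall-clock durations for named pipeline stages."""

    def __init__(self):
        self.samples = defaultdict(list)

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples[name].append(time.perf_counter() - start)

    def mean_ms(self, name):
        values = self.samples[name]
        return 1000.0 * sum(values) / len(values)


# Placeholder stages (hypothetical); the real ones would do DSP, NN inference, drawing.
def process_signal(packet):
    return [x * 0.5 for x in packet]


def run_inference(features):
    return sum(features) / len(features)


def render(control):
    return f"control={control:.3f}"


profiler = StageProfiler()
packet = [float(i) for i in range(64)]
for _ in range(100):
    with profiler.stage("signal_processing"):
        features = process_signal(packet)
    with profiler.stage("nn_inference"):
        control = run_inference(features)
    with profiler.stage("rendering"):
        frame = render(control)

for name in profiler.samples:
    print(f"{name}: {profiler.mean_ms(name):.4f} ms")
```

Data gathering is left out here for the same reason as in the report: timing it requires a reference for when the headset actually produced the samples.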

Chris’s Status Report for Apr 24

This week I implemented a fast API with an encapsulated training routine for integrating our NN part with the software part. It takes the processed data of two classes and trains a network on them. The training details were chosen based on previous training sessions: the default learning rate, the deep conv net, and 10 epochs of training. The API trains the model and saves it with the user’s profile.

Chris’s Status Report for Apr 10

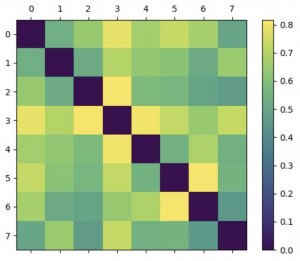

I’ve been working on 2 things this week. The first is finding out how our neural networks perform on different combinations of tasks. Since our current game requires discriminating between 2 types of action, we collected data from 8 different actions and compared the performance of each neural network on every possible pair of actions. We’ve found that similar actions are hard to distinguish, and very different actions are easy to distinguish. For example, thinking about lifting the right arm and thinking about lifting the left arm are hard to tell apart, but not thinking about anything and moving the eyeball from right to left are easy to tell apart. We found that the DeepConvNet performs the best overall, so here we will only include the results from this network.

The actions are numbered from 0 to 7, and the color of each cell in the matrix shows how well the network distinguishes the two actions. From 0 to 7, the actions are respectively:

- ‘smile’,

- ‘stare’,

- ‘open-eyes-neutral’,

- ‘closed-eyes-neutral’,

- ‘left-to-right-eyeball’,

- ‘right-to-left-eyeball’,

- ‘think-lifting-left-arm’,

- ‘think-lifting-right-arm’
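For reference, the pairwise comparison over these 8 actions amounts to 28 unordered pairs. The sketch below enumerates them and fills a symmetric matrix like the one in the plot. The helper `build_pair_matrix` and the `evaluate` callback are hypothetical; in practice `evaluate` would train and test a network on the two actions, and the `0.0` used here is a dummy value, not a measured result.

```python
from itertools import combinations

ACTIONS = [
    "smile",
    "stare",
    "open-eyes-neutral",
    "closed-eyes-neutral",
    "left-to-right-eyeball",
    "right-to-left-eyeball",
    "think-lifting-left-arm",
    "think-lifting-right-arm",
]

# Every unordered pair of action indices: C(8, 2) = 28 pairs.
pairs = list(combinations(range(len(ACTIONS)), 2))
print(len(pairs))


def build_pair_matrix(n_actions, evaluate):
    """Fill a symmetric matrix with evaluate(i, j) for every pair i < j."""
    matrix = [[None] * n_actions for _ in range(n_actions)]
    for i, j in combinations(range(n_actions), 2):
        matrix[i][j] = matrix[j][i] = evaluate(i, j)
    return matrix


# Dummy score only; a real run would return the held-out accuracy for the pair.
matrix = build_pair_matrix(len(ACTIONS), lambda i, j: 0.0)
```

The diagonal stays empty because an action is never compared with itself.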

And as evident in the plot, the action pairs that are most easily distinguishable are:

- Eyeball moving right to left and think lifting left arm

- Closed eye neutral and left to right eyeball

- Open eyes neutral and closed eyes neutral

- Smile and closed eyes neutral

As we can see from these pairs, the algorithm almost has to rely on some eyeball information to operate. This could be due to multiple factors, one of them being that we are not cleaning the data at the present stage. The data cleaning pipeline is still in progress, and all the training and testing here are done on raw signals. We will continue testing and keep track of how these results change. However, it is worth noting that even if we were to rely on eyeball movement signals for our game’s control signal, the technology we are using is still significantly different from plain eye tracking.

The second thing I’ve been doing is adding multi-threaded processing to the main game logic. Starting from the main files Eryn has been working on, I’m writing a multi-process producer and consumer system that reads in signals, processes them, and then sends the control signals to the game logic.
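A minimal sketch of the producer and consumer pattern is shown below. For brevity it uses threads and `queue.Queue` from the standard library rather than separate processes; a multi-process version would swap in `multiprocessing.Process` and `multiprocessing.Queue`. The packet values, the averaging step that stands in for signal processing and inference, and the `"fire"`/`"idle"` labels are all hypothetical.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def producer(raw_packets, packet_queue):
    """Reads signal packets and hands them to the consumer."""
    for packet in raw_packets:
        packet_queue.put(packet)
    packet_queue.put(SENTINEL)


def consumer(packet_queue, control_queue, threshold=0.5):
    """Processes packets and emits control signals for the game logic."""
    while True:
        packet = packet_queue.get()
        if packet is SENTINEL:
            control_queue.put(SENTINEL)
            break
        score = sum(packet) / len(packet)  # stand-in for processing + inference
        control_queue.put("fire" if score >= threshold else "idle")


raw_packets = [[0.1, 0.2], [0.9, 0.8], [0.4, 0.5]]  # hypothetical data
packet_queue = queue.Queue(maxsize=8)
control_queue = queue.Queue()

threads = [
    threading.Thread(target=producer, args=(raw_packets, packet_queue)),
    threading.Thread(target=consumer, args=(packet_queue, control_queue)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# The game loop would normally read these as they arrive.
controls = []
while True:
    signal = control_queue.get()
    if signal is SENTINEL:
        break
    controls.append(signal)
print(controls)
```

The bounded packet queue keeps the producer from running far ahead of the consumer, which matters when control signals need to stay close to real time.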

Team Status Report for Apr 3

This week our team worked on the individual parts of our project to prepare for integration. On the software side, we have completed the recording and data collection pipeline for real-time collection of data. On the neural network side, we have run preliminary tests and decided on the networks to use. On the game logic side, we’ve made and implemented some changes to the previous versions of the game. Next week we plan to continue on these aspects and work on the connections between the parts.

Chris’s Status Report for Apr 3

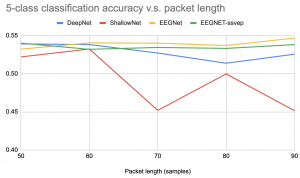

I implemented and tested the 4 neural networks we will be using. I made some small changes to the architectures so they can perform well on smaller packet sizes. The networks were originally designed for an input size of 256-512, so the pooling layers are changed from length 16 to length 3, and the convolution filters are reduced from 16-32 to 3-5; these two changes are meant to address the change in our sampling rate. I then tested each network on packet sizes 50-90, and we obtained test results from a held-out group of data. From the plot, we can see DeepNet and ShallowNet have relatively worse performance, and their performance doesn’t change much with the packet size. EEGNet and EEGNet-SSVP, a variant of EEGNet, perform better and show a slight improvement with larger packet sizes. We will thus likely use these two for our future tests.
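To illustrate why the original kernel and pooling lengths are a poor fit for short packets, the sketch below computes how many time steps survive one convolution followed by one pooling layer. It assumes an unpadded convolution with stride 1 and non-overlapping pooling, which is a simplification and may not match the padding used in the actual architectures. The helper names are hypothetical.

```python
def conv_out_len(length, kernel, stride=1):
    """Output length of an unpadded ('valid') 1-D convolution."""
    return (length - kernel) // stride + 1


def pool_out_len(length, pool):
    """Output length of non-overlapping pooling (stride equal to pool size)."""
    return length // pool


def temporal_len(packet_size, kernel, pool):
    """Time steps left after one conv layer and one pooling layer."""
    after_conv = conv_out_len(packet_size, kernel)
    if after_conv < 1:
        return 0  # the kernel is longer than the packet
    return pool_out_len(after_conv, pool)


for packet_size in (50, 70, 90):
    original = temporal_len(packet_size, kernel=32, pool=16)
    reduced = temporal_len(packet_size, kernel=5, pool=3)
    print(f"packet={packet_size}: original={original}, reduced={reduced}")
```

Under these assumptions a 50-sample packet keeps only 1 time step with the original sizes but 15 with the reduced ones, so later layers still have temporal structure to work with.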

Note that the plot shows a 5-class classification problem from our pre-training dataset. Although the accuracies don’t exceed 60%, this is a significantly harder task than our final goal of 2-3 class classification.

Chris’s Status Report for March 27

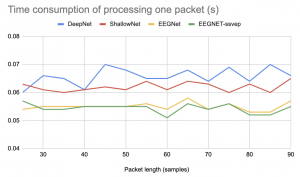

This week, apart from working with Lavender and Eryn to explore the hardware that we just received, I’ve mostly been running tests on the neural networks that we selected as candidates for processing. We found that the single-run processing time of the EEG image method is higher than expected and might not work well for our near real-time control purpose, so we decided to focus solely on the end-to-end CNN-based approaches. I tweaked the architectures, which usually expect larger input sequences, so that they can work with shorter sequences in near real time. I ran preliminary tests to measure the run time for different packet sizes. As we will be running our game on CPU, we are doing the inference on CPU as well. The plot shows the results from experiments on my own laptop. These run times are acceptable for real-time control in games, although they cover control signal extraction only.

Next week I will try running these models back to back on both the open-access dataset and, if we complete the data collection pipeline, the real data collected from the headgear.

Chris’s Status Report for March 13

I worked on creating a GitHub repository and started to work on the software infrastructure for neural network training, testing, and inference. I’ve integrated the existing repositories that we plan to use for generating control signals from raw EEG signals. Although we can’t yet see the real data coming from our particular device, I’ve selected a large open-access dataset for our pretraining; its electrode configuration includes the electrodes provided by our device, and it has a similar sampling rate. Next week I plan to keep working on the software infrastructure and work on the design review report.