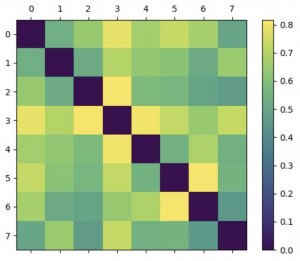

I’ve been working on two things this week. The first is measuring how our neural networks perform on different combinations of tasks. Since our current game requires discriminating between two types of action, we collected data for 8 different actions and compared the performance of each neural network on every possible pair of actions. We found that similar actions are hard to distinguish and very different actions are easy to distinguish. For example, thinking about lifting the right arm versus thinking about lifting the left arm is hard to distinguish, but not thinking about anything versus moving the eyes from right to left is easy. DeepConvNet performed best overall, so we only include the results from this network here.

The actions are numbered 0-7, and the color of each cell in the matrix shows how well the network distinguishes the two actions. From 0 to 7, the actions are:

- 0: ‘smile’

- 1: ‘stare’

- 2: ‘open-eyes-neutral’

- 3: ‘closed-eyes-neutral’

- 4: ‘left-to-right-eyeball’

- 5: ‘right-to-left-eyeball’

- 6: ‘think-lifting-left-arm’

- 7: ‘think-lifting-right-arm’
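To make the pairwise comparison concrete, here is a minimal sketch of how a score matrix over every pair of the eight actions could be filled in. It is not our actual training code: `evaluate_pair` is a hypothetical callback that would train and test a network on just two actions and return its score, and `placeholder_evaluate` only returns a constant so the loop can run on its own.

```python
from itertools import combinations

# Action labels in index order 0-7, as listed above.
ACTIONS = [
    "smile",
    "stare",
    "open-eyes-neutral",
    "closed-eyes-neutral",
    "left-to-right-eyeball",
    "right-to-left-eyeball",
    "think-lifting-left-arm",
    "think-lifting-right-arm",
]


def pairwise_scores(evaluate_pair, n_actions):
    """Build a symmetric matrix with one score per unordered action pair.

    The diagonal stays None because an action is never compared with itself.
    """
    scores = [[None] * n_actions for _ in range(n_actions)]
    for i, j in combinations(range(n_actions), 2):
        score = evaluate_pair(i, j)
        scores[i][j] = scores[j][i] = score
    return scores


def placeholder_evaluate(i, j):
    # Hypothetical stand-in: a real version would train a classifier on
    # actions i and j and return its test accuracy. The constant here is
    # a dummy value, not a measured result.
    return 0.5


pairs = list(combinations(range(len(ACTIONS)), 2))
scores = pairwise_scores(placeholder_evaluate, len(ACTIONS))
print(f"{len(pairs)} pairs evaluated")
```

With 8 actions this gives 28 unordered pairs, which is why the matrix is symmetric and only one triangle carries independent information.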

As the plot shows, the most easily distinguishable action pairs are:

- ‘right-to-left-eyeball’ and ‘think-lifting-left-arm’

- ‘closed-eyes-neutral’ and ‘left-to-right-eyeball’

- ‘open-eyes-neutral’ and ‘closed-eyes-neutral’

- ‘smile’ and ‘closed-eyes-neutral’

As we can see from these pairs, the algorithm almost has to rely on some eye-related information to work. This could be due to multiple factors, one being that we are not yet cleaning the data. The data cleaning pipeline is still in progress, and all training and testing here were done on raw signals. We will continue testing and keep track of how these results change. It is worth noting, however, that even if we were to rely on eye movement signals for our game’s control signal, the technology we are using would still be significantly different from plain eye tracking.

The second thing I’ve been doing is adding multithreaded processing to the main game logic. I’m taking the main files Eryn has been working on and writing a multi-process producer-consumer system that reads in signals, processes them, and then sends the control signals to the game logic.
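As a rough illustration of the producer-consumer idea, here is a minimal sketch using Python’s standard `threading` and `queue` modules. It is not the code in our repository: `fake_read_chunk` and `fake_classify` are hypothetical placeholders for the real signal reader and the trained network, and the sketch uses threads only to stay short. `multiprocessing.Process` and `multiprocessing.Queue` offer a similar interface if separate processes are needed.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream on both queues


def producer(read_chunk, n_chunks, raw_q):
    """Read signal chunks and hand them to the consumer."""
    for k in range(n_chunks):
        raw_q.put(read_chunk(k))
    raw_q.put(SENTINEL)


def consumer(classify, raw_q, control_q):
    """Turn each raw chunk into a control signal for the game logic."""
    while True:
        chunk = raw_q.get()
        if chunk is SENTINEL:
            control_q.put(SENTINEL)
            break
        control_q.put(classify(chunk))


def run_pipeline(read_chunk, classify, n_chunks):
    raw_q = queue.Queue(maxsize=8)  # bounded so the reader cannot run far ahead
    control_q = queue.Queue()
    workers = [
        threading.Thread(target=producer, args=(read_chunk, n_chunks, raw_q)),
        threading.Thread(target=consumer, args=(classify, raw_q, control_q)),
    ]
    for w in workers:
        w.start()

    # Stand-in for the game loop: collect control signals until the stream ends.
    controls = []
    while True:
        signal = control_q.get()
        if signal is SENTINEL:
            break
        controls.append(signal)

    for w in workers:
        w.join()
    return controls


def fake_read_chunk(k):
    # Hypothetical placeholder for reading one window of raw signal.
    return [k] * 4


def fake_classify(chunk):
    # Hypothetical placeholder for the network: maps a chunk to action 0 or 1.
    return chunk[0] % 2


controls = run_pipeline(fake_read_chunk, fake_classify, 5)
print(controls)
```

The two queues keep reading, processing, and game logic decoupled, so a slow classification step does not block signal acquisition until the bounded queue fills up.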