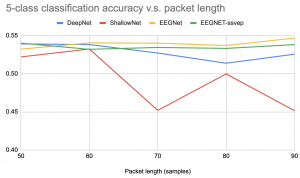

I implemented and tested the four neural networks we will be using. I made some small changes to the architectures so that they perform well on smaller packet sizes. The networks were originally designed for 256-512, so I changed the pooling layers from length 16 to length 3 and reduced the convolution filters from 16-32 to 3-5; both changes are meant to account for the change in our sampling rate. I then tested each network on packet sizes 50-90, with test results obtained from a held-out group of data. From the plot, we can see that DeepNet and ShallowNet perform relatively worse, and their performance doesn’t change much with packet size. EEGNet and EEGNet-SSVP, a variant of EEGNet, perform better and improve slightly at larger packet sizes. We will thus likely use these two for our future tests.
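To illustrate why the original pooling and filter lengths are a poor fit for packets of 50-90, here is a minimal sketch of the temporal length left after one convolution-plus-pooling stage. It is not the actual network code: it assumes an unpadded convolution with stride 1 followed by non-overlapping pooling, and the helper names (`conv_out_len`, `pool_out_len`, `stage_out_len`) are hypothetical. The kernel and pool values are taken from the ends of the ranges mentioned above.

```python
def conv_out_len(n, kernel, stride=1):
    """Output length of an unpadded 1-D convolution (assumed: no padding)."""
    return max((n - kernel) // stride + 1, 0)


def pool_out_len(n, pool):
    """Output length of non-overlapping pooling (assumed: stride == pool)."""
    return n // pool


def stage_out_len(n, kernel, pool):
    """Length after one convolution followed by one pooling layer."""
    return pool_out_len(conv_out_len(n, kernel), pool)


if __name__ == "__main__":
    for packet_size in (50, 70, 90):
        original = stage_out_len(packet_size, kernel=32, pool=16)
        reduced = stage_out_len(packet_size, kernel=5, pool=3)
        print(f"packet={packet_size}: original={original}, reduced={reduced}")
```

Under these assumptions, a packet of 50 is left with a single time step after one stage with the original settings, while the reduced settings keep 15. If the real layers use padding, the exact numbers differ, but the pooling length still dominates how quickly the temporal dimension collapses.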

Note that the plot shows a 5-class classification problem taken from our pre-training dataset. Although none of the networks exceeds 60% accuracy, this is a significantly harder task than our final goal of 2-3 class classification.
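One rough way to compare accuracies across different numbers of classes is to look at the chance level. The sketch below assumes balanced classes, which may not hold for our datasets, and the function names are hypothetical. The 0.60 used in the example is only the upper bound mentioned above, not a measured result.

```python
def chance_accuracy(n_classes):
    """Accuracy of random guessing, assuming balanced classes."""
    return 1.0 / n_classes


def chance_corrected(accuracy, n_classes):
    """Rescale accuracy so that chance maps to 0 and perfect maps to 1."""
    chance = chance_accuracy(n_classes)
    return (accuracy - chance) / (1.0 - chance)


if __name__ == "__main__":
    for k in (2, 3, 5):
        print(f"{k} classes: chance = {chance_accuracy(k):.2f}")
    # Illustrative only: 0.60 is the ceiling quoted above for the 5-class task.
    print(f"corrected: {chance_corrected(0.60, 5):.2f}")
```

With five balanced classes, chance is 20%, compared with 50% for two classes and about 33% for three, so the same raw accuracy means more on the 5-class task.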