- Current Risks and Mitigation

- We have spent a long time debugging GPS-based localization, and one risk is that these issues never get resolved. We will field test the localization within the next day. If it doesn’t work with the current setup, we will switch to a single Kalman filter that takes in all sensor inputs and localizes within the UTM frame.

- Another risk is that the perception system may slip because localization is behind schedule. Most of the code has been written except for the data association part, which shouldn’t take too long.
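
The report does not say which data association method will be used. As a minimal sketch, assuming pedestrian tracks and detections are both 2D positions in the same frame, a greedy nearest-neighbor matcher with a distance gate could look like the following. The function name `associate` and the `gate` value are hypothetical, chosen only for illustration.

```python
import math


def associate(tracks, detections, gate=1.0):
    """Greedy nearest-neighbor association (illustrative, not the team's method).

    tracks, detections: lists of (x, y) positions in the same frame.
    gate: maximum distance (same units as the positions) for a valid match;
          a hypothetical tuning value.
    Returns (matches, unmatched_tracks, unmatched_detections), where matches
    is a list of (track_index, detection_index) pairs.
    """
    # Collect every track/detection pair that falls inside the gate.
    candidates = []
    for ti, track in enumerate(tracks):
        for di, det in enumerate(detections):
            dist = math.dist(track, det)
            if dist <= gate:
                candidates.append((dist, ti, di))

    # Accept the closest pairs first; each track and detection is used once.
    candidates.sort()
    matches = []
    used_tracks, used_dets = set(), set()
    for _, ti, di in candidates:
        if ti in used_tracks or di in used_dets:
            continue
        matches.append((ti, di))
        used_tracks.add(ti)
        used_dets.add(di)

    unmatched_tracks = [i for i in range(len(tracks)) if i not in used_tracks]
    unmatched_dets = [i for i in range(len(detections)) if i not in used_dets]
    return matches, unmatched_tracks, unmatched_dets
```

Unmatched detections would typically start new tracks, and tracks that stay unmatched for several frames would be dropped. A globally optimal assignment (for example the Hungarian algorithm) is a common alternative when pedestrians walk close together.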

- Changes to System Design

- After evaluating ORB-SLAM2, we decided to use the GPS + IMU + wheel encoder localization method instead. The non-visual method already gives good enough results, and we do not think integrating another odometry source is worth the time it would take.

- Schedule Changes

- We have a lot of work to squeeze into this last week:

- Data association on pedestrians

- Verifying localization

- Testing the local planner with the new localization

- All the required metrics testing


- Progress Pictures/Videos

- These are some of the issues we are facing with a two-level state estimation system. Sometimes the local and global odometry become misaligned (green: global odometry, red: local odometry, blue: GPS odometry).

- We fixed this by verifying the data inputs and correcting the magnetic declination and yaw offset parameters.

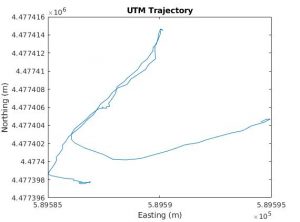

- This is a better run: the raw GPS data aligns well with the integrated odometry. We hope to get another validation run like this and perhaps log waypoints.
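
To illustrate why the magnetic declination and yaw offset parameters mentioned above matter, here is a minimal sketch of how a compass-referenced IMU yaw might be corrected before GPS and odometry are fused. The function and parameter names are hypothetical, and the additive sign convention is an assumption: it depends on the IMU and on the frame convention of the estimator in use.

```python
import math


def wrap_angle(angle_rad: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle_rad + math.pi) % (2.0 * math.pi) - math.pi


def corrected_yaw(imu_yaw_rad: float,
                  magnetic_declination_rad: float,
                  yaw_offset_rad: float) -> float:
    """Apply declination and mounting offset to a raw IMU yaw.

    Assumption: both corrections are additive. Some setups need the
    opposite sign, so check against a known heading in the field.
    """
    return wrap_angle(imu_yaw_rad + magnetic_declination_rad + yaw_offset_rad)


# Example with made-up values: raw yaw of 3.0 rad plus a 0.2 rad declination
# crosses pi, so the result wraps around to a negative angle.
print(corrected_yaw(3.0, 0.2, 0.0))
```

An error of even a few degrees in either parameter rotates the GPS track relative to the wheel odometry, which is consistent with the misalignment described above.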

- Here’s a video of a constant-velocity Kalman filter tracker that we will use on pedestrians: MATLAB KF Tracker

- Here’s a video of the same algorithm tracking me in 3D (red is filtered, blue is raw detections): Tracking in 3D Real World
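
For readers unfamiliar with the technique, the sketch below shows a constant-velocity Kalman filter along a single axis in plain Python. It is not the MATLAB tracker from the videos. The class name, the noise values `q` and `r`, and the initial covariance are hypothetical, and a 3D tracker could run one such filter per axis or use a joint state.

```python
from dataclasses import dataclass


@dataclass
class ConstantVelocityKF:
    """1D constant-velocity Kalman filter with state [position, velocity]."""
    x: float = 0.0    # position estimate
    v: float = 0.0    # velocity estimate
    # Entries of the symmetric covariance P = [[pxx, pxv], [pxv, pvv]].
    pxx: float = 1.0
    pxv: float = 0.0
    pvv: float = 1.0
    q: float = 0.1    # process noise intensity (hypothetical tuning value)
    r: float = 0.25   # measurement noise variance (hypothetical tuning value)

    def predict(self, dt: float) -> None:
        # State transition F = [[1, dt], [0, 1]]: position advances by v * dt.
        self.x += self.v * dt
        # P <- F P F^T + Q, with Q from a white-noise-acceleration model.
        pxx = self.pxx + 2.0 * dt * self.pxv + dt * dt * self.pvv + self.q * dt**3 / 3.0
        pxv = self.pxv + dt * self.pvv + self.q * dt**2 / 2.0
        pvv = self.pvv + self.q * dt
        self.pxx, self.pxv, self.pvv = pxx, pxv, pvv

    def update(self, z: float) -> None:
        # Measurement model H = [1, 0]: a detection observes position only.
        s = self.pxx + self.r      # innovation covariance
        kx = self.pxx / s          # Kalman gain for position
        kv = self.pxv / s          # Kalman gain for velocity
        y = z - self.x             # innovation
        self.x += kx * y
        self.v += kv * y
        # P <- (I - K H) P
        pxx = (1.0 - kx) * self.pxx
        pxv = (1.0 - kx) * self.pxv
        pvv = self.pvv - kv * self.pxv
        self.pxx, self.pxv, self.pvv = pxx, pxv, pvv


# Usage: track a target moving at 1 m/s, sampled every 0.1 s.
kf = ConstantVelocityKF()
for k in range(1, 201):
    kf.predict(0.1)
    kf.update(k * 0.1)
print(kf.x, kf.v)
```

Raising `r` relative to `q` makes the filtered track smoother but slower to react when a pedestrian changes direction.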

Carnegie Mellon ECE Capstone, Spring 2021 | Michael Li, Sebastian Montiel, Advaith Sethuraman