This week we began implementation based on our restructured plan. Neeti is working on the gesture classifier, Shrutika is modelling the platform animation in Simulink, and Gauri is working on the control loop. We also ordered and received the microphones, so we now have all the parts we need to complete the project. The main change is that we are animating the platform in Simulink instead of building a physical one, and rotating that animation based on the inputs received and processed by the Raspberry Pis (RPis). Overall, we appear to be on track to demo at least a mostly complete version of manual mode.

Updated Risk Analysis:

We have identified several risk factors for our project. The first relates to gesture detection: input is captured by the Raspberry Pi Camera Module, classified as a left or right gesture, and forwarded to the paired COMOVO. The risk is that gesture recognition accuracy depends on the size and quality of our dataset. Since we no longer have the resources to build our own dataset, we are using an existing one and have changed the gestures to thumbs-up for right and thumbs-down for left. For the demo, we plan to narrow the scope to recognizing gestures against a plain black or white background.
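A minimal sketch of how a classifier prediction might be turned into a rotation command for the paired COMOVO is shown below. The label names `thumbs_up`/`thumbs_down`, the 0.8 confidence threshold, and the `gesture_to_command` helper are all hypothetical; the actual dataset's class names and the threshold would have to come from our classifier and tuning. Dropping low-confidence predictions is one simple way to avoid rotating on an ambiguous gesture.

```python
from typing import Optional

# Hypothetical class labels; the real dataset's label names may differ.
GESTURE_TO_DIRECTION = {
    "thumbs_up": "right",
    "thumbs_down": "left",
}

# Assumed confidence threshold; would need tuning on real data.
CONFIDENCE_THRESHOLD = 0.8


def gesture_to_command(label: str, confidence: float,
                       threshold: float = CONFIDENCE_THRESHOLD) -> Optional[str]:
    """Map a classifier prediction to a rotation command.

    Returns "right" or "left", or None when the label is not a known
    gesture or the classifier's confidence is below the threshold.
    """
    if confidence < threshold:
        return None
    return GESTURE_TO_DIRECTION.get(label)


if __name__ == "__main__":
    print(gesture_to_command("thumbs_up", 0.93))    # right
    print(gesture_to_command("thumbs_down", 0.85))  # left
    print(gesture_to_command("thumbs_up", 0.40))    # None (low confidence)
    print(gesture_to_command("fist", 0.99))         # None (unknown gesture)
```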

The second risk factor is the accuracy of sound localization. We are aiming for the COMOVO to rotate towards the loudest speaker with very high accuracy, and we have identified several risks to this: ambient sound, sound from the phone speaker, and the limited accuracy of directional microphones. We plan to place hand-made baffles around each mic to limit how much ambient noise it picks up. For the demo video, we will record in a quiet room to minimize distracting ambient noise.
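As an illustration of the "rotate towards the loudest speaker" idea, the sketch below compares the RMS energy of one audio frame per baffled mic and returns the direction the loudest mic faces. It assumes four mics spaced 90° apart, per-mic gain factors to compensate for differing sensitivities, and a noise floor below which no rotation is issued; the angles, gains, noise floor, and function names are hypothetical placeholders, not our actual implementation.

```python
import math
from typing import Optional, Sequence

# Hypothetical layout: the angle (degrees) each baffled mic faces.
MIC_ANGLES_DEG = [0.0, 90.0, 180.0, 270.0]

# Assumed RMS level below which we treat the room as silent (needs tuning).
NOISE_FLOOR = 0.01


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def loudest_direction(frames: Sequence[Sequence[float]],
                      gains: Sequence[float],
                      angles_deg: Sequence[float] = MIC_ANGLES_DEG,
                      noise_floor: float = NOISE_FLOOR) -> Optional[float]:
    """Return the facing angle of the mic with the highest gain-corrected RMS.

    Returns None if even the loudest mic is below the noise floor, so the
    platform does not rotate in response to background noise.
    """
    # Scale each mic's level by its calibration gain.
    levels = [rms(frame) * g for frame, g in zip(frames, gains)]
    best = max(range(len(levels)), key=lambda i: levels[i])
    if levels[best] < noise_floor:
        return None
    return angles_deg[best]


if __name__ == "__main__":
    frames = [
        [0.01, -0.01] * 4,  # mic facing 0 degrees: quiet
        [0.5, -0.5] * 4,    # mic facing 90 degrees: speaker is here
        [0.02, -0.02] * 4,
        [0.01, -0.01] * 4,
    ]
    print(loudest_direction(frames, [1.0] * 4))  # 90.0
```

Energy comparison only resolves direction to the nearest mic; the per-mic gains are where sensitivity tuning would plug in.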

The third risk factor is the potential for higher latency than anticipated. Since our use case is long-distance video calls, our short-distance testing may not accurately represent the latency of that intended use case.

Finally, the performance of our device depends heavily on parameter tuning: the classifiers will need to be tuned to accurately recognize heads and hands, and the sensitivities of the baffled omnidirectional microphones need to be high enough to offset the short distance between them.

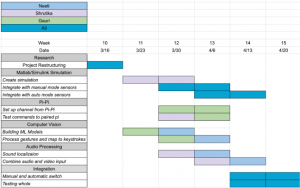

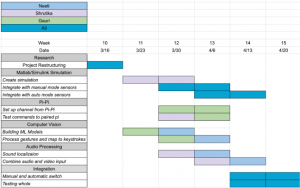

Updated Gantt chart: