This week, I spent time understanding MIDI: its message types, how messages are generated, and how they are parsed. I wrote some sample code in Python using the mido library to generate MIDI messages, mapping some of my keys to different notes. I then routed the MIDI output to GarageBand to observe the latency and smoothness of MIDI generation, and was pleasantly surprised to find that the latency was basically unnoticeable. Next week, I will work on implementing our own audio generator, possibly with a wavetable, and try to connect it to the MIDI generation.
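As a rough illustration of the key-to-note mapping described above, here is a minimal sketch that builds raw note-on and note-off bytes by hand. It is not the actual sample code: the key layout, the `KEY_TO_NOTE` table, and the `note_message` helper are all hypothetical, and it assumes the keys are computer keyboard keys. With mido, the same message could be created with `mido.Message('note_on', note=60, velocity=64)`; the standard library is used here only to keep the example self-contained.

```python
# Hypothetical layout: home-row keys mapped to one octave of white keys.
KEY_TO_NOTE = {
    "a": 60,  # middle C
    "s": 62,  # D
    "d": 64,  # E
    "f": 65,  # F
    "g": 67,  # G
    "h": 69,  # A
    "j": 71,  # B
    "k": 72,  # C, one octave above middle C
}

NOTE_ON = 0x90   # status byte for note-on, channel bits are OR-ed in
NOTE_OFF = 0x80  # status byte for note-off


def note_message(key, pressed, velocity=64, channel=0):
    """Return the 3-byte MIDI message for a key press or release.

    Returns None for keys that are not in the (hypothetical) mapping.
    """
    note = KEY_TO_NOTE.get(key)
    if note is None:
        return None
    status = (NOTE_ON if pressed else NOTE_OFF) | (channel & 0x0F)
    return bytes([status, note, velocity & 0x7F])


if __name__ == "__main__":
    # Press and release the "a" key; print the bytes as hex.
    print(note_message("a", pressed=True).hex(" "))
    print(note_message("a", pressed=False).hex(" "))
```

Each message is three bytes: a status byte (message type plus channel), the note number, and the velocity. Data bytes are limited to 7 bits, which is why the velocity is masked with `0x7F`.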

Jason’s Status Report 3

This week, I spent most of my time generating live audio using tone.js and getting familiar with the package's functionality. It has all the features we need, including pitch bends and effects chains. I also spent a lot of time cleaning up the project codebase and dependencies, which had become very cluttered during our frantic past few weeks of trying to implement several different features in different languages. After cleaning up the code, we created a new repo on GitHub to isolate the environments and packages that we decided to stick with.

Team Status Report 3

This week, we began writing code that outputs sound in real time, using MIDI signals and the tone.js library for audio generation. In parallel, we also began work on motion classification with the GearVR controller.

Before we started work on motion classification, we extended the functionality of the GearVR analysis tool we built last week to include data from all of the sensors (gyroscope, touchpad, and touchpad clicks). This helped us design some classification methods and calibrate our sensors.

Team Status Report 2

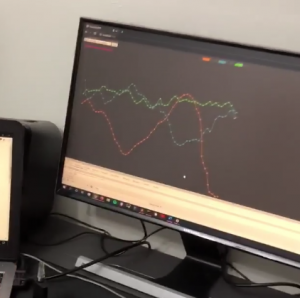

This week, we successfully reverse-engineered the Bluetooth protocol for the GearVR controller. Right now, we are able to read all sensor values from the controller (gyroscope, accelerometer, buttons, and touchpad). Before implementing motion detection, we decided to graph the sensor values for each type of motion we want to classify. This helped us better understand what the data looks like, and will help us when we classify motions.

After establishing a stable connection with the controller, we were also able to generate real-time MIDI messages, controlled by the trigger button on the GearVR controller, and output sound through GarageBand. We have not measured the latency yet, but it was hardly noticeable. All in all, we got past a major roadblock this week (figuring out how to use the GearVR controller), and are only slightly behind schedule.
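One plausible way to turn a stream of trigger readings into MIDI events is edge detection: send a note-on when the trigger goes from released to pressed, and a note-off on the opposite transition. The sketch below is an assumption about how this could work, not a description of our code. The `trigger_events` function, the boolean sample format, and the fixed note and velocity values are all hypothetical.

```python
def trigger_events(samples, note=60, velocity=100):
    """Return (event, note, velocity) tuples for each trigger state change.

    `samples` is an iterable of booleans (True = trigger pressed), standing in
    for whatever the controller actually reports. Repeated identical readings
    produce no events, so holding the trigger does not retrigger the note.
    """
    events = []
    previous = False  # assume the trigger starts released
    for pressed in samples:
        if pressed and not previous:
            events.append(("note_on", note, velocity))
        elif previous and not pressed:
            events.append(("note_off", note, 0))
        previous = pressed
    return events


if __name__ == "__main__":
    # Trigger pressed for two readings, released, then pressed again.
    for event in trigger_events([False, True, True, False, True]):
        print(event)
```

Only emitting events on state changes keeps the message rate low, which matters when the goal is to keep latency down.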

Introduction

We plan on creating a motion-controlled MIDI controller, similar to a keyboard controller with a pitch wheel. The controller will be played with two hands: one hand controls note selection through a phone app, and the other controls note output using motion. Our main goal is to minimize the latency of the controller to allow for smooth playing.