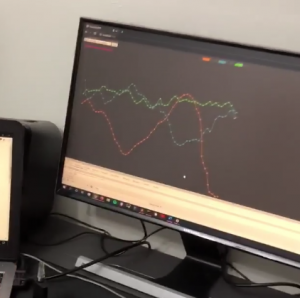

This week, we successfully reverse-engineered the Bluetooth protocol for the GearVR controller, and we can now read all of its sensor values: gyroscope, accelerometer, buttons, and touchpad. Before implementing motion detection, we decided to graph the sensor values for each type of motion we want to classify. This helped us understand what the data looks like, and it should make designing the motion classifier easier.
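Graphing each motion is easier if every recording is saved with its motion label. The sketch below shows one way to do that, writing decoded readings to CSV so they can be plotted later. It assumes the readings have already been decoded from the Bluetooth packets. The `Sample` record, its field names, and the CSV column layout are hypothetical and do not describe the GearVR packet format.

```python
import csv
from dataclasses import dataclass
from typing import Iterable, TextIO, Tuple

# Hypothetical decoded reading; field names and units are assumptions,
# not the actual GearVR packet layout.
@dataclass
class Sample:
    t: float                           # seconds since the recording started
    gyro: Tuple[float, float, float]   # angular rate (x, y, z)
    accel: Tuple[float, float, float]  # acceleration (x, y, z)
    trigger: bool                      # trigger button state

# One column per value, plus the motion label so recordings can be grouped.
FIELDS = ["motion", "t", "gx", "gy", "gz", "ax", "ay", "az", "trigger"]

def write_recording(out: TextIO, motion: str, samples: Iterable[Sample],
                    header: bool = False) -> int:
    """Write labelled samples as CSV rows and return how many rows were written."""
    writer = csv.writer(out)
    if header:
        writer.writerow(FIELDS)
    n = 0
    for s in samples:
        # Flatten the 3-axis tuples so each axis gets its own column.
        writer.writerow([motion, f"{s.t:.3f}", *s.gyro, *s.accel, int(s.trigger)])
        n += 1
    return n
```

Keeping one labelled row per reading means every recorded motion can be loaded into a single table and plotted axis by axis, with one trace per motion label.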

After establishing a stable connection with the controller, we were also able to generate real-time MIDI messages from the controller's trigger button and play the resulting sound through GarageBand. We have not measured the latency yet, but it was barely noticeable. All in all, we got past a major roadblock this week (figuring out how to use the GearVR controller) and are only slightly behind schedule.
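The post does not say how the MIDI messages were generated or delivered to GarageBand, so the following is only a sketch of the general idea. It turns a stream of trigger states into standard MIDI 1.0 Note On/Note Off bytes, sending a message only when the trigger changes state. The class name `TriggerToMidi`, the choice of note 60 (middle C), and the velocity of 100 are assumptions. Actually sending the bytes to GarageBand would additionally need a MIDI output port, such as a virtual port opened through a library like python-rtmidi or mido, which is not shown here.

```python
from typing import Optional

# MIDI 1.0 channel voice status bytes (upper nibble); the lower nibble is the channel.
NOTE_OFF = 0x80
NOTE_ON = 0x90

def _check(channel: int, note: int, velocity: int) -> None:
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")

def note_on(note: int, velocity: int = 100, channel: int = 0) -> bytes:
    """Build a 3-byte Note On message."""
    _check(channel, note, velocity)
    return bytes([NOTE_ON | channel, note, velocity])

def note_off(note: int, channel: int = 0) -> bytes:
    """Build a 3-byte Note Off message with release velocity 0."""
    _check(channel, note, 0)
    return bytes([NOTE_OFF | channel, note, 0])

class TriggerToMidi:
    """Hypothetical helper: emits MIDI only on trigger press/release edges."""

    def __init__(self, note: int = 60, channel: int = 0) -> None:
        self.note = note
        self.channel = channel
        self.pressed = False

    def update(self, trigger_down: bool) -> Optional[bytes]:
        """Feed the latest trigger state; return a message to send, or None."""
        if trigger_down and not self.pressed:
            self.pressed = True
            return note_on(self.note, channel=self.channel)
        if not trigger_down and self.pressed:
            self.pressed = False
            return note_off(self.note, channel=self.channel)
        return None  # state unchanged, so nothing to send
```

Sending messages only on state changes, rather than on every sensor packet, keeps the MIDI stream small. It also ensures that every Note On is followed by exactly one matching Note Off.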