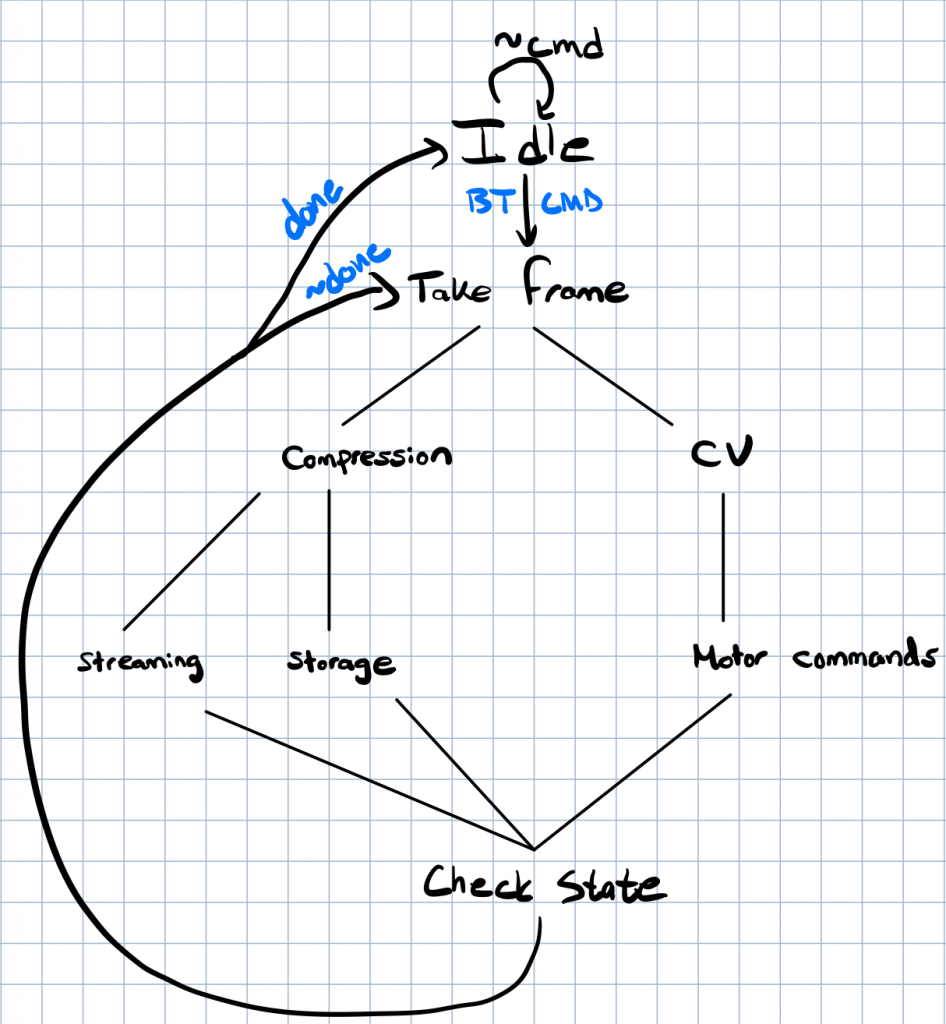

NVIDIA Jetson – FSM

- Overview: InFrame’s core processing and camera control will be centralized on the NVIDIA Jetson module. This decision brings the image processing closer to the camera itself and, as a result, cuts down on data transmission delays. Compared to the first design, in which images were sent to a remote iPhone for processing, this approach enables a higher effective frame rate for the CV-processed video.

- Commands: When the Jetson receives a command (a process explained further in the Communication section below), the device exits its Idle state and begins the bulk of its work. The current design accepts two commands, each leading into its own FSM branch:

  - currentSnapshot – The system captures an image frame, compresses it, and either sends the compressed image to the controlling iPhone, where the user selects which detected object or person to track, or stores the snapshot locally when no network connection is available.

  - followTarget – The system enters a tracking mode with a target specified by the user on the iPhone. This branch first captures a frame, passes it to an on-board entity-tracking algorithm, and sends commands to the motor controllers to realign the target at the center of the frame.

- After each branch has been executed, a system state check confirms the status of the current command. If the command has completed, the device returns to the Idle state; otherwise it captures another frame and continues along the same branch.
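The state machine described above could be sketched roughly as follows. This is only an illustration under our own assumptions: the `JetsonFSM` class, the `State` names, and the `command_complete` flag are hypothetical, and the frame capture and tracking work are left out entirely.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    SNAPSHOT = auto()
    FOLLOW = auto()


# The two accepted commands, mapped to the FSM branch each one starts.
BRANCHES = {"currentSnapshot": State.SNAPSHOT, "followTarget": State.FOLLOW}


class JetsonFSM:
    """Hypothetical skeleton of the Idle -> branch -> state check loop."""

    def __init__(self):
        self.state = State.IDLE

    def on_command(self, command):
        # Leave Idle only when an accepted command arrives; ignore anything else.
        if self.state is State.IDLE and command in BRANCHES:
            self.state = BRANCHES[command]
        return self.state

    def step(self, command_complete):
        # One pass through the current branch, followed by the state check.
        if self.state is State.IDLE:
            return self.state
        # A real pass would capture a frame and do the branch's work here.
        if command_complete:
            self.state = State.IDLE
        return self.state
```

Calling `step(False)` keeps the device in its branch for another frame, while `step(True)` models the state check sending it back to Idle.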

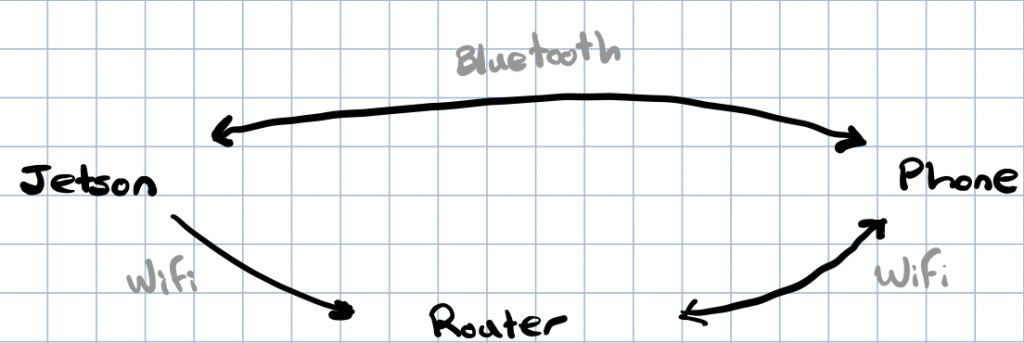

System Communication Diagram

- Overview: To modularize the InFrame system, we have split processing and operations across multiple devices. Communication between these devices must be planned to minimize latency while still meeting the baseline requirements for a functioning MVP.

- Remote Commands: To control the camera system, a remote iOS interface will be created with the ability to send high-level commands to the central Jetson, where they can be combined with data on the camera's pitch/tilt (stored only on the Jetson itself) to make a more informed movement decision. This design choice minimizes the amount of data transmitted between devices (e.g. the iOS app does not need to know the camera's current position) while also providing a clean, easily accessible user experience.

- Thus, for these high-level commands, the Jetson will use Bluetooth as its communication medium. Bluetooth was picked over Wifi to accommodate InFrame’s outdoor use cases, such as filming a specific target at a skate park, where Wifi may not be readily accessible.
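To give a sense of how small a high-level command can be, here is a minimal sketch of one possible encoding. The wire format is entirely our assumption for illustration (a 1-byte opcode plus a 2-byte target id); the opcode values and the `encode_command` / `decode_command` helpers are hypothetical, and no Bluetooth library is involved.

```python
import struct

# Hypothetical opcodes; the design names the commands but not their encoding.
OPCODES = {"currentSnapshot": 1, "followTarget": 2}
NAMES = {code: name for name, code in OPCODES.items()}

# Assumed layout: 1-byte opcode, 2-byte big-endian target id (0 when unused).
_FORMAT = ">BH"


def encode_command(name, target_id=0):
    """Pack a command name and optional target id into a 3-byte payload."""
    return struct.pack(_FORMAT, OPCODES[name], target_id)


def decode_command(payload):
    """Unpack a 3-byte payload back into (command name, target id)."""
    opcode, target_id = struct.unpack(_FORMAT, payload)
    return NAMES[opcode], target_id
```

Under this assumed format, a command fits in three bytes, which sits comfortably within a low-bandwidth link; the camera's pitch/tilt never has to leave the Jetson.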

- Video Delivery: When the device can access a Wifi network, it will deliver its recorded video to the iOS app, where users can download and view the footage. Wifi was chosen as the medium for video transfer in order to maximize bandwidth (~11 Mbps vs. Bluetooth’s ~800 Kbps). Until the device can connect to a network, any offline footage will be stored locally on the Jetson module.
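A quick back-of-the-envelope calculation shows why the bandwidth gap matters for video. The sketch below uses the approximate link rates quoted above; the 50 MB clip size and the `transfer_seconds` helper are assumptions chosen purely for illustration, and real throughput would be lower once protocol overhead is counted.

```python
def transfer_seconds(size_megabytes, link_megabits_per_second):
    """Ideal transfer time: convert megabytes to megabits, divide by link rate."""
    return size_megabytes * 8 / link_megabits_per_second


CLIP_MB = 50  # hypothetical clip size, not a measured value

wifi_time = transfer_seconds(CLIP_MB, 11)        # roughly 36 seconds
bluetooth_time = transfer_seconds(CLIP_MB, 0.8)  # roughly 500 seconds

print(f"Wifi: {wifi_time:.0f} s, Bluetooth: {bluetooth_time:.0f} s")
```

At these rates the same clip would take roughly fourteen times longer over Bluetooth, which is why footage waits on the Jetson until a Wifi network is available.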
