Status Update 5/4/19

Ally:

- Worked on final presentation and demo poster

- Added login and register functionality so users are now required to log in to access the tool; the purpose of this was to save uploaded music per individual

- Made the sheet music interactive so a user can edit anything about the sheet music, whether it be adding notes, changing notes, or editing the title; the purpose of this was to account for mistakes made by Audiveris in converting a pdf to musicXML

- Added a progress bar to the ‘play’ page so users are aware that back-end processing is occurring

- Updated the home screen since many links had changed since I began designing the website

- Integrated front-end with back-end so different functions are called based on the user playing violin or piano (mainly added so people could try out our tool on the keyboard during demos); updated percentage calculation based on new back-end format

Sasi:

- Created separate ABCJs conversion functions for both the violin and the piano.

- Tested several different recordings of the violin.

- Played with different ways to display note lengths with ABCJs. This was because I was trying to figure out how to display the two staffs differently when the smallest note in one was an eighth note and the smallest in the other was a quarter note.

- Worked on the poster for demo day

- Final preparations for demo day including making sure the entire pipeline was running smoothly.

Tianbo:

- Gave our team’s final presentation on Monday

- Worked on the Poster for our final demo

- Made some small refinements to the back end

- Did some testing with the team using the keyboard as our audio input to our product.

Status Update 4/27/19

Ally:

- I set up audio recording through the browser, so a user now has the option to upload a pre-recorded wav file or record from their device. Upon completion of their recording, their audio file is saved and appears on the same screen as the ‘latest upload’. They still click ‘Results’ to actually render their analysis.

- I implemented a metronome on the play screen such that it adds beats according to the time signature of the piece and begins counting when a user begins their recording. It is just a visual metronome to avoid adding extra noise to the recording.

- As mentioned in my previous post, we decided to change how we are displaying results, so I updated the ‘Results’ page to show two stacked staffs, the top displaying the input sheet music and the lower showing the user’s audio, with wrong notes colored in red and correct notes colored in green.

- Interactive sheet music is the final main step to accomplish, then more “front-end” work can be implemented like creating users and email forms.

Sasi:

- I worked on the conversion of the pitch and rhythm detection to the input that Ally needs for front end.

- This included figuring out how to deal with key signature. I am taking in key signatures from 3 flats to 3 sharps.

- I also had to figure out how to deal with the differences in display with piano and violin. Currently, I have two different dictionaries for this.

- I also needed to format the incorrect notes that we are detecting using the Levenshtein distance. This requires a special format that gives the exact line number, measure number, and note in that measure.

- Finally, I worked on our final presentation.

Tianbo:

- Wrote the verification program between note sequences using the Levenshtein distance metric (https://en.wikipedia.org/wiki/Levenshtein_distance), where a note is used instead of a character.

- Worked on fixing some issues with the backend audio processing (like removing leading and trailing rests in a recording).

- Created audio files programmatically out of MIDI files for testing performance. However, it seems that the generated audio waveforms are different from those recorded by a microphone.

- Collected test results on audio processing performance from the bank of recorded piano and violin clips we have.

- Worked on the final presentation powerpoint
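A minimal sketch of the note-level Levenshtein comparison mentioned in Tianbo's first item above. This is not the project's verification code: the function name and the (pitch, length) tuple used to represent a note are assumptions made for illustration.

```python
def note_edit_distance(expected, played):
    """Levenshtein distance where each element is a whole note, not a character."""
    rows, cols = len(expected) + 1, len(played) + 1
    dist = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        dist[i][0] = i          # delete every expected note
    for j in range(cols):
        dist[0][j] = j          # insert every played note
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if expected[i - 1] == played[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # note skipped by the player
                dist[i][j - 1] + 1,         # extra note played
                dist[i - 1][j - 1] + cost,  # match or wrong note
            )
    return dist[-1][-1]


# Hypothetical data: (pitch, length in beats)
score = [("D4", 1), ("D4", 1), ("A4", 1), ("A4", 1), ("B4", 1), ("B4", 1), ("A4", 2)]
take = [("D4", 1), ("A4", 1), ("A4", 1), ("B4", 1), ("C5", 1), ("A4", 2)]
print(note_edit_distance(score, take))  # 2: one skipped note, one wrong note
```

Because a skipped note counts as a single deletion, the notes after it can still line up with the score instead of all being marked wrong.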

Status Update 4/20/19

Ally:

- Hooked up the website ‘upload music’ button to back-end code so a user can now upload a pdf and use it as their piece of music

- Uploaded pdf gets saved, then retrieved by Audiveris, runs OS command line prompts which output xml file in local folder

- Retrieve xml file and run through command line script to convert xml to ABCJs notation

- Parse this return notation into a Song object that gets sent to the front end to display

- Put restrictions on file uploads so only able to upload pdfs of sheet music and .wav files for audio files

- Decided with the group to switch to displaying the user’s input on a separate line below the input sheet music, so need to re-do this display asap

- Still need to implement through-browser recording (ran into a lot of difficulties trying to figure out existing software, will continue to work on this)

- Still need to implement interactive sheet music for users!! (all to be done by Wed)

Sasi:

- Spent a good amount of time working on integration. This included hooking up the microphone properly and making sure that we were receiving input.

- We tested using many different samples. This included changing the placement of the mic so that the audio was neither too feeble nor too loud. We also needed to test with rest detection.

- I also continued work on the backend with the conversion of the pitch detection output to the frontend. We changed the way we are going to do this so that it is more compatible with the pdf scanning and xml interpretation.

- My goals for next week are to make sure that the conversion is complete for the demo on Wednesday. We will also begin to work on our final presentation and final report.

Tianbo:

- Improved onset detection for Violin music using a moving median filter. Now the detected peaks are much closer to the true onsets

- Set up and tested our audio processing with the violin microphone we ordered. Results seem to be consistent with our uploaded audio files.

- Wrote some code to extract the pitches and note lengths of music xml files using a python package called music21.

- Started to think about smarter verification between the user’s input and the score. Currently, if a user were to play a wrong rhythm, all the notes after that point would be marked wrong. Considering using a variation of minimum edit distance.
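As a rough illustration of the moving median filter from the first item above, the sketch below smooths a detection function before peak picking. The function names, window size, and threshold are assumptions; the sample values are made up and are not taken from our recordings.

```python
from statistics import median


def moving_median(values, window=3):
    """Replace each sample with the median of the window centred on it.

    Windows are simply truncated at the edges of the list.
    """
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1])
            for i in range(len(values))]


def pick_peaks(values, threshold):
    """Return indices of local maxima above threshold (centre of any plateau)."""
    peaks = []
    i = 1
    while i < len(values) - 1:
        if values[i] > threshold and values[i] > values[i - 1]:
            j = i
            while j + 1 < len(values) and values[j + 1] == values[i]:
                j += 1                      # walk along a flat top
            if j + 1 < len(values) and values[j + 1] < values[i]:
                peaks.append((i + j) // 2)  # centre of the plateau
            i = j + 1
        else:
            i += 1
    return peaks


# Made-up detection function: a one-sample glitch at index 2, a real onset at 8
raw = [0, 0, 9, 0, 0, 0, 2, 5, 8, 5, 2, 0, 0]
print(pick_peaks(raw, threshold=1))                    # [2, 8]
print(pick_peaks(moving_median(raw, 3), threshold=1))  # [8]
```

A median filter discards isolated spikes outright, whereas an average would only spread them out, which is one reason it can suit noisy detection functions.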

Status Update 4/13/19

Ally:

- I worked on implementing a ‘Record’ button, allowing a user to record their audio input directly from the browser. I spent a lot of time testing different existing functions and libraries, and settled on using RecordJS, an open-source library. It provides a simple recording script, but doesn’t convert the file to a downloadable format. Most other existing libraries are written for Node by creating event listeners and streams, so adapting them to Python is a bit tricky.

- I followed a tutorial on connecting RecordJS to a Django backend; however, the tutorial is outdated and doesn’t return the exact wav format I am looking for. This is now my top priority task to complete, since I’ve discovered it is not as straightforward as originally anticipated.

- Plans for next week are to have the browser recording fully implemented as well as implementing a pdf upload and conversion to sheet music using another open-source library, Audiveris.

Sasi:

- This week I didn’t get as much as I wanted done because of carnival.

- Mostly worked on the backend where I am trying to integrate the output of the pitch detection for Ally to use for front end.

- Looked into switching to a different output that will be easier for Ally to display.

- This includes not having such a strict way of displaying note length. Currently, note length is displayed in units of the smallest beat in the music, which is hard to detect before beginning the conversion process.

- Did research on microphones for the violin with Professor Sullivan’s help.

- Goals for next week are to integrate the violin and continue working on the conversion to string.

Tianbo:

- Instead of working on testing, I worked on getting violin onsets working. Going back to http://www.iro.umontreal.ca/~pift6080/H09/documents/papers/bello_onset_tutorial.pdf I used the high frequency content approach described on page 4 with median value smoothing to try to find violin onsets. This gives varied results (more testing required).

- I also applied the rest detection algorithm used for piano to violin and it appears to generalize fairly well. The existing pitch detection algorithm also appears to work for violin.

- Next week I will try to improve the performance of violin onset detection and look into improving the verification code for comparing the audio to the sheet music.

Status Update 4/6/19

Team:

- Our team was able to successfully connect our back-end to our front-end in time for our demo (yay!). Schedule has been updated according to progress thus far, showing how project is expected to be completed in the upcoming four weeks.

Ally:

- I did a lot of work leading up to the demo to get all of our parts integrated and sending data properly. I had previously hardcoded strings representing our sample songs in a js file, but could not gracefully send all necessary data back to python to compare with the back-end generated string. Because of this, I created a new model to store all necessary data and create these sample models in my python files.

- I pulled the back-end functions that take a wav file, tempo, and time signature as inputs and output a string in correct ABCJs format. I connected this to my own python functions and wrote a function to compare the two songs. My function then outputs a new song with all combined notes to be displayed. It also tracks every wrong note and sends these classes to my html so that each wrong note appears in red (when a note is incorrect, both the incorrect and correct note are colored in red; the ABCJs API doesn’t have a graceful way to single out notes so getting individual chords was rather difficult; hopefully I can single out notes in the future). This example shows the completed ‘Results’ page with two incorrect notes, as well as two missed notes at the end.

- I also wrote functions that take the resulting song and calculate the correctness of the file, comparing the number of incorrect notes to the total notes in the song. The website displays this percentage with a color corresponding like so: green >=90%, yellow >=70%, red <70%

- I added constraints to all of the buttons, so a user cannot begin recording or save music unless a piece is actually selected, and a user cannot upload a song without entering a title and uploading a file.

- Next steps:

- Comparison function is not complete; works for audio files used on demo but need to add checks for all possible inputs

- Integrate Audiveris so user can upload pdfs of other music (aiming for these two steps to be complete by next week)

- Record audio directly from website instead of only uploading

- Interactive sheet music before a user begins recording, allowing them to correct any mistakes made by Audiveris (work with the ABCJs editor tool)

- Want to get red notes on single incorrect note, with the should-have-been-played correct note still in black

- Login and Register so users can save their music and recordings

- Email Form so website visitors can email us for support or suggestions
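The correctness percentage and colour rule described above (green >= 90%, yellow >= 70%, red < 70%) can be sketched as follows. The function names are hypothetical and this is not the code running on the site.

```python
def correctness_percent(total_notes, wrong_notes):
    """Share of notes played correctly, as a percentage."""
    if total_notes <= 0:
        return 0.0  # assumption: an empty song scores zero
    return 100.0 * (total_notes - wrong_notes) / total_notes


def score_color(percent):
    """Map a percentage to the display colour used on the results page."""
    if percent >= 90:
        return "green"
    if percent >= 70:
        return "yellow"
    return "red"


percent = correctness_percent(total_notes=28, wrong_notes=4)
print(round(percent, 1), score_color(percent))  # 85.7 yellow
```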

Sasi:

- This week I spent most of my time getting ready for the demo. For our demo, we were using a piano clip rather than a violin clip. So we used a working pitch detection and onset detection algorithm.

- For the full pipeline to work, we had to be able to output a string that Ally would be able to use in order to display the notes on the webpage.

- I wrote a script that took the note and the duration of the note and turned it into a string. The general string format looked something like this. “D D A A|B B A2|G G F F|E E D2|\n A A G G|F F E2|A A G G|F F E2|\n D D A A|B B A2|G G F F|E E D2|]\n”

- I ran into some issues because Ally needed the shortest note to be whatever was specified in her function. I fixed this by taking the time signature as a parameter which allows me to mark measures as well.

- We also figured out how to display flats and sharps if the note was played incorrectly as well.

- The script will need to be modified a little in order to incorporate key signature as well as time signature. Given that the scope of the violin pitches is much smaller than the piano, this should not be too difficult.

- Next week, I will continue working on the transition of piano to violin for pitch detection.
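A small sketch of the note-to-string conversion described above, using the time signature to place bar lines. It assumes every length is a whole number of the default note unit and that no note crosses a bar line; the function name is hypothetical and key signatures are not handled.

```python
def notes_to_abc(notes, beats_per_measure=4):
    """Turn (pitch_letter, length) pairs into an ABC-style string with bar lines."""
    measures, current, filled = [], [], 0
    for pitch, length in notes:
        # ABC writes a length of 1 as the bare letter, e.g. "A", and 2 as "A2"
        current.append(pitch if length == 1 else f"{pitch}{length}")
        filled += length
        if filled >= beats_per_measure:   # measure is full, close it
            measures.append(" ".join(current))
            current, filled = [], 0
    if current:                           # trailing incomplete measure
        measures.append(" ".join(current))
    return "|".join(measures) + "|"


melody = [("D", 1), ("D", 1), ("A", 1), ("A", 1), ("B", 1), ("B", 1), ("A", 2)]
print(notes_to_abc(melody))  # D D A A|B B A2|
```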

Tianbo:

- Worked on getting the back-end code running and ready to use for the demo.

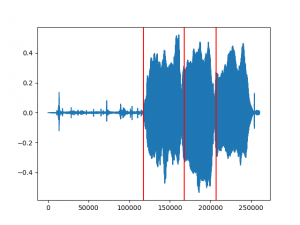

- Implemented rest detection. This works by considering periods of time where the amplitude is under some threshold. Periods of rest which are close together are combined into one period (sometimes the amplitude will randomly go above the threshold, but only for a very brief amount of time). Periods of rest which are very short and not close to any other periods of rest are thrown out. The following image shows the audio signal and red bars denoting either the start of the note, or the start of a rest.

- Next week I will be looking at programmatically testing the audio analysis to look at performance metrics.
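The rest detection steps described above (threshold, merge nearby quiet periods, drop short isolated ones) could look roughly like this. It is a sketch, not the project code: it assumes the input is an amplitude envelope given as a list, and the parameter names and default values are made up.

```python
def find_rests(envelope, threshold, merge_gap=1, min_length=3):
    """Return (start, end) index pairs, end exclusive, where the envelope is quiet."""
    # 1. Collect runs of consecutive samples below the threshold.
    runs, start = [], None
    for i, level in enumerate(envelope):
        if level < threshold and start is None:
            start = i
        elif level >= threshold and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(envelope)))

    # 2. Merge runs separated only by a brief blip above the threshold.
    merged = []
    for run in runs:
        if merged and run[0] - merged[-1][1] <= merge_gap:
            merged[-1] = (merged[-1][0], run[1])
        else:
            merged.append(run)

    # 3. Throw out quiet periods that are still too short to be a rest.
    return [(s, e) for s, e in merged if e - s >= min_length]


# Made-up envelope: a rest interrupted by a one-sample blip, then a tiny dip
envelope = [5, 5, 5, 0, 0, 0, 4, 0, 0, 0, 5, 5, 0, 5, 5, 5]
print(find_rests(envelope, threshold=1))  # [(3, 10)]
```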

Status Update 3/30/19

Team:

- Up to this point, team members were working mostly individually on their section of the project (front-end, onset detection, and pitch identification). The team decided to run the back end by integrating the Python functions into the views.py file. This is so we do not have to set up an AWS connection for now. We may never end up using AWS; if we do, it would only be to let Sasi and Tianbo change their functions more easily.

Ally:

- I decided for the sake of our demo to allow a user to upload a wav file instead of record through their device. I implemented a form to allow a user to upload a wav file, along with the title of the piece and a desired tempo. The tempo is currently defaulted to 100bpm, as our onset detection works best at slower tempos.

- The latest uploaded recording is then displayed on the same web page with a link to listen to the recording and a button to redirect to the results page.

- I am now finishing up the results page before our demo. I created another model to store different variables of a song (title, key, song string in ABCJs format) and am working on sending these different parts to the results page and rendering them. I still need to write a function that compares the uploaded recording with the chosen sample music and highlights any mistakes. It looks like it may be difficult to color single notes a different color, especially when they’re being represented as chords, but we will see how this turns out by the demo!

Sasi:

- I was able to successfully detect the pitch of notes. The code was done in MATLAB. I originally worked with piano notes. Given a simple song and the onsets of each pitch, it is able to classify the note.

- The general algorithm is to compute a fast Fourier transform, filter out the harmonics, and then take the highest-amplitude frequency, which is the frequency used to determine the exact pitch.

- Once I was able to successfully run it with piano notes, I tried doing it with violin notes. Except for a loss of a couple notes along the way (due to a bug in the code), I was able to successfully classify the notes as well.

- One of the things that doesn’t work is when I give it onsets that are rests or if there are too many onsets in the small span of time. I will be working on fixing this.

- I then started rewriting this code in Python, since the working algorithm is in MATLAB. The conversion process should not be too difficult.

- My goals for next week are to get ready for the demo and polish my code.
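To illustrate the "strongest frequency to pitch" idea described above, here is a dependency-free Python sketch. It uses a naive DFT instead of a real FFT library and does not include the harmonic filtering step, so it is a simplification rather than a port of the MATLAB code. The function names and the synthetic test tone are assumptions.

```python
import cmath
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def dominant_frequency(samples, sample_rate):
    """Frequency (Hz) of the strongest DFT bin, ignoring the DC bin."""
    n = len(samples)
    best_bin, best_mag = 1, 0.0
    for k in range(1, n // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples))
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * sample_rate / n


def frequency_to_note(freq):
    """Nearest equal-tempered note name, with A4 = 440 Hz (MIDI note 69)."""
    midi = round(69 + 12 * math.log2(freq / 440.0))
    return NOTE_NAMES[midi % 12] + str(midi // 12 - 1)


# Synthetic tone: 440 Hz fundamental plus a weaker harmonic at 880 Hz
rate = 4000
tone = [math.sin(2 * math.pi * 440 * t / rate)
        + 0.4 * math.sin(2 * math.pi * 880 * t / rate) for t in range(400)]
print(frequency_to_note(dominant_frequency(tone, rate)))  # A4
```

On a real recording the strongest bin is not always the fundamental, which is why the harmonic filtering step matters.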

Tianbo:

- Tried looking at average energy for violin onsets but didn’t have much success.

- Began working on detecting when a note ends (i.e. rests). There does not seem to be much literature in this area, so I am coming up with my own methods for doing this. Currently I am trying to use an amplitude thresholding method on a smoothed/averaged version of the audio signal.

- For the purposes of the demo, I have assumed that the user plays each note from its onset until the next note onset (i.e. assuming there are no rests in the piece). Using the user-provided tempo, I can easily estimate the duration of the note. I am currently estimating the duration to eighth-note granularity.

- The Python script I wrote currently outputs a list of piano key notes (https://en.wikipedia.org/wiki/Piano_key_frequencies) with their durations in eighth notes.
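The duration estimate described above (each note lasts until the next onset, rounded to the nearest eighth note) can be sketched like this. It assumes the tempo counts quarter notes per minute and that the end time of the last note is known; the function name and onset times are made up.

```python
def durations_in_eighths(onsets, end_time, tempo_bpm):
    """Length of each note in eighth notes, assuming no rests between notes."""
    eighth = 60.0 / tempo_bpm / 2  # seconds per eighth note (beat = quarter note)
    boundaries = list(onsets) + [end_time]
    return [max(1, round((b - a) / eighth))
            for a, b in zip(boundaries, boundaries[1:])]


# Made-up onset times in seconds at 100 bpm (one eighth note = 0.3 s)
onsets = [0.0, 0.62, 1.19, 1.51, 1.79]
print(durations_in_eighths(onsets, end_time=3.0, tempo_bpm=100))  # [2, 2, 1, 1, 4]
```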

Status Update 3/23/19

Team:

- Major design changes have been considered, including moving from a violin practice tool to one for piano. More testing is in the future, but the upcoming demo will likely analyze piano audio.

Ally:

- I used Bootstrap to implement a cohesive and professional theme among all url pages. I have also used many other Bootstrap features to enhance design, including the team bio’s layout, the navigation bar, icons, and more.

- I started rendering sheet music this week, as this is an essential feature for the MVP. Up until this point, I was planning on using an open-source library called VexFlow. I was able to display notes in a certain key, but I quickly found out there is no easy way to score multiple measures. Various ideas included rendering each measure and using CSS to line them up next to each other, or deactivating the tick counter to create a measure bar. These are both “hacky” methods and were not looking as clean as I had hoped, so I decided to switch to a different open-source library.

- I am now using an API called abcJS. I had considered this when I first began my research on music rendering libraries, but decided against it because it seemed less popular than VexFlow. I have found, however, that I am easily able to render an entire piece, including a title, tempo, and many advanced musical notations such as trills. Since the algorithm for transcribing music has yet to be completed, this switch does not require any additional adjustments.

- The website can be found at https://teamnoteable.herokuapp.com/ to fully see everything implemented up to this point.

- Current implementations:

- Home page entirely finished with exception of email form (links to WordPress and other page sections included throughout)

- JavaScript files written and implemented to send logic between pages and keep track of user data/selections (i.e. choice of song)

- ‘Get Playing’ page implemented with three sample songs rendering

- Choice of sample music sent through JS to actual ‘record’ page where rendered

- Still to-do by end of next week (for MVP):

- Upload mp3 on ‘record’ page

- mp3 -> abcJS render

- Script to compare two music files

- Discrepancies highlighted in red

- Results page with correct percentage

- Unnecessary but design improvements:

- Make nav bar disappear/reappear when scrolling up/down

- Arrow on bottom right redirecting to top of page

- Increase space above section headers

- Smooth scroll to different sections of page

- Less spacing between descriptions beneath avatars

- All pages robust to various screen sizes

Sasi:

- This week I spent the majority of my time testing pre-existing applications with the violin instead of the piano. The point of this was to see whether the violin was a viable choice of instrument compared with the piano.

- I used the tool aubio which has separate pitch detection and onset detection.

- For pitch detection, the software would classify the pitch it detected every couple milliseconds. Taking the output and comparing the frequency to a chart, I found that it did pretty well with detecting the pitch.

- I tried many different types of samples. This included just a single note to start, then multiple notes. I played the notes together smoothly and then I put deliberate stops in between. I also tried open notes and different sharps and flats. Finally, I did string crossings to see how the output changed with a noisier file.

- All of the samples that I used performed very well for pitch detection. However, the samples where I put deliberate stops performed much better for onset detection. Even with the stops, the onset detection was not very accurate. The following picture shows how many onsets aubio detected when I played 4 notes. It detected the correct onsets as well as many false positives.

- Finally, I worked on pitch detection and running an algorithm where I am able to detect notes given that I know when the onset of each note is in the excerpt.

Tianbo:

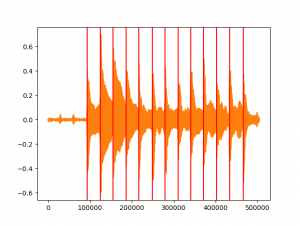

- Implemented an onset detector that works well with piano audio. This uses a derivative of Gaussian window filter in order to reduce the audio signal into a detection function where the peaks correspond to the onsets. Inspiration for using this particular function as a filter was found here: https://archive.cnx.org/contents/452b6bad-af84-47bf-a884-a123062ddafe@1/matlab-code-that-implements-piano-note-detection. Below is an example run on a chromatic scale on the piano recorded from an iPhone. The first image is the audio signal, the second image has vertical bars indicating the onset times.

- Implemented a pitch detector using FFT to find the prominent frequencies. However, since the highest amplitude frequency doesn’t necessarily correspond to the fundamental frequency, I implemented the two way mismatch algorithm found here: https://pdfs.semanticscholar.org/c94b/56f21f32b3b7a9575ced317e3de9b2ad9081.pdf in order to better identify the true fundamental frequency. So far it sees varying success, so it will likely need some more work.

Example FFT for one of the notes

Example run of pitch detection. For each note, I’ve listed the possible notes it could be (using MIDI number) and at the end I’ve shown the selected notes (fundamental frequency)
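A rough pure-Python sketch of the derivative-of-Gaussian onset filter described above: the rectified signal is convolved with the derivative of a Gaussian, so the output is large where the amplitude rises sharply. The kernel width, sigma, and function names are assumptions, and the test signal is synthetic.

```python
import math


def derivative_of_gaussian(half_width, sigma):
    """Kernel g'(k) = -k / sigma^2 * exp(-k^2 / (2 sigma^2)) for k in [-h, h]."""
    return [-k / sigma ** 2 * math.exp(-k * k / (2 * sigma ** 2))
            for k in range(-half_width, half_width + 1)]


def detection_function(signal, half_width=8, sigma=3.0):
    """Convolve the rectified signal with the kernel (zero outside the signal)."""
    kernel = derivative_of_gaussian(half_width, sigma)
    rectified = [abs(x) for x in signal]
    out = []
    for i in range(len(rectified)):
        total = 0.0
        for offset, weight in zip(range(-half_width, half_width + 1), kernel):
            j = i - offset
            if 0 <= j < len(rectified):
                total += weight * rectified[j]
        out.append(total)
    return out


# Synthetic note: 30 samples of silence, then a decaying oscillation
signal = [0.0] * 30 + [((-1) ** t) * 0.97 ** t for t in range(60)]
detection = detection_function(signal)
onset = detection.index(max(detection))
print(onset)  # lands next to the attack at sample 30
```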

Status Update 3/9/19

Team:

- The team worked together to finish up the official Design Document

- Lofty goals have been set for the next two weeks as each team member is planning on dedicating a significant amount of Spring Break time to project

Ally:

- Worked a lot on cloud deployment; switched back to a Django app because I know this format better, and successfully connected the repo. Website found at https://teamnoteable.herokuapp.com/.

- Designed home page layout. Rough sketches below:

Nav-bar implemented on site using Bootstrap. Link to ‘Login’ page redirects to login page, from which a user can login, select the newly available option to register, or return to the home page (currently implemented up to this point). After logging in or registering, practice and profile pages will become available. Rest of tabs on home page (‘Our Product’, ‘Our Team’, ‘FAQ’) will link to different sections of main page.

Sasi:

- Completed the design document

- Continued work on the pitch detection

- Implemented a fast Fourier transform with the signal of the violin

- For next week, I need to work on doing the multiplication to find the period.

Tianbo:

- Finished up the design document that was due on Monday

- Worked on onset detection implementation. Here’s an example of what is working so far:

The original signal appears as such (it’s fairly clear where the notes begin and end)

Then I rectified the signal to lie above the x-axis

Using a moving average filter I smoothed out the signal

At this point, I tried peak picking in order to find the note onsets

However, we see that we find way too many peaks. Taking a closer look at the signal, we see that it is actually very noisy due to the sinusoidal nature of sound. This throws off the peak picking algorithm which tries to find local maxima.

After this, I attempted some filtering techniques (various low pass filters) to remove the noise, but I haven’t been able to fully smooth out the signal yet.

I followed some of the work in the slides detailed here: http://www-labs.iro.umontreal.ca/~pift6080/H08/documents/presentations/jason_bello.pdf

I hope to have onset detection working and tested by the end of this week.
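The steps above (rectify, smooth with a moving average, pick local maxima) can be sketched as follows. The function names and window sizes are assumptions, and the two-note signal is synthetic.

```python
import math


def rectify(signal):
    """Flip the negative half of the waveform above the x-axis."""
    return [abs(x) for x in signal]


def moving_average(values, window):
    """Mean of the window centred on each sample (truncated at the edges)."""
    half = window // 2
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def local_maxima(values):
    """Indices that are higher than the left neighbour and not lower than the right."""
    return [i for i in range(1, len(values) - 1)
            if values[i - 1] < values[i] >= values[i + 1]]


# Two synthetic decaying notes played back to back
note = [math.sin(2 * math.pi * t / 8) * 0.95 ** t for t in range(40)]
envelope = rectify(note + note)
for window in (1, 5, 15):
    smoothed = moving_average(envelope, window)
    print(window, len(local_maxima(smoothed)))
```

Printing the peak count for a few window sizes makes it easy to see how much of the oscillation survives each amount of smoothing.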

Status Update 3/2/19

Team:

- All team members ended up spending most of their time working on the Design Report. This will hopefully pay off by reducing the time needed for the Final Report, since all design decisions are now very concrete.

- Updated schedule with much greater detail

Ally:

- Finished design presentation and spent most of time working on design report

- Successfully deployed an example application so learned the steps required to do so through Heroku

- The next step I will approach is connecting the website to AWS through their API Gateway. This is important so that data can be received from the back-end functions as soon as they are complete

Sasi:

- At the beginning of this week, I worked on our design presentation as well as practicing presenting.

- Spent time working out the requirements for our design report.

- Continued work and research of pitch detection for a violin clip.

- Since I didn’t reach my goal from last week, for next week, I am still trying to successfully detect a single violin note.

Tianbo:

- Worked on the Design Presentation as well as Design Report

- Tried the zero crossings method on more violin pitch clips. It appears that this method performs very poorly for violin, so I’ll be looking to test other algorithms to see which ones work well for violin.

- Looked into more onset detection algorithms to decide which to implement.

- I’m a little bit behind on the audio analysis. I plan to make up some work over Spring Break.

Status Update 2/23/19

Team:

- Using Python for the note detection system. We made this design choice because integration with the whole project will be easier than using MATLAB. The numpy library works just as well as MATLAB.

Ally:

- I set up our Team’s WordPress website with all the requirements and added some design.

- I worked through a Heroku tutorial on setting up and deploying an app. This required downloading many tools and taking notes on features I need to deploy my own application.

- I spent most of my time working on deploying my html through my Heroku app. The app currently builds successfully, but is still not rendering my files properly. I ran into many difficulties figuring out which files are necessary and continue to make changes according to the error logs, so I hope to complete this very soon. I changed the app to build with NodeJS (link to website).

- My goals carry over from last week, successfully setting up the Heroku app first so I can then focus on actual front-end display!

Sasi:

- I spent the majority of my time trying to classify a single note. For the beginning of the week, I used MATLAB and an auto correlation algorithm for this. Although I was able to get a result, it was not accurate due to the intricacy of the excerpt.

- I spent the latter half of the week using Python to develop a note detection script. I spent a lot of time researching and reading existing code. Then, I spent time learning about Fourier transforms and auto-correlation algorithms in general.

- I also worked on our design presentation slides.

- My goals for next week are to have a working Python script for a single violin note, as the classification might be different from working with a piano note (which is what I have been doing so far).
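For reference, a bare-bones version of the autocorrelation approach mentioned above: the lag at which the signal best matches a shifted copy of itself is taken as the period. This is a generic sketch, not the MATLAB or Python code from this week; the function name, frequency limits, and synthetic tone are assumptions.

```python
import math


def autocorrelation_pitch(samples, sample_rate, min_freq=100.0, max_freq=1000.0):
    """Estimate the fundamental frequency from the best-matching lag."""
    min_lag = int(sample_rate / max_freq)
    max_lag = int(sample_rate / min_freq)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the signal and itself shifted by `lag` samples
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# Synthetic tone: 200 Hz fundamental plus a weaker octave harmonic
rate = 8000
tone = [math.sin(2 * math.pi * 200 * t / rate)
        + 0.5 * math.sin(2 * math.pi * 400 * t / rate) for t in range(400)]
print(autocorrelation_pitch(tone, rate))  # 200.0
```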

Tianbo:

- I worked on trying to classify single notes by finding their fundamental frequency. Initially, I tried to recreate an auto correlation algorithm, but I ran into some difficulties with the implementation. However, using the simple method of zero crossings described here: https://gist.github.com/endolith/255291#file_parabolic.py I was able to parse out the correct notes for a sample piano clip. Unfortunately, I wasn’t able to get similar results with a violin clip.

- I also started looking at a few papers on note onset detection (programmatically finding where a note begins). Unfortunately, the specifics of these papers are hard to parse, but I did gain a general understanding of how onset detection works.

- http://www.iro.umontreal.ca/~pift6080/H09/documents/papers/bello_onset_tutorial.pdf

- http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.60.9607&rep=rep1&type=pdf

- I also tested Audiveris on a few pages of violin sheet music. It was able to almost perfectly transcribe the notes for the last page of Bach’s 6th sonata which should be more than good enough for our purposes. I was also able to verify that the package could be run from the command line, which makes it possible to call via other scripts. Here are some sample images

- Next week I intend to continue looking into parsing techniques for music notes and onset detection.
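A simplified sketch of the zero crossings idea from the first item above: find where the waveform crosses from negative to positive, interpolate the crossing positions, and turn the average spacing into a frequency. It is written from scratch for illustration and is not the code from the linked gist; the function name and test tone are assumptions.

```python
import math


def zero_crossing_frequency(samples, sample_rate):
    """Estimate frequency from the spacing of rising zero crossings."""
    crossings = []
    for i in range(len(samples) - 1):
        a, b = samples[i], samples[i + 1]
        if a < 0 <= b:
            # Linear interpolation of where the line from a to b hits zero
            crossings.append(i + a / (a - b))
    if len(crossings) < 2:
        return None  # not enough crossings to measure a period
    mean_period = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    return sample_rate / mean_period


# Synthetic 250 Hz sine with a phase offset
rate = 8000
sine = [math.sin(2 * math.pi * 250 * t / rate + 0.3) for t in range(400)]
print(round(zero_crossing_frequency(sine, rate), 3))  # 250.0
```

This works on a clean tone because there is exactly one rising crossing per period; a signal with strong harmonics can cross zero more often, which may be part of why the violin clip gave worse results.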

Status Update 2/16/19

Team:

- Ongoing challenges include audio pitch and rhythm identification. Our project doesn’t provide much use without an accurate transcriber, so we are discussing with professors to home in on the best technique (spectrogram analysis versus pitch correlation). Other difficulties lie in our stretch goals, including polyphonic transcription or complexities in writing our own front-end sheet music visuals. Ally is currently taking a web development course so has resources to aid in these issues.

- Originally, we were planning on using a MIDI file format to compare the user’s audio with the parsed sheet music. However, this requires creating our own MIDI files and then figuring out how to compare them. Instead, we will be using our own internal representation to best compare notes. This saves us from having to understand the MIDI file format and eliminates an unnecessary step.

- Since developing for iOS is restricted to Macs, we have moved away from creating an iOS app for our user interface. Instead, we will be creating a web application. This will allow multi-platform support and provides the ability for other team members to work on the UI if necessary.

- Updated Schedule

Ally:

- I attempted to run XCode on my PC by installing a VM but decided a web application would better suit our project. I set up a GitHub repository and created a domain on Heroku to begin development.

- I decided to use an open source software called VexFlow that I will use to display sheet music and notes on the website. I was able to begin rendering simple music staffs and notes when running my HTML set up locally.

- I have updated my schedule for the rest of the project since my goals were previously designed to create an iOS application.

- My goals for next week include getting comfortable using Heroku, hand-drawing a design layout for the website and necessary features, rendering a simple piece of music through the new domain, and displaying two inputs at once, making one a different color to simulate user input transcription.

Sasi:

- I mainly spent my time converting an audio file to a spectrogram and then reading papers to start to learn how to read the spectrogram.

- I used Matlab to do the spectrogram construction. I learned how to create them using an online example. Then, I used a short monophonic clip that we recorded on a keyboard.

- I had a difficult time understanding the first orientation of the spectrogram so I modified the graph to have time as an axis. Then, it was easier to match the frequency with the notes in the excerpt.

- I also spent time reading some of the papers that Tianbo has linked below.

- The deliverable I have planned for next week is a preliminary script that will distinguish a single note from a spectrogram.

Tianbo:

- I researched different algorithms for monophonic pitch detection. Two techniques for pitch detection are using auto correlation in the time domain or peak detection in the frequency domain. Some papers I looked at

- I looked at the Audiveris open-source package for state-of-the-art optical music recognition. Their code runs on Java and can be found here: https://github.com/audiveris. I set up the package locally and tried putting a sample piece of sheet music through. I found relatively good results as shown below

- By next week, we hope to have some code which can parse single music notes into pitches.