This week was rough because of an illness I caught over the weekend. I was sick all week and found out on Wednesday that I had the flu. Combined with traveling next weekend, this meant I did not make progress.

Team Report #3

Team C4 – Cooper Weaver, Nakul Garg, Scott Hamal

Status Report 3

Progress

- Data preprocessing for classification algorithm

- Camera and RPi setup

- Endpoints named, defined, and specified

- Backend class architecture defined

Significant Risks

- Rotation preprocessing – Potentially very difficult. We have a plan in place, but we have already identified specific data points that will break our method and require additional rotation-correction processing.

- Incorrect classification results – If a workout is incorrectly classified, the wrong backend WorkoutAnalyzer will be used; this breaks form correction, rep counting, and set counting for the rest of that workout.

Design Changes & Decisions

- Each workout will have its own WorkoutAnalyzer class, each with separate subclasses for form correction, rep counting, and set counting. Though these algorithms will be similar in many respects, they will have certain stark differences and may require drastically different data preprocessing.

- HTTP endpoints named and HTTP protocol for endpoint communication defined: here

Cooper Status Report #3

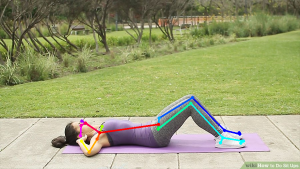

This week we stepped back a bit to refocus our overall structure (spurred by the design review). I reconsidered the I/O of the classification algorithm to make sure it fits our refined design, and I focused on designing the data preprocessing for the classification algorithm. Data preprocessing will be an ongoing task for me, as I expect it to be by far the most complicated part of the classification algorithm.

The design as it stands now is to zero (center) the hip point and translate all other points relative to it, which eliminates horizontal and vertical movement of both the camera and the user. This is a relatively simple process and eliminates the most common differences between images. Next, the points will be flipped (or not) so that the user's head is always to the left for horizontal postures and the user is always facing left for standing postures.

Finally, the trickiest part of the data preprocessing is rotation, which keeps a skewed camera or a non-level floor from breaking our algorithm. For rotation I decided to consider only a range of +/- 15 degrees, since anything larger would be frankly shocking as well as extremely difficult to recover from. To correct rotation, the plan is to draw a “best-fit” line through the foot, hip, and head (or shoulder) points and rotate all points to bring that line to the nearest horizontal or vertical (within +/- 15 degrees). If there is no horizontal or vertical within that range, those data points probably should not be used and the camera likely needs to be adjusted. This solution is not foolproof: specifically, there is a clear problem in creating a best-fit line during the “up” portion of a sit-up. One solution I am considering is to throw out the head/shoulder point if it is too far off the foot-hip line and simply use the foot-hip line to rotate in that case.
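The three preprocessing steps (center on the hip, flip, then level the body line) can be sketched as below. This is a minimal illustration under stated assumptions, not the implementation: poses are hypothetical `{name: (x, y)}` dicts using only `head`, `hip`, and `foot`; the "best-fit" line is computed as a total-least-squares (principal-axis) fit so that vertical standing bodies work; and the `HEAD_OFF_LINE_RATIO` threshold for dropping the head in a sit-up is a made-up value. The flip uses the foot position to decide which side the head is on, and the standing "face left" case is omitted because it needs extra joints.

```python
import math
from typing import Optional

# Hypothetical pose format: joint name -> (x, y). Only "head", "hip", "foot" are used.
Point = tuple[float, float]
Pose = dict[str, Point]

MAX_TILT_DEG = 15.0        # from the report: only correct tilts of +/- 15 degrees
HEAD_OFF_LINE_RATIO = 0.5  # hypothetical: ignore head if farther than this * |foot-hip|


def center_on_hip(pose: Pose) -> Pose:
    """Translate all points so the hip sits at the origin."""
    hx, hy = pose["hip"]
    return {name: (x - hx, y - hy) for name, (x, y) in pose.items()}


def flip_head_left(pose: Pose) -> Pose:
    """Mirror x when a horizontal body has its feet on the left (head on the right).

    The foot is used because it stays on the body axis even when the head leaves
    it (e.g. during a sit-up). The standing "face left" case is not handled here.
    """
    fx, fy = pose["foot"]
    if abs(fx) > abs(fy) and fx < 0:
        return {name: (-x, y) for name, (x, y) in pose.items()}
    return pose


def principal_angle(pts: list[Point]) -> float:
    """Angle in degrees, within [-90, 90], of the total-least-squares line through pts."""
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    syy = sum((y - my) ** 2 for _, y in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    return math.degrees(0.5 * math.atan2(2 * sxy, sxx - syy))


def rotate(pose: Pose, deg: float) -> Pose:
    """Rotate every point counter-clockwise about the origin by deg degrees."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return {name: (x * c - y * s, x * s + y * c) for name, (x, y) in pose.items()}


def level(pose: Pose) -> Optional[Pose]:
    """Snap a hip-centered pose's body line to the nearest horizontal or vertical.

    Returns None when the line is more than MAX_TILT_DEG from both axes,
    meaning the frame should be discarded and the camera adjusted.
    """
    foot, hip, head = pose["foot"], pose["hip"], pose["head"]
    line_pts = [foot, hip, head]
    base = math.dist(foot, hip)
    if base > 0:
        # Perpendicular distance from the head to the foot-hip line.
        off_line = abs((hip[0] - foot[0]) * (head[1] - foot[1])
                       - (hip[1] - foot[1]) * (head[0] - foot[0])) / base
        if off_line > HEAD_OFF_LINE_RATIO * base:  # e.g. the "up" phase of a sit-up
            line_pts = [foot, hip]
    angle = principal_angle(line_pts)
    tilt = angle - 90.0 * round(angle / 90.0)  # offset from nearest horizontal/vertical
    if abs(tilt) > MAX_TILT_DEG:
        return None
    return rotate(pose, -tilt)


def preprocess(pose: Pose) -> Optional[Pose]:
    """Center, then flip, then level, in the order the report describes."""
    return level(flip_head_left(center_on_hip(pose)))
```

Frames for which `preprocess` returns `None` would simply be skipped rather than passed to the classifier.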

This past week we also received our hardware, so I began working on hooking the camera up to the Pi and taking a few pictures before I got the flu, which shut me down for the rest of the week.

Nakul Status Report #3

Last week we dove into the high-level architecture of our project, and this week I further formalized those high-level interactions and started a class architecture for our core backend server. The high-level work involved creating a list of endpoints each sub-system needs to provide. Link Here: Endpoints. These are based on our previously posted flow diagram as well as this baseline sketch of how progressing through a workout may go:

This is not final yet, but provides a good starting procedure which we can build off. The Analysis flow should be defined in the next week. The work done in this endpoint document will greatly help define the responsibilities in each sub-system and empower us to delegate work clearly and efficiently.

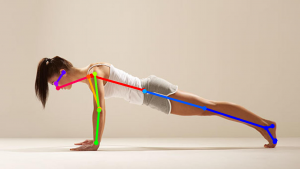

The other part of my work this week was starting to figure out our core backend class architecture. Link Here: Backend Architecture. This is a first attempt at identifying the data structures we need and designing a clean, modular class structure that scales easily. For the class structure, I came up with the idea of a WorkoutAnalyzer, created based on a specified Exercise, that contains a FormCorrector, a RepCounter, and a SetCounter. These will be abstract classes, each with an implementation for each Exercise (e.g. SquatFormCorrector, PlankFormCorrector, and SitupFormCorrector). More detail is in the link.
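A minimal sketch of that composition, assuming Python's `abc` module: `WorkoutAnalyzer`, `Exercise`, `FormCorrector`, `RepCounter`, `SetCounter`, and `SquatFormCorrector` come from the report, while the method names (`check`, `update`, `process`), the `SquatRepCounter`/`SquatSetCounter` names, the `is_down` frame key, and the `_COMPONENTS` registry are hypothetical placeholders. Only the squat is wired up; plank and sit-up classes would be registered the same way.

```python
from abc import ABC, abstractmethod
from enum import Enum


class Exercise(Enum):
    SQUAT = "squat"
    PLANK = "plank"
    SITUP = "situp"


class FormCorrector(ABC):
    @abstractmethod
    def check(self, frame: dict) -> list[str]:
        """Return form corrections (possibly none) for one frame of pose data."""


class RepCounter(ABC):
    @abstractmethod
    def update(self, frame: dict) -> int:
        """Consume one frame and return the running rep count."""


class SetCounter(ABC):
    @abstractmethod
    def update(self, reps: int) -> int:
        """Consume the latest rep count and return the running set count."""


class SquatFormCorrector(FormCorrector):
    def check(self, frame: dict) -> list[str]:
        return []  # placeholder: real squat form rules would go here


class SquatRepCounter(RepCounter):
    """Counts a rep each time the hypothetical 'is_down' flag goes True -> False."""

    def __init__(self) -> None:
        self.reps = 0
        self._was_down = False

    def update(self, frame: dict) -> int:
        is_down = bool(frame.get("is_down"))
        if self._was_down and not is_down:
            self.reps += 1
        self._was_down = is_down
        return self.reps


class SquatSetCounter(SetCounter):
    def update(self, reps: int) -> int:
        return 1 if reps > 0 else 0  # placeholder: single-set logic


# Hypothetical registry; PLANK and SITUP entries would follow the same pattern.
_COMPONENTS = {
    Exercise.SQUAT: (SquatFormCorrector, SquatRepCounter, SquatSetCounter),
}


class WorkoutAnalyzer:
    """Bundles the exercise-specific FormCorrector, RepCounter, and SetCounter."""

    def __init__(self, form: FormCorrector, reps: RepCounter, sets: SetCounter) -> None:
        self.form, self.reps, self.sets = form, reps, sets

    @classmethod
    def for_exercise(cls, exercise: Exercise) -> "WorkoutAnalyzer":
        form_cls, rep_cls, set_cls = _COMPONENTS[exercise]
        return cls(form_cls(), rep_cls(), set_cls())

    def process(self, frame: dict) -> dict:
        reps = self.reps.update(frame)
        return {"corrections": self.form.check(frame), "reps": reps,
                "sets": self.sets.update(reps)}
```

Because the backend only talks to the abstract interfaces, adding an exercise means adding three classes and one registry entry, without touching `WorkoutAnalyzer`.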

This portion of the design is the most difficult and the most interesting. Creating a clear, clean, and efficient process in these two weeks is crucial to the success of the project. Once this stage is done, it becomes a matter of implementation and then iterating on the design based on new information. I believe I am still on schedule, and I hope to use this upcoming week to produce a first full draft of the class architecture as well as a comprehensive flow chart of starting and finishing a workout from the backend's perspective.

Nakul Status Report #2

This week was a great iteration on last week’s design-focused work. Previously, the packages and components involved were starting to come together; this week they were decided. Furthermore, we ironed out in detail how these players speak to each other, in terms of both protocol and general workflow. These were big steps toward the clarity needed to build a performant and scalable system.

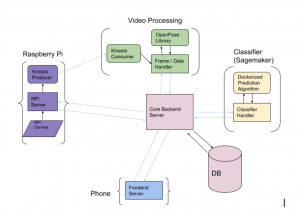

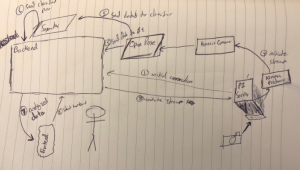

A major focus this week was communication protocols. In our current design (see diagram), we plan to use HTTP requests and responses for most of the messaging between subsystems. This well-established, flexible protocol seems ideal for keeping our communication straightforward. Arrows in blue are those we believe will be HTTP communications, and arrows in black are those we believe can be handled through internal calls.
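To make the request/response pattern concrete, here is a minimal sketch using only Python's standard library (`http.server` and `urllib.request`). It is an illustration, not our actual server: the `/pi/register` path, the JSON fields, and the `post_json` helper are hypothetical, standing in for one of the blue HTTP arrows (the Pi calling the Core Backend Server).

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class BackendHandler(BaseHTTPRequestHandler):
    """Toy Core Backend Server exposing one hypothetical JSON endpoint."""

    def do_POST(self):
        if self.path != "/pi/register":  # hypothetical endpoint name
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        reply = json.dumps({"status": "registered",
                            "device_id": body.get("device_id")}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)

    def log_message(self, format, *args):  # keep the demo output quiet
        pass


def post_json(url: str, payload: dict) -> dict:
    """Client side: how the Pi (or any subsystem) might call an endpoint."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), BackendHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    print(post_json(f"http://127.0.0.1:{port}/pi/register", {"device_id": "pi-01"}))
    server.shutdown()
    server.server_close()
```

A production backend would more likely use a web framework and add authentication, but the message shape (JSON body in, JSON body out) stays the same.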

Throughout this detailed design process we also got an idea of which servers need to be live and what those servers must do. The Raspberry Pi server must be able to initiate a connection with the backend and stream content to Kinesis. The Frame / Data handler must be able to take a Kinesis video stream, run it through the OpenPose library, and ferry the resulting data to the Core Backend Server. The Core Backend Server has a host of responsibilities, including but not limited to: communicating with the frontend, managing a user session, prompting the classifier, and correcting form. The Classifier Server must be able to use the dockerized classification algorithm to classify OpenPose data and send the result to the backend.

For next week, I hope to dig into the duties of the Core Backend Server. This means rigorously defining everything we expect the backend to do. The next step is then to create high-level documentation defining all the endpoints for each subsystem. This list will be incredibly useful for work delegation and scheduling.

Scott Status Report #2

This week I worked on installing OpenPose on an Amazon EC2 instance. Along the way I found that I needed a GPU-equipped p2 instance to get the tools OpenPose requires. I did not initially have access to this instance type, so I requested it; the request has been approved, but I have not finished the setup yet.

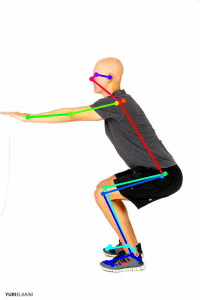

I also ran the library on three basic workout images, one for each exercise, and collected its JSON output. I then analyzed the data to better understand how we will work with it and how we will communicate it across our cloud services. This is important to get right because this data is essential to our classifier, rep/set counting algorithm, and form correction algorithm.

Next, I will install the OpenPose library on a p2 instance and begin testing its performance to decide whether we need a more powerful server. Then I will integrate AWS Kinesis to get frame data onto the AWS server that is running OpenPose.
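As a sketch of what working with that output looks like, the parser below assumes OpenPose was run with `--write_json` using its default BODY_25 model, where each frame file holds a `people` list and each person's `pose_keypoints_2d` is a flat list of `x, y, confidence` triples (undetected joints are written as zeros). The `min_conf` cutoff is a hypothetical value, and older OpenPose versions may use different key names or joint orders.

```python
import json

# BODY_25 joint order (OpenPose's default body model), assumed here.
BODY_25 = [
    "Nose", "Neck", "RShoulder", "RElbow", "RWrist", "LShoulder", "LElbow",
    "LWrist", "MidHip", "RHip", "RKnee", "RAnkle", "LHip", "LKnee", "LAnkle",
    "REye", "LEye", "REar", "LEar", "LBigToe", "LSmallToe", "LHeel",
    "RBigToe", "RSmallToe", "RHeel",
]


def parse_openpose_json(text: str, min_conf: float = 0.1) -> list[dict[str, tuple[float, float]]]:
    """Turn one OpenPose JSON frame into {joint_name: (x, y)} dicts, one per person.

    Joints whose confidence is below min_conf (a hypothetical cutoff) are dropped,
    which also removes the all-zero entries OpenPose writes for undetected joints.
    """
    people = []
    for person in json.loads(text).get("people", []):
        flat = person.get("pose_keypoints_2d", [])
        joints = {}
        for i in range(min(len(BODY_25), len(flat) // 3)):
            x, y, conf = flat[3 * i: 3 * i + 3]
            if conf >= min_conf:
                joints[BODY_25[i]] = (x, y)
        people.append(joints)
    return people
```

Named joints make the downstream code (classifier features, rep counting, form checks) independent of OpenPose's index positions.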

I will work with Cooper to standardize the output of the hardware camera so that we can begin to collect training data for our classifier. I will work with Nakul to begin designing our backend endpoints.

I think we are still on schedule this week, as long as I do not run into further issues with OpenPose on the p2 instance, since that is what caused a delay this week.

Cooper Status Report #2 (2/24)

I didn’t get much work done this week as I had a lot of work in other courses and we were waiting for our hardware to arrive to start collating image and OpenPose data for the classifier.

In the interim I read the AWS specs as well as a few articles on dockerizing the prediction algorithm, covering the expected form of the input data and the form of the output of a fully trained, dockerized prediction model. This let me spec out the data preprocessing the classifier will need and clarify the communication protocols for the classifier's input and output.

In the coming week I will finish the KNN workout classification algorithm and collect as many data points as I can, hopefully enough to train and test a first iteration by next weekend.

Cooper Status Report #1 (2/16)

This week we worked on the overall design of our project, getting into the specifics of how its different portions will fit together and communicate with each other. My primary task this week was deciding on and ordering the hardware we need for a successful project. Specifically, we had to decide on a camera and on a conduit to send camera data to the cloud. We wanted our conduit to have wifi connectivity so that it could communicate with the cloud without a middleman or an ethernet cord. We also wanted it to be Bluetooth capable, giving our design the flexibility to have the conduit communicate directly with the user's phone if we decided we wanted to (potentially for offline storage/usage when disconnected from wifi). With this in mind, my team and I chose the Raspberry Pi 3 B+, as it had all of the features we wanted and our team has a good amount of experience and comfort working with Raspberry Pis.

Next I had to decide on a camera. First I had to decide whether we needed a depth camera; I looked at the Kinect and the Intel RealSense D415 as depth options. Ultimately I determined that a depth camera was not necessary, as the video processing done by OpenPose is not helped by depth cameras. Looking through other cameras, I narrowed it down to the Logitech C290 and the Raspberry Pi Camera V2. Their specs were relatively similar and it was unclear that either would give us any performance increase, so I chose the Raspberry Pi camera, as it was slightly cheaper and there would be more documentation available if we ran into any problems.

For the coming week we will (hopefully) be receiving our Pi and camera. I plan to start work on our classification algorithm: using the output data from OpenPose, I would like to use a k-nearest neighbors (KNN) algorithm to identify workouts. In the first week I hope to get a KNN algorithm running on an existing dataset that likely will not be particularly useful for us. This should give me a better sense of how KNN behaves and confirm it is the one we should be using, while letting me quickly switch to another dataset once we have accumulated enough data points. At the same time, I will use the camera, and possibly publicly available workout videos, to begin compiling an extensive dataset for our particular problem. Hopefully by the following week I can begin training the algorithm on our new dataset, or move on to a different algorithm if I determine that KNN is not appropriate for this problem.
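For reference, plain KNN classification fits in a few lines: find the `k` training vectors closest to the query and take a majority vote of their labels. The sketch below assumes Euclidean distance and that each sample has already been turned into a flat numeric feature vector (for example, preprocessed keypoint coordinates, which is an assumption, not a settled design). The function name and toy data are illustrative only.

```python
import math
from collections import Counter
from typing import Sequence


def knn_classify(train_x: Sequence[Sequence[float]], train_y: Sequence[str],
                 query: Sequence[float], k: int = 3) -> str:
    """Label query by majority vote among its k nearest training vectors (Euclidean)."""
    if not 0 < k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training samples")
    # Sort (vector, label) pairs by distance to the query and keep the k closest.
    nearest = sorted(zip(train_x, train_y), key=lambda xy: math.dist(xy[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # Ties go to the label encountered first, i.e. the one with the closest neighbour.
    return votes.most_common(1)[0][0]
```

Usage on toy two-dimensional features:

```python
train_x = [[0.0, 0.0], [0.5, 0.2], [10.0, 10.0], [9.5, 10.2]]
train_y = ["squat", "squat", "plank", "plank"]
knn_classify(train_x, train_y, [1.0, 1.0], k=3)  # "squat"
```

Because KNN has no training step beyond storing the data, switching from a placeholder dataset to our own only means swapping `train_x` and `train_y`.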

Team Report #1 (2/16)

Significant Risks

- OpenPose and network communication must be fast enough to provide real-time updates and form correction.

  - Security is of minimal concern here, which should help optimize our communication.

  - Wifi-enabled conduit eliminates middleman

  - OpenPose on AWS machines should be extremely fast

  - Potential Workaround: Provide feedback at the end of the specific workout

    - Gives us a significant buffer (~30 seconds to a minute) before the user needs the feedback

- Cloud Security

  - Our connections between the cloud and devices need to be secured. Our implementation will take security measures to ensure that our cloud endpoints are only used for their intended purposes.

Design Changes & Decisions

- Raspberry Pi 3 B+ as Conduit

- Raspberry Pi Camera V2 for Camera

- Classification algorithm will be run on OpenPose data, not directly on video/image files

  - Pro: Should make the algorithm itself much simpler and potentially more accurate

  - Con: Need to compile a large dataset

    - Run OpenPose on pre-existing (online) workout videos to help compile more data than we could by creating our own.

- We are no longer using an RGB-D camera, only RGB

  - We believe that the added depth will not improve OpenPose’s performance and is not needed

- Classification will have to occur on images rather than videos in order to classify an exercise without missing the first rep

Nakul Status Report #1 (2/16)

Nakul Garg

Team C4 — EZ-Fit

Individual Status Report 1

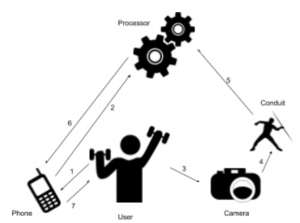

This week, the first with feedback from the presentations, the whole team focused heavily on design. I specifically looked into the high-level architecture of our system: what components we need, how they interact, and how they communicate. We had a rough idea of the involved components coming into this week, as can be seen in the left figure, yet as a team we wanted to take this high-level model and start deciding what will actually fill the role of each part. I looked into how to link the Raspberry Pi to AWS Kinesis, thus fulfilling the role of the conduit, and it turns out AWS provides a tutorial on how to do so. The Pi can act as a Kinesis Producer and the AWS server as the Kinesis Consumer, streaming our video straight into processing. Then I made a sketch of how the components can interact for an average use case. The Pi will need to initially link with the backend and establish its connection to AWS Kinesis. Then the user will initiate a workout through the frontend, which will prompt the backend to tell the Pi to start a stream. That stream can be sent through Kinesis to a server running OpenPose, and the resulting data can be sent both to AWS SageMaker, which we plan to use to run our classifier, and to the backend for user feedback. I spent this week ironing out these details of the flow to make sure that the technologies we want to use can work together and that the architecture is clear.

For the next week, I want to iterate on this initial architecture and keep drilling deeper until we have a very clear design. This involves explicitly stating the responsibilities of each component in the above diagram. From these internal requirements, I should hopefully be able to craft a class architecture for the backend revolving around the services it is expected to provide. I also hope to specify what communication protocol the Frontend specifically uses to talk to the Backend as well as how the Pi will initially connect. We seem to be on schedule.