Group Update

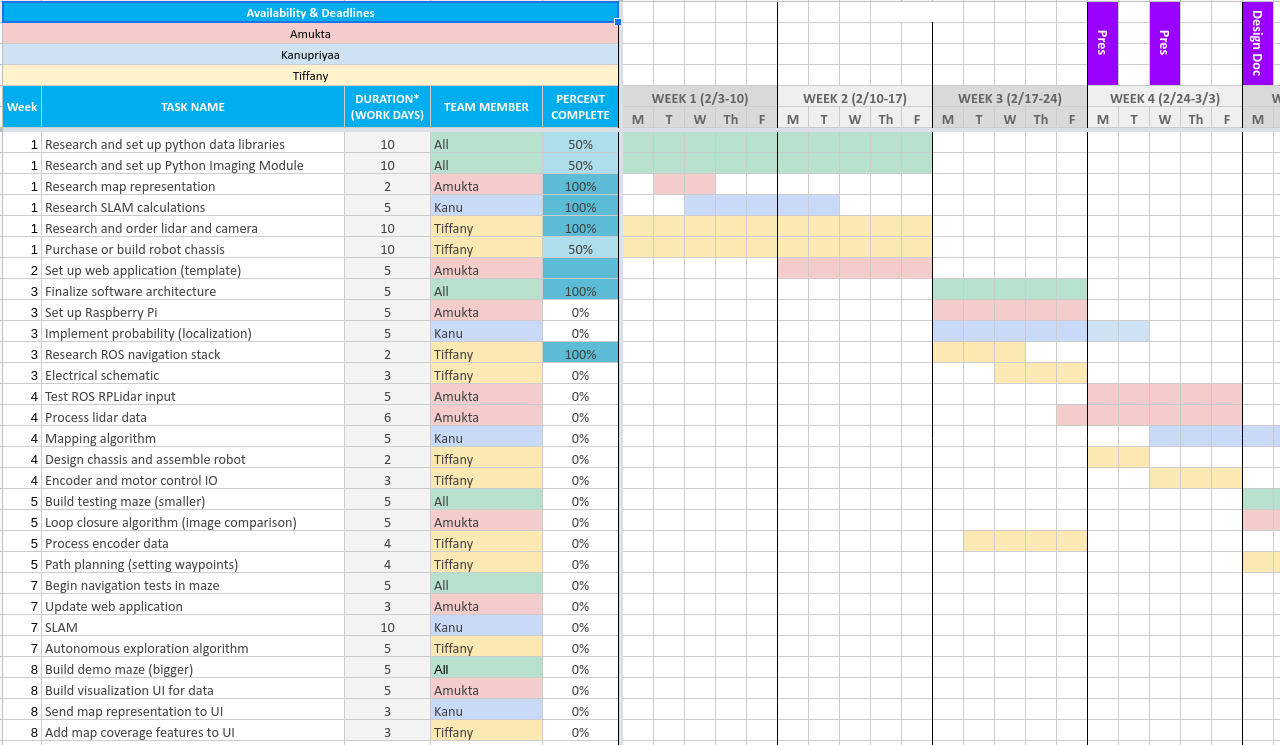

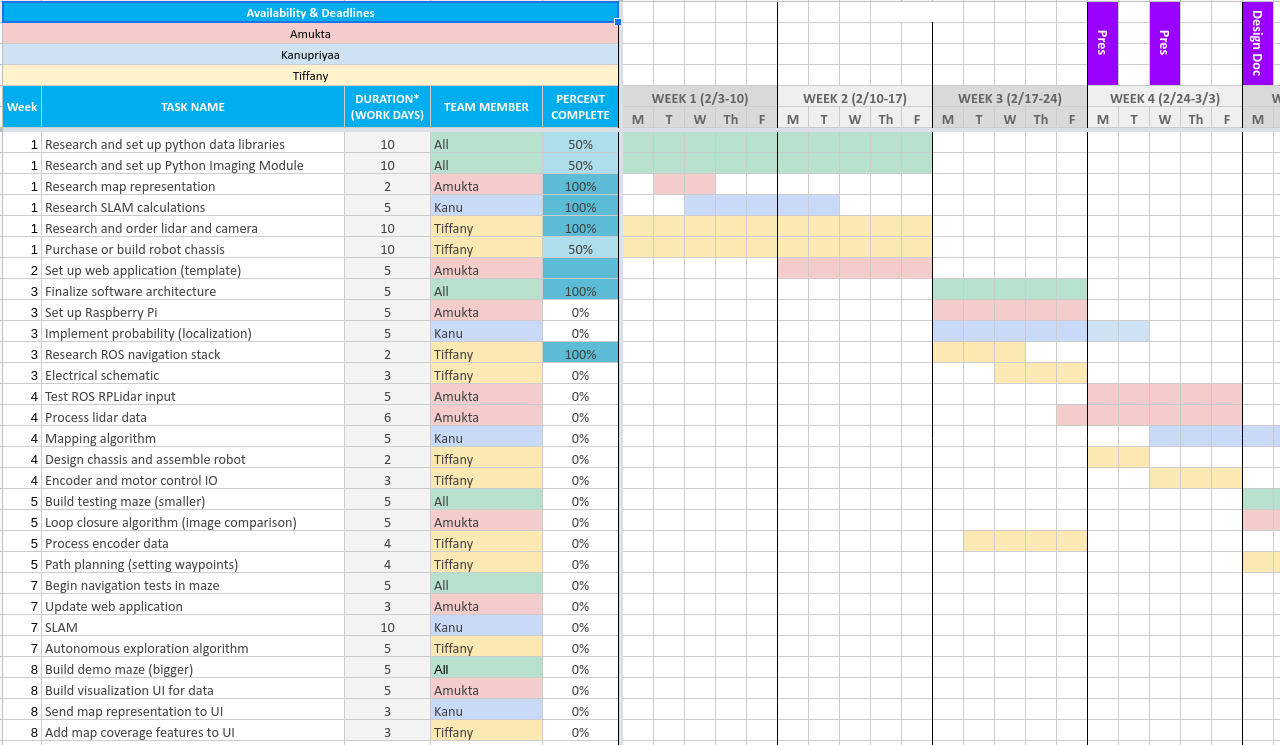

Overall, the team is on schedule.

Amukta’s Update

- I didn’t get the RPLidar until the end of the week, so in the meantime I started setting up the visualization web app (Python/Django). I also looked into the RPLidar SDK and the RPi, and set them both up. The RPLidar/ROS/Raspberry Pi combination has been done many times, so I’ve been following existing tutorials.

- We decided to use ROS, so I looked into how ROS nodes work.

- Next week, I’m going to begin testing the RPLidar with the RPi and work towards processing the lidar’s points into data structures that the other ROS nodes/modules can use. I will also begin building the maze.

- I’m still figuring out how the RPLidar points are going to be used by the other modules, but overall, I’m on schedule.
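As a rough illustration of the point-processing step mentioned above, here is a minimal sketch that turns raw polar lidar readings into Cartesian points that other modules could consume. The `ScanPoint` type, the `scan_to_cartesian` function, and the `max_range_m` default are hypothetical names and values chosen for this example; they are not taken from the RPLidar SDK or from our codebase.

```python
import math
from typing import List, NamedTuple, Tuple


class ScanPoint(NamedTuple):
    """One lidar return in polar form (hypothetical structure for this sketch)."""
    angle_deg: float   # bearing of the measurement, in degrees
    distance_m: float  # measured range, in metres (0 means no return)


def scan_to_cartesian(
    scan: List[ScanPoint], max_range_m: float = 6.0
) -> List[Tuple[float, float]]:
    """Convert polar returns to (x, y) points in the sensor frame.

    Readings with no return or beyond max_range_m (a placeholder value)
    are dropped.
    """
    points = []
    for p in scan:
        if p.distance_m <= 0.0 or p.distance_m > max_range_m:
            continue  # skip invalid or out-of-range readings
        theta = math.radians(p.angle_deg)
        points.append((p.distance_m * math.cos(theta),
                       p.distance_m * math.sin(theta)))
    return points


if __name__ == "__main__":
    demo = [ScanPoint(0.0, 1.0), ScanPoint(90.0, 2.0), ScanPoint(180.0, 0.0)]
    print(scan_to_cartesian(demo))
```

A flat list of (x, y) tuples is only one possible interface; the final structure will depend on what the localization and mapping nodes need.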

Kanupriyaa’s Update

ROS Learning:

I completed a ROS course to learn about the basic structure that will need to be set up and spent time building a software architecture diagram that shows the interactions between all the different interfaces for the design review.

Localization:

The localization EKF algorithm couldn’t be completed this week because of the switch to ROS. I have also decided to implement Nearest Neighbour ICP-EKF instead, which will be a bit harder to implement and will take all of next week.
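To give a sense of what the nearest-neighbour ICP part involves, here is a minimal sketch of a single 2D ICP iteration in plain Python. It covers only the scan-matching step (pairing each scan point with its nearest reference point and solving for the rigid transform in closed form); the EKF fusion is not shown. The function names `nearest_neighbour` and `icp_step` are hypothetical, and this is not the implementation that will go into the ROS node.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def nearest_neighbour(p: Point, reference: List[Point]) -> Point:
    """Return the reference point closest to p (brute-force search)."""
    return min(reference, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def icp_step(scan: List[Point], reference: List[Point]) -> Tuple[float, float, float]:
    """One ICP iteration: return (theta, tx, ty) aligning scan to reference.

    Each scan point is matched to its nearest reference point, then the
    least-squares rotation and translation for those pairs is computed.
    """
    if not scan or not reference:
        raise ValueError("scan and reference must be non-empty")
    matches = [nearest_neighbour(p, reference) for p in scan]
    n = len(scan)
    # Centroids of the scan points and of their matched reference points.
    cx_s = sum(p[0] for p in scan) / n
    cy_s = sum(p[1] for p in scan) / n
    cx_m = sum(q[0] for q in matches) / n
    cy_m = sum(q[1] for q in matches) / n
    # Accumulate dot and cross products of the centred pairs.
    s_dot = 0.0
    s_cross = 0.0
    for p, q in zip(scan, matches):
        px, py = p[0] - cx_s, p[1] - cy_s
        qx, qy = q[0] - cx_m, q[1] - cy_m
        s_dot += px * qx + py * qy
        s_cross += px * qy - py * qx
    theta = math.atan2(s_cross, s_dot)  # optimal 2D rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated scan centroid onto the match centroid.
    tx = cx_m - (c * cx_s - s * cy_s)
    ty = cy_m - (s * cx_s + c * cy_s)
    return theta, tx, ty
```

In a full ICP loop this step would be repeated, applying the transform to the scan each time, until the correction becomes negligible. The resulting pose estimate could then serve as the measurement in the EKF update.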

Next Week:

Next week, I will complete the implementation of ICP-EKF inside a ROS node and test that the node works.

Tiffany’s Update

ROS

This week, I focused on transitioning our design to the ROS environment after I realized that ROS provides easier interfacing with the RPLidar, as well as existing autonomous navigation architectures that we could use as guidelines. I researched ROS implementations of SLAM and drew a schematic of existing ROS nodes that can be used for a full SLAM navigation pipeline. Then, Kanupriyaa and I finalized the architecture for our customized implementation. I also set up a GitHub repository and a ROS workspace so that the team can work on code concurrently and port it to the Raspberry Pi easily.

Electrical

I researched methods for powering the 12V motor and the 5V components (everything else). One option is to use two separate power sources. Instead, I decided on a single portable phone charger, which outputs 5V, is highly compatible with the Raspberry Pi, and can easily be replaced or recharged. To power the two 12V motors, we can use a 5V to 12V step-up converter that minimizes power loss. Additionally, I finalized the electrical schematic (without the camera).
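One thing to check with this setup is how much current the step-up converter will draw from the 5V supply. A minimal sketch of that estimate follows, using conservation of power with a converter efficiency factor. The function name `boost_input_current` and all numbers in the example (0.5 A motor load, 85% efficiency) are placeholder assumptions, not measured values for our parts.

```python
def boost_input_current(v_in: float, v_out: float, i_out: float,
                        efficiency: float) -> float:
    """Estimate the input current of a step-up converter.

    Uses P_in = P_out / efficiency, so I_in = (V_out * I_out) / (efficiency * V_in).
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    if v_in <= 0.0:
        raise ValueError("v_in must be positive")
    return (v_out * i_out) / (efficiency * v_in)


if __name__ == "__main__":
    # Placeholder numbers: 0.5 A at 12 V, converter assumed 85% efficient.
    i_in = boost_input_current(v_in=5.0, v_out=12.0, i_out=0.5, efficiency=0.85)
    print(f"Estimated draw from the 5 V supply: {i_in:.2f} A")  # about 1.41 A
```

The estimate should be compared against the charger’s rated output current, along with the draw of the Raspberry Pi and the other 5V components.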

Next Week

Due to a delay in ordering parts last week, I was unable to start assembling the robot this week. Next week, I need to test the motors and encoders with an Arduino script first (since Amukta is working with the Raspberry Pi), design the chassis in CAD, and assemble the robot base. We will only have one motor and wheel for now, since we decided to test them before buying more. I will also write a preliminary ROS motor driver node and a reader for encoder data.
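For the encoder reader, the core calculation is converting tick counts into distance travelled by the wheel. A minimal sketch is below; the function name `ticks_to_distance`, the encoder resolution, and the wheel diameter are hypothetical placeholders until the actual parts are tested.

```python
import math


def ticks_to_distance(ticks: int, ticks_per_rev: int,
                      wheel_diameter_m: float) -> float:
    """Convert encoder ticks to linear wheel travel in metres.

    distance = revolutions * wheel circumference
    """
    if ticks_per_rev <= 0:
        raise ValueError("ticks_per_rev must be positive")
    revolutions = ticks / ticks_per_rev
    return revolutions * math.pi * wheel_diameter_m


if __name__ == "__main__":
    # Placeholder values: 360 ticks per revolution, 0.10 m wheel diameter.
    print(ticks_to_distance(ticks=180, ticks_per_rev=360, wheel_diameter_m=0.10))
```

Negative tick counts give negative distance, which is convenient if the encoder reports direction.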