What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Our biggest current risk is that the parts we ordered have not arrived. We have been stuck in this position for a while, so although the software has been making progress, the hardware work has not yet started. We checked in with our TA about our orders. To mitigate this, we have looked through many resources and planned an even more thorough hardware implementation. Ideally, once the parts arrive, we will be able to bring them up, connect them to the software, and have everything work as intended without many challenges in between. Another major risk is the physical structure of the mirror. To account for our inexperience in this area, we have ordered a larger quantity of wood sheets so that we have room for error.

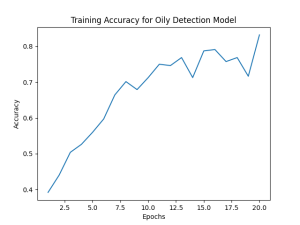

As for software, the biggest risk is still the performance of the remaining two models. The acne detection model is working well on the dataset. The largest risk most likely lies with the sunburn detection model. Gathering more data via scraping, as detailed in the report, is likely our strongest mitigation.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

While making concrete plans for our design report, we decided to add more buttons. This was necessary because our LCD will sit behind the mirror and will not have a touchable screen. The added buttons will help the user navigate our Magic Mirror App. Because buttons are cheap and easy to work with through GPIO, we don't expect much added challenge. If user study feedback indicates that the buttons are hard to use, we may consider installing the LCD in front of the mirror (touchable screen).
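As a rough illustration of why we expect buttons to be low-risk, here is a minimal sketch of button-driven navigation. It assumes three buttons (next, previous, select) and the `gpiozero` library on the Raspberry Pi. The screen names and GPIO pin numbers are hypothetical placeholders, not our final design.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MenuNavigator:
    """Tracks which screen is highlighted; pure logic, no hardware required."""
    screens: List[str]                      # hypothetical screen names
    index: int = 0
    selected: List[str] = field(default_factory=list)

    def next(self) -> str:
        # Wrap around to the first screen after the last one.
        self.index = (self.index + 1) % len(self.screens)
        return self.screens[self.index]

    def previous(self) -> str:
        # Wrap around to the last screen before the first one.
        self.index = (self.index - 1) % len(self.screens)
        return self.screens[self.index]

    def select(self) -> str:
        # Record and return the currently highlighted screen.
        choice = self.screens[self.index]
        self.selected.append(choice)
        return choice


def attach_buttons(nav: MenuNavigator, pins: Dict[str, int]) -> dict:
    """Bind physical buttons to navigator methods (only meaningful on the Pi).

    `pins` maps a method name ("next", "previous", "select") to a BCM pin
    number; the pin numbers used below are assumptions for illustration.
    """
    from gpiozero import Button  # imported lazily so the logic runs off-device

    buttons = {}
    for action, pin in pins.items():
        button = Button(pin, bounce_time=0.05)   # debounce noisy presses
        button.when_pressed = getattr(nav, action)
        buttons[action] = button                 # keep references alive
    return buttons


# Example wiring on the device (hypothetical pins):
# nav = MenuNavigator(["home", "acne", "sunburn"])
# buttons = attach_buttons(nav, {"next": 17, "previous": 27, "select": 22})
```

Keeping the navigation logic separate from the GPIO binding means it can be exercised on a laptop before the hardware arrives.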

Provide an updated schedule if changes have occurred.

We changed our schedule on the implementation and testing side – our orders are coming in a bit late, and we realized that we need more time to integrate all the software/hardware components. We decided to shorten the user study by a week (we think this is reasonable since getting approved by the IRB will also take time).

link to our schedule

Part A: written by Isaiah

Our project is designed around analyzing skin and suggesting products to help improve skin health. Several components of the project require sensitivity to global considerations for the resulting product to be truly effective and accessible for a wide range of people. Beyond just skin tone, environmental conditions such as a region's average temperature and humidity also vary. As an extreme case, the level of skin moisture that's common and healthy in tropical regions might not be the same in mountainous or subarctic climates. Typical tips and tricks for identifying skin conditions that are popular in one climate or for one group of people might not be reliable for another. Our product offers an algorithmic approach to analyzing skin that is trained and tested on a large variety of skin types, allowing for accurate analysis that trends towards global invariance. Furthermore, because we detail generic products and product combinations, users can still make use of the recommendations in regions of the world where specific brands might not be available.

Part B: written by Corin

For any technology that requires personal data collection, many cultures, including American culture, take privacy and trust very seriously. Privacy is often tied to personal rights, and there is a cultural expectation that personal information should be protected (laws covering medical privacy, student records, etc. exemplify this). People generally expect transparency about how their information is collected, used, and shared. Mirror Mirror on the Wall places a strong focus on privacy by performing all image processing locally on a Raspberry Pi. We intentionally made this design choice so that users can trust that their data will be kept private and can comfortably use a product that collects sensitive personal data.

Part C: written by Siena

Mirror Mirror on the Wall is primarily focused on skin care analysis and has no direct ties to environmental factors. However, we have considered environmental impacts in our design to make sustainable use of resources. By using a Raspberry Pi 5, an energy-efficient embedded system, and running all machine learning inference locally on the device instead of relying on cloud servers, our system avoids network-based carbon emissions. Although the physical components of the mirror (LCD, camera, case, etc.) necessarily contain electronic materials, our design encourages durability, modularity, and reuse, so that individual components can be repaired or replaced without scrapping the entire system. While the system never interacts directly with natural ecosystems or biological systems, its environmental impact is reduced indirectly through sustainable hardware choices and power conservation.