Final Video

Corin’s Status Report for 12/6

Accomplishments

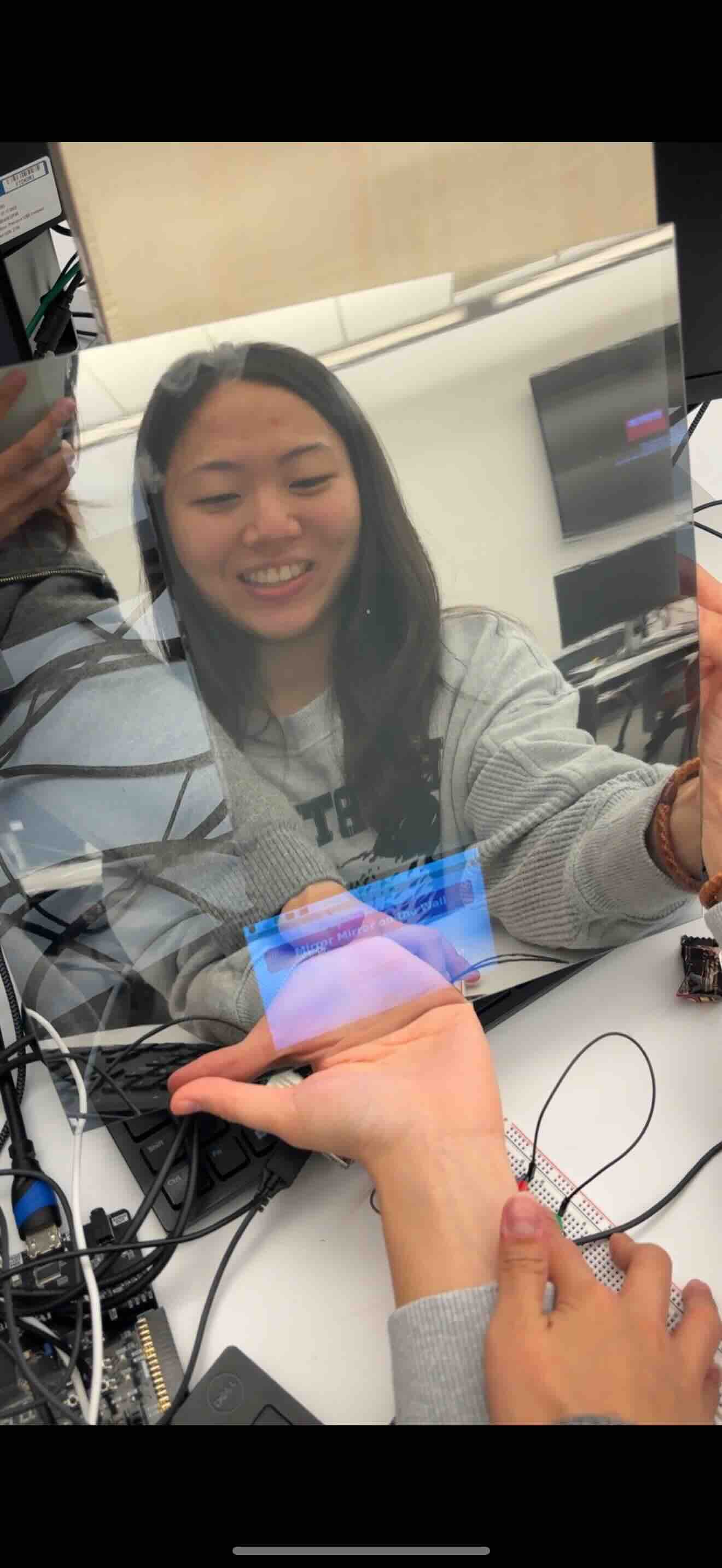

This week, we focused on finalizing the physical build of our smart mirror. We integrated all subsystems (camera, display, and mirror) into the frame and adjusted the setup so the camera sits at the proper height and angle. This gives a more consistent capture of the user at the intended operating distance mentioned in our design report.

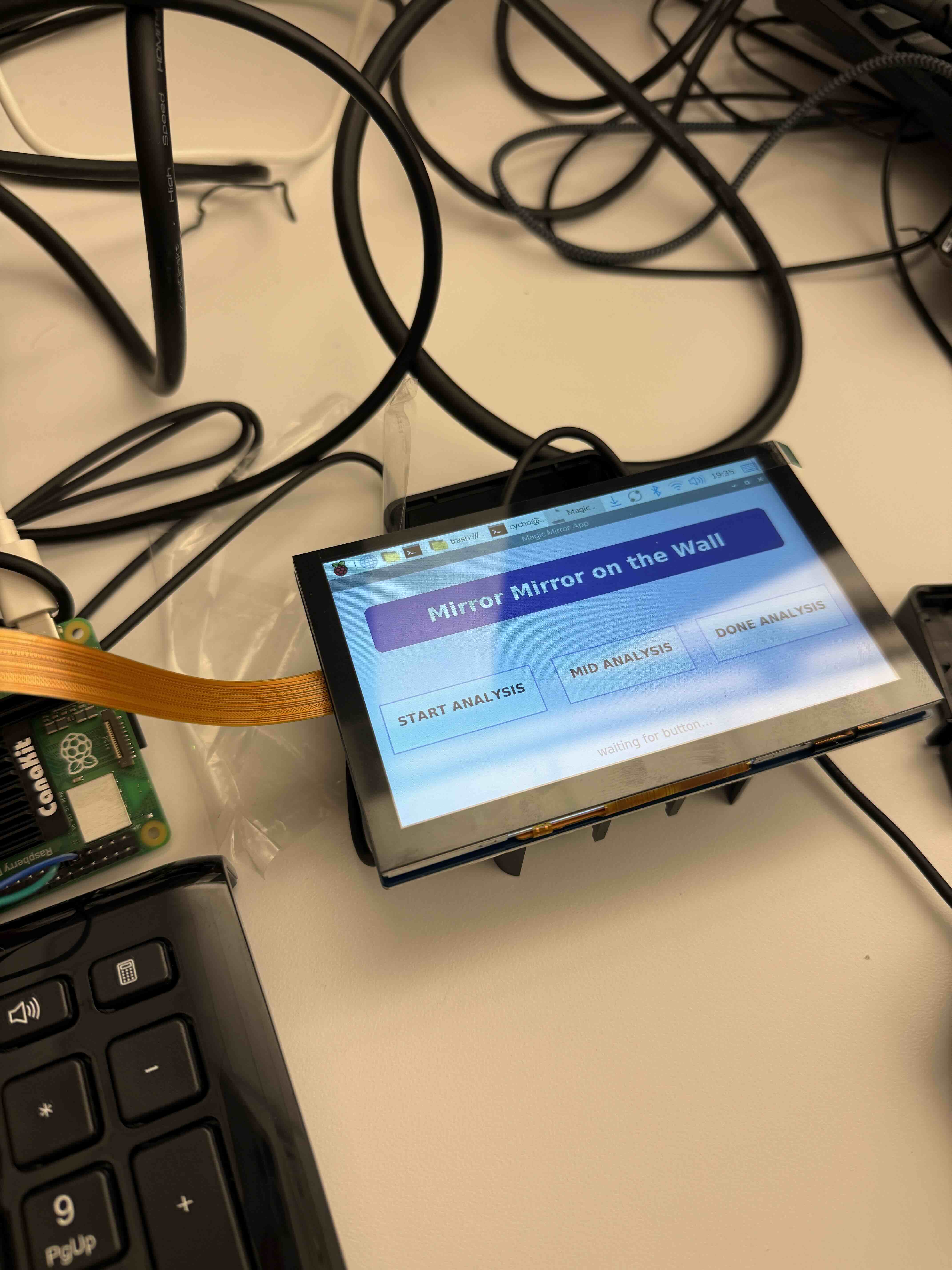

We also worked on refining our user app based on testing and our user study. One challenge was that even with a bigger display, it was really difficult to navigate the app with our fingers, so we made all of the buttons and scroll bars in our app wider. In addition, we fixed lingering bugs in our positional feedback, which now gives accurate guidance based on the user's location within the frame.

Schedule

Make our demo video & set up our project for final demo!!

Next Week

Work on the final report and demo video

Team Status Report for 12/6

Risks

After user testing, the most common feedback we got was about difficulty with the touchscreen interface. Even when the user pressed a button, our system's app would not always register it properly. To mitigate this, we made our essential buttons/controls larger so that they are easier to press.

We also had a lot of difficulty integrating the camera, which started throwing errors after we switched the display. Switching the camera port fixed the problem (for some reason, the original port threw autofocus errors). We're not sure why this worked, so if we had to build the system again, minor bugs like this would remain a potential risk.

We also realized that where the mirror sits during the demo affects user orientation. To make sure we don't face difficulties on demo day, we will test it in the room we'll have our demo in (somewhere in the 1200 wing).

Changes

As we are approaching the end, we did not make any changes to our system except for the sizing of some buttons.

Schedule

No changes to the schedule! Our project has been finalized 🙂

Siena’s Status Report for 12/6

Accomplishments

Early this week, I switched to the new, larger LCD and adjusted our system app's UI so that everything is centered. Then, we conducted a user study with 5 people in addition to all 3 of our group members (8 total).

After each user ran through 5 sessions, we received feedback about our UI as well as how helpful they thought the product was. We made changes accordingly, mostly to the UI (positional feedback and making buttons/scroll bars larger so they're easier to press).

Schedule

We are on schedule! Waiting to set up our demo.

Next Week

Our team will work on the video and the final report.

Isaiah’s Status Report for 11/22

This week I finished integration and did some timing tests. The wrinkle model was integrated into the mirror code, and running all of the models together took ~2.1 seconds per image. To reduce this, I changed the architecture of the wrinkle model to match that of the acne detection model, which brought its individual inference time down to ~0.12 seconds. Face detection was also integrated with the mirror, although we had to switch from BlazeFace to DeepFace due to RPi compatibility issues.
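As a rough illustration of how per-model and combined inference times like the ones above could be measured, here is a minimal sketch. It is not the actual mirror code: `time_models`, the model names, and the idea that each model is a plain callable taking an image are all assumptions made for the example.

```python
import time
from statistics import mean


def time_models(models, image, repeats=5, clock=time.perf_counter):
    """Return mean seconds per model call, plus a combined "total" entry.

    `models` maps a (hypothetical) model name to a callable that takes an image.
    `clock` is injectable so the function can be tested without real delays.
    """
    timings = {}
    for name, model in models.items():
        samples = []
        for _ in range(repeats):
            start = clock()
            model(image)  # run one inference
            samples.append(clock() - start)
        timings[name] = mean(samples)
    # Sum of per-model means approximates the time to run every model once.
    timings["total"] = sum(timings.values())
    return timings
```

Averaging over several repeats smooths out one-off delays such as the first call loading weights, which matters on a device like the RPi.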

Corin’s Status Report for 11/15

Accomplishments

This week, I worked with Siena to modify our app. We added a way to view the history of the user's sessions: a view trend page that shows the past 5 analyses. We also added a mock recommendation system that displays mock recommendations below each session's results, based on the classification results. We haven't finalized the recommendation system yet because we're still working on categorizing the different skin types based on the classification and confidence. We also added lighting to our product, which did improve the input image. Through testing, we also realized that the distance to the camera plays a significant role (the results were best when we were pretty close to the camera, less than 1 ft).
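A minimal sketch of how the view trend page might pick the past 5 analyses from stored session data. The JSON layout (a list of objects with `timestamp` and `scores` fields) and the function name `recent_sessions` are assumptions for illustration, not our app's actual schema.

```python
import json


def recent_sessions(json_text, limit=5):
    """Return the `limit` most recent sessions, oldest first.

    Assumes each session has an ISO-8601 "timestamp" string in one consistent
    format, so plain string sorting matches chronological order.
    """
    sessions = json.loads(json_text)
    ordered = sorted(sessions, key=lambda s: s["timestamp"])
    return ordered[-limit:]
```

Returning the sessions oldest first means the result can be plotted left to right on a trend chart without reordering.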

I also planned out the new physical design we would implement if we were to change directions to a real mirror and a display underneath. A new CAD sketch is needed since the design would become slightly larger and a different frame is needed to accommodate the real mirror dimensions.

Schedule

I am on track!

Next Week

We hope to finalize our physical design and have the full physical product built. Siena and I will work on turning our mock recommendation system into a real one, and hopefully we will integrate all 4 models for skin analysis.

Isaiah’s Status Report for 11/15

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours)

This week was spent on both finishing integration and making the current models work more effectively. Three of the four models are integrated and benchmarked, taking 2.018 seconds on average to analyze one image. I also worked on making the models detect more reliably. In particular, I implemented a patching system that analyzes multiple segments of an image.

What's left is to adapt the wrinkle model, integrate face detection, and finally perform testing and validation.
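To make the patching idea concrete, here is a minimal sketch of one way to split an image into patches. The patch size, the stride, and the function name `patch_boxes` are assumptions for illustration; the actual patching system may divide the image differently.

```python
def patch_boxes(width, height, patch, stride):
    """Return (left, top, right, bottom) boxes that cover the whole image.

    Patches step by `stride`; one extra patch is added flush with the far edge
    when the stride does not land there exactly, so no pixels are skipped.
    """
    def starts(length):
        if length <= patch:
            return [0]  # image is smaller than one patch along this axis
        positions = list(range(0, length - patch + 1, stride))
        if positions[-1] != length - patch:
            positions.append(length - patch)
        return positions

    return [
        (x, y, min(x + patch, width), min(y + patch, height))
        for y in starts(height)
        for x in starts(width)
    ]
```

Each box could then be cropped and passed to a model separately, with detections shifted back by the patch's `(left, top)` offset. A stride smaller than the patch size gives overlapping patches, which helps when a feature straddles a patch boundary.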

Corin’s Status Report for 11/1

Accomplishments

This week, all of our parts arrived on Thursday. I connected the screen display to the RPi so that we can see the mockup app on the display. I also connected two buttons (since we cannot touch the screen behind the mirror film), one to start the analysis and one to view the results of the analysis. I continued working on the mock app and added a view trend page with a chart based on a mock JSON file of analysis results. I also checked that the screen is bright enough to be seen through our mirror film and that the user can see themselves pretty well if we have a dark surface behind the mirror film. Siena and I wanted to combine the camera with our mirror app/button, so that when the button is pressed, the camera takes a picture and the user is notified that their analysis has begun. However, we had some problems working with the new camera, so we will have to continue working on it next week.

Schedule

I am mostly on schedule. Next week, I will need to work with both Isaiah and Siena to integrate the basics of the whole system for the demo. The goal is to at least have button -> camera snap -> model start -> model out -> results shown on the app.
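The button-to-results flow above could be wired together roughly as follows. This is only a sketch with hypothetical names (`on_start_button`, `capture`, `notify`, `show_results`); the real camera, model, and app code would be passed in as the callables.

```python
def on_start_button(capture, models, notify, show_results):
    """Run one analysis session when the start button is pressed.

    capture:       callable returning an image from the camera
    models:        dict mapping a model name to a callable taking the image
    notify:        callable that shows a status message to the user
    show_results:  callable that displays the results dict in the app
    """
    image = capture()                      # camera snap
    notify("Analysis started")             # tell the user the models are running
    results = {name: model(image) for name, model in models.items()}
    show_results(results)                  # results shown on the app
    return results
```

Passing each stage in as a callable lets the skeleton be tested with stand-ins before the camera and models are working.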

Deliverables

The demo is next week, so our group wants to connect at least the functional parts together. We want a camera input, buttons to control the most basic start/view results, and an app to show our users our analysis.

Although the physical mirror is unavailable, we want the skeleton to be put together.

Isaiah’s Status Report for 11/1

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours)

This week I focused on getting the last model ready. I ended up switching to a YOLO head for sunburn as well, since it worked well for acne. As with acne, this will need to be fine-tuned to decide what confidence/density should separate “sunburn” from “no sunburn”. We can test empirically against our >85% accuracy standard, but some qualitative tuning/testing will also be needed, since there's a gray area between the two binary categories.
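One possible way to turn detector output into the binary label is sketched below. The detection format `(confidence, x1, y1, x2, y2)`, the function name `classify_sunburn`, and both threshold values are placeholder assumptions; the actual cutoffs still need to be tuned.

```python
def classify_sunburn(detections, image_area, min_conf=0.5, min_density=0.02):
    """Label an image "sunburn" or "no sunburn" from detection boxes.

    detections: list of (confidence, x1, y1, x2, y2) tuples (assumed format)
    density:    fraction of the image covered by confident boxes; overlapping
                boxes are counted twice here, so the value is capped at 1.0
    """
    kept = [d for d in detections if d[0] >= min_conf]
    box_area = sum((x2 - x1) * (y2 - y1) for _, x1, y1, x2, y2 in kept)
    density = min(box_area / image_area, 1.0)
    label = "sunburn" if kept and density >= min_density else "no sunburn"
    return label, density
```

Returning the density alongside the label makes it easier to inspect borderline images when tuning the two thresholds.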

Below is one of the scraped images, displayed with burn bounding boxes. The right is more representative of typical use cases, but the model can detect a burn in both cases.