Final Video

Corin’s Status Report for 12/6

Accomplishments

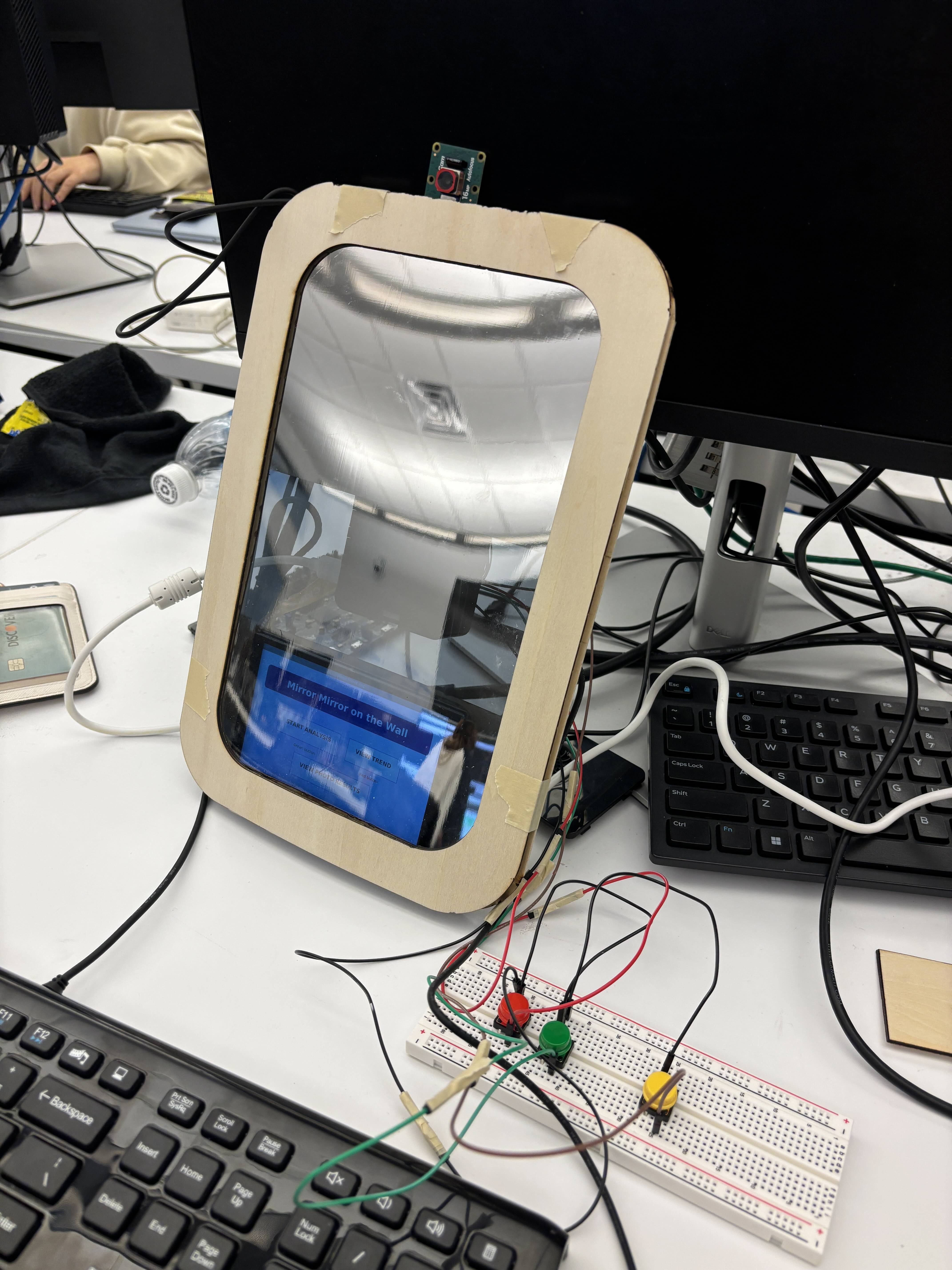

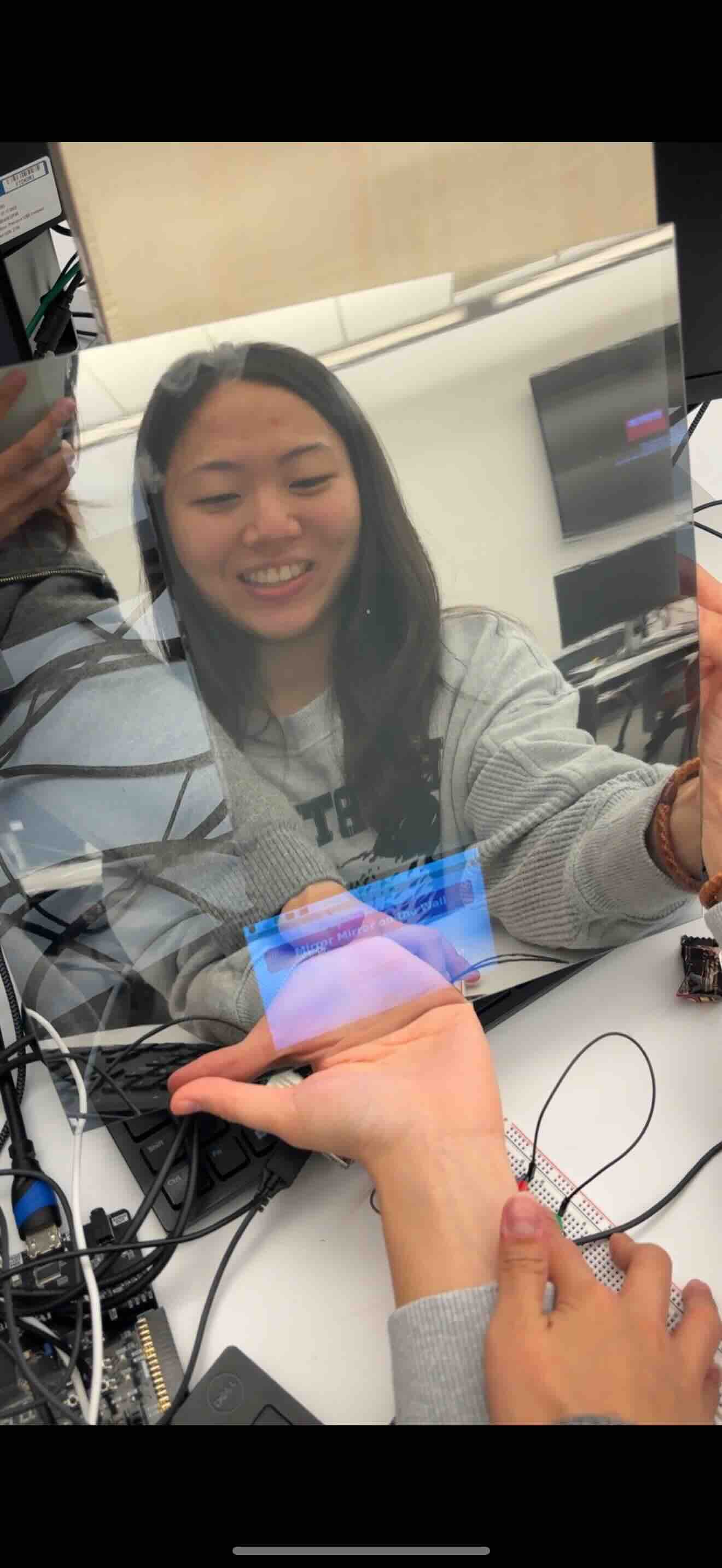

This week, we focused on finalizing the physical build of our smart mirror. We integrated all subsystems (camera, display, and mirror) into the frame and adjusted the setup to ensure the proper height and angle for the camera. This allowed a more consistent capture of the user at the intended operating distance mentioned in our design report.

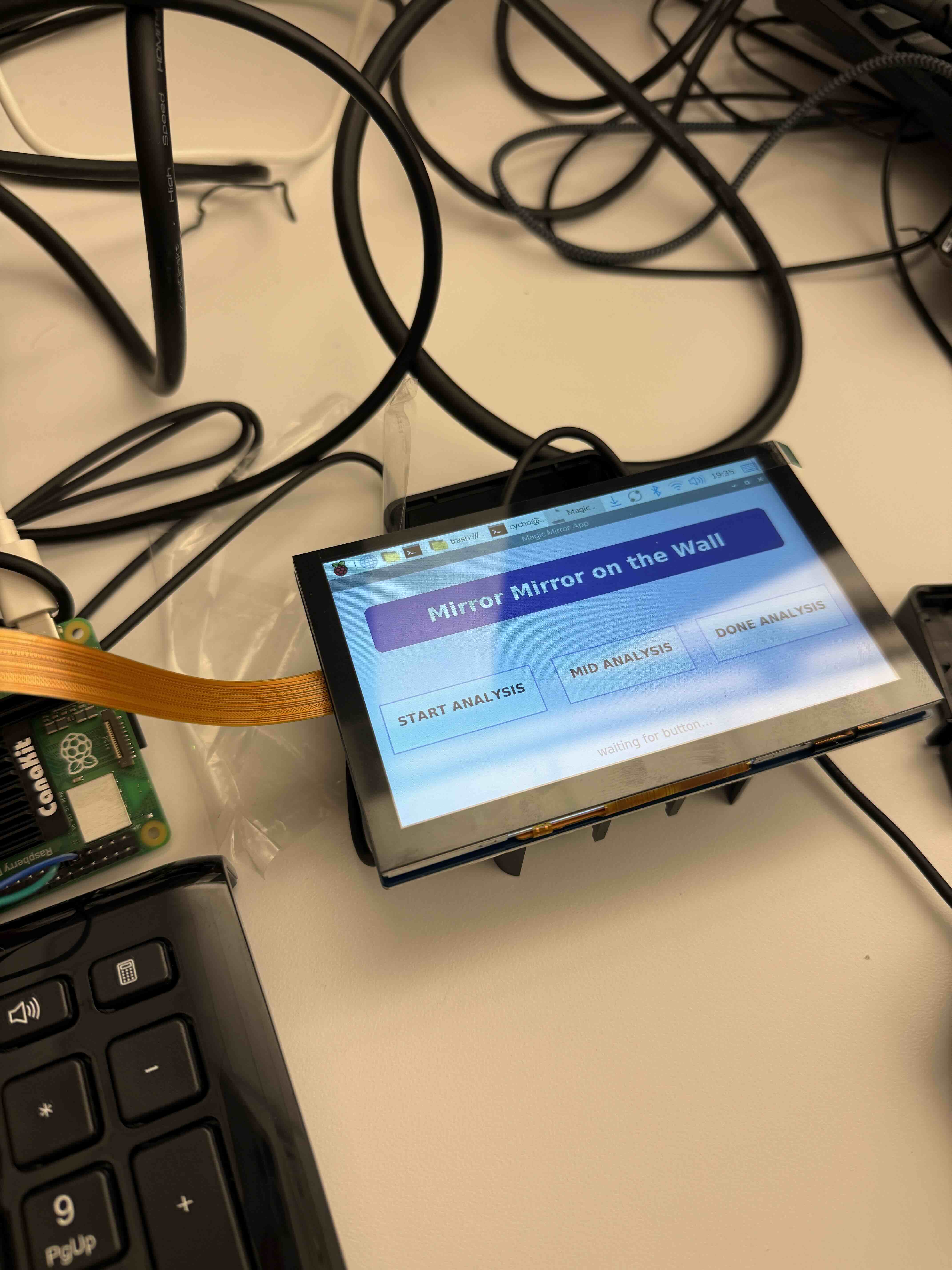

We also worked on refining our user app based on testing and our user study. One challenge was that, even with a bigger display, it was really difficult to navigate the app with our fingers, so we made all of the buttons and scroll areas in our app wider. In addition, we fixed lingering bugs in our positional feedback, so the app now gives accurate guidance based on the user's location within the frame.
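As a rough illustration of the positional feedback idea, here is a minimal sketch that turns a detected face box into a guidance message. The function name, the thresholds, and the message strings are all assumptions made for this example and are not taken from our app. The messages describe where the face sits in the camera image rather than which way to move, since that depends on whether the preview is mirrored.

```python
def position_guidance(face_box, frame_size, tolerance=0.1, min_fill=0.25):
    """Return a guidance message for a face box given as (x, y, width, height) in pixels.

    tolerance and min_fill are hypothetical thresholds, expressed as
    fractions of the frame size.
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size

    # Center of the face as a fraction of the frame (0.5 means centered).
    cx = (x + w / 2) / frame_w
    cy = (y + h / 2) / frame_h

    if cx < 0.5 - tolerance:
        return "face is left of center"
    if cx > 0.5 + tolerance:
        return "face is right of center"
    if cy < 0.5 - tolerance:
        return "face is above center"
    if cy > 0.5 + tolerance:
        return "face is below center"

    # A small face box suggests the user is standing too far from the camera.
    if w / frame_w < min_fill:
        return "move closer"
    return "hold still"
```

For example, in a 640x480 frame, a centered 200x200 box would return "hold still", while a centered 100x100 box would return "move closer".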

Schedule

Make our demo video & set up our project for final demo!!

Next Week

Work on the final report and demo video

Team Status Report for 11/22

Risks and Mitigations

Our team has fully integrated the software side of the system. The biggest risk for now is that the physical connections may not be stable enough for long-term use. Since we changed our physical design pretty late and placed the orders late as well, we have to speed up the process of CADing the new design and connecting the camera, display, lights, and the SBC in a stable manner.

Another risk is whether user sessions will feel intuitive (whether the touch screen display is comfortable to use), since we changed our design from using buttons to a touch screen. We designed the UI around menu items and ordered a larger display, and we plan to conduct a user study pretty soon to gather more information on how comfortable it is to use our product.

Our latency risk has been mostly fixed this week by parallelizing our models with threads and shortening the preview. BlazeFace also does a great job of quickly capturing the user's face for each session. We will continue testing the latency to ensure that we consistently hit the target latency.

Design Changes

We are using a real mirror and a 7-inch display so that the user can more clearly see their face and the analysis results. These are the physical changes mentioned last week, and we have now decided to go ahead with them. Our overall system has the same functionality.

Schedule

Our team is pretty much on track! We plan to focus on testing next week.

Corin’s Status Report for 11/22

Accomplishments

This week, I worked with Siena to fully integrate the models we got from Isaiah with the app, and we finalized our UI. The wrinkle model was added to our app, and we confirmed that all four conditions were detected during a user session. We also tested the inference latency of our models and succeeded in running the four models in parallel to reduce our latency. We achieved a latency of about 1.5 seconds, which was bottlenecked by our slowest model (the burn model). I created a trend graph showing the last 5 sessions for the four conditions. When the user clicks on a dot on the trend graph, it displays the photo result of that session. I also continued working on the new CAD sketch for our physical design. We changed the physical design from last week and focused on seamlessly integrating a larger display so that the user can more comfortably navigate the touch screen display.
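To show why the parallel latency ends up bounded by the slowest model, here is a minimal sketch using Python's `concurrent.futures.ThreadPoolExecutor`. The model functions are hypothetical stand-ins that just sleep, the durations and confidence values are made up, and the names (`make_fake_model`, `analyze_parallel`) are not from our codebase. Threads only help when the underlying inference call releases the GIL, which we assume here.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def make_fake_model(name, seconds):
    """Build a stand-in model; the sleep simulates inference time (made-up value)."""
    def run(image):
        time.sleep(seconds)
        return {"condition": name, "confidence": 0.5}  # placeholder output
    return run


# Hypothetical registry of the four condition models; burn is given the longest delay.
MODELS = {
    "acne": make_fake_model("acne", 0.05),
    "burn": make_fake_model("burn", 0.15),
    "oiliness": make_fake_model("oiliness", 0.05),
    "wrinkles": make_fake_model("wrinkles", 0.05),
}


def analyze_parallel(image, models=MODELS):
    """Run every model on the same image in its own thread and collect the results."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, image) for name, fn in models.items()}
        # result() blocks until that model finishes, so the total wait is
        # roughly the duration of the slowest model, not the sum of all four.
        return {name: future.result() for name, future in futures.items()}
```

Running the models one after another would take about the sum of the four delays, while this version should take about as long as the burn stand-in alone.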

Schedule

I am pretty much on track. I just need to speed up the CAD design, but I think it will be done by early next week.

Next Week

We hope to get a larger display, build the full system once again, then start collecting data from our tests.

Corin’s Status Report for 11/15

Accomplishments

This week, I worked with Siena to modify our app. We added features to view the history of the user sessions (a view trend page that shows the past 5 analyses). We also added a mock recommendation system: based on the classification results, we display mock recommendations below each session's results. We haven't finalized the recommendation system yet because we're still working on categorizing the different skin types based on the classification and confidence. We also added lighting to our product, which did improve the input image. Through testing, we also realized that the distance to the camera played a significant role (the results were best when we were pretty close to the camera, less than 1 ft).
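The "past 5 analyses" view can be sketched as a small data-preparation step. The session schema below (a `timestamp` string plus a `scores` dictionary per condition) and the function name are assumptions for illustration only and do not reflect our actual storage format.

```python
CONDITIONS = ("acne", "sunburn", "oiliness", "wrinkles")


def last_sessions_trend(sessions, count=5):
    """Return one list of scores per condition for the most recent sessions.

    Each session is assumed to look like
    {"timestamp": "<sortable string>", "scores": {"acne": ..., ...}}.
    """
    # Sort oldest to newest, then keep only the most recent `count` sessions.
    recent = sorted(sessions, key=lambda s: s["timestamp"])[-count:]
    return {c: [s["scores"][c] for s in recent] for c in CONDITIONS}
```

Each list in the returned dictionary can then be plotted as one line on the trend chart, with the oldest of the kept sessions on the left.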

I also planned out the new physical design we would implement if we were to change directions to a real mirror and a display underneath. A new CAD sketch is needed since the design would become slightly larger and a different frame is needed to accommodate the real mirror dimensions.

Schedule

I am on track!

Next Week

We hope to finalize our physical design and have the full physical product built. Siena and I will work on turning our mock recommendation system into a real one, and hopefully we will integrate all 4 models for skin analysis.

Team Status Report for 11/15

Risks and Mitigations

This week, after the interim demo, we got some feedback on improving the physical design. It was harder to see our screen display through the mirror film than we had imagined. One suggestion made to our group was to change our design so that we have an actual mirror with the display right beneath it, instead of a see-through mirror film. This would improve the quality of both the reflection and the screen display. Although we are still deciding which design to go with, we have ordered and received the parts needed to change our design as suggested.

We also got feedback on the latency of our inference models. We hadn't measured the inference time, and we got questions on whether our product would meet the timing we aimed for. After the interim demo, Isaiah measured the time for each inference model, which averaged 2.01 seconds. We are planning to figure out a way to run the models in parallel to achieve the timing for our MVP.
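A timing measurement of this kind can be sketched with `time.perf_counter`. This is only an illustration of the approach, not the script Isaiah used, and `time_model` and its `repeats` parameter are hypothetical names.

```python
import time
from statistics import mean


def time_model(run_model, image, repeats=5):
    """Return the average wall-clock seconds for one call to run_model(image)."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_model(image)
        durations.append(time.perf_counter() - start)
    return mean(durations)
```

Averaging over several runs smooths out one-off delays such as the first call loading weights into memory.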

Besides the two concerns mentioned above, we are still working on improving the accuracy of our models. Isaiah is continuing to tune the models, while Siena and Corin are working on finding the lighting that is optimal for the input image.

Design Changes

As mentioned in the first part of the section above, we are considering getting rid of the mirror film and replacing it with an actual mirror. If the design changes, the display will be beneath the mirror instead of behind the mirror film. None of the software side will change, and our product will still deliver the same functionality (just a slight modification to the physical side of the product).

Schedule

We are pretty much on track!

Corin’s Status Report for 11/8

Accomplishments

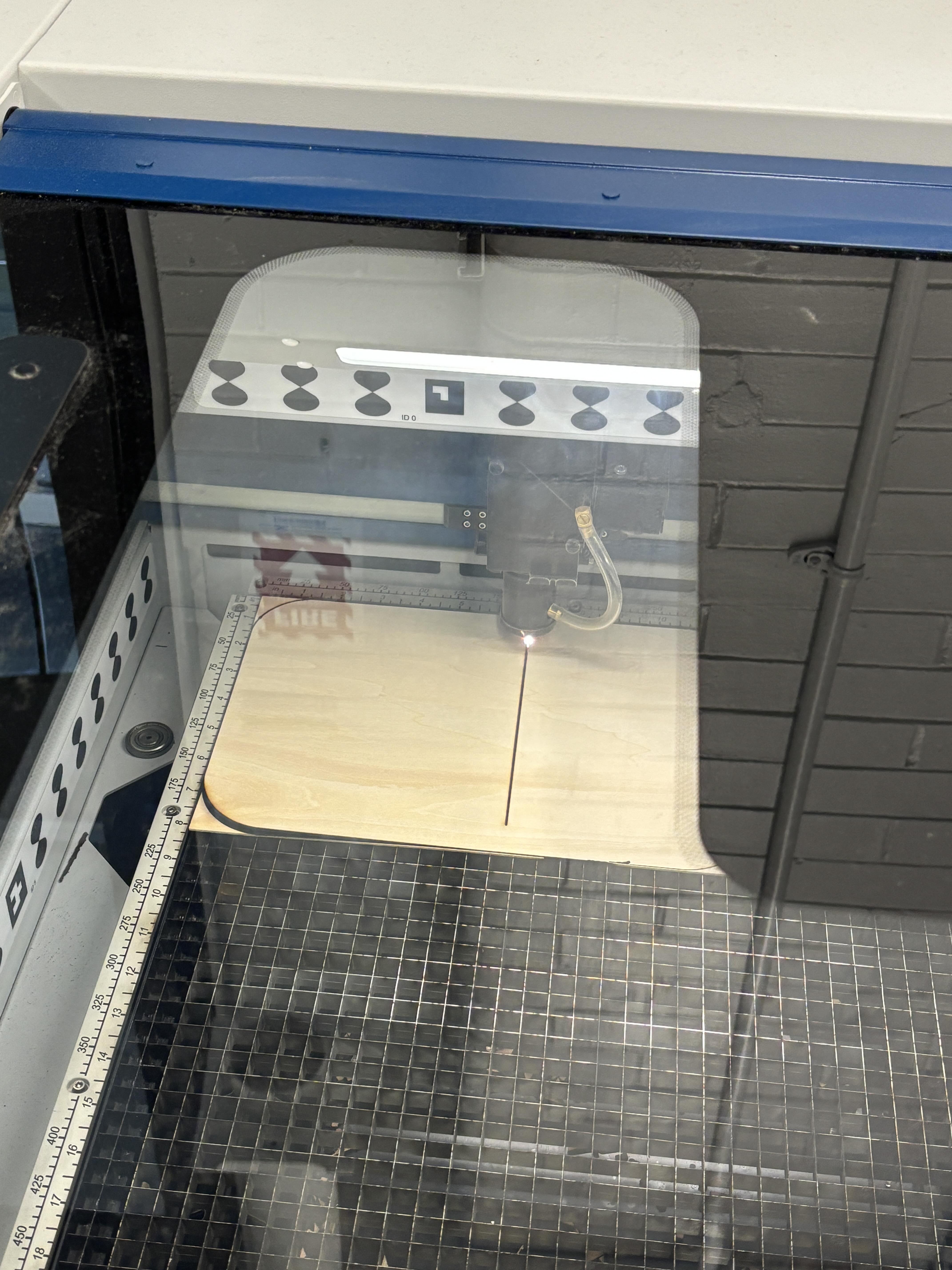

This week, our team started integrating all of our subsystems together. My job was mainly to help Siena and Isaiah integrate the code they had for the camera and ML model into the mock app that I had previously built. I also quickly did a little bit of CAD work to laser cut the mirror frame. The wood we got was a bit smaller than we expected, so I made a smaller version of the CAD sketches to make a prototype for our interim demo. I also added extra features to the app: Isaiah added code to outline the conditions on the image, and I added a feature to the app so that the user can view their picture with the analysis drawn on it.

Schedule

I am on schedule now. The next steps for me would be to modify the CAD so that we have a product that is well put together. I would also like to improve on the user experience (refining the app and the buttons).

Deliverables

Currently, we have a prototype with acne, sunburn, and oiliness analysis. Our next step is to add wrinkles as well and to improve the overall quality of our product with a better CAD design and lighting.

Corin’s Status Report for 11/1

Accomplishments

This week, all of our parts arrived on Thursday. I connected the screen display to the RPI so that we can see the mockup app on the display. I also connected two buttons (since we cannot touch the screen behind the mirror film), one to start the analysis and one to view the results of the analysis. I continued working on the mock app and added a view trend page that includes a chart based on a mock JSON file of the analysis results. I also checked that the screen was bright enough to be seen through our mirror film and that the user can see themselves pretty well if we have a dark surface behind the mirror film. Siena and I wanted to work on combining the camera with our mirror app and buttons, such that when the button is pressed, the camera takes a picture and the user is notified that their analysis has begun. However, we had some problems working with the new camera, so we will have to continue working on it next week.

Schedule

I am mostly on schedule. Next week, I will need to work with both Isaiah and Siena to integrate the basics of the whole system for the demo. The goal is to at least have button -> camera snap -> model start -> model out -> results shown on the app.

Deliverables

The demo is next week, so our group wants to connect at least the functional parts together. We want a camera input, buttons to control the most basic start/view results, and an app to show our users our analysis.

Although the physical mirror is unavailable, we want the skeleton to be put together.

Team Status Report for 11/1

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

This week all of our parts arrived! We have made progress on most of our subcomponents, but because our parts arrived on Thursday, we didn't have much time to integrate them. As we bring in our parts one by one, we are expecting a lot of hurdles in putting everything together. Siena was working with another camera before our actual camera arrived, and there was already a problem with the new camera that we didn't encounter with our old camera. This is preventing us from connecting it to the rest of the hardware (buttons and the screen with the mock app).

We also need to start combining all of our mock setups with the actual data input/output from our camera and the ML model. During this process, we will need a lot of communication/trial and error, since we expect various compatibility issues with our mock data formats and actual outputs from the camera and ML model.

Isaiah is done training the models for the majority of our classes. Since most of our subcomponents are done, we aim to mitigate the risks that come with integration by working together next week to connect everything: the camera inputs to the model and the model outputs to the display (app).

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No changes were made to our existing design requirements. We just received all of our parts, and after checking them and testing a few components, we think our existing design will be sufficient.

- Provide an updated schedule if changes have occurred.

The schedule didn’t change except that the hardware buildup/testing will run a week longer (until the mid-demo) because our parts arrived later than expected. Everything else will remain the same.

link to schedule: https://docs.google.com/spreadsheets/d/1g4gA2RO7tzUqziKFuRLqA6cGWfeL0QYdg5ozW9hug74/edit?usp=sharing