- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

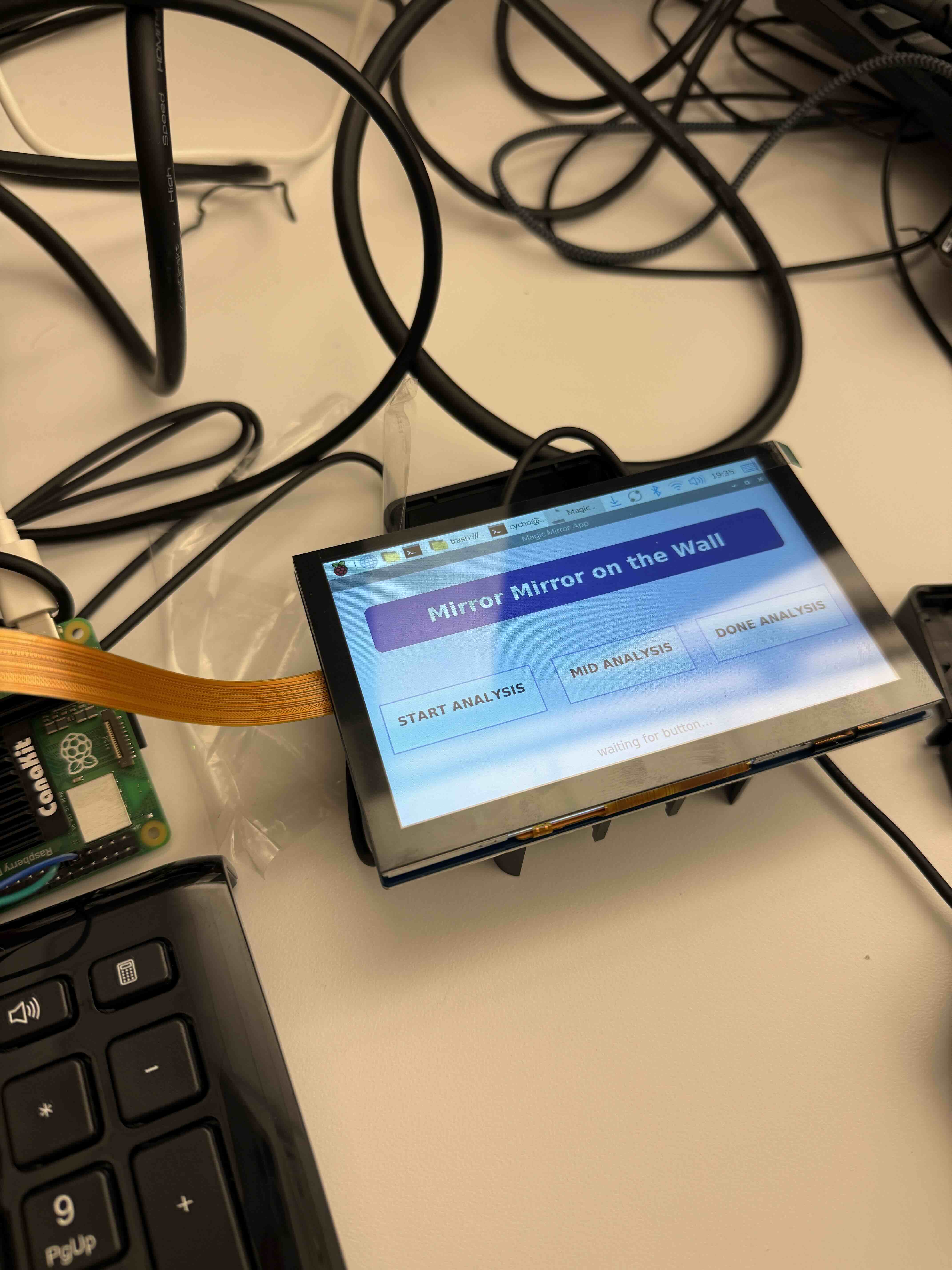

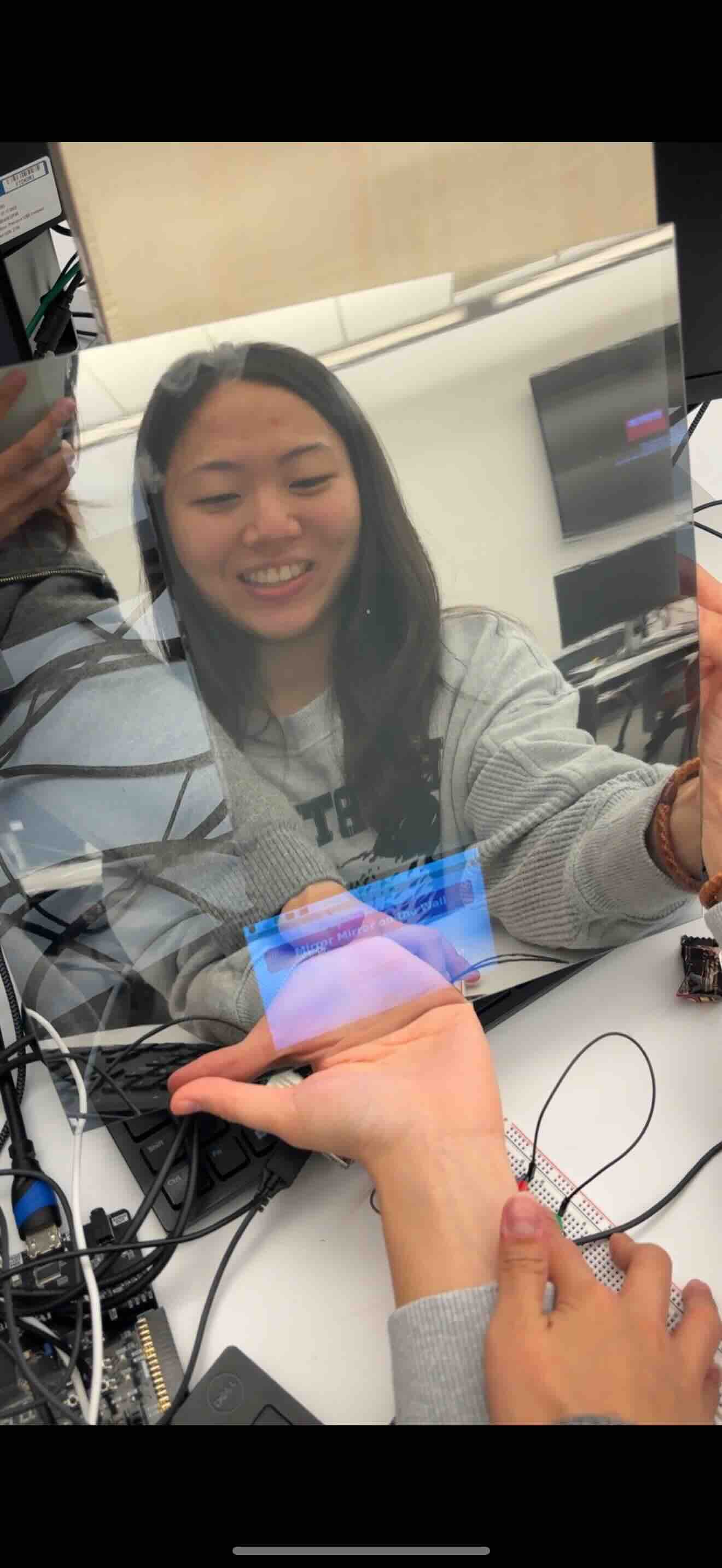

This week all of our parts arrived! We have made progress on most of our subcomponents, but because the parts arrived on Thursday, we did not have much time to integrate them. As we swap in the actual parts one by one, we expect many hurdles in putting everything together. Siena was working with a different camera before our actual camera arrived, and the new camera already has a problem we did not encounter with the old one. This problem is currently preventing us from connecting the camera to the rest of the hardware (the buttons and the screen running the mock app).

We also need to start connecting our mock setups to the actual data input/output from the camera and the ML model. This process will require a lot of communication and trial and error, since we expect compatibility issues between our mock data formats and the actual outputs of the camera and ML model.

Isaiah has finished training the models for the majority of our classes. Since most of our subcomponents are done, we aim to mitigate the risks that come with integration by working together next week to connect everything: the camera inputs to the model, and the model outputs to the display (app).

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No changes were made to our existing design requirements. We just received all of our parts, and after inspecting them and testing a few components, we believe they will be sufficient for our existing design.

- Provide an updated schedule if changes have occurred.

The schedule has not changed, except that hardware buildup/testing will run one week longer (until the mid-demo) because our parts arrived later than expected. Everything else remains the same.

Link to schedule: https://docs.google.com/spreadsheets/d/1g4gA2RO7tzUqziKFuRLqA6cGWfeL0QYdg5ozW9hug74/edit?usp=sharing