See Shravya’s individual report for details on UART debugging progress. In summary, all UART-related code has been written and running for about a week, but debugging is still underway and is taking much longer than estimated. This blocks any accuracy and latency testing Shravya can conduct with the solenoids playing a song fed in from the parser (the accuracy and latency of the solenoids behave as expected when playing a hardcoded dummy sequence, though). Shravya hopes to have a fully working implementation by Monday night, which leaves ample time to show functionality in the poster and video deliverables, and will conduct formal testing after that. She has arranged to meet on Monday with a friend who will help her debug.

Testing related to hardware:

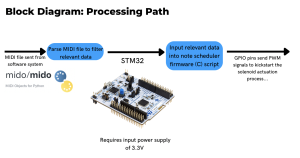

As a recap, we have a fully functional MIDI-parsing script that is 100% accurate at extracting note events. We are also able to control our solenoids with hardcoded sequences. The final integration step that remains is connecting the parsing script to the firmware so that the solenoids actuate based on any given song. Once the UART bugs are resolved, we will feed in manually coded MIDI files that cover different tempos and note patterns. We will observe the solenoid output and record the pattern of notes played to calculate accuracy, which we expect to be 100%.

- During this phase of testing, we will also record audio of the output with a metronome playing in the background. We will manually mark the timestamps of each metronome beat and solenoid press, and use the difference between them to calculate latency.
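The accuracy and latency calculations described above could be scripted once the timestamps and note sequences are written down. The following is a minimal sketch, not our actual test harness: the function names, the representation of notes as MIDI note numbers, and the index-based pairing of beats with presses are all assumptions made for illustration.

```python
from typing import List


def note_accuracy(expected: List[int], observed: List[int]) -> float:
    """Fraction of positions where the observed note matches the expected one.

    Notes are assumed to be MIDI note numbers listed in playing order.
    Missing or extra notes count as errors against the longer sequence.
    """
    if not expected:
        raise ValueError("expected sequence is empty")
    matches = sum(1 for e, o in zip(expected, observed) if e == o)
    return matches / max(len(expected), len(observed))


def press_latencies(beat_times: List[float], press_times: List[float]) -> List[float]:
    """Latency of each solenoid press relative to its metronome beat, in seconds.

    Assumes the i-th press is meant to land on the i-th beat, so the two
    lists of manually marked timestamps must have the same length.
    """
    if len(beat_times) != len(press_times):
        raise ValueError("need exactly one press timestamp per beat")
    return [press - beat for beat, press in zip(beat_times, press_times)]


if __name__ == "__main__":
    # Hypothetical values for illustration only, not measured results.
    expected_notes = [60, 62, 64, 65]
    observed_notes = [60, 62, 63, 65]
    print(note_accuracy(expected_notes, observed_notes))
    print(press_latencies([0.0, 0.5], [0.25, 0.75]))
```

Pairing beats and presses by index keeps the sketch simple; if a press is missed entirely, the timestamps would need to be aligned by hand before computing latency.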

Some tests that have been completed are overall power consumption and ensuring functionality of individual circuit components:

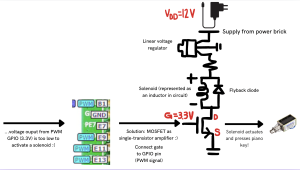

- Using a multimeter, we measured the current draw of the solenoids under the three possible scenarios. As expected, the maximum power consumption occurs when all solenoids in a chord are actuated simultaneously, but even then we stay just under our expected power limit of 9 W.

- To ensure the functionality of our individual circuit components, we conducted several small-scale tests. Using a function generator, we applied a low-frequency signal to control the MOSFET and verified that it reliably switched the solenoid on and off without any issues. For the flyback diode, we used an oscilloscope to measure voltage spikes at the MOSFET drain when the solenoid was deactivated. This allowed us to confirm that the diode sufficiently suppressed back EMF and protected the circuit. Finally, we monitored the temperature of the MOSFET and solenoid over multiple switching cycles to ensure neither component overheated during operation.
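For the power measurement above, the check amounts to multiplying the supply voltage by the measured current and comparing the result against the 9 W limit. A small sketch of that arithmetic follows; the function names are hypothetical, and the voltage and current in the example are placeholders, not our measured values.

```python
POWER_LIMIT_W = 9.0  # expected power limit stated in this report


def power_watts(supply_voltage_v: float, current_a: float) -> float:
    """Electrical power P = V * I for a DC load."""
    return supply_voltage_v * current_a


def within_budget(supply_voltage_v: float, current_a: float,
                  limit_w: float = POWER_LIMIT_W) -> bool:
    """True if the computed power does not exceed the limit."""
    return power_watts(supply_voltage_v, current_a) <= limit_w


if __name__ == "__main__":
    # Placeholder numbers for illustration only (not measurements).
    print(power_watts(12.0, 0.5))
    print(within_budget(12.0, 0.5))
```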

Shravya’s MIDI parsing code has been verified to correctly parse any MIDI file, whether generated by Fiona’s UI or by external means, and it handles all edge cases (rests and chords) that previously caused trouble.

Testing related to software:

Since software integration took longer than expected, we are still behind on formal software testing. Fiona is continuing to debug the software and plans to start formal testing on Sunday (see more in her report). For a reminder of our formal testing plans, see: https://course.ece.cmu.edu/~ece500/projects/f24-teamc5/2024/11/16/team-status-report-for-11-16-2024/. We are worried that we might have to scale back these testing plans slightly, specifically by not testing on multiple faces, because many people are busy with finals, but we will do our best to get a complete picture of the system's functionality. One change we know for certain we will make is that our ground truth for eye-tracking accuracy will be based not on camera playback, but on which button the user is directed to look at, for simplicity and to reduce testing error.
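With the directed button as ground truth, eye-tracking accuracy reduces to comparing the button the user was told to look at with the button the software registered. The sketch below shows one way to tally that per button; the function name, the trial format, and the button labels are assumptions for illustration and do not come from our codebase.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def per_button_accuracy(trials: List[Tuple[str, str]]) -> Dict[str, float]:
    """Accuracy for each directed button.

    Each trial is a (directed_button, detected_button) pair, where the
    directed button is treated as ground truth.
    """
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for directed, detected in trials:
        totals[directed] += 1
        if directed == detected:
            correct[directed] += 1
    return {button: correct[button] / totals[button] for button in totals}


if __name__ == "__main__":
    # Hypothetical trials for illustration only, not test results.
    sample = [("C4", "C4"), ("C4", "D4"), ("rest", "rest")]
    print(per_button_accuracy(sample))
```

Breaking accuracy down by button, rather than reporting a single number, would make it easier to see whether errors cluster in one region of the screen.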

Last week, Peter did some preliminary testing on the accuracy of the UI and eye-tracking software integration in preparation for our final presentation, and the results were promising. Fiona will continue that testing this week, and hopefully will have results before Tuesday in order to include them in the poster.

Global Considerations, Written by Shravya
