This week’s tasks:

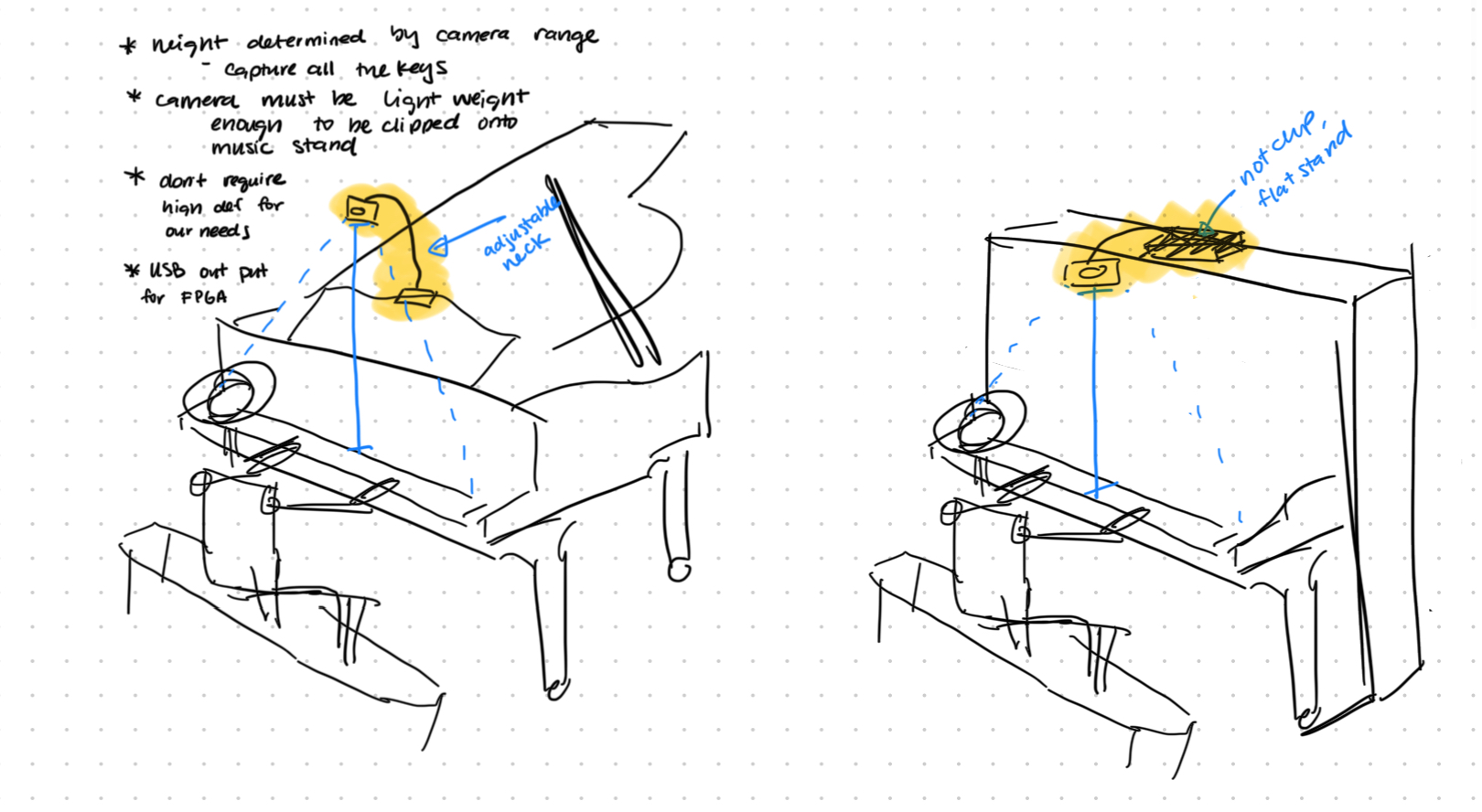

- I finalized shopping for parts for the camera stand given we now had a better idea of the measurements. These parts arrived and they seem to meet our requirements. We just need to find a way to connect the gooseneck and the tripod before our meeting with Prof Dueck’s students on 10/9.

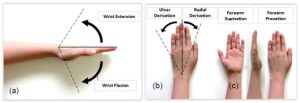

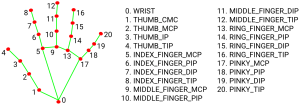

- I looked into how to run the kinematic calculations on the FPGA. I again referenced Peter Quinn’s code: https://github.com/PeterQuinn396/KV260-Pose-Commands/blob/main/app_kv260.py and saw that he simply used python. I looked into some other options and saw that the easiest way would be to use the python Vitis AI API, but there also exists a C++ API that may allow us to have higher performance and the hardest option would be to work directly with the FPGA’s output bitstream. For now, we can plan to use python and later change to C++ if we are facing performance issues. A stretch goal could be to work directly with the bit stream.

- A concern that came up while I was looking into these options is the rate that the FPGA would be able to run the pose detection model. Peter Quinn was able to achieve 3 FPS, I’m not sure how much better we can do and what is the requirement to fit out needs. We can investigate this at a later time and if it becomes an issue here are some ideas to try and improve performance: attempt to simplify the model (lower resolution, less landmarks, smaller image samples, etc.), go down the stack for the kinematic implementation (python to C++ and then to working with the bit stream).

- We received the power supply and were able to finish the Zynq UltraScale+ tutorial: https://xilinx.github.io/Vitis-AI/3.0/html/docs/quickstart/mpsoc.html

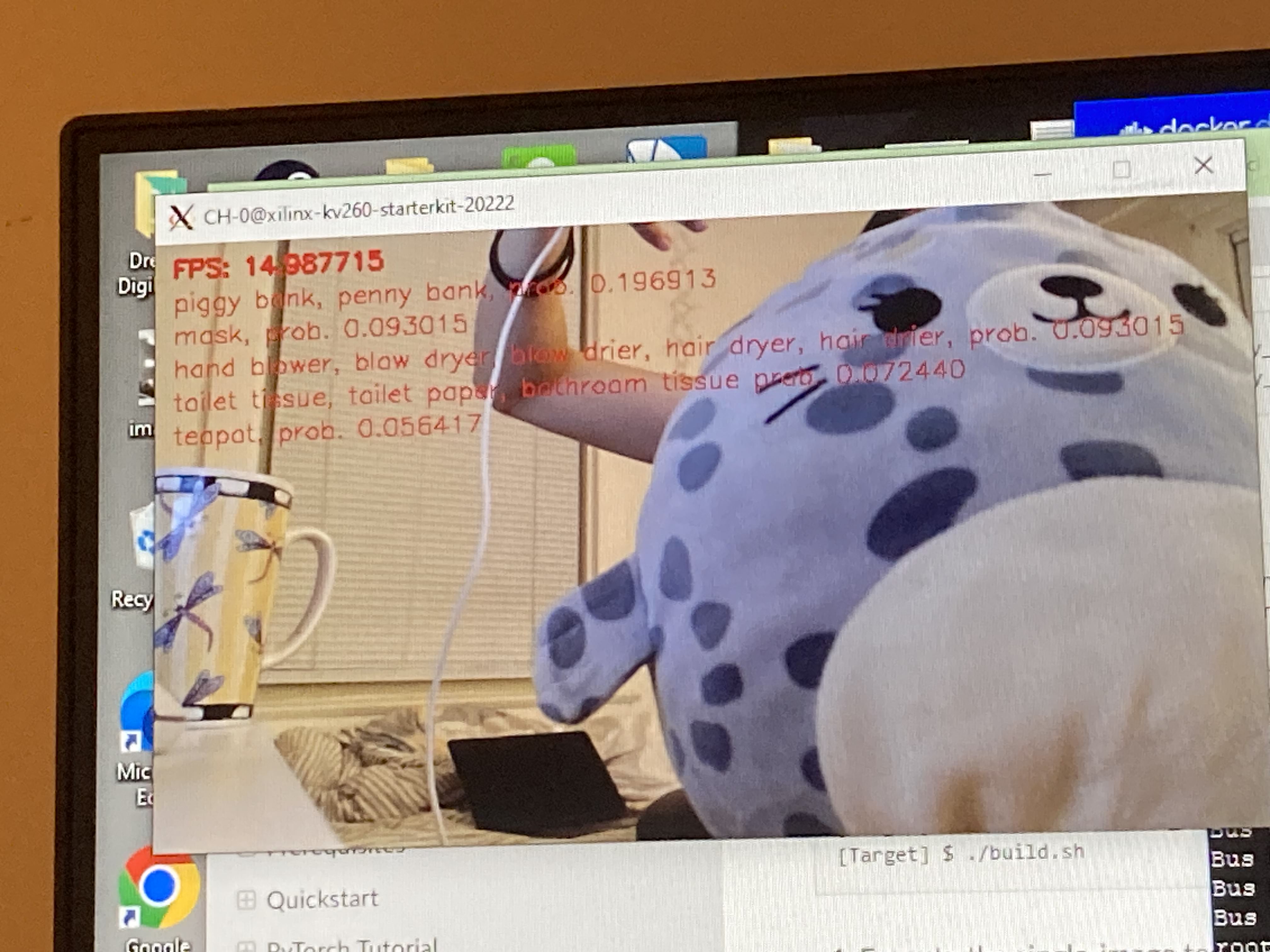

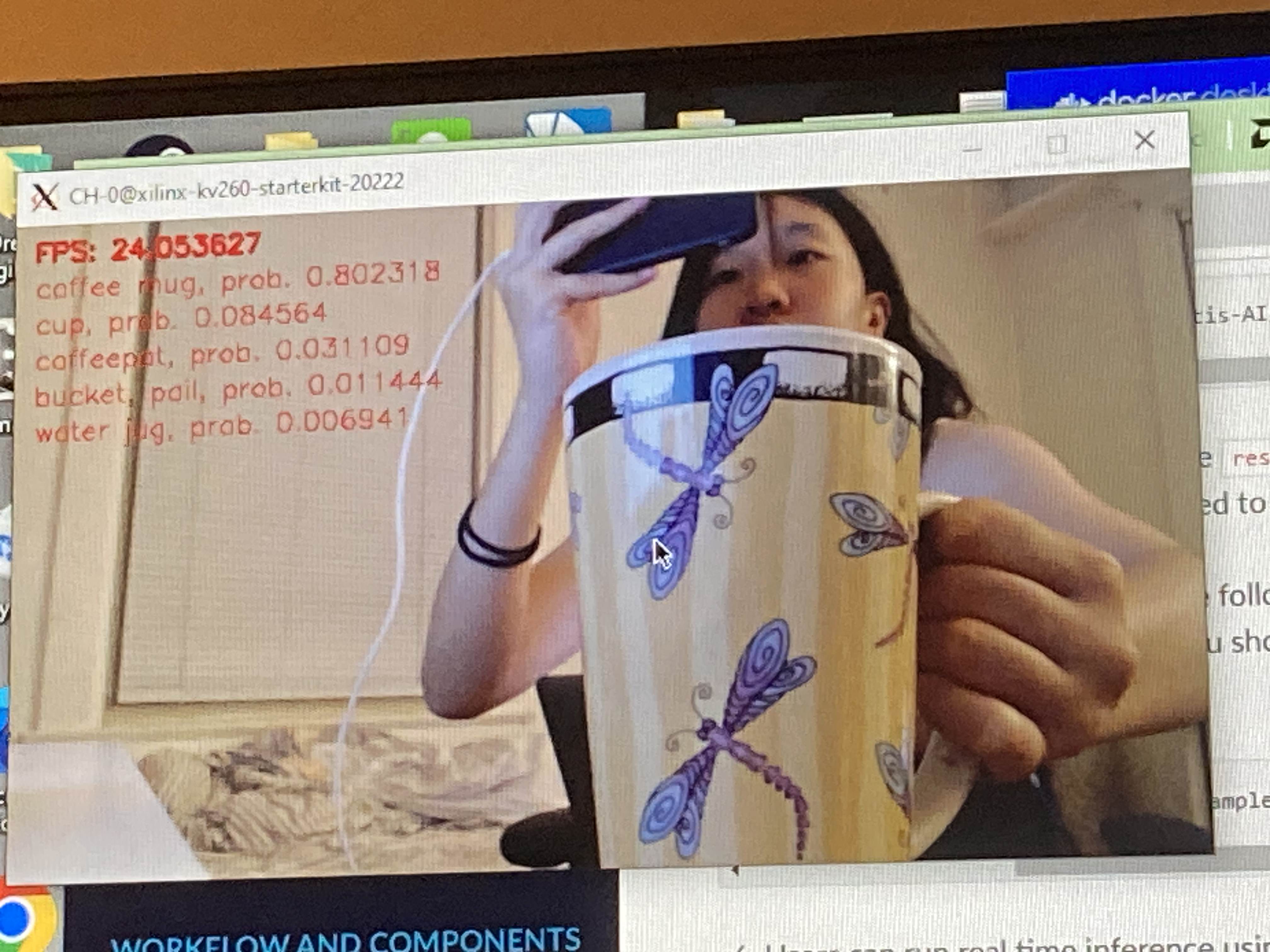

- Danny did the first half of the tutorial (set up the target and download the necessary software) and I did the second part of the tutorial (PyTorch Tutorial). I followed their walkthrough of the Vitis AI workflow with the example models and have a general idea what what configuration and scripts we need to write/modify from their examples. I was able to successfully follow the tutorial and compile a classification model on the FPGA. It was able to classify input from our webcam.

- In reference to my previously mentioned concern regarding framerate, the example model is able to achieve a frame rate of around 14 or 15 fps when there is some movement in the video and around 24 or 25 fps when the video is still. I think the pose detection model will be more complex and be slower than this example model.

Schedule:

I’m still on schedule. Next week I plan to work with Shaye to start the early steps of the CV and FPGA integration.

Next week’s deliverables:

- A rough draft version of the Python kinematics code using the Vitis AI API.

- Work with Shaye to decide how we will obtain samples for the quantization step of the Vitis AI workflow.