What I did this week:

-

- RPI MODEL AND TENSION CODE INTEGRATION: Shaye mostly led the integration process. I moved the code for the model onto GitHub so Shaye could work with it. I then copied the integrated version of the code from Shaye’s branch on GitHub. We then worked together to debug any errors. The initial integrated code can be found on the jessie_branch at the blaze model commit.

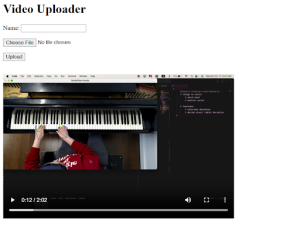

- I built off the integrated model and tension algorithm code Shaye and I worked on to add the live feedback buzzer feature. I copied my previously written buzzer code into [], then using feedback from Shaye’s rudimentary tension algorithm, if tension was detected, I triggered the buzzer for 0.1 seconds. I noticed that the video feedback was laggy when the buzzer went off. I believe this is because to have the buzzer buzz for 0.1 seconds, it is turned on, then waits 0.1 seconds (time.sleep(0.1)), then is turned off; the time.sleep is a blocking process, which would cause the code to lag as I saw. To remedy this, I created a thread each time the buzzer buzzed; the video seemed much smoother after this change. The code can be found on the jessie_branch at the buzzing roughly works commit. Video of the working code can be found here: https://drive.google.com/file/d/1jA_3Sd2Z57bAgNAS1DLm2VFd9FsDfc_w/view?usp=sharing

- In the video, you can see that when I move my hands, the buzzing stops, and when my hands are still the buzzing occurs. This is because we correlate less movement with more tension. In the future, I’ll look into how to make the buzzer buzz less frequently when tension is detected.

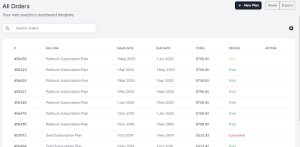

- RPI WEB APP INTEGRATION: I worked with Danny to interface video recording and calibration with the web application.

- To interface the video recording, I had ChatGPT generate some basic functions to start and stop video recording. These functions were mapped to buttons on the web app. I mostly had to work to ensure the paths of where the video was being stored were correct and that the videos were uniquely named. This code can be found on the jessie_branch in accelerated_rpi/vid_record/record_video.py

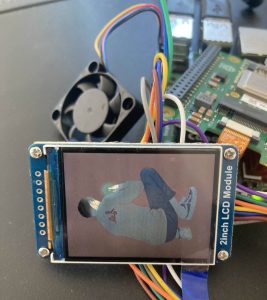

- I also wrote some code to interface the mirror_cam.py code with Danny’s web app buttons. The code consists of functions to start and stop the mirror_cam.py code. The code can be found on the jessie_branch at accelerated_rpi/LCD_Module_RPI_code/RaspberryPi/python/call_mirror_cam.py. In the future, the calibration process will be much more detailed than this, but we have yet to fully think it through.

Schedule:

I am slightly behind schedule as I wanted to have the system fully integrated by this week. These are the elements I am missing: 1. recording while live feedback is being provided 2. triggering the start of a live feedback session with a button. However, I’m making good progress towards that and think it is very achievable for next week.

This week I focused on writing code specifically for demonstration purposes with the upcoming demo in mind, rather than working on completing the full system integration— showing that the buzzer interfaces with the tension algorithm and model on the RPi, the calibration interfaces with the web application, and video generated on the RPi interfaces with the web application.

Additionally, I was unable to write the Google form for the tension ground truth since we are waiting for Dr. Dueck to send us a tension detection rubric that we want to include in the form. Dr. Dueck has been away at a workshop in Vermont this week and thus has been delayed in providing the tension rubric.

Next week’s deliverables:

- Make a Google form and spreadsheet to collect and organize ground truth data from Prof Dueck and her students.

- Work with Danny to make the real recording with live feedback triggerable through a button on the web app.

- Record video while the live feedback is occurring.

- Work with Danny to ensure this video is accessible on the web app.

Less time-sensitive tasks:

- Investigate the buzzer buzzing frequency

- Start brainstorming the calibration code

- Experiment with optimizing the webcam mirroring onto the display

Verification of System on RPi:

FRAMERATE:

We have already been able to verify the frame rate at which the model runs on the RPi, since a feature to output the frame rate is already included in the precompiled model we are using. The frame rate of the model is around 22 FPS with both hands included in the frame.

To determine the frame rate at which our system is able to process data (it’s possible some of our additional processing for tension detection could slow it down) I have 2 ideas. The first is to continue to use the precompiled model’s feature to output the frame rate. Our code builds on top of the code used to run the precompiled model, so I believe any slowdown as a result of our processing will still be observed by the precompiled model’s frame rate output feature. My second idea was to find the average frame rate by dividing the total number of frames by the total length of the video. Both of these methods should result in a good idea of the frame rate at which our system processes video; additionally, I can use these 2 methods to cross-check each other.

I plan to find the framerate when there are:

- 2 hands in the frame (2 hands in the frame slows down the framerate of the model compared to 1 hand in the frame)

- Various amounts of buzzing (does the buzzer code affect frame rate?)

- Various piece types (does the amount of movement in the frame have an effect?)

LATENCY:

To find the latency of the system’s live feedback, I plan to subtract the time audio feedback (buzz) was given by the time tension was detected. To collect the time at which tension was detected, I plan to have the program print the times (sync-ed to the global clock) at which tension was detected. To collect the time at which live audio feedback was given, I plan to have another camera (with a microphone) that will also record the practice session; I will sync this second video with the global clock as well and mark down the (global) times at which audio feedback occurs. I will then match the times at which tension was detected with the times at which audio feedback was given to find the latency.

If I find that the frame rate varies given the different situations previously mentioned, then I will also run this latency test for those situations to find the effect of various framerates on latency.

POST-PROCESSING TIME:

My plan to verify the post-processing time is fairly simple. I plan to record the time it takes for videos of various lengths to post-process, specifically for 1-minute of video, 5-minutes of video, 10-minutes of video, and 30-minutes of video. I will start timing when the user is finished recording and stop timing once the video is finished processing.