This past week I gave the final presentation, continued integration, and focused on completing testing for my individual portions of the project.

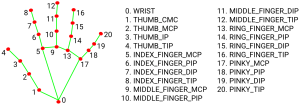

For integration, we hit a slight blocker. I had been using the handedness classifier available through Mediapipe to tell the two hands apart in our videos, but that classifier is not available in the Blaze hand landmarks version. As a workaround, I now rely on the detected landmarks themselves to distinguish the hands. For now, I simply label the hand whose middle-finger landmark is leftmost in the frame as the left hand and the hand whose middle-finger landmark is rightmost as the right hand. This rule has obvious failure cases (for example, when the hands cross, or when only one hand is detected), so I'll also work on using other landmarks (e.g., the thumb's position relative to the middle finger) to tell the hands apart.
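A minimal Python sketch of the current x-coordinate rule, plus the kind of thumb-versus-middle-finger cross-check mentioned above. It assumes each hand is a list of 21 normalized (x, y) landmarks indexed like MediaPipe's hand model (4 = thumb tip, 9 = middle-finger MCP); treating landmark 9 as the "middle" landmark and all function names here are hypothetical, not our actual code. Which side a left thumb appears on depends on camera mirroring and on whether the palm or the back of the hand faces the camera, so that mapping is a parameter rather than hard-coded.

```python
from typing import Optional, Sequence, Tuple

# Assumption (hypothetical): each hand is a sequence of 21 (x, y) landmarks in
# normalized image coordinates, indexed like MediaPipe's hand landmark model.
THUMB_TIP = 4
MIDDLE_FINGER_MCP = 9  # assumed stand-in for the "middle" landmark

Hand = Sequence[Tuple[float, float]]


def label_by_x(hands: Sequence[Hand]) -> Optional[Tuple[int, int]]:
    """Return (left_index, right_index) using the middle-finger x-coordinate.

    The hand whose middle-finger landmark is leftmost in the frame is labeled
    'left'. Returns None unless exactly two hands are detected, because the
    rule cannot decide anything from a single hand.
    """
    if len(hands) != 2:
        return None
    x0 = hands[0][MIDDLE_FINGER_MCP][0]
    x1 = hands[1][MIDDLE_FINGER_MCP][0]
    return (0, 1) if x0 <= x1 else (1, 0)


def thumb_right_of_middle(hand: Hand) -> bool:
    """True if the thumb tip is further right in the frame than the middle finger."""
    return hand[THUMB_TIP][0] > hand[MIDDLE_FINGER_MCP][0]


def thumb_agrees(hands: Sequence[Hand], labels: Tuple[int, int],
                 left_thumb_on_right: bool) -> bool:
    """Check x-based labels against the thumb-side cue.

    `left_thumb_on_right` encodes the camera setup (mirroring, palm vs. back
    of hand toward the camera), which decides where a left thumb appears.
    """
    left, right = labels
    return (thumb_right_of_middle(hands[left]) == left_thumb_on_right
            and thumb_right_of_middle(hands[right]) != left_thumb_on_right)
```

Running `thumb_agrees` on each frame would flag frames where the two cues disagree, which are the frames most worth inspecting by hand.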

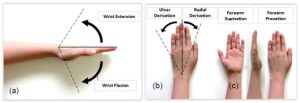

For testing, I've continued verifying the landmarks and the tension algorithm. For landmark verification, I've finished confirming that Blaze and Mediapipe produce the same angles: Jessie ran some technique clips through Blaze that I had already processed with Mediapipe, and I plotted the two sets of angle outputs against each other.

For tension testing, I've organized the datasets we can verify against; more on this is in the team report. Tomorrow I'll run the videos through the system and record whether each output matches the label given to that video, along with any false positives and false negatives.
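A minimal Python sketch of both checks, under stated assumptions: a joint angle is computed from three landmarks with the standard dot-product formula (our actual angle definitions may differ), the Blaze and Mediapipe angle series are aligned frame by frame, and each tension-test video carries a single binary tense/not-tense label. All function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at joint b between segments b->a and b->c (2D or 3D)."""
    v1 = [ai - bi for ai, bi in zip(a, b)]
    v2 = [ci - bi for ci, bi in zip(c, b)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("degenerate joint: coincident landmarks")
    cos_theta = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    # Clamp to guard against floating-point drift slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def compare_series(mediapipe: Sequence[float], blaze: Sequence[float]) -> Dict[str, float]:
    """Frame-by-frame comparison of two aligned angle series (degrees)."""
    if not mediapipe or len(mediapipe) != len(blaze):
        raise ValueError("series must be non-empty and the same length")
    diffs = [abs(m - b) for m, b in zip(mediapipe, blaze)]
    return {"mean_abs_diff": sum(diffs) / len(diffs), "max_abs_diff": max(diffs)}


def tally(results: Sequence[Tuple[bool, bool]]) -> Dict[str, int]:
    """Count outcomes from (labeled_tense, predicted_tense) pairs, one per video."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for labeled, predicted in results:
        if predicted and labeled:
            counts["tp"] += 1
        elif predicted:
            counts["fp"] += 1  # system flagged tension the label does not show
        elif labeled:
            counts["fn"] += 1  # system missed labeled tension
        else:
            counts["tn"] += 1
    return counts
```

A summary number such as the maximum absolute difference complements the plots: it makes "the same angles" checkable against an explicit tolerance.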