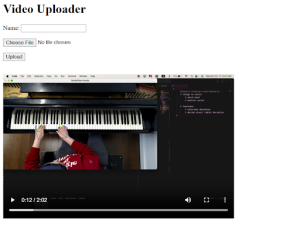

This week, I continued putting the videos and their associated graphs onto the web application. The graphs are still rudimentary, but they show whether or not there is tension over the course of the video. I looked into syncing the video with the graph, but it is more complex than I expected. I was not sure how to implement it and I didn’t think it was essential to the web application, so I skipped it for now. I fixed all the bugs I had, and the videos and graphs now display on the web application. I also switched to an HTML video library for a better video display, since the default player lacks some features. There is still a small issue where videos fail to play. I am currently working with the videos provided by the model, and I have noticed that some of them are corrupted. I am not sure whether the model is saving the videos incorrectly or whether the problem is in my code. To narrow this down, I am going to test with regular videos I download off the internet, and I will work with Jessie if it turns out the model is outputting corrupted or invalid videos. Additionally, I worked on improving the instructions so that our users can clearly understand what is being asked of them.
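As a first step in telling a bad file apart from a bug in my own code, a quick header check like the one below could help. This is only a rough sketch: it assumes the model writes MP4 files (I have not confirmed the format), and the function names `has_ftyp_header` and `looks_like_mp4` are made up for illustration. MP4 files normally begin with a box whose type field, at bytes 4 through 7, is `ftyp`.

```python
def has_ftyp_header(data: bytes) -> bool:
    """Return True if the bytes start with an MP4-style 'ftyp' box.

    Assumption: the videos are MP4 (ISO base media format) files.
    Bytes 0-3 hold the box size and bytes 4-7 hold the box type.
    """
    return len(data) >= 8 and data[4:8] == b"ftyp"


def looks_like_mp4(path: str) -> bool:
    """Read the first few bytes of a file and check for the 'ftyp' box."""
    with open(path, "rb") as f:
        return has_ftyp_header(f.read(8))
```

A file that fails this check is probably not a valid MP4 at all. A file that passes can still fail to play, for example if it was cut off partway through writing or uses a codec the browser does not support, so this only rules out one kind of problem.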

My progress is still on schedule. I am not worried about falling behind.

For next week, I hope to fix the issue where the videos were not playing properly. I will also begin testing the new UI with different users. These users do not need to be pianists, as they will simply follow the instructions to set up the stand. We also have one integrated test planned for the UI. For the UI itself, I will clean up the graphs that display tension alongside the video. If I have time, I will also work on improving the look of the web application, such as changing the color scheme and moving components around, but these are minor, non-essential changes.