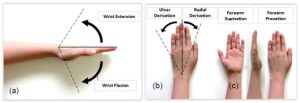

This week I worked on building out the initial pipeline for our hand posture tracking. Before starting the pipeline, I looked more into how wrist deviation is usually measured. I found that typically it relies on the middle joint as a reference position. First, a neutral position is recorded (solid vertical line in the photo). Then, as the wrist deviates, we can measure the angle between the middle solid line and the dotted lines to find the angle of deviation.

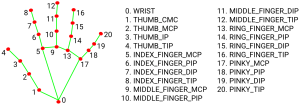

I then ran the MediaPipe hand landmark detection demo available on GitHub and used its code as a starting point for the rest of the pipeline. After looking at the landmarks available to me, I decided to use points 0 and 9 to form the vector for calculating the wrist deviation angle (see image below). I wrote a function to record a neutral position, then changed the code a bit to continuously find the vector from point 0 to point 9 and calculate the current angle of deviation relative to neutral. This allowed me to print out the live deviation of the wrist once a neutral position was recorded. A video demo of the running code is included here.
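As a rough illustration of the angle calculation described above, here is a minimal sketch. It is not the actual pipeline code: the function names are made up for this example, and it assumes the landmarks have already been pulled out of MediaPipe into plain `(x, y)` pairs indexed by landmark number (0 is the wrist, 9 is the base of the middle finger).

```python
import math

WRIST = 0        # MediaPipe hand landmark index for the wrist
MIDDLE_MCP = 9   # MediaPipe hand landmark index for the middle finger base


def hand_vector(landmarks):
    """Return the 2D vector from point 0 to point 9.

    `landmarks` is assumed to map landmark index -> (x, y); this layout is
    an assumption for the sketch, not MediaPipe's own result object.
    """
    x0, y0 = landmarks[WRIST]
    x9, y9 = landmarks[MIDDLE_MCP]
    return (x9 - x0, y9 - y0)


def deviation_degrees(neutral, current):
    """Unsigned angle in degrees between the neutral and current vectors."""
    dot = neutral[0] * current[0] + neutral[1] * current[1]
    norm = math.hypot(*neutral) * math.hypot(*current)
    if norm == 0:
        raise ValueError("zero-length vector; landmarks 0 and 9 coincide")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


# Record neutral once, then compare each new frame against it.
neutral = hand_vector({0: (0.5, 0.9), 9: (0.5, 0.5)})
live = hand_vector({0: (0.5, 0.9), 9: (0.7, 0.7)})
print(f"deviation: {deviation_degrees(neutral, live):.1f} degrees")
```

One thing to keep in mind: MediaPipe reports landmark x and y normalized by image width and height respectively, so on a non-square frame it is probably worth scaling them back to pixels before forming the vectors, otherwise the angles get stretched.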

A current issue with the pipeline is that it can’t tell which direction the deviation is in. Since I’m using a dot product to calculate the angle between my neutral vector and the live recorded vector, the angles I get as outputs are never negative (always between 0° and 180°), so a deviation to the left looks the same as one to the right. Thus, I’ll be adding another check to identify and then label the direction of deviation using more vector operations.
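One possible way to get the direction, sketched below, is to pair the dot product with the 2D cross product and feed both into `atan2`, which gives a signed angle. This is only one option and not necessarily what the final pipeline will use; the function name is hypothetical, and vectors are assumed to be plain `(x, y)` pairs.

```python
import math


def signed_deviation_degrees(neutral, current):
    """Signed angle in degrees from `neutral` to `current`, in (-180, 180].

    The magnitude matches the dot-product angle; the sign comes from the
    2D cross product (the z component of the 3D cross product).
    """
    nx, ny = neutral
    cx, cy = current
    dot = nx * cx + ny * cy
    cross = nx * cy - ny * cx
    return math.degrees(math.atan2(cross, dot))


neutral = (0.0, -1.0)  # pointing "up" in image coordinates (y grows downward)
print(signed_deviation_degrees(neutral, (1.0, -1.0)))   # tilted one way
print(signed_deviation_degrees(neutral, (-1.0, -1.0)))  # tilted the other way
```

The two tilts give angles of equal magnitude and opposite sign. Which sign corresponds to which anatomical direction depends on the hand being tracked and on the camera orientation, so that mapping would still need to be worked out separately.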

Another smaller issue is that the pipeline currently only works with one hand. I’ll add in the ability to track neutral positions & deviations for both hands while I’m finalizing and cleaning up the pipeline.

For next week, my plan is to fully flesh out the pipeline by fixing the issues identified above and restructuring the code to be more understandable and usable. We have a work session with Professor Dueck’s students on 9/25, and two-handed tracking will be added by then. During the session, I’ll be testing to make sure that the pipeline still works from an overhead angle with the piano keyboard as a backdrop. I’ll also record some video and photos to test with the following week. Finally, I’ll start looking at how to port the pipeline to work on the FPGA and assist Jessie as necessary on setup.

Link to GitHub w/ code. A new repo will be made for the team; I’m currently using this one as a playground to mess with the hand detection before transitioning to a more formal pipeline.