This week mainly consisted of debugging my audio localization solution and making the necessary changes to the hardware of SBB.

Hardware

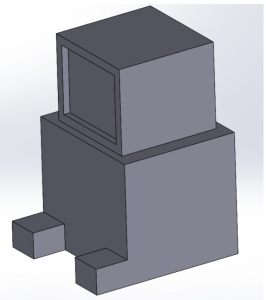

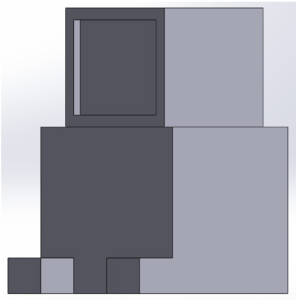

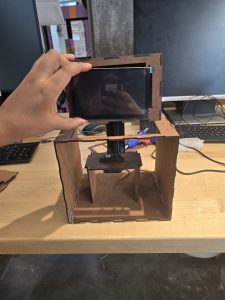

Because we decided to change the motors from servo to stepper, I had to change how the robot’s head mounts to the body. I was able to reuse most of the components from the previous version, but had to make the mounting stand slightly longer to stay in line with our use case requirement. The robot can now move its head smoothly and consistently.

My work on audio localization and its integration with the neck rotation mechanism has made significant progress, though some persistent challenges remain. Below is a detailed breakdown of my findings and ongoing efforts.

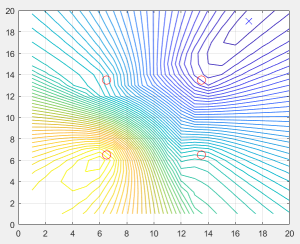

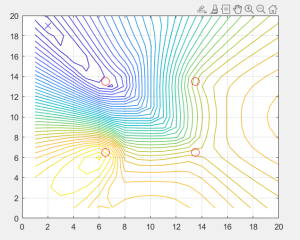

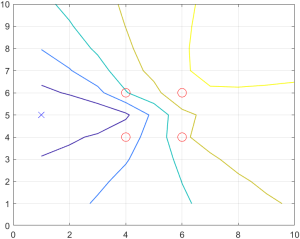

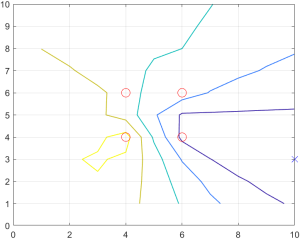

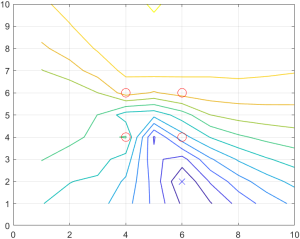

To evaluate the audio localization algorithm, I ran simulations with true source angles ranging from 0° to 180°. The estimated angles closely matched the true angles, with a mean absolute error (MAE) of 2.00°. The MAE is the average absolute difference between the true and estimated angles, so it gives a direct measure of the algorithm’s accuracy. This result is well within the intended target of a ±5° margin of error.
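For reference, the MAE calculation can be sketched in a few lines of Python. This is a minimal illustration, not the simulation code itself: the function name `mean_absolute_error` and the angle values are made up for the example.

```python
def mean_absolute_error(true_angles, estimated_angles):
    """Average of |true - estimated| over paired angles, in degrees."""
    if len(true_angles) != len(estimated_angles):
        raise ValueError("angle lists must have the same length")
    if not true_angles:
        raise ValueError("need at least one angle pair")
    errors = [abs(t - e) for t, e in zip(true_angles, estimated_angles)]
    return sum(errors) / len(errors)


# Hypothetical values for illustration only; these are not the simulation data.
true_angles = [0.0, 45.0, 90.0, 135.0, 180.0]
estimated_angles = [1.0, 47.0, 90.0, 132.0, 180.0]

mae = mean_absolute_error(true_angles, estimated_angles)
print(f"MAE = {mae:.2f} deg, within the 5 deg target: {mae <= 5.0}")
```

The same function can be applied to the full sweep of simulated angles to check the result against the ±5° target.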

To measure computational efficiency, I used Python’s time module to record the start and end times of the algorithm’s execution. Based on these measurements, the average computation time for a single audio cue is 0.0137 seconds (about 13.7 ms). This is fast enough for the algorithm to meet our real-time processing requirements.
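A timing measurement of this kind might look like the sketch below. It assumes `time.perf_counter` as the clock (the report only says the time library was used), and `estimate_angle_stub` is a placeholder standing in for the real localization routine.

```python
import time


def average_runtime(func, args_list):
    """Return the mean wall-clock time in seconds per call of func."""
    start = time.perf_counter()
    for args in args_list:
        func(*args)
    end = time.perf_counter()
    return (end - start) / len(args_list)


def estimate_angle_stub(samples):
    # Placeholder only: the real algorithm would compute an angle here.
    return sum(samples) / len(samples)


# 100 identical dummy cues, each passed as a one-element argument tuple.
cues = [([0.1, 0.2, 0.3],)] * 100
avg = average_runtime(estimate_angle_stub, cues)
print(f"average time per cue: {avg:.6f} s")
```

Averaging over many cues smooths out one-off scheduling delays that a single start/end measurement would include.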

In integrating audio localization with the neck rotation mechanism, I observed both promising results and challenges that need to be addressed.

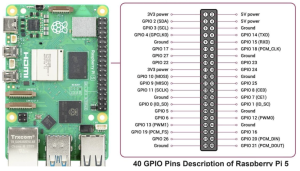

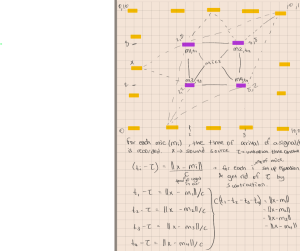

For audio cue detection, I tested the microphones to identify claps as valid signals. These signals were successfully detected when they exceeded an Arduino ADC threshold of 600. Upon detection, these cues are transmitted to the Raspberry Pi (RPi) for angle computation. However, the integration process revealed inconsistencies in serial communication between the RPi and the Arduino.
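The threshold logic can be illustrated with a short Python sketch. The actual detection runs on the Arduino, so this only mirrors the idea: `find_clap_onsets` and the sample readings are hypothetical, and only the threshold of 600 comes from my tests.

```python
CLAP_THRESHOLD = 600  # ADC counts, the threshold used in the tests above


def find_clap_onsets(adc_samples, threshold=CLAP_THRESHOLD):
    """Return the indices where a reading first rises above the threshold."""
    onsets = []
    above = False
    for i, value in enumerate(adc_samples):
        if value > threshold and not above:
            onsets.append(i)  # rising edge: start of a new cue
        above = value > threshold
    return onsets


# Hypothetical readings for illustration only.
readings = [512, 530, 610, 700, 540, 520, 650]
print(find_clap_onsets(readings))
```

Reporting only the rising edge means one clap that stays above the threshold for several samples is counted once rather than once per sample.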

Serial communication latency is typically 0.2 seconds or less, but I have observed occasional delays of 20 to 35 seconds. These delays disrupt the system’s responsiveness and make it hard to collect reliable data. One possible root cause is that the Arduino writes to the serial port continuously, which may conflict with its role in receiving data from the RPi. The data received on the RPi appears to be handled correctly, but I will validate it side by side to make sure the values are accurate. Attempts to visualize the data on the computer were too slow for the 44 kHz sampling rate, leaving gaps in the real-time analysis.
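The root cause is not confirmed yet. If the delays do come from a backlog of unread lines building up on the receiving side, one option to try is to keep only the newest complete line each time the buffer is read. The sketch below shows that idea on raw bytes; the function name and the comma-separated message format are assumptions for illustration, not the current implementation.

```python
def split_latest_line(buffer):
    """Split a receive buffer into (newest complete line, leftover bytes).

    Older complete lines are dropped so stale readings cannot pile up.
    Returns (None, buffer) when no full line has arrived yet.
    """
    head, sep, tail = buffer.rpartition(b"\n")
    if not sep:
        return None, buffer
    latest = head.split(b"\n")[-1].strip()
    return latest, tail


# Hypothetical buffer: two complete lines plus a partial third one.
latest, leftover = split_latest_line(b"10,20\n30,40\n50,")
print(latest, leftover)
```

With pyserial, the bytes could come from something like `ser.read(ser.in_waiting)`, with the leftover bytes prepended to the next read. I have not tested this against the actual setup.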

Because of USB port issues on the RPi, I have temporarily moved testing to a laptop. However, this workaround has not fully resolved the latency issue.

Despite these challenges, the stepper motor has performed within expectations. A rotation from 0° to 180° took 0.95 seconds, which meets the target of under 3 seconds, assuming typical latency.
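As a rough check of the timing budget, the figures reported in this post can be added up. This assumes the three stages run one after another and that the 3-second target covers the whole chain from cue to completed rotation; both are my assumptions for the sake of the arithmetic.

```python
SERIAL_LATENCY_S = 0.2    # typical upper bound reported above
COMPUTE_TIME_S = 0.0137   # average localization time per audio cue
ROTATION_TIME_S = 0.95    # measured 0 to 180 degree rotation
TARGET_S = 3.0            # response-time target

# Assumes the stages are strictly sequential (no overlap).
total = SERIAL_LATENCY_S + COMPUTE_TIME_S + ROTATION_TIME_S
angular_speed = 180.0 / ROTATION_TIME_S  # degrees per second

print(f"total response time: {total:.4f} s (target < {TARGET_S} s)")
print(f"average angular speed: {angular_speed:.1f} deg/s")
print(f"margin: {TARGET_S - total:.4f} s")
```

Under typical latency this leaves a bit under 2 seconds of margin, which is why the 20 to 35 second serial delays are what breaks the requirement.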

Progress is slightly behind schedule, and the contingency plan for this is laid out in the Google Sheets document of the team weekly report.

Next Steps

Resolving the serial communication latency is my highest priority. I will focus on optimizing the serial read and write operations on both the Arduino and the RPi to prevent delays. Addressing the RPi’s USB port malfunction is another critical task, as it will let me move testing back to the intended hardware. If that cannot be fixed, I will resort to the contingency plan of using the webapp to compute the data. Over the final week, I will finalize all the tests I need for the report and finalize integration with my team.

Mounted microphones on the robot’s body

Assembled body of the robot

Assembled body of the robot, including the display screen