My time this week was split between preparing for the proposal presentation I delivered on Monday, determining design and implementation requirements, and figuring out exactly which components we needed to order and how they would interact with one another.

Because the proposal was early in the week, most of the preparation for it happened last week and over the weekend. On Sunday night and Monday morning, I spent most of my time rehearsing the presentation and making sure to drive home our use-case requirements and their justifications.

Most of my work this week involved outlining project implementation specifics and ordering the parts we need. Elliot and I compiled a list of parts, the quantities needed, and fallback options in case the project ends up being implemented differently than we currently plan. Namely, we are acquiring a depth-sensing camera from the ECE inventory in case we either can't rely on real-time data from the accelerometer/ESP32 system being transmitted and synchronized with the video feed, or can't accurately determine the locations of the rings using the standard webcam. The ordering process required us to work out the basic I/O for every component, especially the accelerometer and ESP32 [link], so we could order the correct connectors.
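One of the open questions behind the depth-camera fallback is whether accelerometer data can be lined up with the video feed at all. A minimal sketch of one way to do that, assuming (hypothetically) that every ESP32 sample and every video frame carries a timestamp in seconds on a shared clock, is to pair each frame with its nearest sample and flag pairs whose skew exceeds a tolerance. The function names and the `max_skew` tolerance are illustrative only, not part of our current design.

```python
from bisect import bisect_left


def nearest_sample(sample_times, t):
    """Return the index of the sample timestamp closest to t.

    sample_times must be non-empty and sorted in ascending order.
    Ties go to the earlier sample.
    """
    i = bisect_left(sample_times, t)
    if i == 0:
        return 0
    if i == len(sample_times):
        return len(sample_times) - 1
    before, after = sample_times[i - 1], sample_times[i]
    return i if after - t < t - before else i - 1


def align_frames(frame_times, sample_times, max_skew):
    """Pair each video frame timestamp with its nearest accelerometer sample.

    Returns a list of (frame_time, sample_index) tuples, where sample_index
    is None if the closest sample is more than max_skew seconds away.
    """
    pairs = []
    for t in frame_times:
        j = nearest_sample(sample_times, t)
        skew = abs(sample_times[j] - t)
        pairs.append((t, j if skew <= max_skew else None))
    return pairs


# Hypothetical data: accelerometer samples every 10 ms, three video frames.
samples = [0.00, 0.01, 0.02, 0.03]
frames = [0.004, 0.016, 0.5]
print(align_frames(frames, samples, max_skew=0.005))  # [(0.004, 0), (0.016, 2), (0.5, None)]
```

Getting the ESP32 and the computer onto a shared clock is its own problem; if many frames came back unmatched even with that solved, it would be one sign that the wireless link can't keep up.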

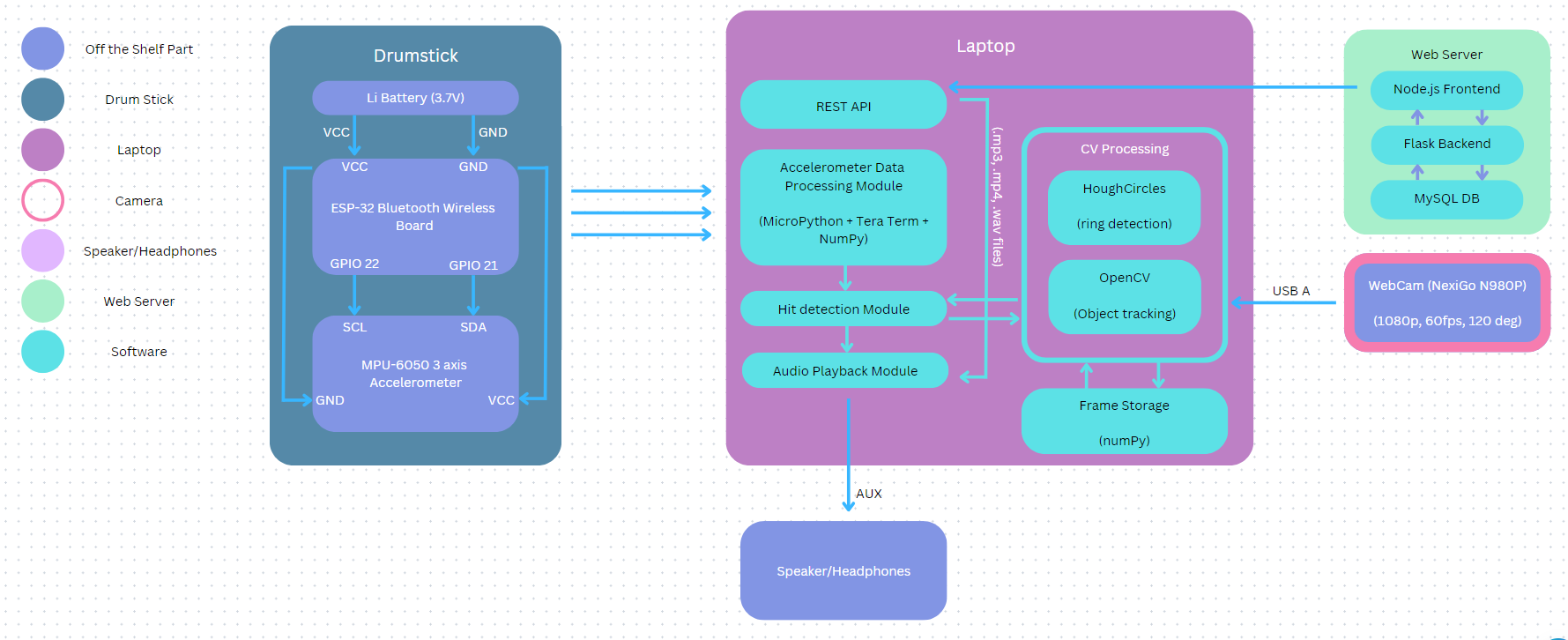

With a better understanding of our components and their I/O, I created an initial block diagram that is more detailed than the vague one we presented in our proposal. Although the diagram still needs some specifics, such as exactly which technologies we will use for the computer vision modules, it provides a solid base for understanding how the whole project fits together and how each component interacts with the others. It is displayed below.

Beyond this design and implementation work, I've started looking into how to build the REST API that needs to run locally on the user's computer. The endpoint will run on a local Flask server, much like the webapp, which will run on a remotely hosted Flask server. The user will specify the IP address of their locally running server in the webapp so it can dynamically send the drum set configurations and sounds to the correct server. Using a combination of origin checking (to ensure the POST request is coming from the correct webserver) and CORS (to handle the webapp and API running on different origins), the API will run continuously on the local machine, waiting for a valid configuration request. When it receives one, the local drum set model is updated so the custom sound files can be easily referenced while DrumLite is in use.
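The request handling described above might look roughly like the following. To keep the sketch dependency-free it uses plain Python functions rather than a Flask route; in Flask, `handle_config_request` would be called from a view function with `request.method`, `request.headers.get("Origin")`, and `request.get_data()`. `ALLOWED_ORIGIN`, `DrumSetModel`, and the `{"sounds": {...}}` payload shape are hypothetical placeholders, not decisions we have made.

```python
import json
from dataclasses import dataclass, field

# Hypothetical origin of the remotely hosted webapp (placeholder, not our real URL).
ALLOWED_ORIGIN = "https://drumlite.example.com"


@dataclass
class DrumSetModel:
    """Local drum set model: maps a drum/ring ID to a local sound file path."""
    sounds: dict = field(default_factory=dict)


def cors_headers(origin):
    """Return the CORS headers to attach for an allowed origin, else none."""
    if origin != ALLOWED_ORIGIN:
        return {}
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "POST, OPTIONS",
        "Access-Control-Allow-Headers": "Content-Type",
    }


def handle_config_request(method, origin, body, model):
    """Handle one request to the config endpoint.

    Returns (status_code, headers, response_body).
    """
    headers = cors_headers(origin)
    # Origin check: only the webapp's origin may configure the local server.
    if origin != ALLOWED_ORIGIN:
        return 403, headers, {"error": "origin not allowed"}
    # CORS preflight: browsers send OPTIONS before a cross-origin JSON POST.
    if method == "OPTIONS":
        return 204, headers, None
    if method != "POST":
        return 405, headers, {"error": "method not allowed"}
    try:
        sounds = json.loads(body)["sounds"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return 400, headers, {"error": "invalid configuration"}
    if not isinstance(sounds, dict):
        return 400, headers, {"error": "invalid configuration"}
    # Valid request: update the local model so the sound files can be looked up later.
    model.sounds.update(sounds)
    return 200, headers, {"status": "updated", "drums": sorted(model.sounds)}


model = DrumSetModel()
payload = json.dumps({"sounds": {"ring_0": "sounds/snare.wav"}})
status, _, body = handle_config_request("POST", ALLOWED_ORIGIN, payload, model)
print(status, body)  # 200 {'status': 'updated', 'drums': ['ring_0']}
```

One caveat on the design: the `Origin` header is set by the browser and can be forged by any non-browser client, so this check stops other websites from reconfiguring the local server but is not authentication. The Flask-CORS extension could also set the CORS headers instead of building them by hand.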

Our project seems to be moving at a steady pace, and I haven't noticed any reason to modify our timeline. In the coming week, I plan to update our webapp to reflect that we are now using rings to define the drum set layout rather than specifying the layout in the webapp, and to get a very basic version of the API endpoint running locally. Essentially, I want a proof of concept showing that we can send requests from the webapp and receive them locally. I've already shared the project repo with Elliot and Belle, but we still need to decide how to organize and separate the webapp code from the locally running code. I plan to look into the usual convention for this kind of application and then decide whether to create another repo to house our computer vision, accelerometer data processing, and audio playback modules.
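For the proof of concept, a short script could stand in for the webapp and post a test configuration to the local endpoint. This sketch uses only the standard library; the port (5000, Flask's development-server default), the `/config` path, the payload shape, and the optional `Origin` value are all assumptions for illustration.

```python
import ipaddress
import json
import urllib.request

# Assumptions for illustration: Flask's development server listens on port 5000
# by default, and "/config" is a placeholder route name.
DEFAULT_PORT = 5000
CONFIG_PATH = "/config"


def build_config_request(user_ip, config, port=DEFAULT_PORT, origin=None):
    """Build (without sending) a POST request carrying a drum set configuration.

    Raises ValueError if user_ip is not a valid IPv4 or IPv6 address.
    """
    ip = ipaddress.ip_address(user_ip)
    # IPv6 literals must be wrapped in brackets inside a URL.
    host = f"[{ip}]" if ip.version == 6 else str(ip)
    headers = {"Content-Type": "application/json"}
    if origin is not None:
        # Mimic the Origin header a browser would attach to the webapp's request.
        headers["Origin"] = origin
    return urllib.request.Request(
        f"http://{host}:{port}{CONFIG_PATH}",
        data=json.dumps(config).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_config(user_ip, config, origin=None, timeout=5.0):
    """Send the configuration to the local server and return its JSON reply."""
    req = build_config_request(user_ip, config, origin=origin)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the local server running, calling `send_config("127.0.0.1", {"sounds": {"ring_0": "sounds/snare.wav"}})` would exercise the whole path; `urllib` raises `urllib.error.HTTPError` for non-2xx replies, such as a 403 from a rejected origin.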