The most significant risk in our project right now is that the Jetson's connections to the Arduino (over USB) and to the camera are unstable. Occasionally, the Jetson suddenly stops recognizing the Arduino's USB port, and we have to reconnect the cable or restart the Jetson to get it working again. The camera also went unrecognized at one point, and it only started working again after we restarted the Jetson a couple of times. We do not yet know the root cause. To mitigate this risk, we plan to test our system with a different camera or a different cable to isolate the problem.
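To help narrow down when the devices drop out, one option is to log device-node changes on the Jetson while the system runs. The following is only a minimal sketch: the device paths (`/dev/ttyACM*` for the Arduino, `/dev/video*` for the camera) are assumptions about how the devices enumerate on our setup, and the function names are hypothetical.

```python
import glob
import time
from datetime import datetime

# Assumed device node patterns; the actual paths on the Jetson may differ.
DEVICE_PATTERNS = {
    "arduino": "/dev/ttyACM*",
    "camera": "/dev/video*",
}


def snapshot(patterns=DEVICE_PATTERNS):
    """Return the set of device nodes currently matching each pattern."""
    return {name: set(glob.glob(pattern)) for name, pattern in patterns.items()}


def diff_snapshots(before, after):
    """List (event, name, path) tuples for nodes that disappeared or appeared."""
    events = []
    for name in before:
        for path in sorted(before[name] - after.get(name, set())):
            events.append(("removed", name, path))
        for path in sorted(after.get(name, set()) - before[name]):
            events.append(("added", name, path))
    return events


def watch(interval_s=1.0, patterns=DEVICE_PATTERNS):
    """Poll until interrupted, printing a timestamped line on every change."""
    previous = snapshot(patterns)
    while True:
        time.sleep(interval_s)
        current = snapshot(patterns)
        for event, name, path in diff_snapshots(previous, current):
            print(f"{datetime.now().isoformat()} {event} {name} {path}")
        previous = current
```

Running `watch()` in a terminal during a test session would give timestamps for each disconnect, which we could then compare across cable and camera swaps.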

No changes to the system’s design are planned. Depending on how much time we have, there are some additional features we could explore adding. One possibility we discussed was ultrasonic sensors to detect the fullness of the bins. Once we are confident in the soundness of our existing design, we will look into these features.

No updates to the schedule have occurred.

Integration testing will consist of connecting multiple systems together and confirming that they interact as we expect. We will place an item in front of the sensor and verify that an image is captured and processed. After the classification is finished, we will confirm that the Jetson sends the correct value to the Arduino, and that the Arduino receives it and responds correctly. We will also verify that the time from placing an object to sending the classification signal is less than 2 seconds. For all of these tests we will look for 100% accuracy, which means that the systems send and receive all the signals we expect and respond with the expected behavior (i.e., the camera captures an image if and only if the sensor detects an object).
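The pass criteria above could be checked automatically from a log of test trials. This is a rough sketch, not our actual test harness: the `Trial` record, its field names, and the helper functions are all hypothetical, and it assumes timestamps are recorded in seconds on the Jetson.

```python
from dataclasses import dataclass
from typing import List, Optional

LATENCY_LIMIT_S = 2.0  # placement-to-signal limit stated in the test plan


@dataclass
class Trial:
    """One integration trial (hypothetical record format)."""
    sensor_triggered: bool
    image_captured: bool
    placed_at: Optional[float] = None       # seconds
    signal_sent_at: Optional[float] = None  # seconds
    sent_value: Optional[int] = None        # value the Jetson sent
    received_value: Optional[int] = None    # value the Arduino reported


def check_trial(t: Trial) -> List[str]:
    """Return a list of failure descriptions; an empty list means a pass."""
    failures = []
    # The camera should capture if and only if the sensor detected an object.
    if t.image_captured != t.sensor_triggered:
        failures.append("capture does not match sensor state")
    if t.sensor_triggered:
        if t.sent_value is None or t.sent_value != t.received_value:
            failures.append("Arduino did not receive the value the Jetson sent")
        if t.placed_at is None or t.signal_sent_at is None:
            failures.append("missing timestamps")
        elif t.signal_sent_at - t.placed_at >= LATENCY_LIMIT_S:
            failures.append("classification signal took 2 seconds or longer")
    return failures


def pass_rate(trials: List[Trial]) -> float:
    """Fraction of trials with no failures (0.0 for an empty list)."""
    if not trials:
        return 0.0
    return sum(1 for t in trials if not check_trial(t)) / len(trials)
```

With this, the 100% accuracy target corresponds to `pass_rate(trials) == 1.0` over the recorded trials.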

For end-to-end testing, we will test the entire recycling process by placing an item on the door and observing the system’s response. As the system runs, we will monitor the CV system’s classification, the Arduino’s response, whether the item makes it into the correct bin, and whether the system resets. Here, we are mainly looking for the operation time to be under 7 seconds, as specified in the user requirement. We will also confirm our accuracy measurements from the individual system tests.

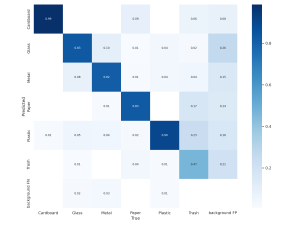

(Our current graph with stubbed data)