One concern is that the acrylic we are using for the swinging door is clear, which we think could interfere with the CV model’s ability to classify objects. To manage this risk, we plan to either paint the acrylic or cover it with paper or tape so that it is a solid color.

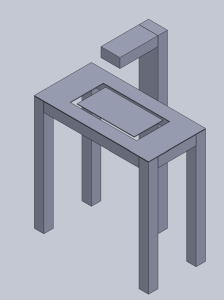

We have also built the structure of the recycling bin, as well as the post to which we plan to attach the camera. However, we noticed that at the current mounting height, the camera may not be far enough from the platform for the entire object to fit in frame. To fix this, we will rebuild the post so that the camera sits higher. We have tested with a few objects to find the best height for the camera.
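The relationship between camera height and what fits in frame can be estimated with basic geometry. The following is a minimal sketch, not the procedure we used (we found the height by testing with objects). The function name, the 60-degree field of view, and the 10-inch object width are all hypothetical values chosen for illustration, and the model ignores object height and lens distortion.

```python
import math


def min_camera_height(object_width_in: float, fov_deg: float, margin: float = 1.1) -> float:
    """Estimate the smallest camera-to-platform distance (in inches) at which
    an object of the given width fits inside the camera's field of view.

    Assumes a simple pinhole model with the camera pointing straight down
    at a flat object. `margin` adds extra room around the object.
    """
    if not 0 < fov_deg < 180:
        raise ValueError("fov_deg must be between 0 and 180 degrees")
    half_angle = math.radians(fov_deg) / 2
    # Half of the object width must fit inside half of the viewing angle.
    return margin * (object_width_in / 2) / math.tan(half_angle)


if __name__ == "__main__":
    # Hypothetical numbers: a 10-inch-wide object and a 60-degree field of view.
    height = min_camera_height(object_width_in=10, fov_deg=60)
    print(f"Estimated minimum camera height: {height:.1f} in")
```

For tall objects, the distance that matters is from the camera to the top of the object, so the real post would need to be higher than this estimate.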

We decided to switch back to woodworking instead of the pre-built structure because the shelf did not arrive on time. Fortunately, we were able to get some help from someone with woodworking experience and finished building the structure of our product within a couple of hours.

We are also moving the ultrasonic sensor to the door instead of keeping it next to the camera above the table. We found that the camera needs to be higher than the current holder height (12 inches), but at that distance the ultrasonic sensor would be less accurate. We plan to mount the sensor in front of the motor, facing sideways across the door, so that it can detect items as they are placed.
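With the sensor facing across the door, detection reduces to noticing that the measured distance is shorter than the distance measured when the door is empty. The sketch below illustrates that idea under the assumption that the sensor reports an echo round-trip time in microseconds, as common hobby ultrasonic modules do. The function names, the 30 cm empty-door distance, and the 2 cm tolerance are hypothetical and not taken from our firmware.

```python
# Approximate speed of sound in air at room temperature, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert an echo round-trip time (microseconds) to a one-way distance (cm)."""
    # The pulse travels to the obstacle and back, so halve the total path.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def item_present(distance_cm: float, empty_distance_cm: float, tolerance_cm: float = 2.0) -> bool:
    """Report an item when the reading is clearly shorter than the empty-door reading."""
    return distance_cm < empty_distance_cm - tolerance_cm


if __name__ == "__main__":
    EMPTY_DOOR_CM = 30.0  # hypothetical distance across the empty door
    for echo_us in (1750, 600):  # hypothetical echo times
        distance = echo_to_distance_cm(echo_us)
        print(f"{distance:.1f} cm, item present: {item_present(distance, EMPTY_DOOR_CM)}")
```

The tolerance keeps small fluctuations in the empty-door reading from being reported as items.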

Building the mechanical parts put us slightly behind schedule, but we are close to finishing the build and should be able to start final testing next. The delay did not cause any major changes to our schedule.

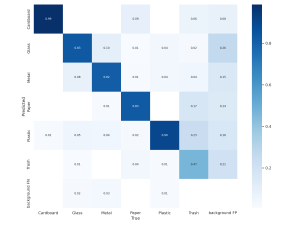

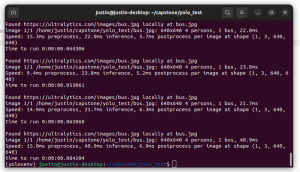

CV Unit Tests:

I tested the YOLO model’s performance by running detection on a test dataset of 133 trash/recycling images. These were images that weren’t used in the training or validation steps, so the model had never seen them before. I evaluated the model’s performance using the percentage of images classified correctly. I initially found that the model was not able to detect certain objects that were not well-represented in the training dataset. For example, the original training dataset did not include many milk jugs, and the model failed to classify milk jugs as plastic in testing. I retrained a new version of the model on a much larger dataset, and accuracy improved dramatically. I also used this testing to tweak the threshold confidence score at which the model would classify an item.
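The accuracy metric and the threshold tuning described above can be sketched as follows. This is a hedged illustration rather than the actual evaluation script: the tuple layout, the function names, and the optional `fallback_label` (a default class for low-confidence items) are assumptions, and the sample results are made up.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# (true_label, predicted_label or None if nothing was detected, confidence)
Result = Tuple[str, Optional[str], float]


def accuracy_at_threshold(
    results: Sequence[Result],
    threshold: float,
    fallback_label: Optional[str] = None,
) -> float:
    """Fraction of images classified correctly at a given confidence threshold.

    Predictions below the threshold are replaced by `fallback_label`
    (hypothetical behavior); with no fallback they count as incorrect.
    """
    if not results:
        raise ValueError("results must not be empty")
    correct = 0
    for true_label, predicted, confidence in results:
        if predicted is None or confidence < threshold:
            predicted = fallback_label
        if predicted == true_label:
            correct += 1
    return correct / len(results)


def sweep_thresholds(
    results: Sequence[Result],
    thresholds: Sequence[float],
    fallback_label: Optional[str] = None,
) -> Dict[float, float]:
    """Accuracy for each candidate threshold, to compare them side by side."""
    return {t: accuracy_at_threshold(results, t, fallback_label) for t in thresholds}


if __name__ == "__main__":
    # Made-up sample results, for illustration only.
    sample: List[Result] = [
        ("plastic", "plastic", 0.90),
        ("trash", "plastic", 0.40),
        ("paper", "paper", 0.30),
    ]
    print(sweep_thresholds(sample, [0.25, 0.5], fallback_label="trash"))
```

Without a fallback class, raising the threshold can only lower this accuracy, so a sweep like this is most informative when low-confidence items are routed to a default bin.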

Web App Unit Tests:

I tested the web app’s components, through written unit tests and user testing, to make sure that everything displayed accurately on screen. The tests showed that everything displayed correctly, though information sometimes took a few seconds to load. I also tested the HTTP requests to the backend and the Jetson to make sure that they were made correctly and consistently, and both responded to the calls consistently.
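A consistency check for an HTTP endpoint could look like the sketch below, which sends the same request several times and records the success rate and the slowest response. It is an illustration only: the function names and the URL are hypothetical, and our actual tests may be structured differently. The `fetch` parameter exists so the check can be exercised without a live server.

```python
import time
import urllib.request
from typing import Callable, Dict


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Send a GET request and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def check_endpoint(
    url: str,
    attempts: int = 10,
    fetch: Callable[[str], int] = fetch_status,
) -> Dict[str, float]:
    """Call an endpoint repeatedly and summarize how reliably it answers."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    successes = 0
    slowest = 0.0
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            ok = fetch(url) == 200
        except OSError:  # covers URLError, HTTPError, and socket timeouts
            ok = False
        slowest = max(slowest, time.perf_counter() - start)
        if ok:
            successes += 1
    return {"success_rate": successes / attempts, "max_latency_s": slowest}


if __name__ == "__main__":
    # Hypothetical URL; replace with the real backend or Jetson endpoint.
    print(check_endpoint("http://localhost:8000/status", attempts=3))
```

Recording the slowest response alongside the success rate also gives a number for the few-second loading delays noted above.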

Hardware Unit Tests:

I tested each hardware component (ultrasonic sensor, servo motor) separately with different scripts to make sure that each worked as expected before combining them and implementing the serial communication with the Jetson. I tested different distances and angles to find what works best for our use case requirements. I also adjusted the delay time between operations based on how the components performed once they were combined.

Overall System Tests:

Mechanical construction of our project has started, so once we have finished and secured all the components together, we will start the overall system tests. These include placing different objects on the table and timing how long it takes for each object to be detected, classified, and sorted. We will also test the accuracy of the overall system by hand-classifying the test objects and checking how many of them are sorted into the correct bin. Finally, we will make sure that items sorted into a particular bin (i.e., recycling or trash) actually make it into the bin without falling out.
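One way to record and summarize these system tests is sketched below. The `Trial` fields and the `summarize` function are hypothetical names chosen for illustration, and the sample trials are invented, not measured results.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Trial:
    expected_bin: str     # hand-classified label, e.g. "recycling" or "trash"
    actual_bin: str       # bin the system sorted the item toward
    seconds: float        # time from placement to the end of sorting
    landed_in_bin: bool   # False if the item fell out or got stuck


def summarize(trials: List[Trial]) -> Dict[str, float]:
    """Compute sorting accuracy, landing rate, and timing over a set of trials."""
    if not trials:
        raise ValueError("trials must not be empty")
    n = len(trials)
    return {
        "accuracy": sum(t.expected_bin == t.actual_bin for t in trials) / n,
        "landed_rate": sum(t.landed_in_bin for t in trials) / n,
        "mean_seconds": mean(t.seconds for t in trials),
        "max_seconds": max(t.seconds for t in trials),
    }


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    trials = [
        Trial("recycling", "recycling", 2.0, True),
        Trial("trash", "recycling", 4.0, True),
    ]
    print(summarize(trials))
```

Keeping the landing result separate from the classification result makes it possible to tell mechanical failures apart from CV mistakes.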