I spent most of this week building the Kalman filter for our model output, conducting user tests, and working toward our upcoming deadlines. Here is what I did this past week:


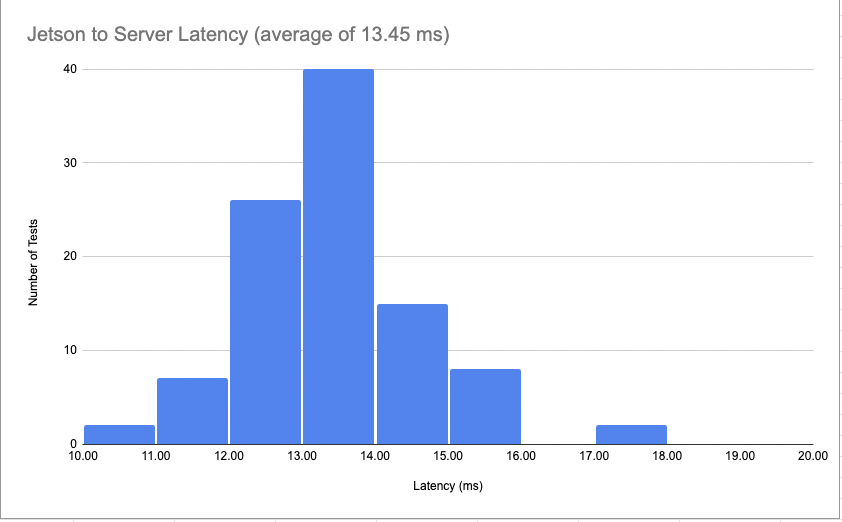

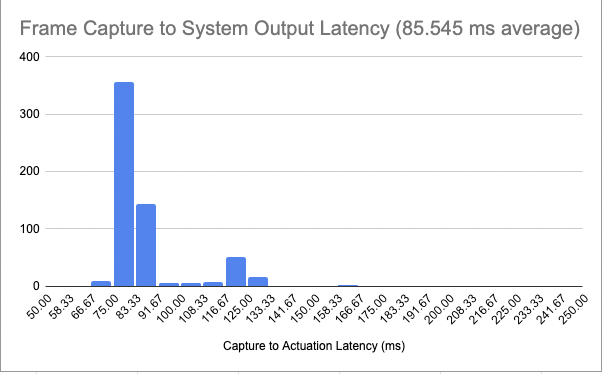

- Made and integrated a Kalman filter

  - Worked independently on writing the Kalman filter code for the model output

  - The goal of the filter is to reduce the noise in the model output

  - The filter works as intended: it reduces noise and suppresses unnecessary state transitions

  - It still needs to be tuned for the video and final demo
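This report doesn't describe the filter's internals, so the following is only a minimal sketch of one common approach. It assumes the model emits a single scalar score per frame and that the system's state is chosen by thresholding that score. `ScalarKalmanFilter`, `count_transitions`, the `q`/`r` values, the 0.5 threshold, and the sample scores are all hypothetical and are not taken from our code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScalarKalmanFilter:
    """Hypothetical 1-D Kalman filter with a constant-value (random-walk) state model.

    q: process noise variance (how quickly the true value may drift)
    r: measurement noise variance (how noisy each model output is)
    """
    q: float = 1e-3
    r: float = 1e-1
    x: float = 0.0  # current state estimate
    p: float = 1.0  # variance of the current estimate

    def update(self, z: float) -> float:
        # Predict: the state is modeled as constant, so only the uncertainty grows.
        p_pred = self.p + self.q
        # Update: blend the prediction with the new measurement via the Kalman gain.
        k = p_pred / (p_pred + self.r)
        self.x = self.x + k * (z - self.x)
        self.p = (1.0 - k) * p_pred
        return self.x


def count_transitions(values: List[float], threshold: float = 0.5) -> int:
    """Count how often a thresholded binary state flips between consecutive frames."""
    states = [v >= threshold for v in values]
    return sum(1 for a, b in zip(states, states[1:]) if a != b)


if __name__ == "__main__":
    # Made-up noisy per-frame scores hovering around 0.6 (illustrative only).
    raw = [0.62, 0.41, 0.71, 0.48, 0.66, 0.52, 0.45, 0.69, 0.58, 0.47]
    kf = ScalarKalmanFilter(q=1e-3, r=1e-1, x=raw[0])
    smoothed = [kf.update(z) for z in raw]
    print("raw transitions:", count_transitions(raw))
    print("smoothed transitions:", count_transitions(smoothed))
```

With these made-up scores, the raw sequence flips state 7 times, while the filtered sequence stays above the threshold the whole time. Tuning mostly comes down to the ratio of `r` to `q`: a larger `r` (or smaller `q`) smooths more aggressively but makes the filter slower to follow real changes.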

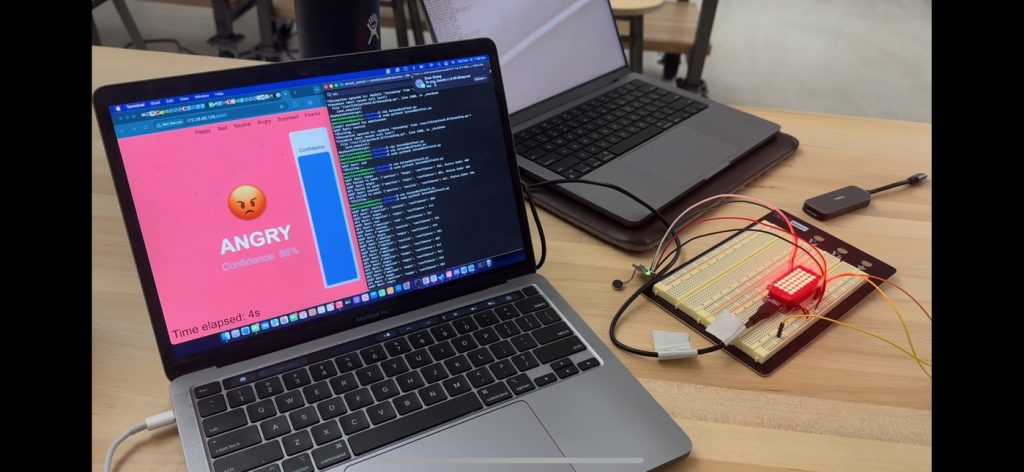

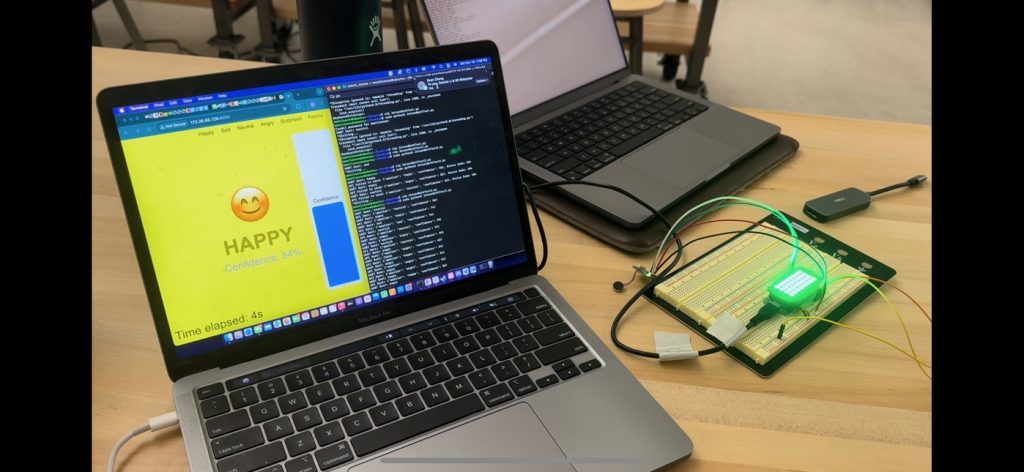

- Set up and tested the system in the demo room

  - Confirmed that the Jetson can connect over Ethernet in our demo room

- Began work on the poster and video

  - Added poster components related to the web app subsystem

- Conducted user tests with 3 people

  - Had them use the system and give feedback on the UI and on the system's usability and practicality

- Worked with Kapil on Jetson BLE integration. We researched a few options and ultimately found a suitable USB Bluetooth dongle.

Goals for next week:

- Tune the Kalman filter

- Further prepare the system for the video and demo

- Complete the remaining final deliverables and objectives