Status Report 4

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

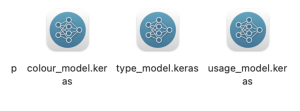

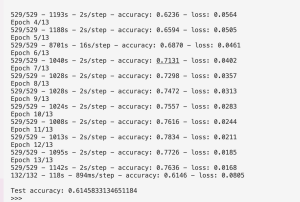

The most significant risk at the moment is our clothing classification. We are managing this risk by developing a new approach to classifying clothing that uses three separately trained models. This should help us reach the accuracy we need, since each model can focus on a single attribute of the item: one model will classify usage, another will classify item type, and the third will classify color. If we are unable to meet our classification accuracy design requirement, our contingency plan is to have the user confirm that the detected classifications are correct and, if they are not, let the user correct them.
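The three-model approach and its user-confirmation fallback can be sketched as below. This is only an illustration under stated assumptions: the names `ClothingLabels`, `classify_item`, and `confirm_labels` are hypothetical, and each model is assumed to be a callable that returns a `(label, confidence)` pair. The stub lambdas in the usage lines stand in for the trained ResNet50s.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Hypothetical interface: each model maps an image to (label, confidence).
Classifier = Callable[[object], Tuple[str, float]]


@dataclass
class ClothingLabels:
    usage: str
    item_type: str
    color: str


def classify_item(image: object, models: Dict[str, Classifier]) -> ClothingLabels:
    """Run the three single-attribute models one after another on the same image."""
    # Each model predicts only its own attribute; confidence is ignored in this sketch.
    usage, _ = models["usage"](image)
    item_type, _ = models["item_type"](image)
    color, _ = models["color"](image)
    return ClothingLabels(usage=usage, item_type=item_type, color=color)


def confirm_labels(predicted: ClothingLabels,
                   corrections: Optional[Dict[str, str]] = None) -> ClothingLabels:
    """Contingency step: the user accepts the prediction or overrides any field."""
    if not corrections:
        return predicted  # user confirmed all three labels
    return ClothingLabels(
        usage=corrections.get("usage", predicted.usage),
        item_type=corrections.get("item_type", predicted.item_type),
        color=corrections.get("color", predicted.color),
    )


# Stand-in models for illustration only (hypothetical outputs).
stub_models = {
    "usage": lambda img: ("casual", 0.91),
    "item_type": lambda img: ("t-shirt", 0.88),
    "color": lambda img: ("blue", 0.95),
}
labels = classify_item("photo.jpg", stub_models)
final = confirm_labels(labels, {"color": "navy"})  # user corrects only the color
print(final)  # ClothingLabels(usage='casual', item_type='t-shirt', color='navy')
```

Keeping each attribute in its own model means a user correction to one field leaves the other two predictions untouched.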

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Yes. We updated the plan for our outfit generation algorithm to take into account the user's preferences in addition to the clothing they own. This change is necessary because we want the outfits we generate to suit both the occasion and the user's style. It means we will need to add a new table to our database, but it should not cause schedule issues, as we had already allocated time to update our outfit generation algorithm. Going forward, we will make sure to complete the preference-related changes to the algorithm within the time we have set aside.
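One way the new preferences table could feed into outfit generation is sketched below using Python's built-in `sqlite3`. This is a hypothetical illustration, not our actual schema: the table names, columns, and weighting scheme are assumptions. It filters clothing by occasion (usage) and ranks candidates by how well they match the user's stored preferences, which a generator could then combine into outfits.

```python
import sqlite3

# Hypothetical schema: table and column names are illustrative only.
SCHEMA = """
CREATE TABLE clothing (
    id INTEGER PRIMARY KEY,
    item_type TEXT NOT NULL,
    usage TEXT NOT NULL,
    color TEXT NOT NULL
);
CREATE TABLE preferences (
    user_id INTEGER NOT NULL,
    attribute TEXT NOT NULL,
    value TEXT NOT NULL,
    weight REAL NOT NULL DEFAULT 1.0
);
"""


def candidate_items(conn, user_id, occasion):
    """Return items suited to the occasion, ranked by total matching preference weight."""
    # Items with no matching preference get a score of 0 via COALESCE.
    query = """
        SELECT c.id, c.item_type, c.color, COALESCE(SUM(p.weight), 0) AS score
        FROM clothing c
        LEFT JOIN preferences p
          ON p.user_id = ?
         AND ((p.attribute = 'color' AND p.value = c.color)
           OR (p.attribute = 'item_type' AND p.value = c.item_type))
        WHERE c.usage = ?
        GROUP BY c.id
        ORDER BY score DESC, c.id
    """
    return conn.execute(query, (user_id, occasion)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO clothing VALUES (?, ?, ?, ?)", [
    (1, "t-shirt", "casual", "blue"),
    (2, "jeans", "casual", "black"),
    (3, "blazer", "formal", "navy"),
    (4, "hoodie", "casual", "green"),
])
conn.executemany("INSERT INTO preferences VALUES (?, ?, ?, ?)", [
    (1, "color", "black", 2.0),
    (1, "item_type", "hoodie", 1.0),
])
# Jeans (preferred color) ranks first, then the hoodie, then the t-shirt; the blazer is excluded.
print(candidate_items(conn, 1, "casual"))
```

Storing preferences as weighted attribute/value rows keeps the table generic, so new preference types would not require schema changes.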

We also updated the way we classify clothing: we will now use three models instead of one. This change was necessary because our previous design did not reach the classification accuracy we wanted. It should not compromise our latency or Jetson storage requirements: the three individual ResNet50s are smaller in size than our original model, and running classification with a ResNet50 on a lower-spec Jetson model takes around 1 second, so classifying three times will take around 3 seconds. This is discussed in more detail in our design report. Moving forward, we will not host our application on the Jetson, so that the device can devote all of its compute power and storage to the three models.

Provide an updated schedule if changes have occurred.

Our schedule has not changed.

This is also the place to put some photos of your progress or to brag about a component you got working.

Photos of our progress are located in our individual status reports.

Additional Question:

Part A: Written by Alanis

Our product solution will encourage people not to purchase fast fashion products by recommending stylish, weather- and occasion-appropriate outfits from their own closets. Style Sprout allows users to make the most of their existing wardrobe. While this makes getting dressed in the morning easier, it also reduces the need to purchase new fast-fashion items, minimizing textile waste and resource consumption. As mentioned in Part C, the environmental benefits of steering people away from fast fashion help the whole globe, since the Earth's health and global warming affect everyone. Furthermore, fast fashion companies often exploit workers around the world, since producing cheap clothing can mean underpaying workers or keeping them in unsafe factory conditions. Fast fashion leads to many unethical labor practices, as mentioned in this article. Style Sprout encourages users to confidently reuse and restyle their current clothes, and this push away from fast fashion also withdraws support from fast fashion companies that exploit workers in countries such as Bangladesh, India, and China.

Part B: Written by Riley

Our product solution touches upon many cultural factors because clothing and attire are an inseparable part of culture. Clothing is deeply tied to the representation of culture, tradition, and identity, so we must consider how our solution might affect these factors. Another inescapable truth is that clothing is inherently personal. It is nearly impossible for an algorithm to capture and tailor suggestions to every user's unique style. Our solution therefore addresses this point by not using style as a parameter. Initially, recommendations will only be given as combinations that cover the chest and the legs, and they will not distinguish between styles beyond basic color matching. In this way, we intend to let users maintain their individuality and express their cultural values. Only after the user selects clothing will we partially incorporate their previous selections into future recommendations.

Another cultural implication of our project solution is the Western clothing bias inherent in our system. Even if we set aside our personal biases, the types of clothing included, the valid color schemes, and what is classified as "formal" or "casual" are all rooted in Western (specifically American) culture. It is therefore important for us to acknowledge that our solution is not representative of all cultures and all peoples, and is inherently limited in its usability for some. This issue cannot easily be solved without a massive undertaking, but it is nonetheless vitally important to consider and disclose.

Part C: Written by Gabriella

Our product solution addresses the environmental impact of fast fashion. Fast fashion is hugely detrimental to the environment, as it leads to increased waste and overconsumption. Style Sprout encourages users to make better use of their existing wardrobe, reducing the need for people to purchase new clothing. Through wardrobe management and outfit generation, our app aims to help users make more intentional use of their wardrobe.

We also hope to help decrease the pressure to participate in fast fashion trends by generating stylish outfits for our users that align with both convenience and environmental consciousness.