What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

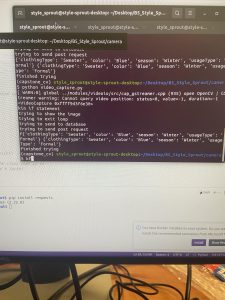

This week I fixed the camera bug that was preventing OpenCV from displaying images properly. I also scaled the captured image to a size appropriate for the model, and the program can now continuously listen for keyboard input and take a picture 4 seconds after a key press. The OpenCV issue turned out to be that I needed to explicitly set the frame rate and resolution, and to add a delay so that OpenCV had time to display the image.
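A minimal sketch of the capture loop described above, assuming the camera is opened through `cv2.VideoCapture` (a CSI camera on the Xavier NX would typically need a GStreamer pipeline string instead). The resolution, frame rate, model input size, trigger key and output filenames are hypothetical placeholders, and reading the key through the OpenCV window is only one way to detect keyboard input. It shows the two fixes from the text: requesting the mode explicitly, and calling `cv2.waitKey`, which is what gives HighGUI time to actually draw the window.

```python
import time

CAPTURE_DELAY_S = 4.0                    # from the text: capture 4 s after the key press
FRAME_W, FRAME_H, FPS = 1280, 720, 30    # hypothetical; use a mode the camera supports
MODEL_INPUT = (224, 224)                 # hypothetical model input size (width, height)


class DelayedTrigger:
    """Arms on a key press and fires once, `delay` seconds later."""

    def __init__(self, delay: float):
        self.delay = delay
        self.fire_at = None

    def arm(self, now: float) -> None:
        # Pressing the key again restarts the countdown.
        self.fire_at = now + self.delay

    def should_fire(self, now: float) -> bool:
        if self.fire_at is not None and now >= self.fire_at:
            self.fire_at = None          # one capture per key press
            return True
        return False


def run_capture(device: int = 0, trigger_key: str = "c") -> None:
    import cv2  # imported here so DelayedTrigger can be used without OpenCV installed

    cap = cv2.VideoCapture(device)
    # Request resolution and frame rate explicitly instead of relying on driver defaults.
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, FRAME_W)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, FRAME_H)
    cap.set(cv2.CAP_PROP_FPS, FPS)
    trigger = DelayedTrigger(CAPTURE_DELAY_S)
    shot = 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            cv2.imshow("preview", frame)
            # waitKey pumps the GUI event loop (without it imshow never draws) and
            # returns the pressed key code, or -1 if none arrives within 1 ms.
            key = cv2.waitKey(1) & 0xFF
            if key == ord("q"):
                break
            if key == ord(trigger_key):
                trigger.arm(time.monotonic())
            if trigger.should_fire(time.monotonic()):
                # Downscale to the model's input size; INTER_AREA suits shrinking.
                small = cv2.resize(frame, MODEL_INPUT, interpolation=cv2.INTER_AREA)
                cv2.imwrite(f"capture_{shot:03d}.jpg", small)
                shot += 1
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Keeping the countdown in a small class with an injected clock keeps the preview loop non-blocking (no `time.sleep(4)` freezing the window) and lets the timing logic be checked without a camera attached.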

Additionally, on Saturday I worked with Gabi to set up the Xavier NX with the database and API. We configured the hardware, finalized the endpoints for sending and storing data, and connected the server to the embedded computer. So far I have gotten the device to send dummy data to the backend server, which then runs a SQL query to add the items to the database.
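The report doesn't name the database engine or schema, so the sketch below of the server-side insert uses Python's built-in `sqlite3` as a stand-in; the `items` table and its `name`/`quantity` columns are hypothetical. The point it illustrates is inserting the received items with a parameterized query inside a single transaction.

```python
import sqlite3
from typing import Iterable, Mapping

# Hypothetical schema standing in for the project's real table.
SCHEMA = """
CREATE TABLE IF NOT EXISTS items (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    name TEXT NOT NULL,
    quantity INTEGER NOT NULL
)
"""


def insert_items(conn: sqlite3.Connection, items: Iterable[Mapping]) -> int:
    """Insert every item sent by the device; returns the number of rows written."""
    rows = [(item["name"], int(item["quantity"])) for item in items]
    # `with conn` commits if the block succeeds and rolls back if it raises,
    # so a bad item never leaves a half-written batch behind.
    with conn:
        conn.executemany("INSERT INTO items (name, quantity) VALUES (?, ?)", rows)
    return len(rows)
```

The `?` placeholders let the driver handle quoting, so values arriving from the device cannot change the SQL statement itself.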

Below are images of my successful POST request via FastAPI. The left image shows the test arguments I passed in being mirrored back, which is how I tested the endpoint. The right image shows the FastAPI success, the small green OK at the bottom. The couple of red failures above it were issues that have since been fixed 🙂
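The mirroring check can be sketched from the device side with only the Python standard library. This assumes the test endpoint returns the JSON body it received; the URL and payload fields are hypothetical, and the real client may well use a different HTTP library.

```python
import json
import urllib.request


def post_json(url: str, payload: dict, timeout: float = 5.0) -> tuple[int, dict]:
    """POST `payload` as JSON and return (HTTP status, decoded JSON response)."""
    body = json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status, json.loads(resp.read().decode("utf-8"))


def endpoint_mirrors(url: str, payload: dict) -> bool:
    """True if the endpoint answers 200 OK and echoes back exactly what was sent."""
    status, echoed = post_json(url, payload)
    return status == 200 and echoed == payload


# Example (hypothetical address of the backend's test route):
# endpoint_mirrors("http://192.168.1.10:8000/items", {"item": "test", "count": 3})
```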

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is on schedule and the project is nearly ready for the demo. Some more testing will be done between now and Wednesday, but no major new features need to be added before the demo.

What deliverables do you hope to complete in the next week?

Next week I hope to set up a free trial through AWS Educate to gain access to S3 file storage. This should let us send and store images so they can be displayed to the user as a visual. I also hope to detect input from the physical button and use it as the trigger to take a picture.

Verification Tests:

I tested the camera by checking whether it displayed consistently across different boot-ups and at each of the available frame rates and resolutions. With the GUI, the camera displayed consistently and showed no issues during testing.

Additionally, I tested the OpenCV code by running fifty separate image capture requests and confirming that each time the event triggered as expected and OpenCV displayed the captured image. This succeeded in all fifty runs. Further testing will be done once the model is integrated, to check whether results can be generated within 3 seconds for a sample dataset of fifty images taken by the camera. However, since not all of the models are ready and loaded onto the Jetson yet, this requirement will be tested in the coming weeks.
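Once the models are on the Jetson, the 3-second requirement could be checked with a small timing harness like the one below. It assumes the budget applies to each image individually (the requirement could also be read as a budget for the whole set of fifty), and `infer` stands in for whatever function runs the model; both are assumptions.

```python
import statistics
import time
from typing import Callable, Sequence

LATENCY_BUDGET_S = 3.0  # requirement from the text; assumed here to be per image


def check_latency(infer: Callable[[object], object],
                  samples: Sequence[object],
                  budget_s: float = LATENCY_BUDGET_S) -> dict:
    """Time `infer` on each sample and summarize the results against the budget."""
    if not samples:
        raise ValueError("need at least one sample to time")
    timings = []
    for sample in samples:
        start = time.perf_counter()  # monotonic, high-resolution clock
        infer(sample)
        timings.append(time.perf_counter() - start)
    passed = sum(t <= budget_s for t in timings)
    return {
        "n": len(timings),
        "mean_s": statistics.mean(timings),
        "max_s": max(timings),
        "passed": passed,
        "all_within_budget": passed == len(timings),
    }
```

Reporting the worst-case time and the pass count, not just an overall pass/fail, makes it easier to see how close the model is to the limit on the fifty camera images.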