This week I gave the final presentation to the class and discussed the steps we have taken so far to verify that our design meets the design requirements we set.

Bhavik’s Status Report for 11/30/2024

This week was mostly spent planning and ordering the remaining components for the project (GPS, power distribution, and RPi attachment). I also cleaned up the code from the demo and prepared it for the final demo. I also spent some time making sure that the radio communication works bidirectionally. In the next stage of testing we want to send signals from the base station to the drone, so we need to make sure this step works. Next week we will continue to integrate our system and test the drone so that we can start flying.

Additionally, a majority of the time was spent creating the final presentation slides. I created a full outline of the presentation. I will continue to work on the slides by rerunning some demo scripts to capture more media for the presentation. Since I will be the one presenting this time, I have also begun to practice and gather the required information.

Throughout this project, there were a lot of new tools and knowledge I had to learn in order to accomplish my tasks. Working with many different hardware systems, I had to learn about efficient methods to communicate between systems, the various communication protocols, and how to interface with third-party tools. I also had to learn a lot about controls (PID specifically). My learning strategies included watching tutorials on related topics, looking at other projects’ documentation, and talking with friends who were knowledgeable about the specific topic. I also learned a lot by trial and error. For example, using the knowledge I gained by reading documents and watching videos, I was able to implement basic functionality, learn from the mistakes made there, and retry. This has helped me apply the theoretical knowledge quickly.

Bhavik’s Status Report for 11/16/2024

This week I focused my efforts on finalizing the CV and helping with drone controls.

At the beginning of the week I worked closely with Gaurav to get a model working on the RPi. We got the model working, but the frame rate was really low because the model wasn’t being accelerated. Thus, we spent time looking through the documentation to figure out how to compile the model. We tried compiling the model multiple times in various configurations but ran into various PC-related issues (e.g. incompatible architecture or insufficient hardware specs such as RAM). We resolved this problem by configuring an AWS EC2 instance and setting up additional memory. Once we did this, we were able to successfully compile the model after a lot of effort.

Once we put it on the RPi, the script wasn’t able to use the model to detect anything. We believe this is a compiler issue with the Hailo software, as it’s very new and doesn’t yet make it easy to use custom models. We tested this theory by compiling a base yolov8n model and uploading it to the RPi. This seems to have worked. Thus, we have decided to move forward with objects that the base YOLO model is already trained on. Another reason for the change is that we realized the drone propellers produce a lot of wind force, which would easily cause the balloon to move around a lot and cause the detection and tracking algorithms to fail.

On the drone PID controls side, I worked closely with Ankit to set up a drone testing rig. We tried multiple rigs and finally decided to use a tripod attachment with the drone on top. This has worked fairly well and we have been tuning with it. While tuning we found lots of hardware inconsistencies, which seem to be the major risk moving forward. The PID seems to work well one day, and the next day we seem to have gone backwards. We have spent multiple days trying to limit these hardware issues to ensure that the drone PID controls work well. There is still a lot of testing to do to make sure the drone is reliable.
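For readers unfamiliar with what is being tuned, here is a minimal sketch of a textbook PID loop for a single axis. It is not our flight code: the class name, the gains, and the output limit are all placeholder assumptions chosen for illustration.

```python
class PID:
    """Textbook PID controller for one axis (illustrative, not the flight code)."""

    def __init__(self, kp, ki, kd, out_limit):
        # kp, ki, kd are placeholder gains; out_limit caps the correction magnitude.
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        """Return the clamped correction for one control step of length dt seconds."""
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the first step, since there is no previous error yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, out))
```

Tuning on the rig amounts to adjusting the three gains until the measured angle settles on the setpoint without oscillating, which is why inconsistent hardware makes yesterday's gains look wrong today.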

For testing, we will gather the specific metrics that relate to our use case requirements. For example, for the computer vision model, we will measure whether the model accurately detects the object across frames. This gives us an accuracy figure that we can compare against the design requirements. For tracking, we will check whether the camera is able to provide directions on which way the drone should move. If the algorithm is able to keep the object centered, we know we have met the design requirement.
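The tracking check can be sketched as follows, assuming the detector returns a pixel bounding box. The function name, the `(x_min, y_min, x_max, y_max)` box layout, and the 10% deadband are assumptions for illustration, not our actual tracking code.

```python
def tracking_command(box, frame_w, frame_h, deadband=0.1):
    """Report where the detected object sits relative to the frame center.

    box is (x_min, y_min, x_max, y_max) in pixels (assumed layout).
    Returns (horizontal, vertical), each a side name or "hold".
    """
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    # Normalized offsets in [-1, 1]; image y grows downward.
    dx = (cx - frame_w / 2) / (frame_w / 2)
    dy = (cy - frame_h / 2) / (frame_h / 2)
    horizontal = "right" if dx > deadband else "left" if dx < -deadband else "hold"
    vertical = "down" if dy > deadband else "up" if dy < -deadband else "hold"
    return horizontal, vertical
```

The returned names describe where the object is in the image; how that maps to a drone motion depends on how the camera is mounted. A result of `("hold", "hold")` means the object is within the deadband around the center.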

Bhavik’s Status Report for 11/09/2024

This week, I spent a lot of time getting the drone ready for PID tuning and setting up the various components required to relay coordinates back to the base. I began the week by attaching all the motors to the drone, testing them to ensure they all worked, and setting up the polarity so the drone could properly take off.

However, as discovered last week, our propellers don’t fit properly onto the motors. Thus, we spent some time talking with TechSpark experts to figure out a method to drill into the propellers. After many trials with various techniques, we couldn’t find a proper method to drill into them. Therefore, we decided to change our motors instead so that the propellers are compatible. Once we changed the motors, we again had to test them to make sure they all worked and set their polarity correctly. We found that the required voltage for these motors is lower than for the ones we were previously using, so we realized we needed to order a different battery. To continue testing while the batteries were being ordered, we set software constraints to ensure the motors operated safely.

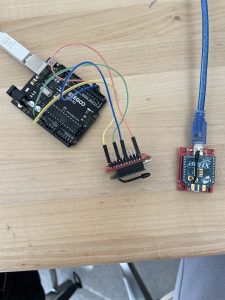

Separately, I spent some time setting up the radio mechanism for the drone. This required installing the correct firmware on the radios, setting up their configurations, and interfacing with them to read data. Once these aspects were figured out, we were able to send data between the radios. The next step was to connect one radio to the Arduino board and the other to my laptop (the base station). I wrote code so that the Arduino can talk to the radio and read its values. Once this was set up, I tested the radios by sending messages from each side to the other. We saw that the radios were working as expected.

The next steps are to interface with the GPS so we can transmit its values over the radio, and to connect the computer vision to the Arduino as well.

Bhavik’s Status Report for 11/02/2024

This week was mostly spent on building the actual drone and connecting all the electronic components. We began the week by putting the entire drone frame together. Once we had the basic drone frame together, we had to connect all the ESCs to the motors. Once this step was complete, I worked with Ankit to solder all the wires to the power joints. This involved soldering the wires, running a continuity test, and finally doing a final test with the actual power on. After soldering and performing a continuity test on all the wires, we were able to verify that the wires were placed in the correct spots and that there was no short circuit. Then we plugged in the battery and could see the motors were powered. This confirms that we can properly power the drone and have all motors working.

We then began to run basic tests on the ESCs by plugging the motors into the ESCs and connecting the ESCs to an Arduino. We also had a drone receiver connected to the Arduino. We programmed the Arduino so that it would take the input of the drone controller and power the motors equally. For example, at full throttle, all the motors would spin at their fastest rate. We ran the program and confirmed that the drone motors responded correctly to the inputs. The next step would be to test the PID control code by hanging the drone and checking whether it can stay stable. If not, we will need to fine-tune the PID control system. This part of the project is at the highest risk right now since the rest of the project relies on it working. If the drone can be controlled in a stable manner, the path planning should in principle be simple.
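The equal-throttle test can be sketched like this. The real program runs on the Arduino; this Python version only shows the mapping logic. The 1000 to 2000 microsecond pulse range is the common hobby RC convention and is an assumption here, as are the function and parameter names.

```python
def throttle_to_esc_pulses(rx_pulse_us, n_motors=4,
                           rx_min=1000, rx_max=2000,
                           esc_min=1000, esc_max=2000):
    """Map one receiver throttle pulse to the same ESC pulse for every motor.

    All pulse widths are in microseconds. Lowering esc_max is one way to
    cap the top motor speed in software (assumed, not our exact constraint).
    """
    # Clamp out-of-range receiver readings before scaling.
    rx = max(rx_min, min(rx_max, rx_pulse_us))
    fraction = (rx - rx_min) / (rx_max - rx_min)
    pulse = round(esc_min + fraction * (esc_max - esc_min))
    return [pulse] * n_motors
```

With these defaults, a full-throttle reading yields the maximum pulse on all four motors, and a reading below `rx_min` is treated as zero throttle.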

On the computer vision side of the project, we were delayed by the switch we made to the Raspberry Pi 5. The parts arrived at the end of the week and we are currently working on setting up the Pi. This process hopefully shouldn’t take too long. I will be prioritizing this step next week and getting the computer vision algorithms up and running on the Pi.

Bhavik’s Status Report for 10/26/2024

At the beginning of the week I tested the YOLO balloon detection at long range (20 ft and beyond). At 20 ft I ran over 50 trials and noticed that the balloon was detected 98% of the time. The confidence score was also very high, at >80%. I noticed that if I reduced the confidence threshold there were some false positives. I also tested the model with other objects to try to break it, and it continued to perform well. I placed objects such as small balls, phones, and hoodies in view, but the model seems very robust and wasn’t affected by the distractions. Overall, I’m very confident in the model’s ability to work.
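A detection rate like the one above can be computed with a few lines, assuming each trial is logged as the best confidence score for the balloon, or `None` when nothing was detected. The function name, the logging format, and the sample values are hypothetical and are not the recorded trial data.

```python
def detection_rate(trial_confidences, threshold=0.5):
    """Fraction of trials whose best detection meets the confidence threshold.

    trial_confidences: one entry per trial, a float score or None for a miss.
    """
    if not trial_confidences:
        raise ValueError("need at least one trial")
    hits = sum(1 for c in trial_confidences if c is not None and c >= threshold)
    return hits / len(trial_confidences)


# Hypothetical log of four trials, for illustration only.
sample_trials = [0.90, 0.85, None, 0.40]
rate = detection_rate(sample_trials, threshold=0.5)
```

Raising the threshold trades missed detections for fewer false positives, which matches what I saw when lowering it.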

Additionally, this week I worked closely with Gaurav to get the Kria set up for the vision model. We began by setting up the Vitis AI tool and attempted to load the YOLO model onto it. To do that, we first had to quantize the model, so we exported it to ONNX format and wrote a script to quantize it (from float to int8). We then tried to upload the quantized model to the Kria following the “quick start” guide. However, we realized that we would have to write our own script that used Xilinx’s library for the Kria to analyze the model and ensure it’s compatible with the DPU. Once we wrote the script and ran it on the Kria, we kept getting errors that the hardware isn’t compatible. We then tried multiple different approaches, such as using their ONNX and PyTorch libraries, to try to get Vitis AI working. After discussing with Varun (FPGA TA), we were recommended to look into using an AI-accelerated Raspberry Pi 5.
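To show what "float to int8" means, here is a minimal sketch of symmetric per-tensor quantization in plain Python. This is only the underlying arithmetic under simplifying assumptions. It is not the Vitis AI quantizer or our script, and the function names are made up for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the int8 values."""
    return [q * scale for q in quantized]


weights = [-1.0, 0.0, 0.25, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each restored value differs from the original by at most half a quantization step, which is the precision the model gives up in exchange for int8 hardware support.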

We looked into the pros and cons of the new hardware and found that it would be much more efficient and would simplify our issues. We will work towards fixing this issue next week and getting the model working on the new hardware.

Bhavik’s Status Report for 10/19/2024

The majority of the week went into working on the design review report. For this report, I worked on various sections of the paper including the introduction, use case, architecture, and many more. I spent quite some time with my team to understand the feedback received from the design presentation and discussed whether any changes were required to the original plan. We incorporated the needed feedback and wrote up a comprehensive design report.

I also put some time into looking at how to interface with the XBee radios to begin communication with the drone. To test any of the code I write, I need the hardware first. Therefore, I placed orders for two radio devices (one for the drone and one for the base station, my laptop). I am on schedule but will need to put in some extra effort next week to make sure my code works as expected. Additionally, our previous order has come in and I was able to get a small start on building the actual drone frame. This work will continue next week with the team to finish building the frame and attaching the motors for testing any initial code.

For the next week, Gaurav and I will work closely to get the vision system working on the Kria. We will upload the model weights to the Kria and hopefully get it running. We will also build out the frame as a team to get started on testing if possible.

Bhavik’s Status Report for 10/05/2024

At the beginning of the week, I spent time with my team finalizing our design presentation and making any required changes. Once we completed the work for the design presentation, I began working on our path planning algorithm. I wrote up pseudocode for our lawn-mowing algorithm and the various states our drone will have. The next step would be to write the code out in Arduino. To do this, I first need a good understanding of the hardware parts we have and how to interface with them. I began by looking into how to interface with our radio and wrote basic test benches in Arduino that I can use to verify my understanding and set up the radio correctly once it arrives.
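A lawn-mowing search pattern can be sketched as a back-and-forth sweep over a rectangle. This is an illustrative Python version, not the pseudocode I wrote. The rectangular search area, the corner origin, and the function and parameter names are assumptions.

```python
def lawnmower_waypoints(width, height, spacing):
    """Back-and-forth sweep over a width x height rectangle.

    The origin is one corner; rows are `spacing` apart along the y axis.
    Returns (x, y) waypoints in visiting order.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    waypoints = []
    n_rows = int(height // spacing) + 1
    for row in range(n_rows):
        y = row * spacing
        # Alternate sweep direction so each row starts where the last one ended.
        if row % 2 == 0:
            waypoints += [(0, y), (width, y)]
        else:
            waypoints += [(width, y), (0, y)]
    return waypoints
```

The row spacing would be chosen from the camera's ground footprint so that adjacent sweeps overlap slightly and no strip of the area goes unseen.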

I also began looking into how the altimeter and the GPS will send signals to the Arduino board. For path planning, we need to use these signals to determine the drone’s current position and its next step. I wrote some basic test benches to verify these components once they arrive in our order.

On the computer vision front of the project, we ordered the various parts required for testing. Once I get hold of a testing camera and the testing balloons, I plan to set up a testing structure to measure the accuracy of the trained model and verify that it can detect the balloon up to 20 ft away. I will do this by mounting a camera on a stand and using a tape measure to walk back 20 ft from the camera. Then, we can position the balloon in various places in the frame to make sure the model is able to detect it. We can also place other random objects in view to make sure the model correctly ignores them.

Bhavik’s Status Report for 09/28/2024

During this week I spent time learning about computer vision, more specifically object detection. I took time to understand how YOLOv8 works and how we can use it for our project. For the MVP we will be using a balloon as the object to find. Therefore, I began by identifying a good dataset for training our YOLOv8 model. I had to look through various datasets and identify which ones have a diverse set of images and large amounts of training data. Once I found the dataset, I realized that YOLOv8 doesn’t support the labeling format used by the dataset (VIA format). Thus, I researched methods to convert VIA format to COCO format. To verify the correctness of the dataset, I wrote a script to visualize it after converting it to COCO format.

I then trained a model for 10 epochs to verify it was functioning as expected. I observed the loss and the testing images and noticed the trend seemed correct. Then, I trained a model for 50 epochs. Once trained, I visualized the model’s output on testing images and noticed that the bounding boxes were not always good.

Going back to the dataset, I realized that it was made for image segmentation and contained segmentation masks instead of bounding boxes. Therefore, I wrote another script to convert from segmentation masks to bounding box labels. I retrained the model for 50 epochs with the converted dataset. Once tested, I noticed good results. The next step is to write a program to take in live video and test the model in the real world.
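The mask-to-box conversion boils down to taking the extremes of each polygon. Below is a minimal sketch, assuming each mask is given as separate lists of x and y polygon points. The function names are made up for illustration and this is not the actual conversion script.

```python
def polygon_to_coco_bbox(xs, ys):
    """Tight box around a polygon, in COCO order [x_min, y_min, width, height]."""
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


def coco_bbox_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box to normalized [x_center, y_center, width, height]."""
    x, y, w, h = bbox
    return [(x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h]


# Hypothetical triangle-shaped mask in a 100 x 200 pixel image.
bbox = polygon_to_coco_bbox([10, 50, 30], [20, 40, 80])
yolo_box = coco_bbox_to_yolo(bbox, img_w=100, img_h=200)
```

The second helper is only needed when labels are written as normalized center-based boxes, which is the layout YOLO-style text labels use.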

Example of Yolov8 Nano model output (noise introduced to image to make it harder for detection):

Bhavik’s Status Report for 09/21/2024

At the beginning of this week, I spent a lot of time helping prepare the proposal presentation slides and meeting with the team to finalize the idea and use cases. I spent time with Gaurav creating slides such as the problem statement, solution, technical challenges, and testing.

After the presentation I reviewed the TA and professor feedback with the team to clarify our use case and make additional modifications. I also spent time writing a Jupyter notebook that will be able to train our computer vision YOLO model. I tested the code by running a sample training with 5 epochs. I also met with my team once again to write some code for the IMU and start reading data from it. We were able to measure and see readings from the gyroscope and accelerometer.

My progress is on schedule. For next week, I hope to complete the design presentation with my team and also work with Gaurav to help flash the FPGA and hopefully load a CNN model on to it.