After attending the ethics session on Monday, we split off and worked on our own areas for Wednesday. I was working on the second of three guides needed to set up HLS on the KRIA. This second guide covers installing PetaLinux, the OS needed to run the files we send from a laptop. Going through the guide took a bit of time, but I learned how to send files from my laptop to the KRIA over an Ethernet connection. There were a lot of settings to configure, and it took a while for the system to build. Here is a link to a picture of me working on the setup (it was too big to upload).

While the system was building, Jimmy was next to me working on adding a Kalman filter to his camera code. This sparked a conversation about the role of the KRIA, because we had previously assigned the Kalman filter to the KRIA. I wrote up the detailed reasoning and discussion in the team status report, but to summarize: we knew there would be uncertainty about the camera-to-KRIA connection, and we didn’t expect the camera to be this powerful. As a result, we are going to look into using a Raspberry Pi as a substitute for the KRIA. I met up with Varun, the FPGA TA guru, and explained our concerns with the KRIA. He agreed that the Raspberry Pi could be an easier solution, and we also talked through the limitations of the KRIA a little more.

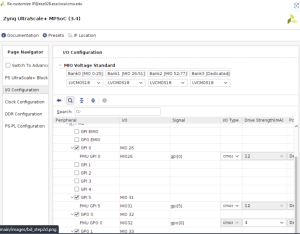

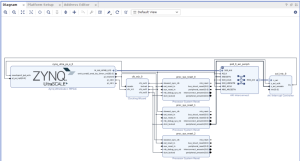

Specifically, the KRIA is a platform that houses an SoC and an FPGA together, so it can run programs and also use the FPGA for hardware acceleration via High-Level Synthesis (HLS). With HLS, you feed in a C program and the tool translates it into RTL that can be implemented on the FPGA. However, the FPGA on board does not have many floating-point units, which makes floating-point work challenging. Since we are doing the trajectory calculation and hope to use precise floating-point numbers for position estimation, the hardware might have a tough time handling all those floats. Another challenge is that HLS needs a C source file to translate. This means rewriting the Kalman filter algorithm in HLS-compatible C, which is a non-negligible workload.
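To give a sense of what "HLS-compatible C" could look like, here is a minimal sketch of a Kalman filter written with fixed-size arrays, no dynamic allocation, and no recursion, which are the usual constraints for synthesizable code. This is not our actual filter: the two-element state (position and velocity along one axis), the names `kf_state`, `kf_predict`, and `kf_update`, and the noise parameters `q` and `r` are all assumptions made for illustration.

```c
/* Illustrative 1-D constant-velocity Kalman filter.
 * Hypothetical names and model; not the team's real implementation. */

typedef struct {
    float x[2];     /* state estimate: x[0] = position, x[1] = velocity */
    float P[2][2];  /* state covariance */
} kf_state;

/* Predict step with F = [[1, dt], [0, 1]] and Q = q * I (assumed). */
void kf_predict(kf_state *s, float dt, float q)
{
    /* x = F x */
    s->x[0] += dt * s->x[1];

    /* P = F P F^T + Q, expanded by hand so there are no loops to unroll */
    float p00 = s->P[0][0] + dt * (s->P[1][0] + s->P[0][1])
              + dt * dt * s->P[1][1];
    float p01 = s->P[0][1] + dt * s->P[1][1];
    float p10 = s->P[1][0] + dt * s->P[1][1];
    float p11 = s->P[1][1];

    s->P[0][0] = p00 + q;
    s->P[0][1] = p01;
    s->P[1][0] = p10;
    s->P[1][1] = p11 + q;
}

/* Update step for a position-only measurement z, i.e. H = [1, 0].
 * r is the measurement noise variance (assumed scalar). */
void kf_update(kf_state *s, float z, float r)
{
    float y  = z - s->x[0];        /* innovation */
    float S  = s->P[0][0] + r;     /* innovation variance */
    float k0 = s->P[0][0] / S;     /* Kalman gain, position row */
    float k1 = s->P[1][0] / S;     /* Kalman gain, velocity row */

    /* x = x + K y */
    s->x[0] += k0 * y;
    s->x[1] += k1 * y;

    /* P = (I - K H) P */
    float p00 = (1.0f - k0) * s->P[0][0];
    float p01 = (1.0f - k0) * s->P[0][1];
    float p10 = s->P[1][0] - k1 * s->P[0][0];
    float p11 = s->P[1][1] - k1 * s->P[0][1];

    s->P[0][0] = p00;
    s->P[0][1] = p01;
    s->P[1][0] = p10;
    s->P[1][1] = p11;
}
```

A caller would initialize a `kf_state`, then alternate `kf_predict` and `kf_update` once per camera measurement. Every multiply, add, and divide above is a floating-point operation, which is exactly where the concern about the FPGA's floating-point resources comes from. Swapping `float` for a fixed-point type would be one way around that, at the cost of precision we would have to evaluate.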

Considering that the Raspberry Pi gets the same job done, just slower, we decided it’s worthwhile to evaluate whether the Pi is fast enough. As I touched upon in the team status report, the timeline is now even more stretched, since we need to reconfigure things to work with a Pi. However, this should still be much less work than using the KRIA, as far less setup and specialized knowledge is required. I will look into acquiring the Pi next week and getting it integrated ASAP. The team status report has a more detailed plan of attack that I wrote.