For the camera estimation and tracking pipeline on Jimmy’s end, we decided to stop using the OpenCV Kalman filter library and wrote an in-house implementation instead, which yielded good results. With this, the 2D visual pipeline is done, and it can serve as a contingency plan in case our 3D pipeline does not give good results. The remaining risk in this subsystem now lies in the accuracy of the 3D model that is still to be implemented. Validation of object detection and trajectory estimation will have two components. The first is making sure that the estimates are accurately predicted and tracked. The second is ensuring that the calculation finishes quickly enough. The overall goal of the camera and detection/prediction pipeline is to generate a coordinate to send to the gantry so that it can catch the ball in time. Verifying that both of these conditions are met will ensure that our overall project goal can be achieved.
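
To make the two validation components concrete, here is a minimal sketch of a one-axis constant-velocity Kalman filter in plain Python, with a timing measurement around it. This is not Jimmy’s implementation. The class name, the noise values, and the 2-pixels-per-frame test motion are all assumptions chosen for illustration only.

```python
import time


class ConstantVelocityKalman1D:
    """Tracks position and velocity along one axis from position readings.

    Hypothetical illustration: the state is [pos, vel] and the covariance
    is stored as four scalars instead of a matrix.
    """

    def __init__(self, process_var=0.01, measurement_var=1.0, initial_var=100.0):
        self.pos = 0.0
        self.vel = 0.0
        # Covariance entries [[p00, p01], [p10, p11]]; large start = low confidence.
        self.p00, self.p01, self.p10, self.p11 = initial_var, 0.0, 0.0, initial_var
        self.q = process_var        # assumed process noise (added to the diagonal)
        self.r = measurement_var    # assumed measurement noise

    def predict(self, dt):
        """Advance the state by dt using pos += vel * dt (P = F P F^T + Q)."""
        self.pos += self.vel * dt
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11

    def update(self, measured_pos):
        """Correct the state with one position measurement (H = [1, 0])."""
        residual = measured_pos - self.pos
        s = self.p00 + self.r
        k0 = self.p00 / s
        k1 = self.p10 / s
        self.pos += k0 * residual
        self.vel += k1 * residual
        p00 = (1 - k0) * self.p00
        p01 = (1 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11


# Component 1 (accuracy): feed a made-up track moving 2 px per frame.
# Component 2 (speed): measure the mean time of one predict + update step.
kf = ConstantVelocityKalman1D()
n_frames = 20
start = time.perf_counter()
for frame in range(1, n_frames + 1):
    kf.predict(dt=1.0)
    kf.update(2.0 * frame)
mean_step_s = (time.perf_counter() - start) / n_frames

print(f"estimated velocity: {kf.vel:.3f} px/frame")
print(f"mean step time: {mean_step_s * 1e6:.1f} microseconds")
```

A real ball tracker would need at least one such filter per image axis (or a single filter with a larger state), and a thrown ball would also need an acceleration term for gravity. The sketch only shows the shape of the accuracy check and the timing check.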

For the Raspberry Pi hardware integration side, a lot of work went into getting past the setup stage and doing functional work on the Pi. Jimmy has been developing Kalman prediction code that runs on a recorded video passed into it. Once I finalized the setup and finished installing the last few dependencies, I was able to run the camera on the Pi. The DepthAI package that the camera came with also had many example files that could be run to showcase some of the camera’s capabilities. On Monday I focused on learning how the file system structure is set up and looked through many of the examples to see what different pieces of code could make the camera do. The next step was to adapt Jimmy’s detection function to take live camera input instead of a recorded video. That was done successfully, but the resulting video ran at only 15 FPS, and it struggled to detect the ball. We had a few ideas for improving that, and the details of how we implemented them are elaborated in Gordon’s status report, but essentially we found that making the camera window smaller greatly increased the FPS, as shown in the table below.

Camera with 2D Ball Detection FPS Experiments

| Window Size (all at 1080 resolution, can't go lower) | Resulting FPS | Comments |
| --- | --- | --- |
| camRgb.setVideoSize(1920, 1080) | 13-15 | Default screen size. FPS is way too slow for reliable ball detection. This is without any code optimizations, which we are looking into. |
| 1200, 800 | 33-35 | Ball detection is pretty smooth. Needs more integration to see if it's enough. |
| 800, 800 | 46-50 | At this point the window size is probably as small as it should go, since it starts to inhibit the ability to capture the ball trajectory. Ball detection is super smooth, however. A balance between screen size and FPS should be studied. |
| 700, 800 | ~55 | Same as above. |
| 700, 700 | 60 | Achieved 60 FPS, but again the window size is likely too small to be of use. |
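
One way to read these numbers is to convert each row into pixel throughput (window width × height × FPS). The short script below does that arithmetic. It uses the midpoint of each measured FPS range, which is an assumption, and the function names are only illustrative.

```python
# (width, height, fps_low, fps_high) copied from the FPS experiments.
MEASUREMENTS = [
    (1920, 1080, 13, 15),
    (1200, 800, 33, 35),
    (800, 800, 46, 50),
    (700, 800, 55, 55),
    (700, 700, 60, 60),
]


def pixel_throughput(width, height, fps_low, fps_high):
    """Return megapixels per second at the midpoint of the FPS range."""
    fps_mid = (fps_low + fps_high) / 2
    return width * height * fps_mid / 1e6


def summarize(measurements):
    """Return (width, height, megapixels_per_second) for every row."""
    return [
        (w, h, round(pixel_throughput(w, h, lo, hi), 1))
        for w, h, lo, hi in measurements
    ]


for w, h, mp_per_s in summarize(MEASUREMENTS):
    print(f"{w} x {h}: {mp_per_s} MP/s")
```

The five rows come out between roughly 29 and 33 megapixels per second. That would be consistent with the per-frame cost scaling with the number of pixels in the window, though five data points are not enough to confirm it, and the 60 FPS row may simply be capped by the camera rather than by processing.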

The comments give a baseline overview of what I concluded from this simple verification test. From what I’ve gathered, I believe that manipulating the frame rate and window size will be a good tactic for increasing the precision and effectiveness of our tracking and trajectory prediction. There are more tactics that we haven’t tried yet, and we plan to test them in these last few weeks if any design requirements have still not been met. There is still a good amount of work to be done to add the third dimension and to convert the output into instructions for the XY robot. We will work on these last bits of integration as much as we can as a team, as we still need to figure out how to structure the whole system to give real-world coordinates. In general I think our team has done a lot of verification within each of our own subsystems, and it is almost time to start the validation process of integrating all of them together. The RPi and Jimmy’s camera trajectory work are already relatively connected and working, but as mentioned, connecting to the XY robot will require work from everyone.

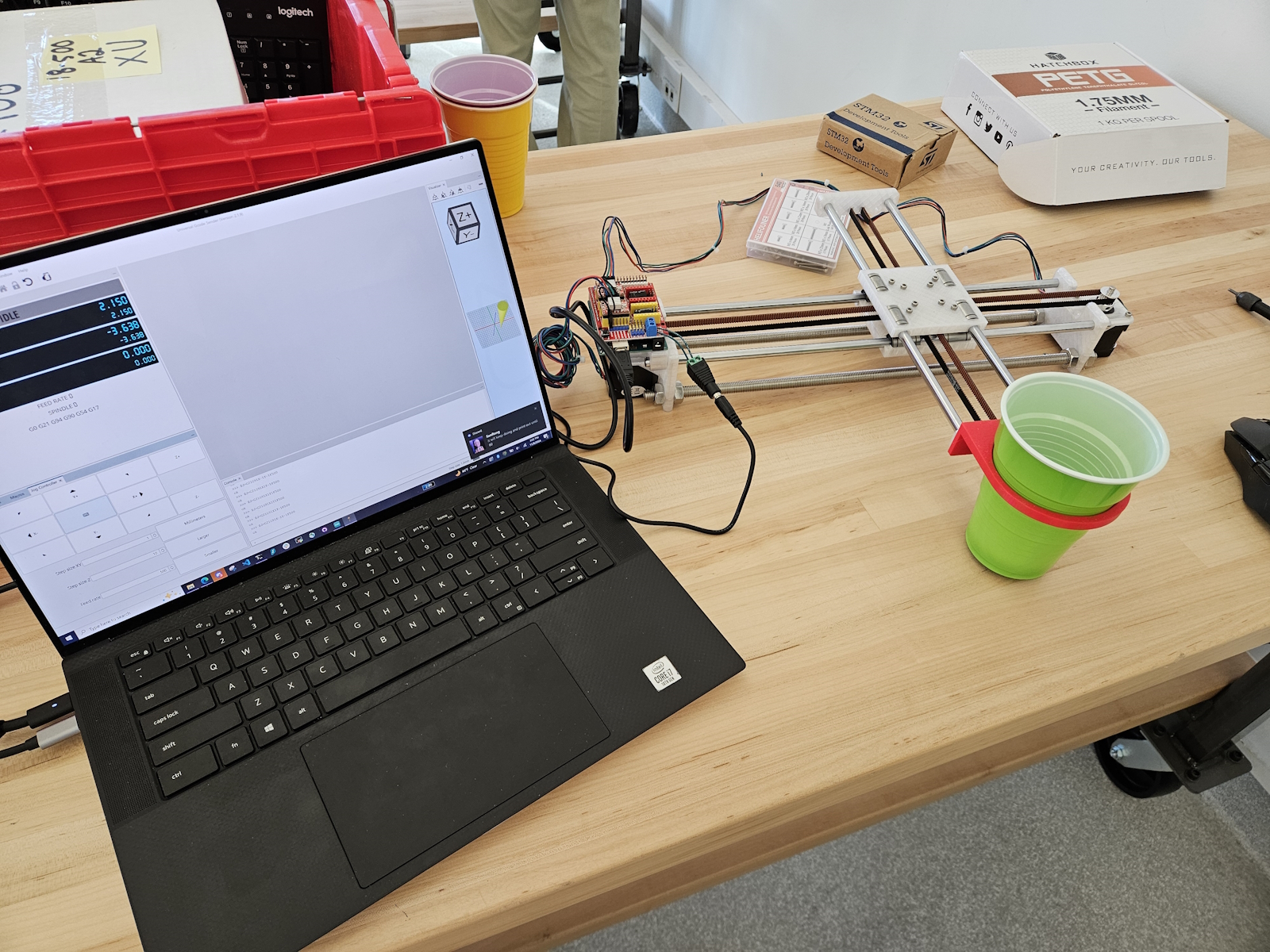

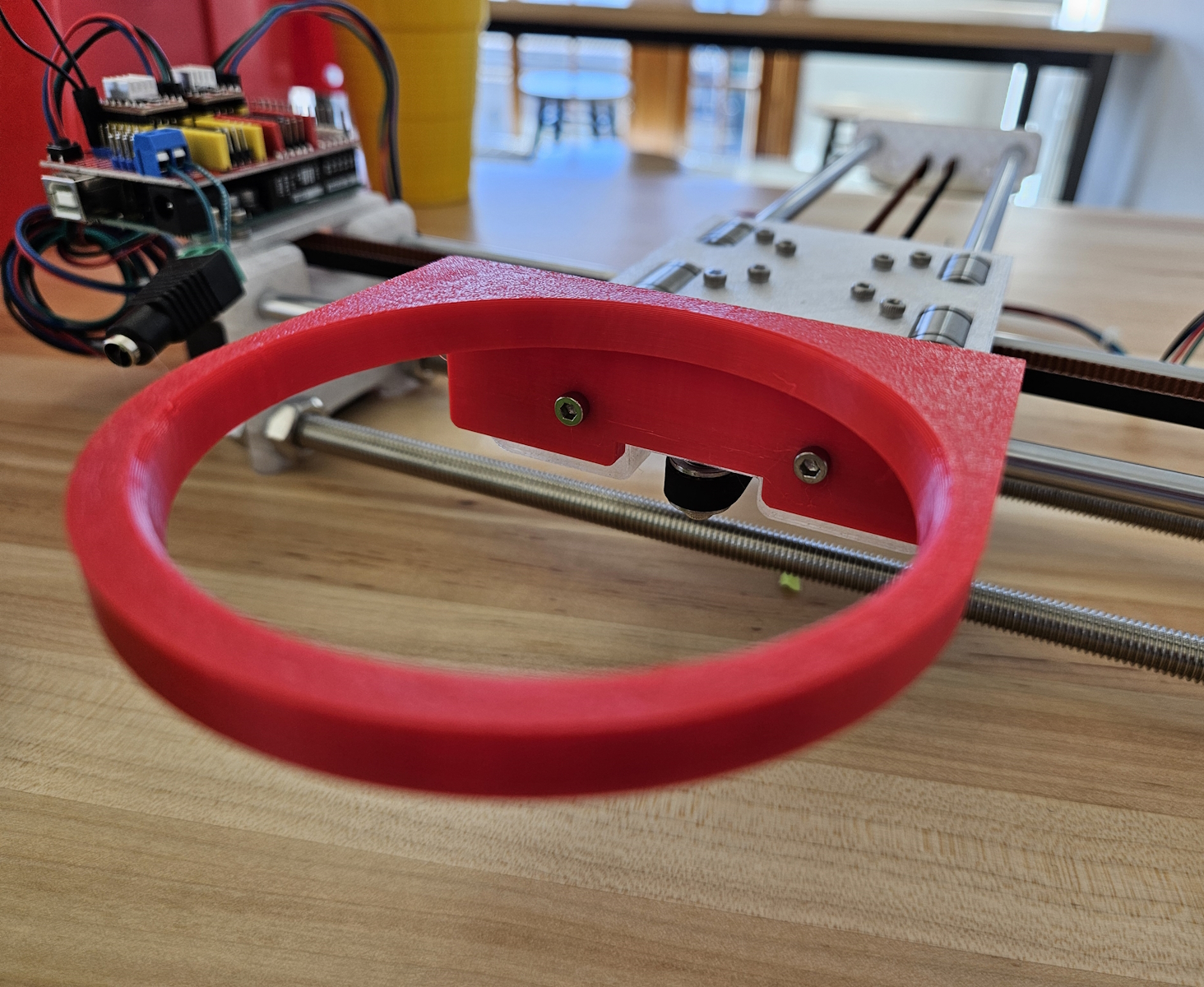

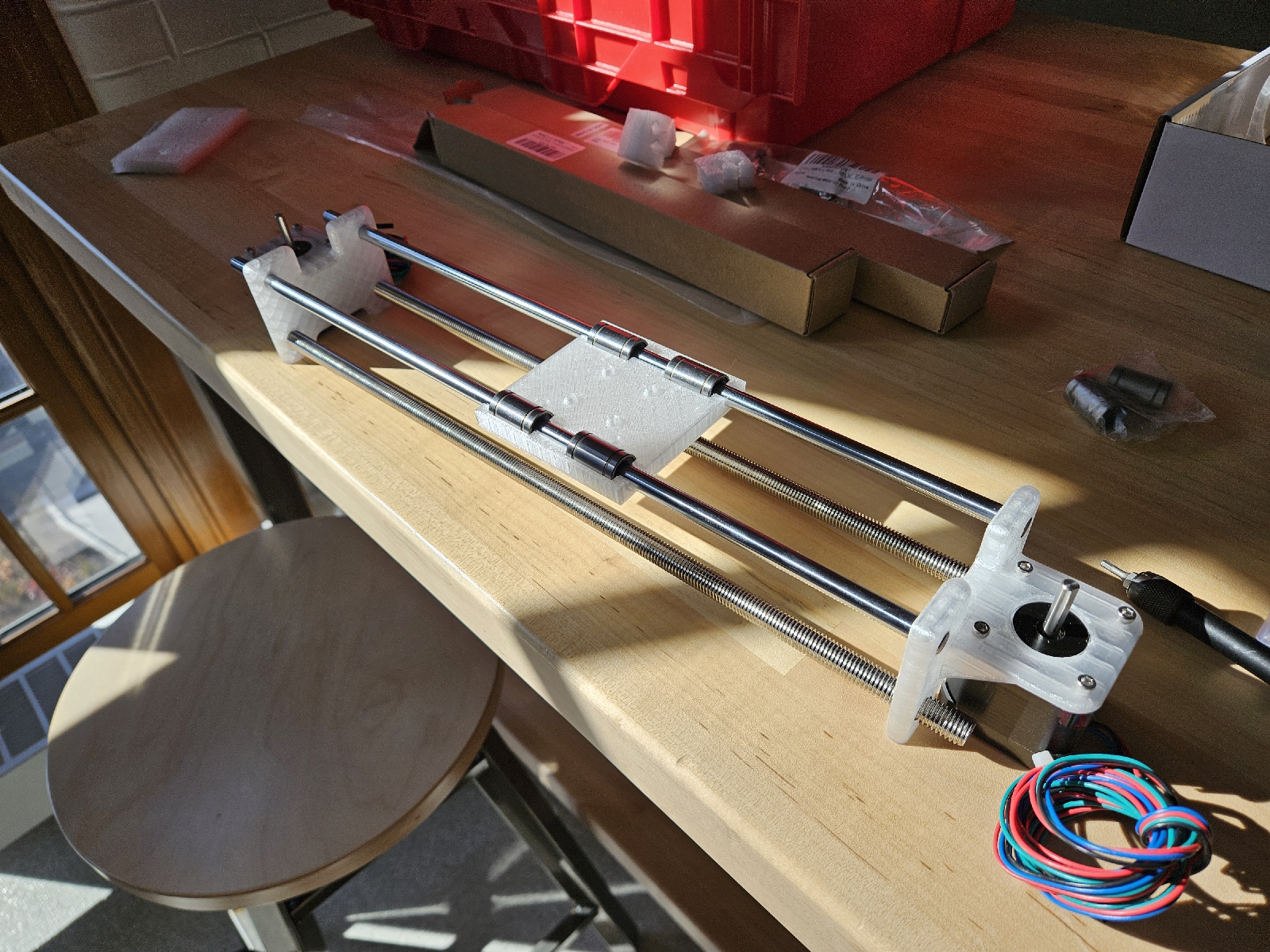

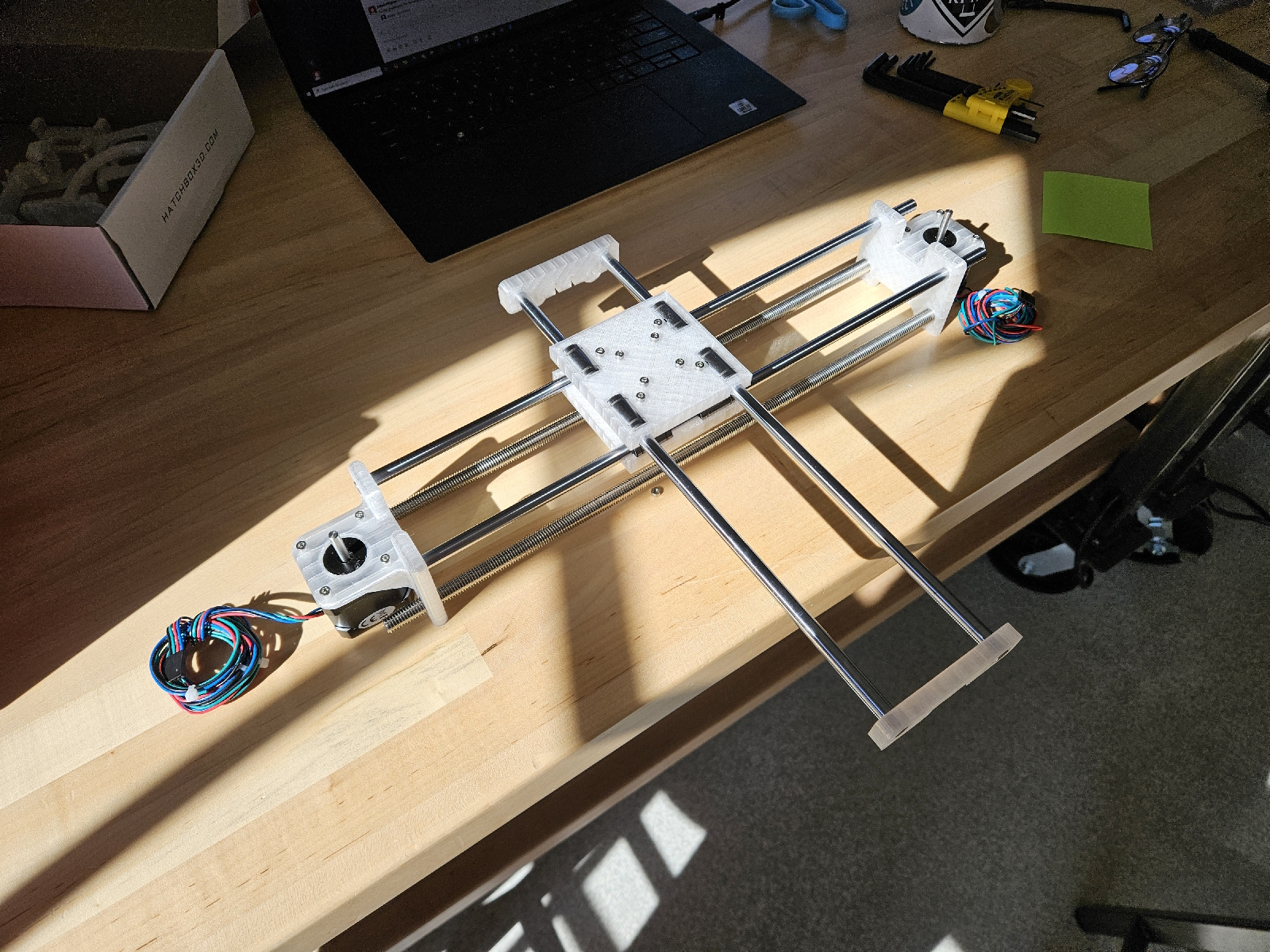

XY Robot:

As described in the design report, there is only a single, though important, test to be run. The big picture is that the robot must be agile in motion and precise in positioning, so that it can support the rest of the system. Thus, we have the following validation test:

- Supply a series of valid, arbitrary real-world coordinates to the Arduino running grbl firmware through G-code serial communication. For each coordinate, validate that the robot:

  - Moves the cup to within 0.5cm of the position

  - Moves the cup to the position in under 1.0s

The following conditions should also be met:

- We define a valid and arbitrary coordinate to be any position within a 10cm XY radius around the origin of the cup.

  - Thus, the cup will only have to move AT MOST 10cm at a time.

- After each movement, allow the robot to reset to (0, 0).

- Ideally, configure the firmware to make the robot as fast as possible.

  - We want to give as much leeway to the other systems (computer vision/trajectory prediction) as possible.
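
The test conditions above can be written down as a small check. The sketch below samples random valid targets inside the 10cm radius and applies the two pass criteria. The function names are hypothetical, and how the measured position and elapsed time are obtained is left open.

```python
import math
import random

MAX_RADIUS_CM = 10.0  # valid targets lie within 10cm of the cup origin
MAX_ERROR_CM = 0.5    # positioning tolerance from the validation test
MAX_TIME_S = 1.0      # time limit per move from the validation test


def random_target(rng, max_radius=MAX_RADIUS_CM):
    """Sample a point uniformly from the disc of valid cup positions."""
    # The square root keeps the samples uniform over the area of the disc.
    r = max_radius * math.sqrt(rng.random())
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return (r * math.cos(theta), r * math.sin(theta))


def move_passes(target, measured, elapsed_s):
    """True if the cup ended close enough to the target, quickly enough."""
    error_cm = math.dist(target, measured)
    return error_cm < MAX_ERROR_CM and elapsed_s < MAX_TIME_S


rng = random.Random(0)  # fixed seed so a test run can be repeated
targets = [random_target(rng) for _ in range(5)]
for x, y in targets:
    print(f"target: ({x:.2f}, {y:.2f}) cm")
```

For example, `move_passes((3.0, 4.0), (3.2, 4.1), 0.35)` is a pass (about 0.22cm off, 0.35s), while the same move taking 1.2s is a fail.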

For the demo, I’ve made fairly good progress towards most of these goals. The firmware is currently configured with what seems to be the maximum workable XY acceleration and feed rate, though I think this can be tuned further. The demo entails moving the cup 10cm in each of the 8 cardinal and diagonal directions, and back to (0,0). This leads me to believe that the robot should also be able to handle any instruction that calls for less than 10cm of translation. Though not rigorously, I’ve also timed these translations, and they seem to fall within 0.3-0.4s, which is well within the 1.0s limit.

Future testing would be to set up a Python script that communicates G-codes directly to the Arduino through the serial port. I believe this should be possible. The script can also automate the generation of random valid coordinates. I also need to purchase a different set of limit switches. I misunderstood how they function, so in order to enable homing I must set up four of them, at the ends of each rail.
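
A rough sketch of what that script could look like is below. It assumes the work coordinates are in millimetres with the origin at the cup’s rest position, and it talks to any object with `write` and `readline` methods. The `FakePort` class is a stand-in so the sketch runs without hardware; on the real robot a pyserial `serial.Serial` object would take its place, with a port name and baud rate that depend on our setup.

```python
def gcode_for_target(x_cm, y_cm):
    """Build a rapid move to (x, y), converting cm to mm for G21 units."""
    return f"G0 X{x_cm * 10:.2f} Y{y_cm * 10:.2f}"


def build_test_program(targets_cm):
    """Return G-code lines that visit each target and then return to (0, 0)."""
    lines = ["G21", "G90"]  # millimetre units, absolute positioning
    for x_cm, y_cm in targets_cm:
        lines.append(gcode_for_target(x_cm, y_cm))
        lines.append(gcode_for_target(0.0, 0.0))
    return lines


def send_program(port, lines):
    """Send one line at a time and collect the reply to each line."""
    replies = []
    for line in lines:
        port.write((line + "\n").encode("ascii"))
        replies.append(port.readline().decode("ascii").strip())
    return replies


class FakePort:
    """Hypothetical stand-in for a serial port that always answers 'ok'."""

    def __init__(self):
        self.sent = []

    def write(self, data):
        self.sent.append(data)
        return len(data)

    def readline(self):
        return b"ok\r\n"


program = build_test_program([(3.0, -4.5), (-10.0, 0.0)])
replies = send_program(FakePort(), program)
for line, reply in zip(program, replies):
    print(f"{line} -> {reply}")
```

Two caveats for the real version: grbl prints a startup banner when the port is opened, which would need to be read and discarded first, and as far as I understand an `ok` reply means the line was accepted rather than that the motion has finished, so timing a move would need a separate measurement.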