Accomplishments

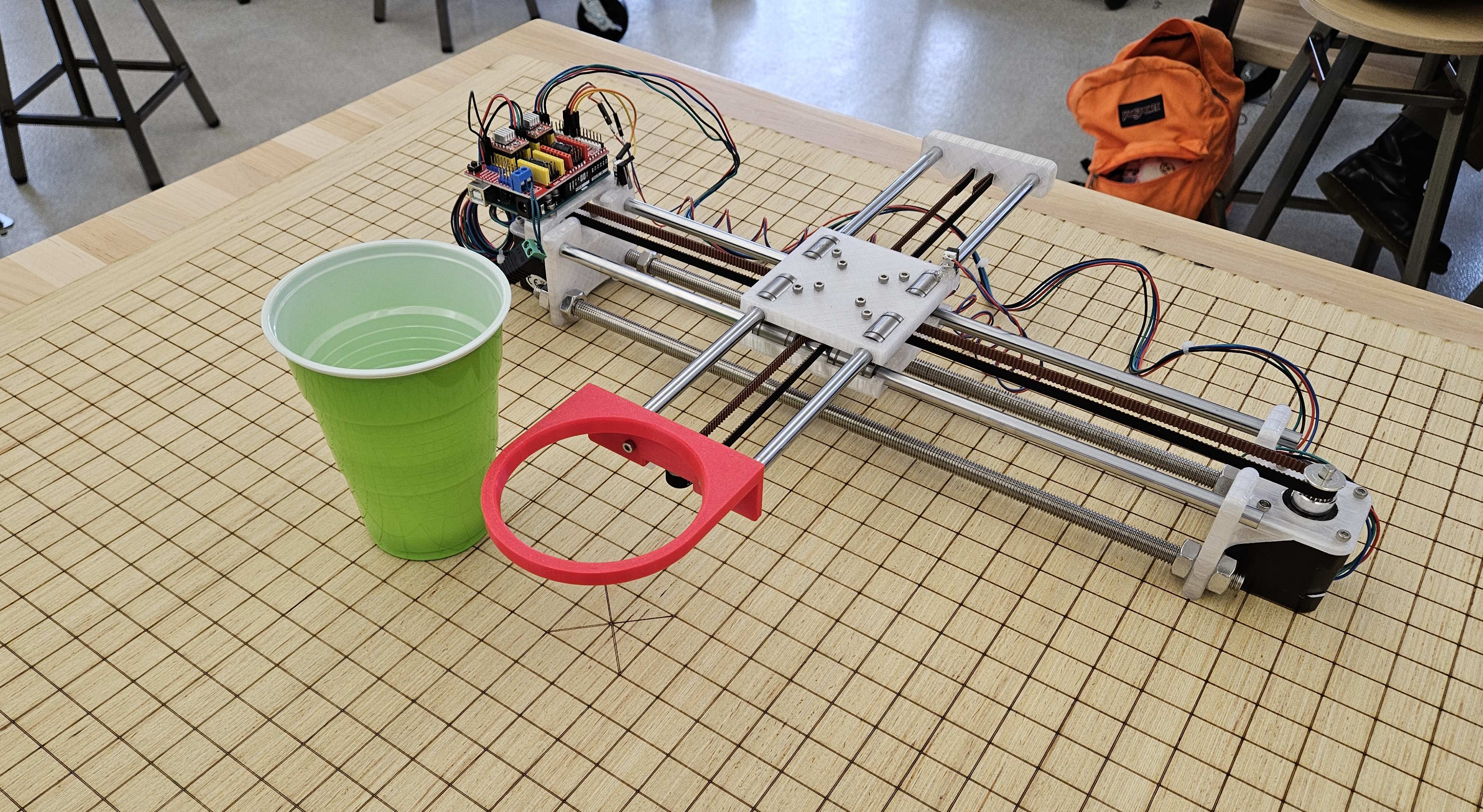

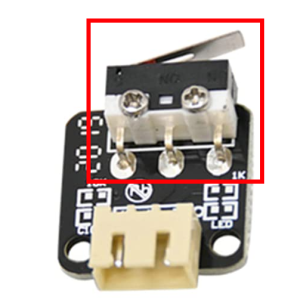

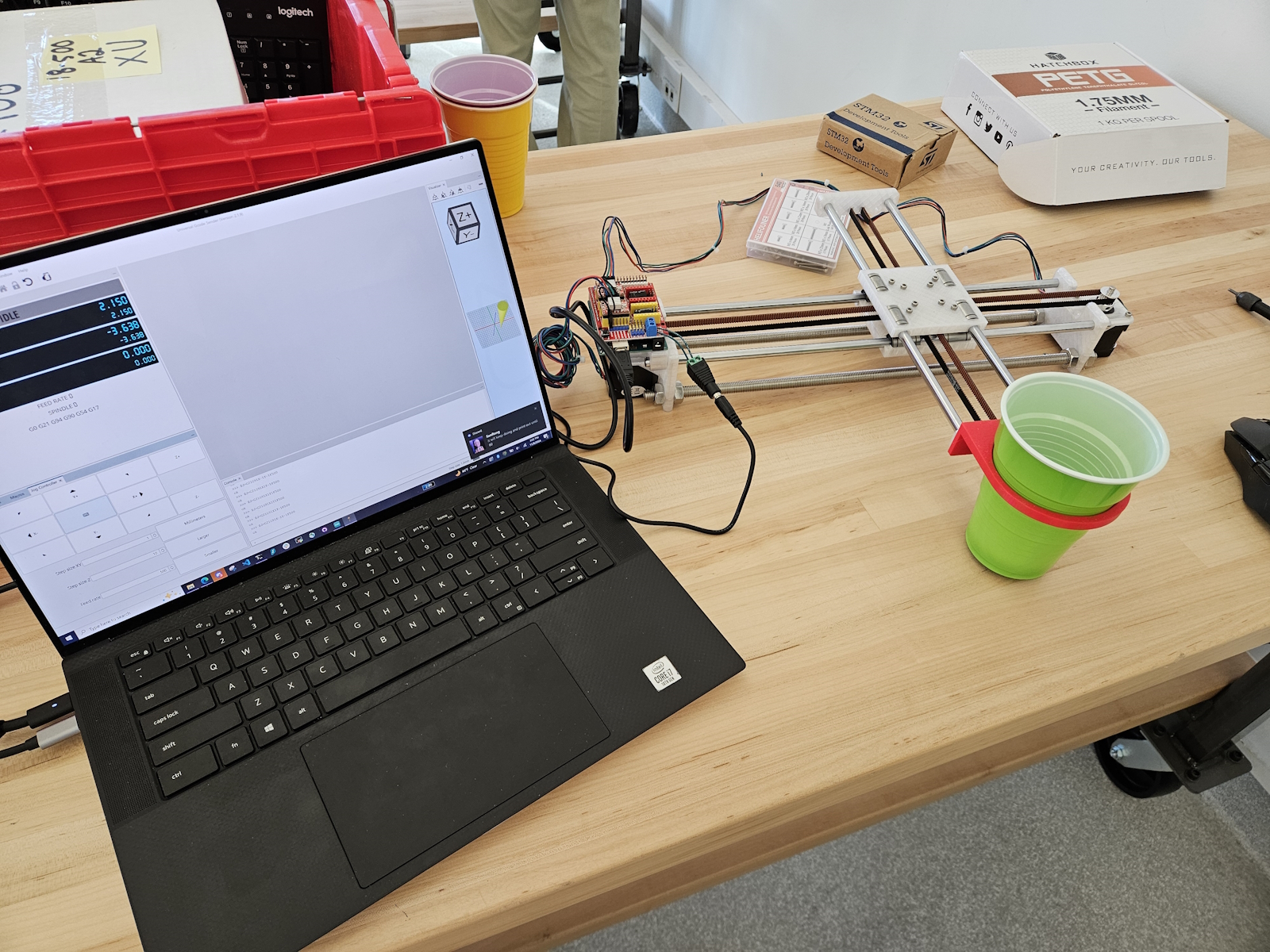

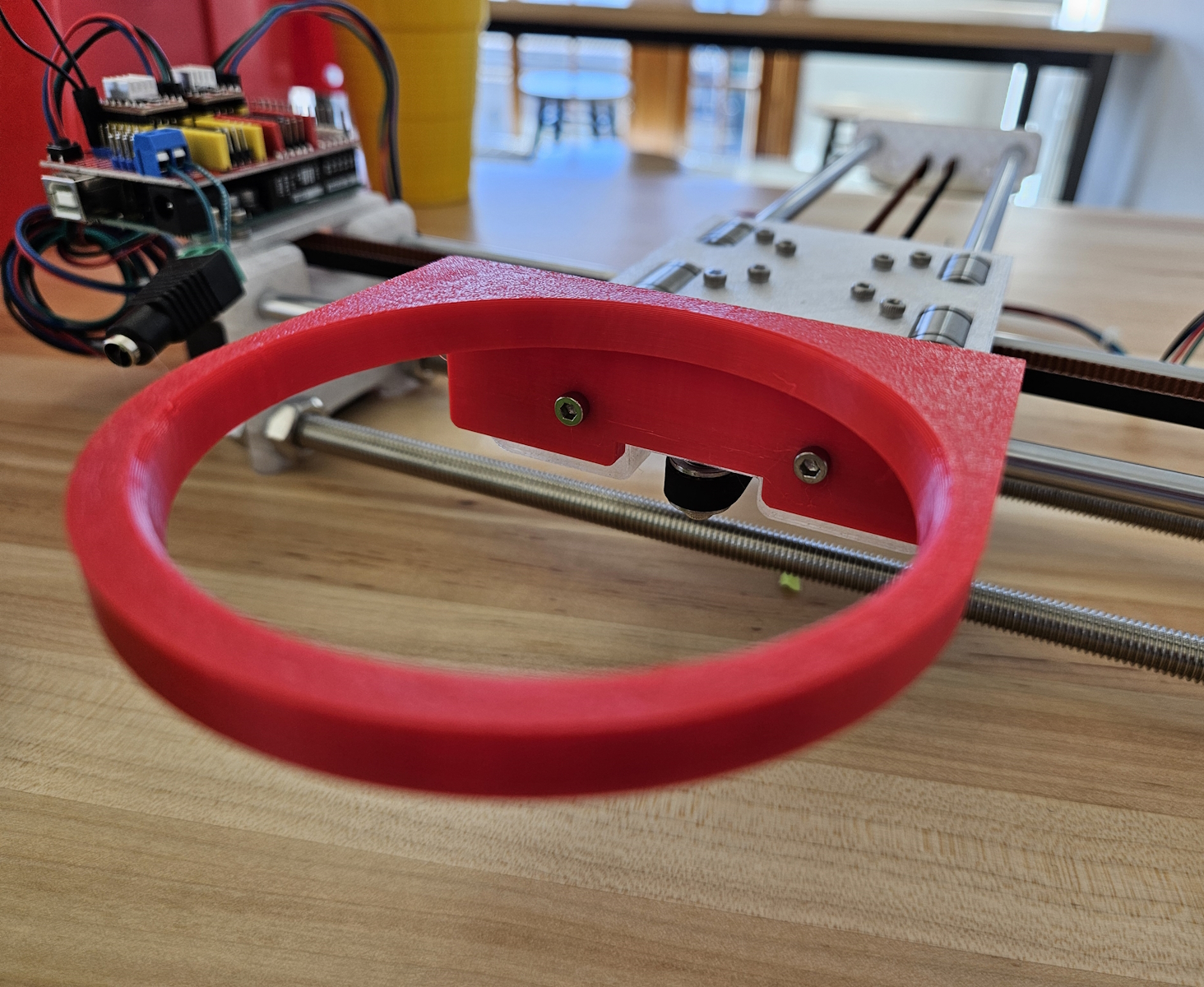

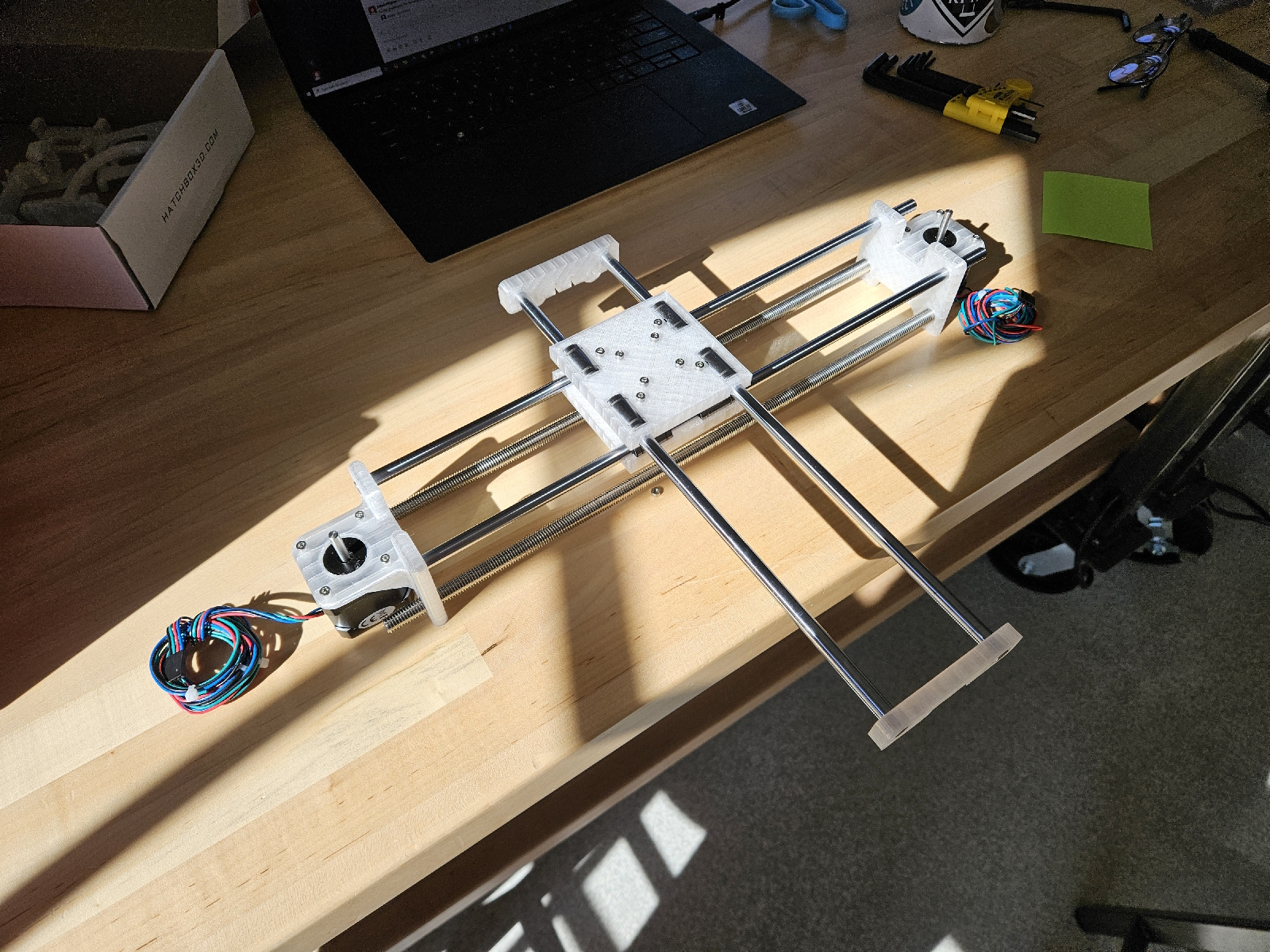

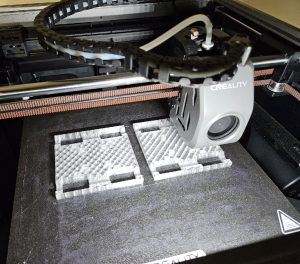

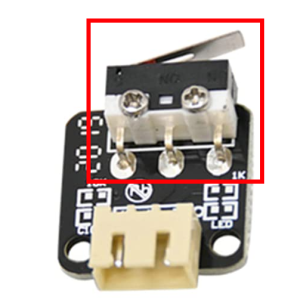

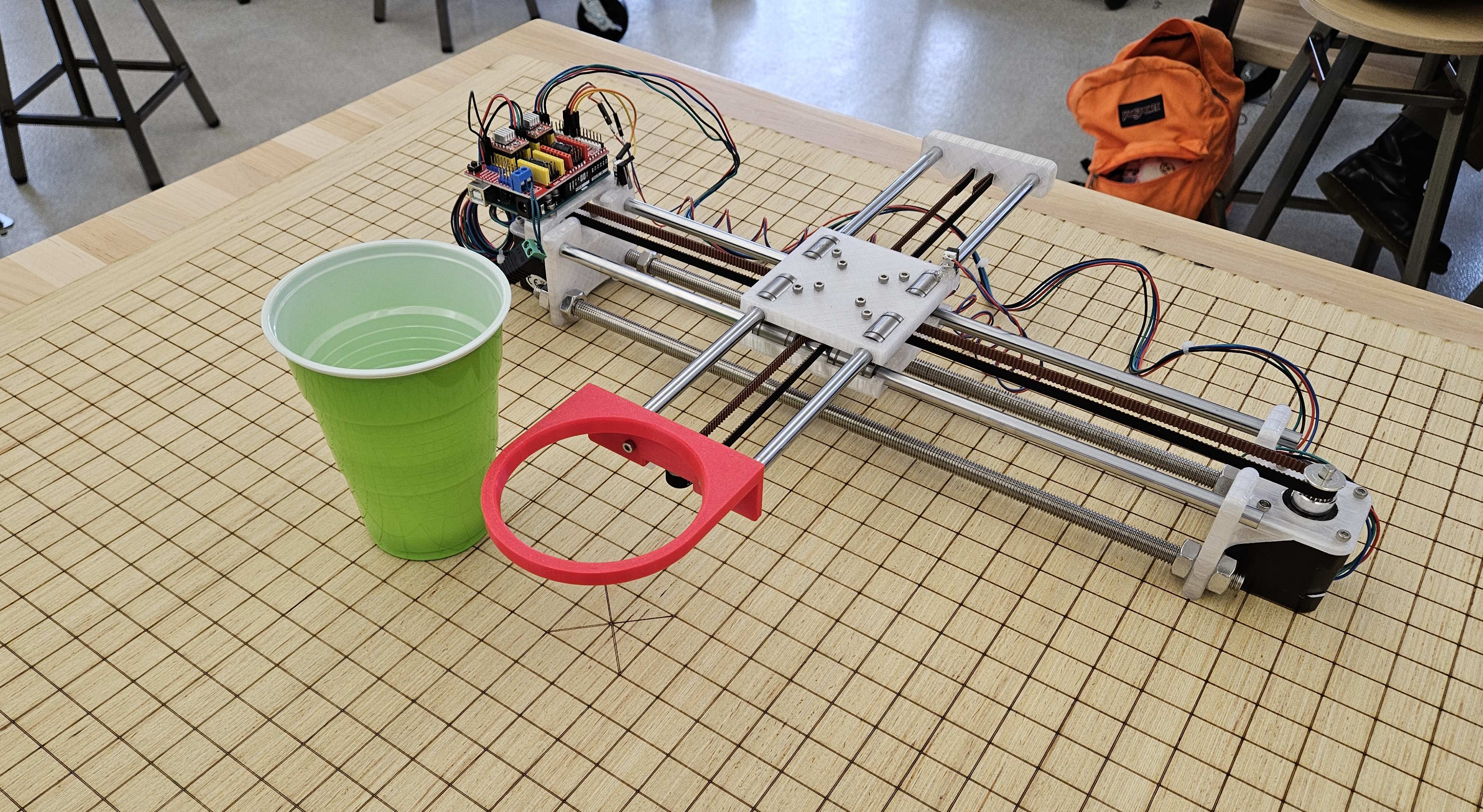

In short, the robot is complete! The remaining work was mainly finishing touches. Instead of purchasing new limit switches, I snipped the switch itself off an end stop intended for a 3D printer. From there, a bit of soldering, wiring, and gluing completed the homing setup. From now on, the origin will always be well-defined and repeatable, so every translation will be too. I only used two limit switches, so hard limits are not enforced on the +X and +Z axes. Instead, software-enforced checks should ensure we never move more than 10 cm in any direction.
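A software-enforced check could look roughly like the minimal sketch below. It is a host-side guard applied before any command is sent, assuming coordinates are in millimetres measured from the center of the cup's travel and that the 10 cm envelope applies to each axis independently. `safe_target` and `LIMIT_MM` are hypothetical names. grbl also has built-in soft limits (`$20`, together with the `$130` to `$132` max-travel settings), which rely on homing; this sketch is an independent Python-side check.

```python
import math

# Assumptions: millimetres, measured from the center of the cup's travel,
# with 100 mm (10 cm) allowed on each axis. Names are hypothetical.
LIMIT_MM = 100.0


def safe_target(x_mm, y_mm, limit_mm=LIMIT_MM):
    """Clamp a predicted target into the +/-limit_mm box.

    Returns (x, y, clamped), where clamped is True if either coordinate
    had to be pulled back inside the limit.
    """
    if not (math.isfinite(x_mm) and math.isfinite(y_mm)):
        # A NaN or infinite prediction should never reach the motors.
        raise ValueError(f"non-finite target: ({x_mm}, {y_mm})")
    cx = max(-limit_mm, min(limit_mm, x_mm))
    cy = max(-limit_mm, min(limit_mm, y_mm))
    return cx, cy, (cx, cy) != (x_mm, y_mm)
```

Clamping rather than rejecting is a design choice: when a prediction lands just outside the envelope, moving as close as allowed is arguably more useful than not moving at all.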

I’ve migrated away from UGS (Universal Gcode Sender) and implemented serial communication with the Arduino running the grbl firmware through a Python script. This should integrate cleanly with the rest of the system (the Raspberry Pi and camera). On the Pi’s side, integration only requires opening a serial (USB) connection to the grbl firmware and running some initialization, such as homing and setting the feed rate. Then, whenever a prediction is made, it simply calls gcode_goto(x, y) to move the cup.
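The connect, initialize, and move flow could be sketched as below. This is not the actual script: it uses a simple send-and-wait loop in which each G-code line is sent only after grbl has answered `ok` for the previous one. `port` is any object with pyserial-style `write(bytes)` and `readline()` methods, for example `serial.Serial("/dev/ttyACM0", 115200, timeout=1)`; the port name, baud rate, feed rate, and axis mapping are assumptions. `move_cup` is a hypothetical stand-in for `gcode_goto(x, y)`.

```python
"""Sketch: driving grbl over a serial port with send-and-wait (assumptions noted)."""

# Assumption: the cup moves in the machine's X/Z plane, so the prediction's
# second coordinate is sent on Z. Change to "Y" if the machine is wired otherwise.
SECOND_AXIS = "Z"


def send_line(port, line):
    """Send one G-code line and block until grbl answers 'ok' or reports a problem."""
    port.write((line.strip() + "\n").encode("ascii"))
    while True:
        reply = port.readline().decode("ascii", errors="replace").strip()
        if reply == "ok":
            return
        if reply.startswith(("error", "ALARM")):
            raise RuntimeError(f"grbl rejected {line!r}: {reply}")
        # Anything else (startup banner, [MSG:...], or an empty read after a
        # serial timeout, e.g. during homing) is skipped and we keep waiting.


def init_grbl(port):
    """Run the homing cycle, then select millimetres and absolute coordinates."""
    for line in ("$H", "G21", "G90"):
        send_line(port, line)


def move_cup(port, x_mm, y_mm, feed_mm_per_min=3000):
    """Hypothetical stand-in for gcode_goto(x, y): one linear move at a fixed feed."""
    send_line(port, f"G1 X{x_mm:.3f} {SECOND_AXIS}{y_mm:.3f} F{feed_mm_per_min}")
```

On real hardware, opening the port typically resets the Arduino, so it is worth waiting a couple of seconds before the first command. Note that grbl sends `ok` once it has parsed and buffered a line, not when the move has finished.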

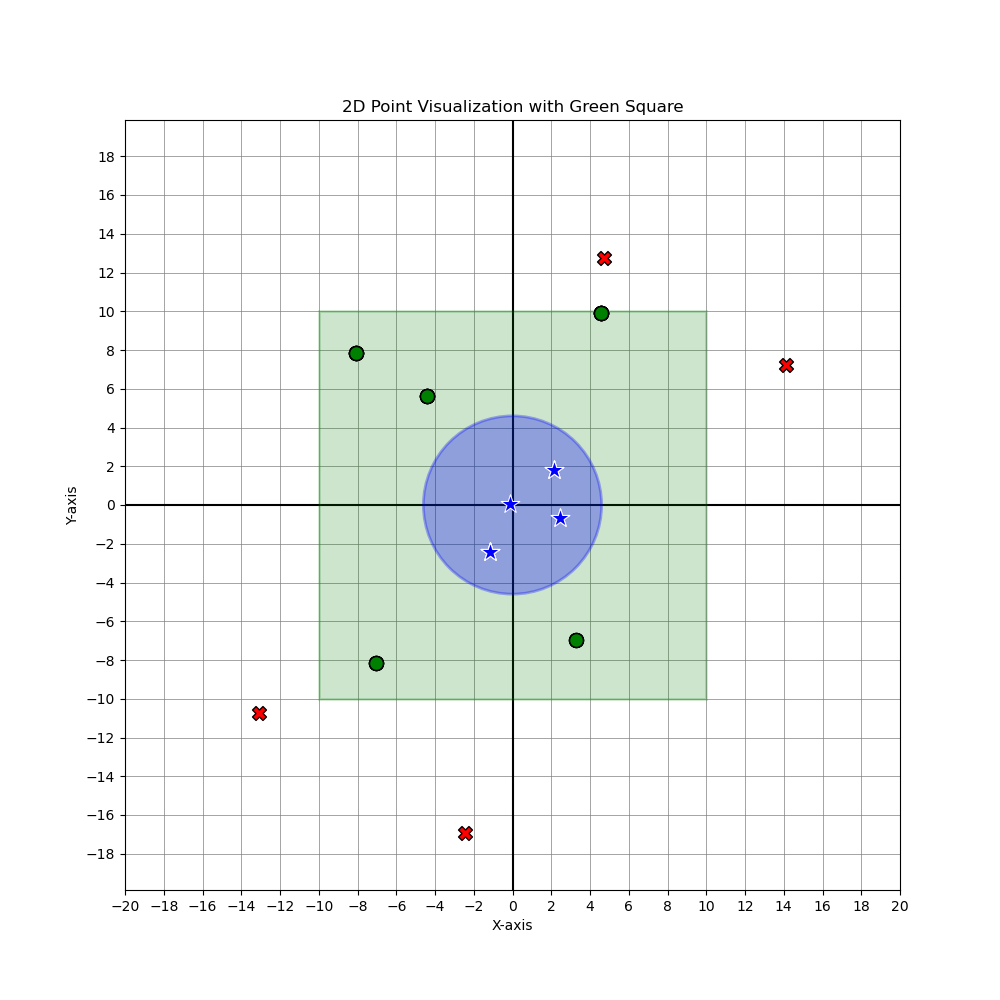

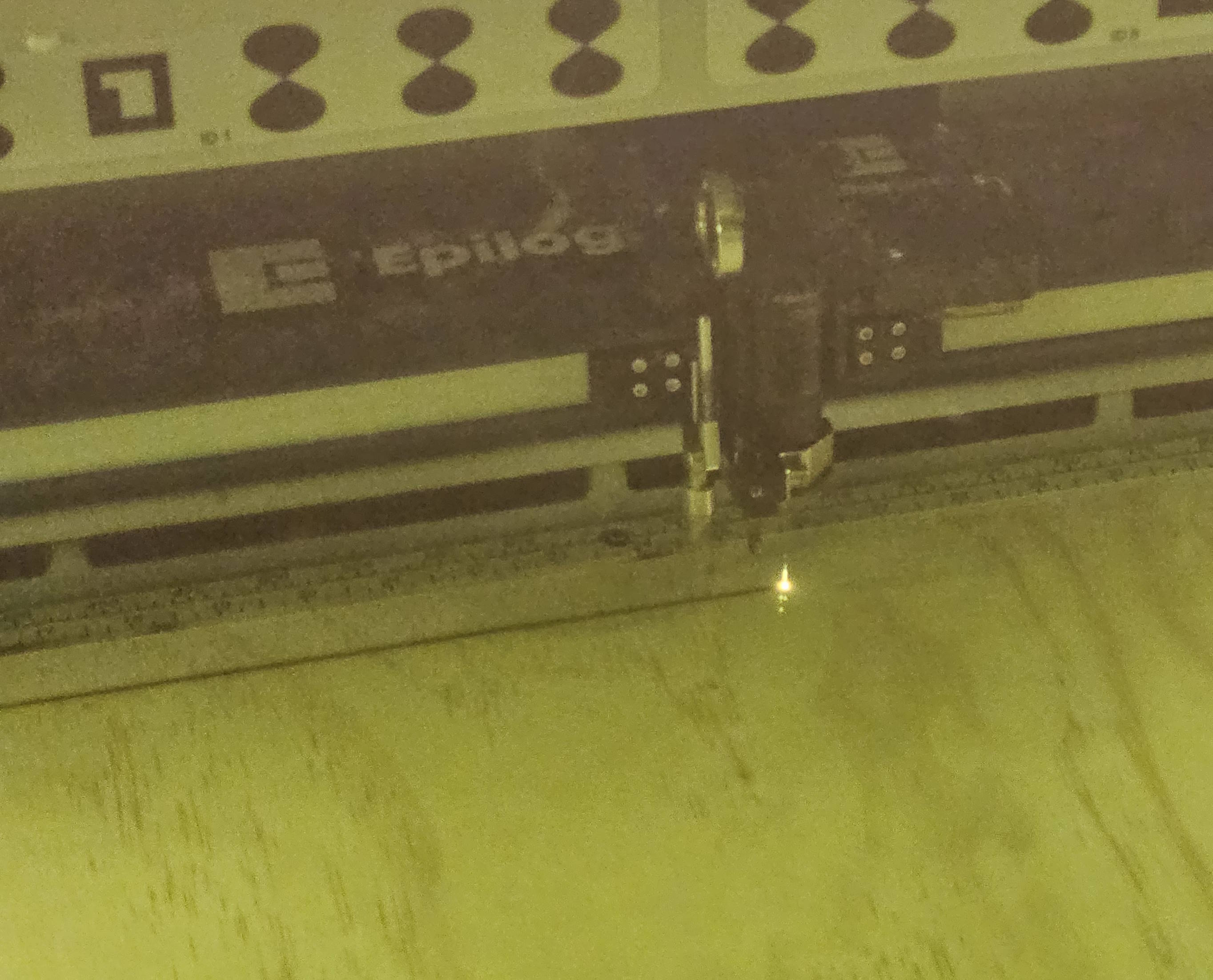

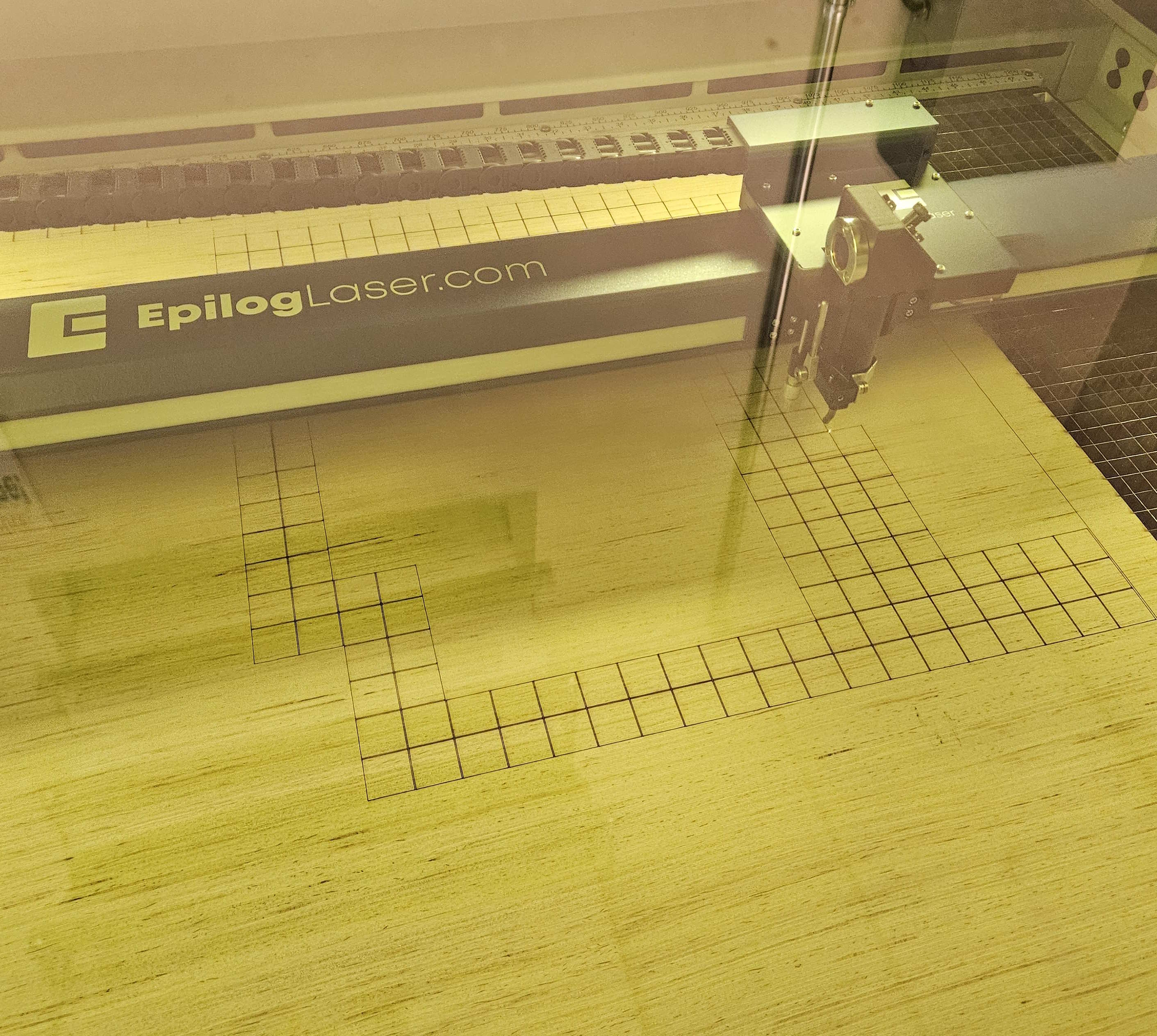

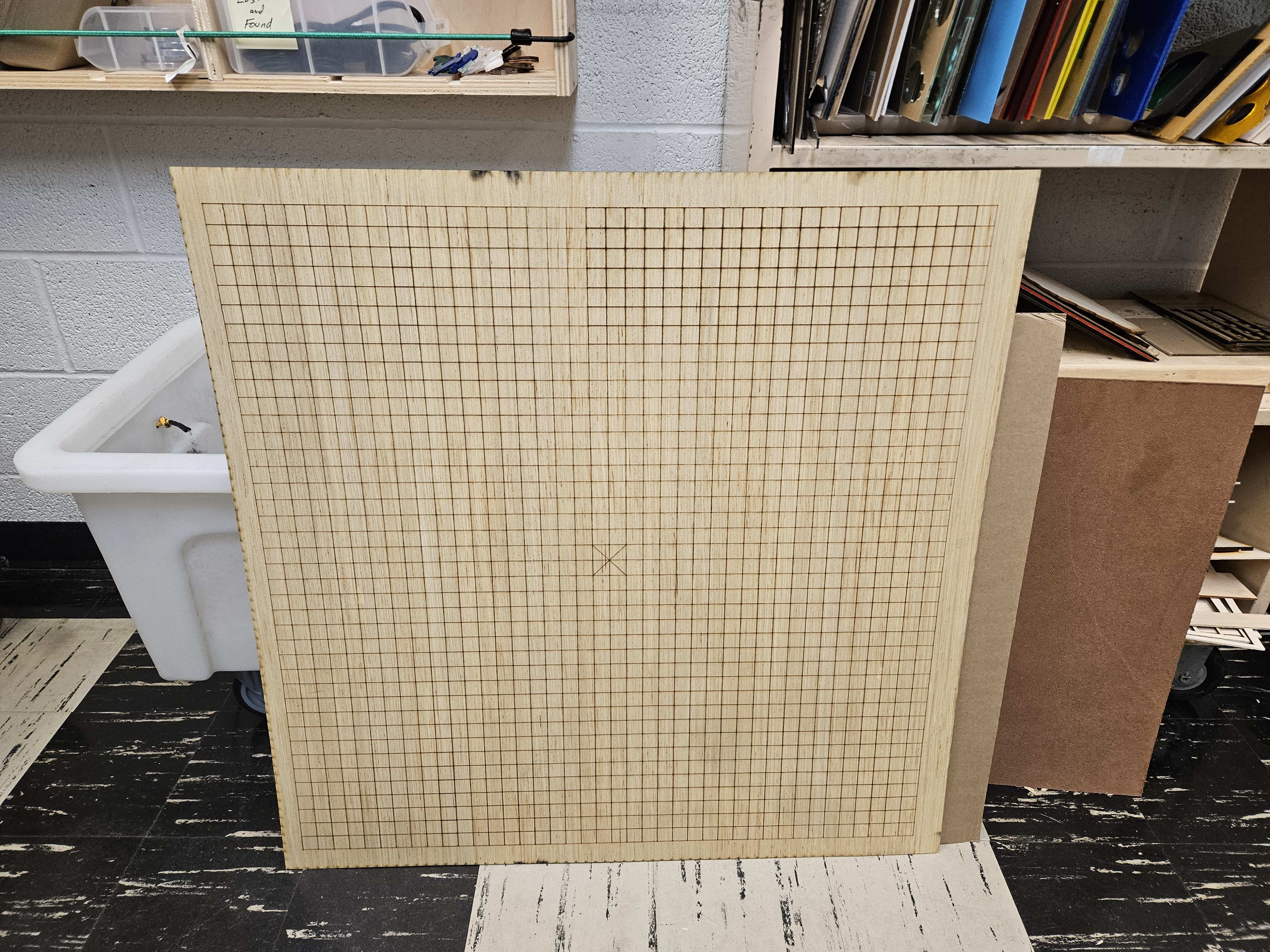

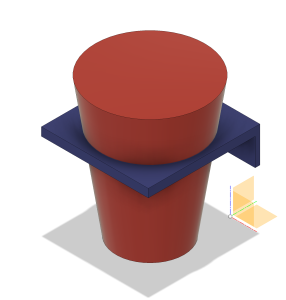

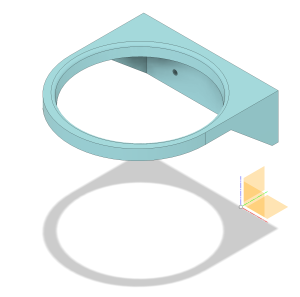

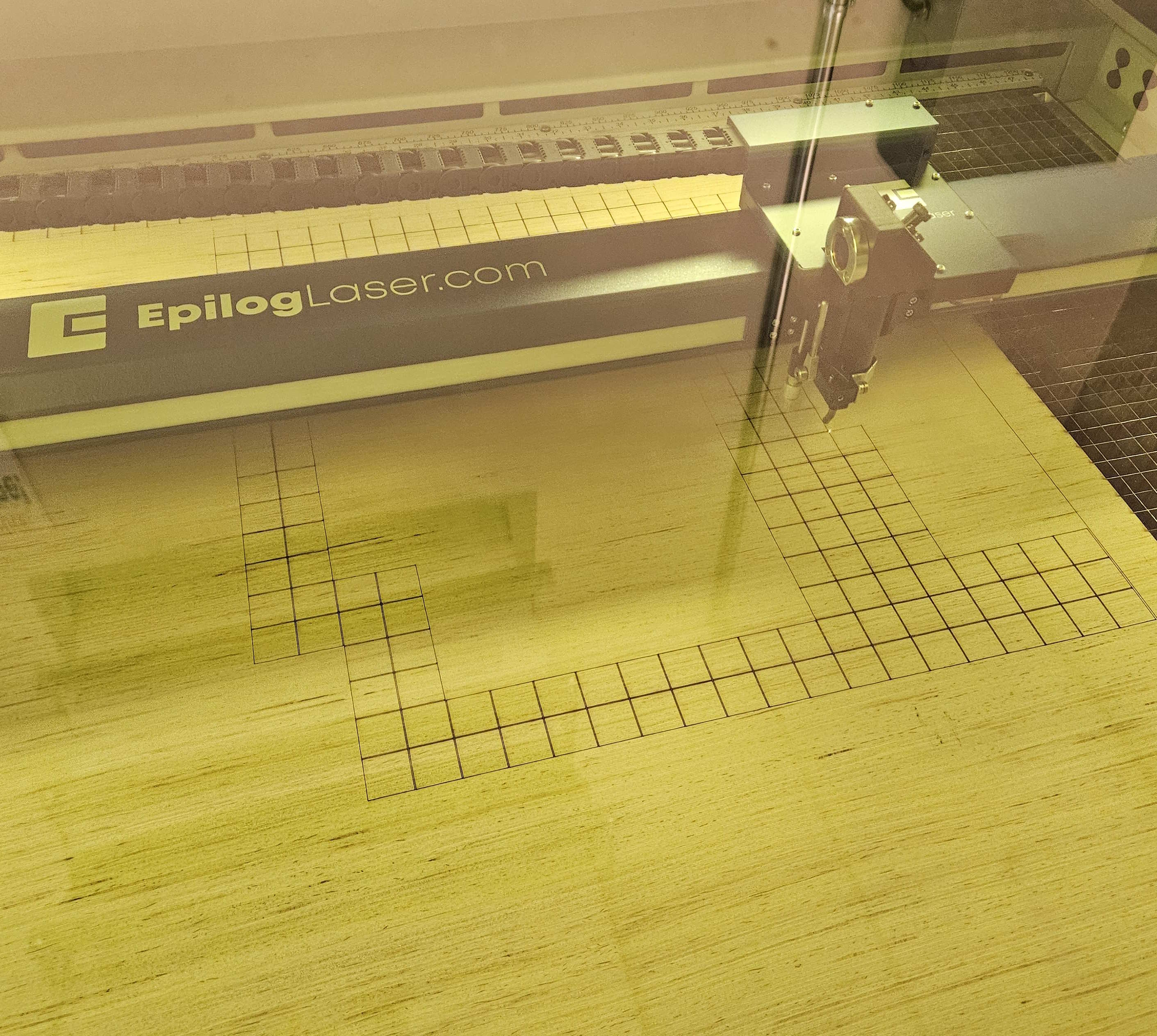

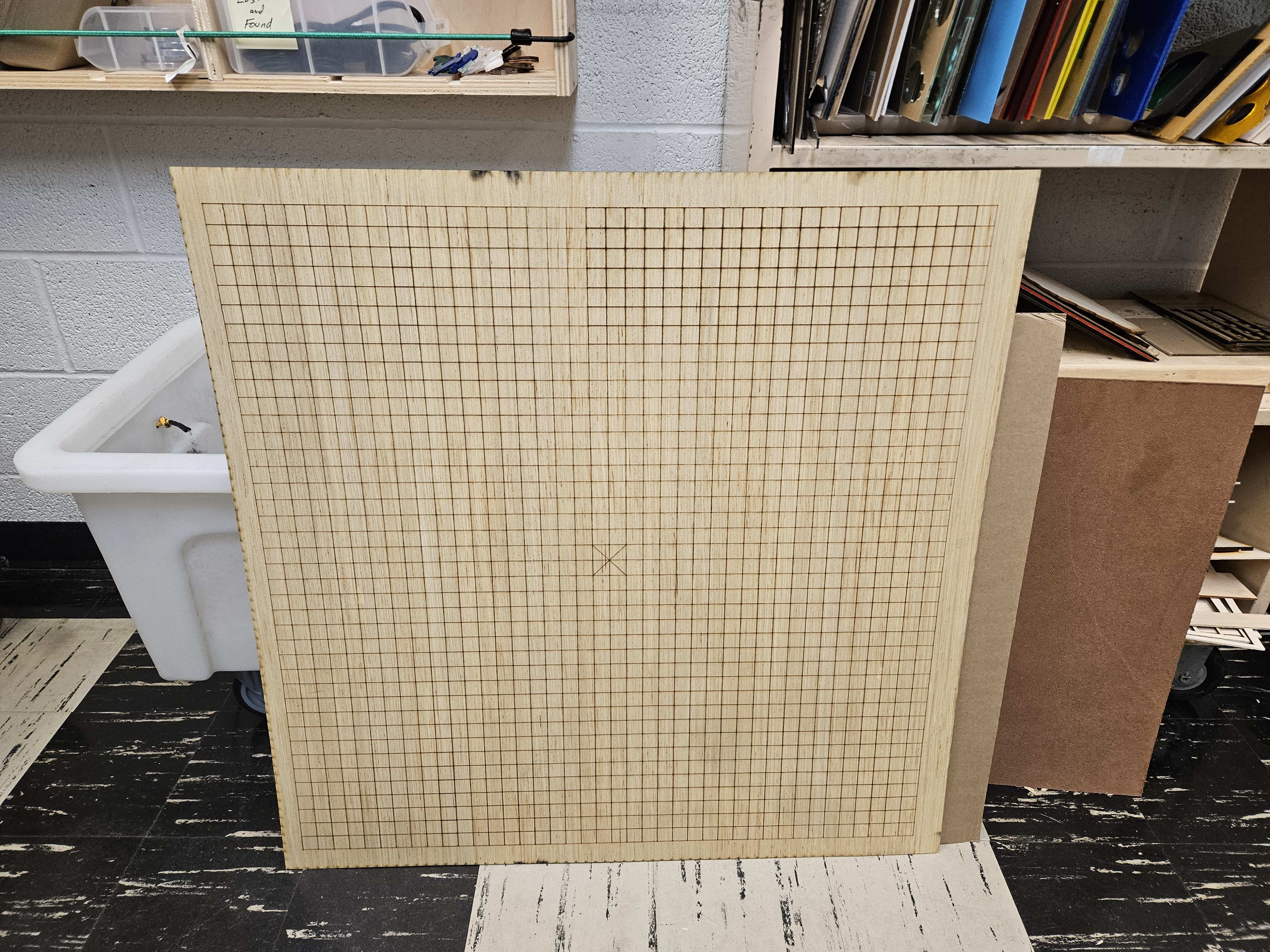

I also verified the robotic system’s performance. First, a maximum translation of 10 cm takes at most 0.4 s, which leaves ample time. Second, I determined that grbl has a receive buffer: several translation commands can be queued, and the firmware will execute them sequentially, in order. This fits nicely with our system, which uses a Kalman filter to generate increasingly accurate predictions as new frames are processed. Lastly, with the help of a MechE roommate, I acquired a base board of about 3 ft × 3 ft to anchor the robot. A grid with 2 cm spacing was laser-engraved onto the wood, with a special marking at the center. This will help us determine the robot’s location relative to the camera (and thus relative to the ball), and it also serves as a good visual reference. We can also mark where the robot should rest so that it is placed in the same spot every time.
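One way to take advantage of that buffer is the character-counting idea described in grbl’s streaming documentation. Instead of waiting for each `ok`, the host keeps sending lines as long as the unacknowledged bytes still fit in grbl’s serial receive buffer. The sketch below assumes a 128-byte buffer, the commonly cited default for grbl on an Arduino Uno (worth checking against the actual build), and a pyserial-style `port`. `stream` and `_wait_for_ack` are hypothetical names.

```python
"""Sketch of character-counting streaming to grbl (assumptions noted)."""

RX_BUFFER_SIZE = 128  # assumed size of grbl's serial receive buffer, in bytes


def _wait_for_ack(port, in_flight):
    """Block until grbl acknowledges the oldest unacknowledged line."""
    while True:
        reply = port.readline().decode("ascii", errors="replace").strip()
        if reply.startswith("ALARM"):
            raise RuntimeError(f"grbl alarm: {reply}")
        if reply == "ok" or reply.startswith("error"):
            in_flight.pop(0)  # grbl answers lines strictly in the order received
            if reply != "ok":
                raise RuntimeError(f"grbl reported {reply}")
            return
        # Other output (e.g. [MSG:...] lines or empty timeout reads) is ignored.


def stream(port, lines, rx_buffer_size=RX_BUFFER_SIZE):
    """Send G-code lines, keeping at most rx_buffer_size unacknowledged bytes."""
    in_flight = []  # byte lengths of lines sent but not yet acknowledged
    for line in lines:
        data = (line.strip() + "\n").encode("ascii")
        if len(data) > rx_buffer_size:
            raise ValueError(f"line longer than receive buffer: {line!r}")
        # Wait for acknowledgements until the new line fits in the buffer.
        while sum(in_flight) + len(data) > rx_buffer_size:
            _wait_for_ack(port, in_flight)
        port.write(data)
        in_flight.append(len(data))
    while in_flight:  # drain: wait until every remaining line is acknowledged
        _wait_for_ack(port, in_flight)
```

Because queued moves run strictly in order, each refined Kalman prediction sent this way moves the cup onward from the previous target rather than replacing it.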

Progress

I am putting the finishing touches on the final presentation and getting ready to present it on Monday or Wednesday. I also hope to help my two teammates finish the rest of the system and integrate it with the robot. Integration should be straightforward, since the Pi only needs to open the serial connection, run the initialization, and call gcode_goto(x, y) for each prediction.

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

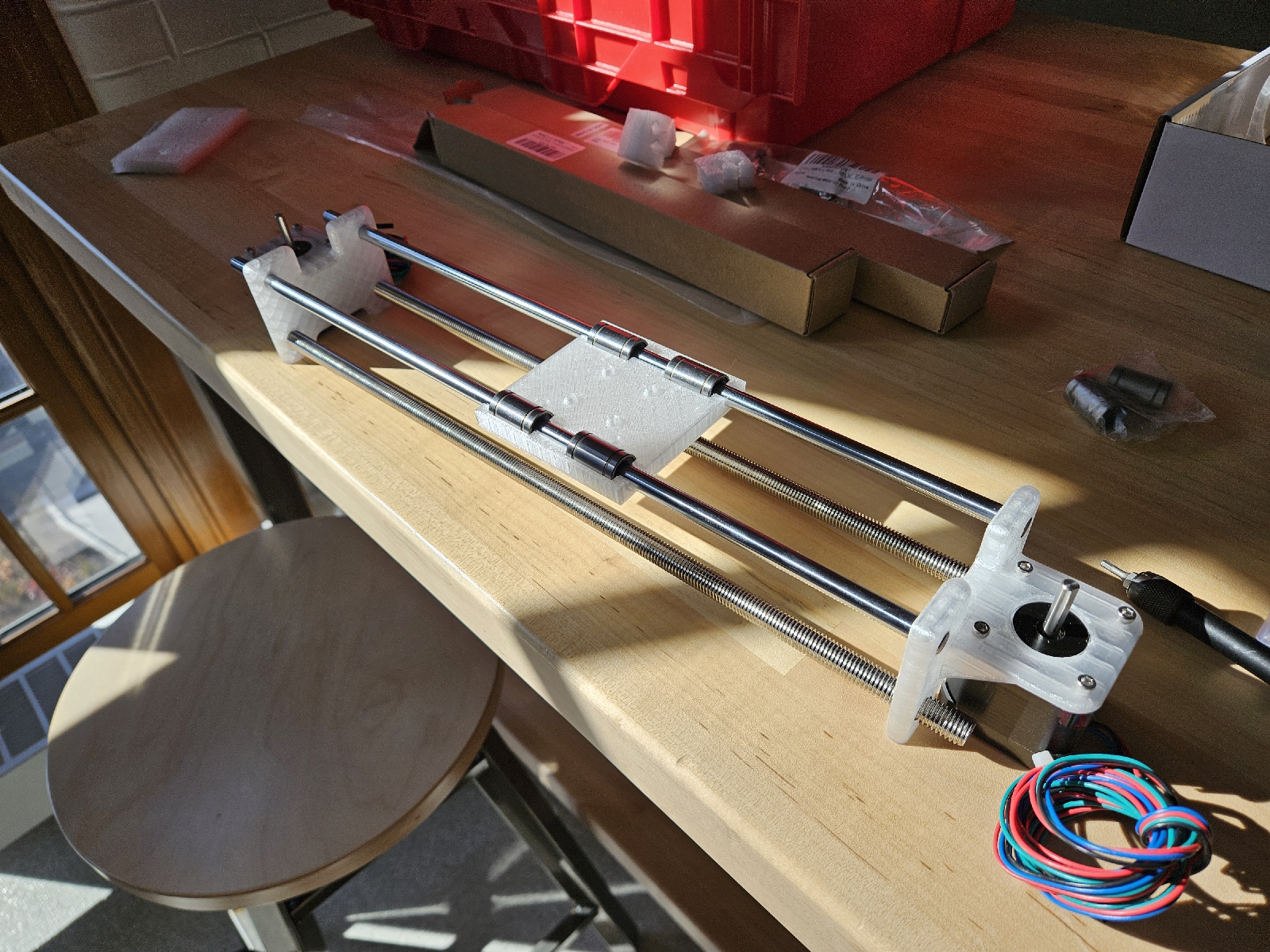

Robotics as a whole was mostly unfamiliar to me; even just driving a stepper motor was a leap. I think the Autodesk Instructables website was integral to my success in building the robot. It lowers the barrier to implementing a physical system, something many ECE students (myself included) are not very familiar with. grbl and CNC techniques were also new to me. I found online resources very useful, including the wiki pages of the grbl GitHub repository, which explained some of the configuration settings I was interested in. Capstone requires you to search for resources and potential solutions on your own; there is no reference solution you can ask the TAs about. I feel I developed some independence in building my robot. I think it’s important to realize that it’s okay not to build everything from scratch. Technology develops incrementally, building on what already exists. There is no need to reinvent the wheel if the blueprint is right in front of you.