Status Report for 11/30

As our individual portions near completion, we have shifted our focus to integrating the project. See Gordon’s individual status report for more details on 3D coordinate generation. Since Gordon is in charge of most of the hardware integration, I took charge of drawing out what the real-world mapping would look like, and ran a calibration test to determine how many pixels in the camera frame correspond to how many centimeters in real life. Below is a picture of how our mapping is visualized and connected between the camera/RPi and the XY robot.
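The pixel-to-centimeter calibration described above can be sketched roughly as follows. This is only an illustration under assumptions: the function names, the origin pixel, and all numbers are made up for the example, and it assumes a single uniform scale factor across the frame (no lens distortion or perspective correction).

```python
def calibrate(pixel_distance, real_distance_cm):
    """Return a cm-per-pixel scale from one measured reference length.

    Assumes the reference object lies in the same plane the robot moves in.
    """
    return real_distance_cm / pixel_distance


def pixels_to_cm(px, py, origin_px, cm_per_pixel):
    """Map a pixel coordinate to centimeters relative to a chosen origin pixel.

    The origin pixel (hypothetical here) would be wherever the robot's
    (0, 0) position appears in the camera frame.
    """
    origin_x, origin_y = origin_px
    return ((px - origin_x) * cm_per_pixel, (py - origin_y) * cm_per_pixel)


# Made-up numbers: a 50 cm ruler that spans 200 pixels in the frame.
scale = calibrate(pixel_distance=200, real_distance_cm=50)
position_cm = pixels_to_cm(420, 140, origin_px=(320, 240), cm_per_pixel=scale)
```

Note that image rows usually grow downward, so the sign of the second coordinate may need to be flipped depending on how the robot's axes are oriented.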

For the code that integrates everything, I worked with Jimmy to create “main” files that tie together the code for operating the 2D and 3D cameras, detection, the Kalman filter, and the G-code interface. This is our final pipeline, and we got it to the point where all that remains is to physically align and calibrate the camera, after which we can begin testing the project end to end, from camera to robot. We actually made two “main” files: one for a simpler 2D pipeline (which does not activate the depth camera) and one for the full 3D pipeline, since I was still fine-tuning the 3D detection and coordinate generation while Jimmy wrote the 2D pipeline code. We plan to test the 2D pipeline first, since switching to 3D will be as easy as running a different file.

On the camera and prediction side, Jimmy has completed all implementation details. The 3D Kalman filter is complete, but it still needs to be tested against real-world coordinate systems to verify its correctness and to tune the acceleration variables. Roadblocks along the way included a producer/consumer problem between threads, which came up when we used multithreading to increase the camera FPS and was eventually resolved. Currently, the biggest unknown and risk for our project remains the integration, which we will be working on tirelessly until demo day. Jimmy will focus mostly on the software integration of camera detection, tracking, and prediction on the Raspberry Pi. The following video is a real-time capture of the detection and Kalman prediction for a ball being thrown (more details in the individual report).

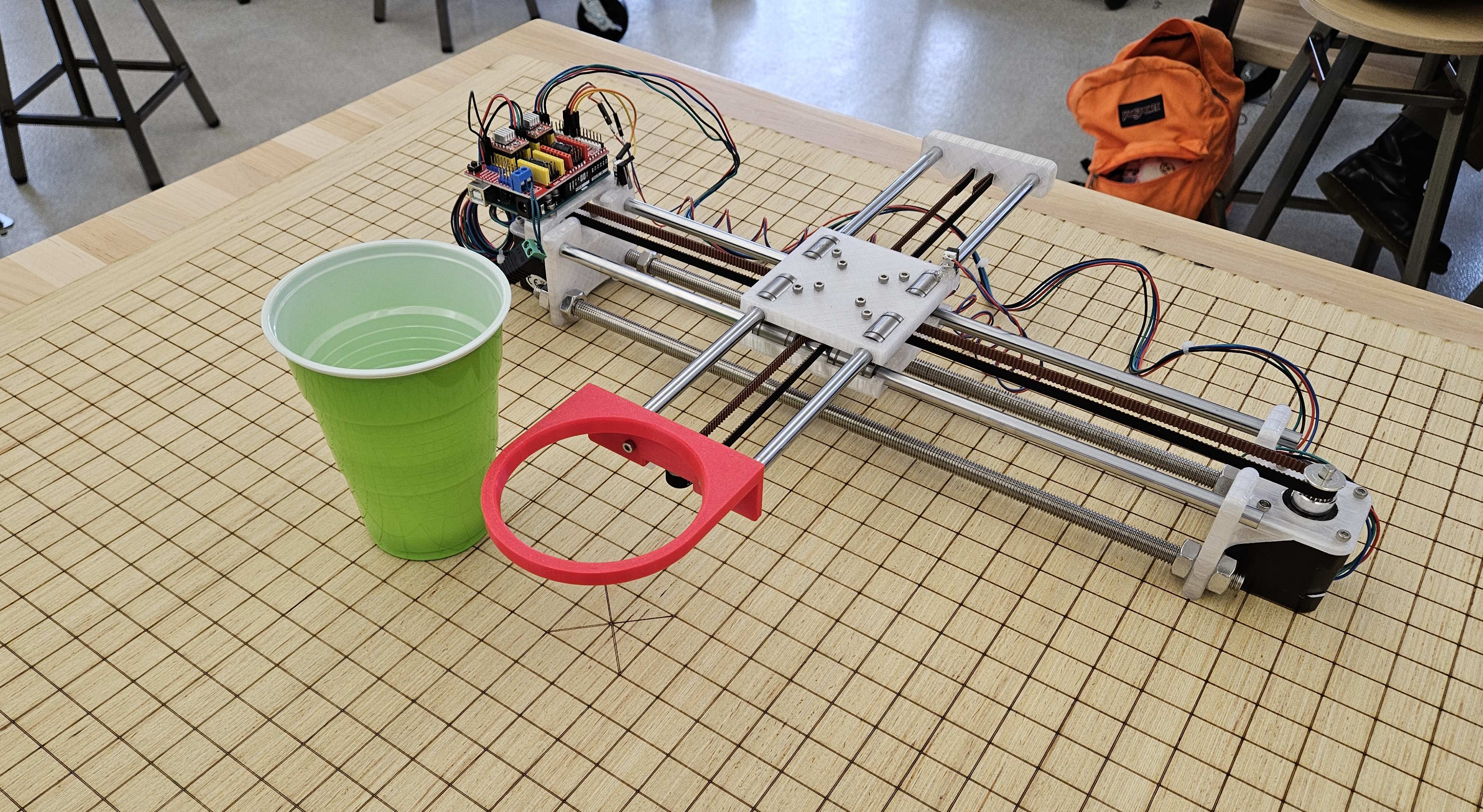
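For readers unfamiliar with the producer/consumer issue mentioned above, here is a minimal sketch of one common way to handle it in Python. It is not necessarily how we resolved it: the function names are hypothetical, the "frames" are just integers standing in for camera images, and the policy of dropping stale frames is an assumption that suits real-time tracking.

```python
import queue
import threading


def capture_loop(frames, frame_queue):
    """Producer: publish each frame, discarding a stale one if the consumer lags."""
    for frame in frames:
        try:
            frame_queue.put_nowait(frame)
        except queue.Full:
            try:
                frame_queue.get_nowait()  # drop the frame nobody consumed yet
            except queue.Empty:
                pass  # the consumer took it in the meantime
            frame_queue.put_nowait(frame)  # safe: this is the only producer
    frame_queue.put(None)  # sentinel telling the consumer to stop


def process_loop(frame_queue, results):
    """Consumer: stand-in for detection and Kalman prediction on each frame."""
    while True:
        frame = frame_queue.get()  # blocks until a frame is available
        if frame is None:
            break
        results.append(frame)


def run_pipeline(frames):
    frame_queue = queue.Queue(maxsize=1)  # hold only the most recent frame
    results = []
    consumer = threading.Thread(target=process_loop, args=(frame_queue, results))
    consumer.start()
    capture_loop(frames, frame_queue)
    consumer.join()
    return results


processed = run_pipeline(range(100))
```

With a queue of size one, the consumer always works on a recent frame instead of falling behind, at the cost of skipping frames when processing is slower than capture.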

On the robotics side, everything is complete. See Josiah’s status report for detailed documentation on what was accomplished. The main points are that serial communication with the firmware running on the Arduino works through a Python script (over a simple USB cable), and that multiple translation commands can be queued to grbl because it has an RX buffer. This fits well with how the Kalman filter operates: it iteratively generates incrementally better predictions, which can be passed to the robot as they are updated.
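As a rough illustration of queuing several moves into grbl's RX buffer, the sketch below tracks how many bytes are still unacknowledged and only waits for a reply when the next command would not fit. It is not Josiah's script: `GrblStreamer` and `format_move` are hypothetical names, the 128-byte buffer size is an assumption about a default grbl build, and the port is any object with `write` and `readline` (in practice a pyserial `serial.Serial` would fit that shape).

```python
RX_BUFFER_SIZE = 128  # assumed size of grbl's serial receive buffer, in bytes


def format_move(x, y):
    """Build a rapid-move G-code line (units depend on the grbl configuration)."""
    return f"G0 X{x:.2f} Y{y:.2f}\n"


class GrblStreamer:
    """Send G-code lines without overflowing the controller's RX buffer."""

    def __init__(self, port):
        self.port = port    # needs write(bytes) and readline() -> bytes
        self.pending = []   # byte lengths of lines not yet acknowledged

    def send(self, line):
        data = line.encode("ascii")
        # Wait for acknowledgements until the new line fits in the buffer.
        while self.pending and sum(self.pending) + len(data) > RX_BUFFER_SIZE:
            self._wait_for_ack()
        self.port.write(data)
        self.pending.append(len(data))

    def _wait_for_ack(self):
        reply = self.port.readline().strip()
        # grbl answers each line with "ok" or "error:<code>".
        if reply == b"ok" or reply.startswith(b"error"):
            self.pending.pop(0)
```

Each refined Kalman prediction could then be passed along with something like `streamer.send(format_move(x, y))`, so newer targets are queued while the robot is still executing earlier ones.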