The 2D ball detection is complete: we can now reliably detect the ball and generate x and y coordinates for its position within the camera frame. Jimmy had ironed out the Kalman filter code, so I was able to port it into the 2D detection file. With the Kalman function in place, I set it up so that when the ball is detected, a recording of the prediction and trajectory is written to a video file. We ran a quick test, and it turned out that after adding the Kalman function the FPS dropped to about 15. This happens because the code retrieves a frame and then must finish all processing on it (detection, then Kalman) before it can retrieve the next one. The FPS dropped to the point where the recorded video captured the ping pong ball in only 2-3 frames, which understandably led to a poor Kalman prediction.

This is concerning, but we knew from the start that FPS would be an issue. We are still confident that the Kalman prediction is correct, based on Jimmy’s testing with recorded videos. There are also several ways to increase the FPS. For one, displaying the frame takes computation and slows frame retrieval, and since the display is purely for debugging or demonstration, actual runs of the system don’t need to show the frame on a monitor. Based on some simple testing I did, turning off the display reliably increases the FPS by 10 or more. Jimmy is also working on using threading to separate frame retrieval from frame processing, which in theory should yield more frames as well.
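To illustrate the threading idea, here is a minimal sketch of one way to decouple frame retrieval from processing. It is not Jimmy's actual code: the class name `LatestFrameGrabber` and the `read_frame` callable are hypothetical stand-ins for whatever call pulls a frame from the camera. A background thread keeps fetching frames and stores only the newest one, so slow processing never blocks retrieval.

```python
import threading


class LatestFrameGrabber:
    """Pulls frames on a background thread and keeps only the newest one.

    `read_frame` is a hypothetical callable that returns the next frame,
    or None when the source has no more frames.
    """

    def __init__(self, read_frame):
        self._read_frame = read_frame
        self._lock = threading.Lock()
        self._latest = None   # most recent frame seen so far
        self._count = 0       # total number of frames retrieved
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        # Retrieval loop: never waits on detection or Kalman processing.
        while not self._stopped.is_set():
            frame = self._read_frame()
            if frame is None:
                break
            with self._lock:
                self._latest = frame
                self._count += 1

    def latest(self):
        """Return (newest frame, number of frames retrieved so far)."""
        with self._lock:
            return self._latest, self._count

    def stop(self):
        self._stopped.set()
        self._thread.join(timeout=1.0)
```

The processing loop would then call `latest()` each iteration and run detection and Kalman on whatever frame is newest, skipping frames it was too slow to handle. Because of Python's global interpreter lock, this mainly helps when retrieval spends its time waiting on the camera rather than computing.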

With the 2D work done on my end for now, I turned my attention to setting up 3D detection and coordinate generation. This is uncharted territory, as none of us has any experience working with the camera’s depth feature. I started by building on an existing example that ships with the DepthAI library. The example activates the left and right mono cameras as a stereo pair to provide depth data for the whole frame, then displays the depth values inside an ROI (region of interest). The ROI is adjustable, and in the example you can move it with the WASD keys. My idea was to activate the color camera at the same time and use it to detect the ball; once the ball is detected, the ROI moves to the ball’s location to read its depth. I wrote the code, and for the demo we were able to showcase the ball’s depth being reported as we moved it around on screen. There was also an alignment issue between the two camera systems, but I took some time and fixed that. This was promising, but there is still more to do: I suspect the returned depth is not the real-world 3D Z coordinate but the shortest (straight-line) distance from the camera to the ball. This is only speculation, and more rigorous testing is needed to determine whether it is true. For now, I’ve worked out the math that translates the shortest distance from the camera into the real-world 3D Z coordinate, and I have been able to generate 3D coordinates of the ball position while the camera is running.
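As a rough illustration of the distance-to-Z conversion, here is a sketch under two assumptions that are not confirmed above: that the reported depth really is the straight-line distance to the ball, and that the camera follows a standard pinhole model. The function name and the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and principal point, in pixels) are hypothetical; real values would come from the camera's calibration.

```python
import math


def distance_to_xyz(u, v, distance, fx, fy, cx, cy):
    """Convert a pixel (u, v) and a straight-line distance into camera-frame
    (X, Y, Z), assuming a pinhole camera with the given intrinsics."""
    # Direction of the ray through the pixel, scaled so its Z component is 1.
    x_n = (u - cx) / fx
    y_n = (v - cy) / fy
    # Length of that ray; dividing the distance by it gives the Z coordinate.
    ray_length = math.sqrt(x_n ** 2 + y_n ** 2 + 1.0)
    z = distance / ray_length
    return x_n * z, y_n * z, z
```

At the center of the image the ray length is 1, so Z equals the measured distance; the correction grows as the ball moves toward the edges of the frame. If testing shows the camera already reports Z directly, only the X and Y lines would be needed.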

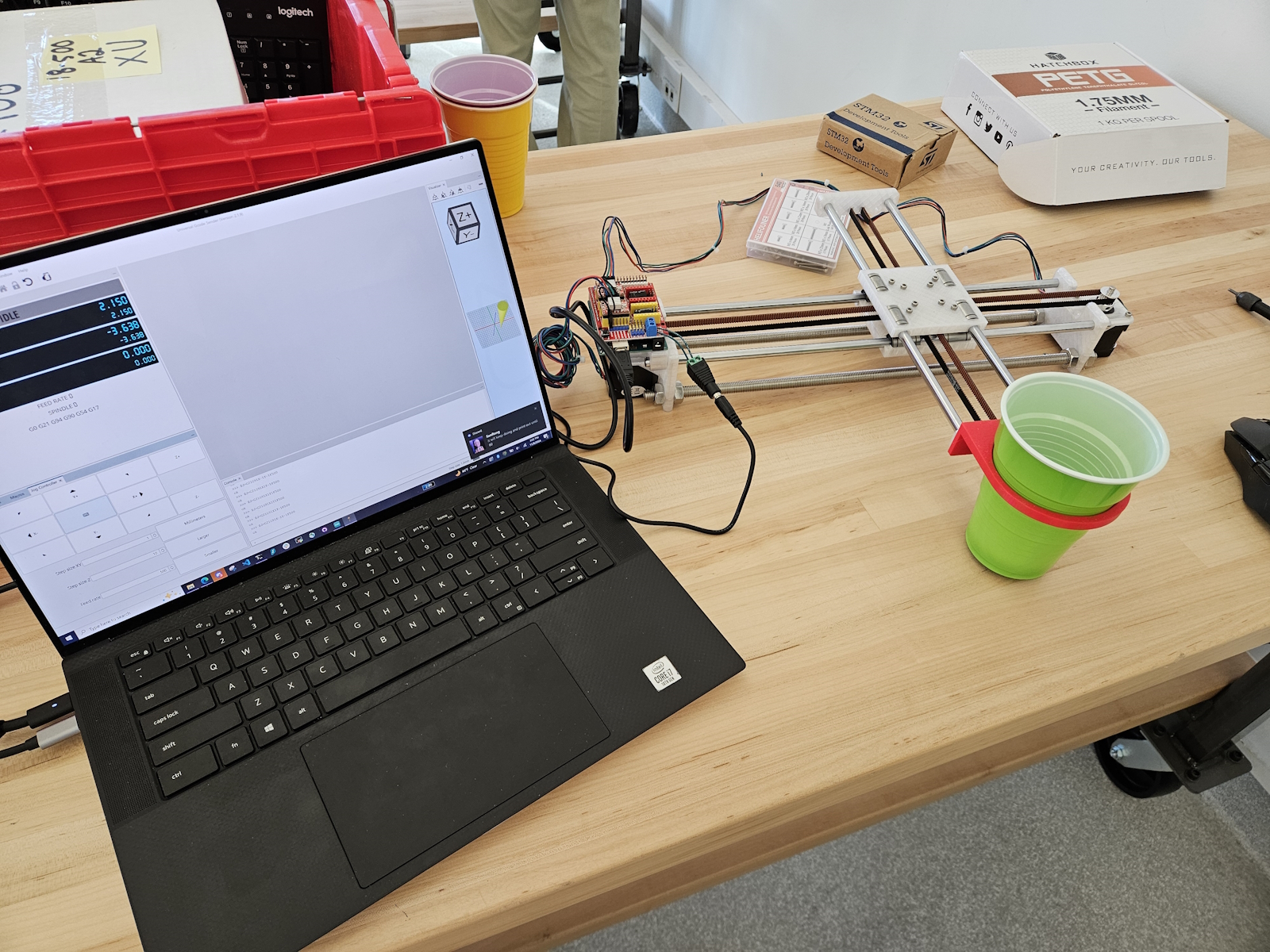

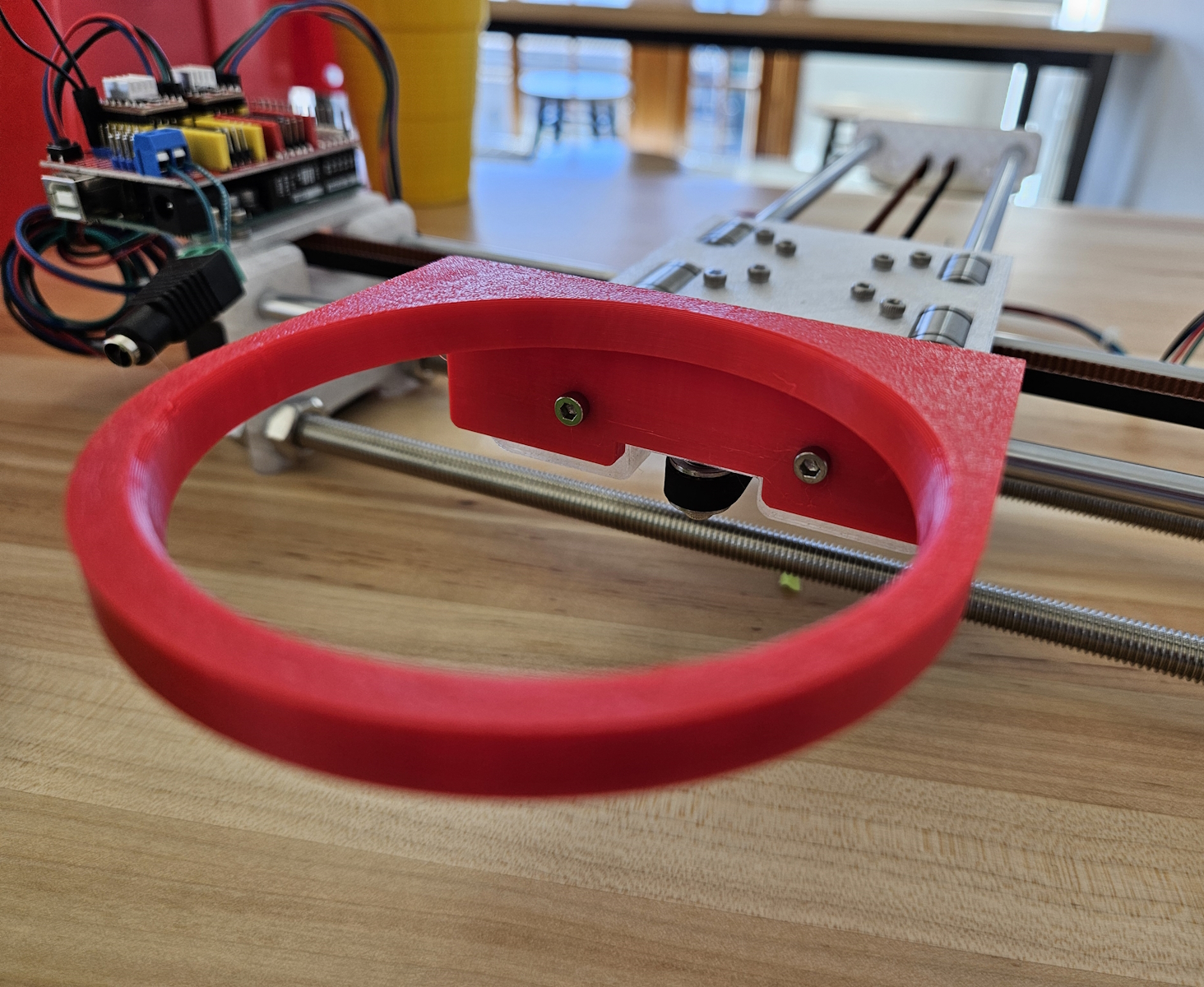

Either way, we are mostly confident in our ability to generate 3D coordinates relative to the camera’s location. Next, I thought through and defined a real-world coordinate system that the XY robot can also use. I also wrote out a plan for integrating what we have on the Pi with the robot side, as that integration is not well developed yet. Josiah has done a good job getting G-code to run reliably on the robot, so it is just a matter of accurately determining the landing coordinates and sending them over.

We are coming to the end of the semester, and even though there are still a few big question marks to tackle, we have set up quite a few work sessions to get it all done. I would say that we are currently on pace to finish within the next week or two, but it will require a solid group effort.

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge? We recognize that there are quite a few different methods (i.e. learning strategies) for gaining new knowledge — one doesn’t always need to take a class, or read a textbook to learn something new. Informal methods, such as watching an online video or reading a forum post are quite appropriate learning strategies for the acquisition of new knowledge.

I had never worked with either the AMD KRIA or a Raspberry Pi before, so learning the tools for each took significant time. I used Vivado and Vitis to set up the KRIA, and had to look through many AMD/Xilinx guides and forums whenever I ran into small bugs. For the Raspberry Pi (RPI), I also had to look through numerous official RPI guides and tutorials for setup and debugging (the forum was especially helpful with a bootup issue I ran into, giving me the exact LED error code and solution). I also watched online videos to get a better sense of what the RPI is and what it is capable of. For any code involving the camera, I relied on the numerous guide pages and forums that Luxonis provides. Finally, I relied on TA help from Varun and Nathan, who have expertise in the KRIA and the RPI/OAK-D camera respectively, for other small issues that online resources couldn’t resolve.