What we did as a group:

- We all had a reduced workload because of Thanksgiving, and work was mostly done remotely. We continued working on the algorithm and the audio recordings, and we did feasibility research for a new feature we were considering.

Hubert:

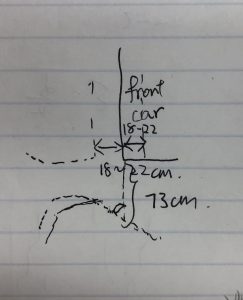

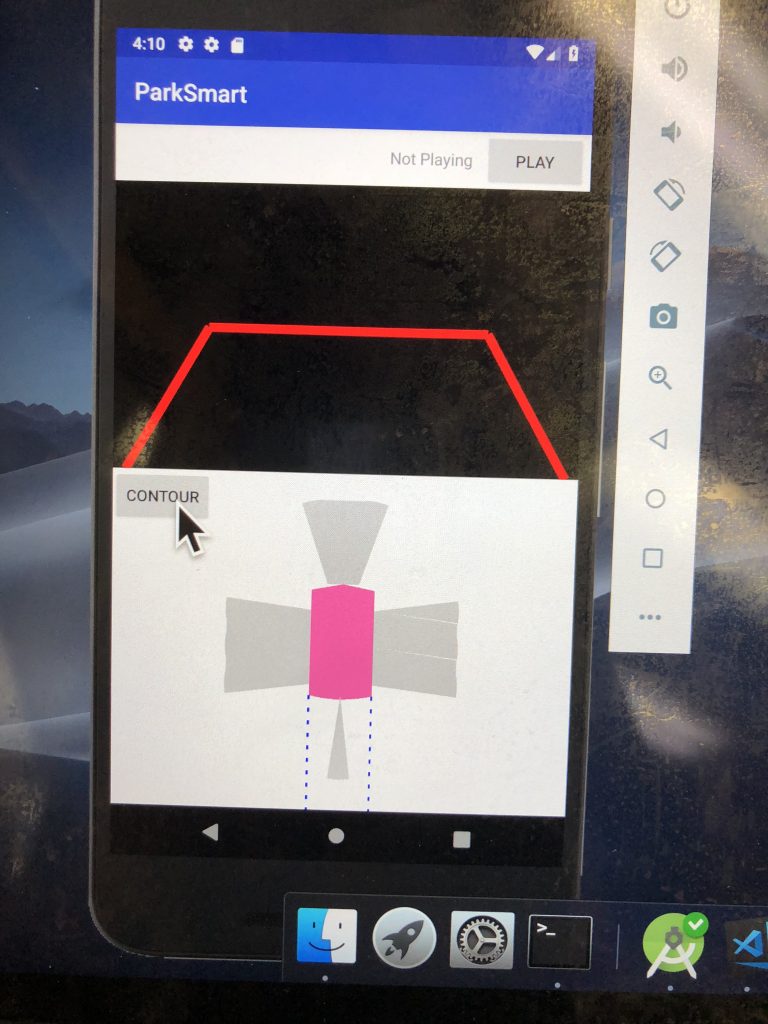

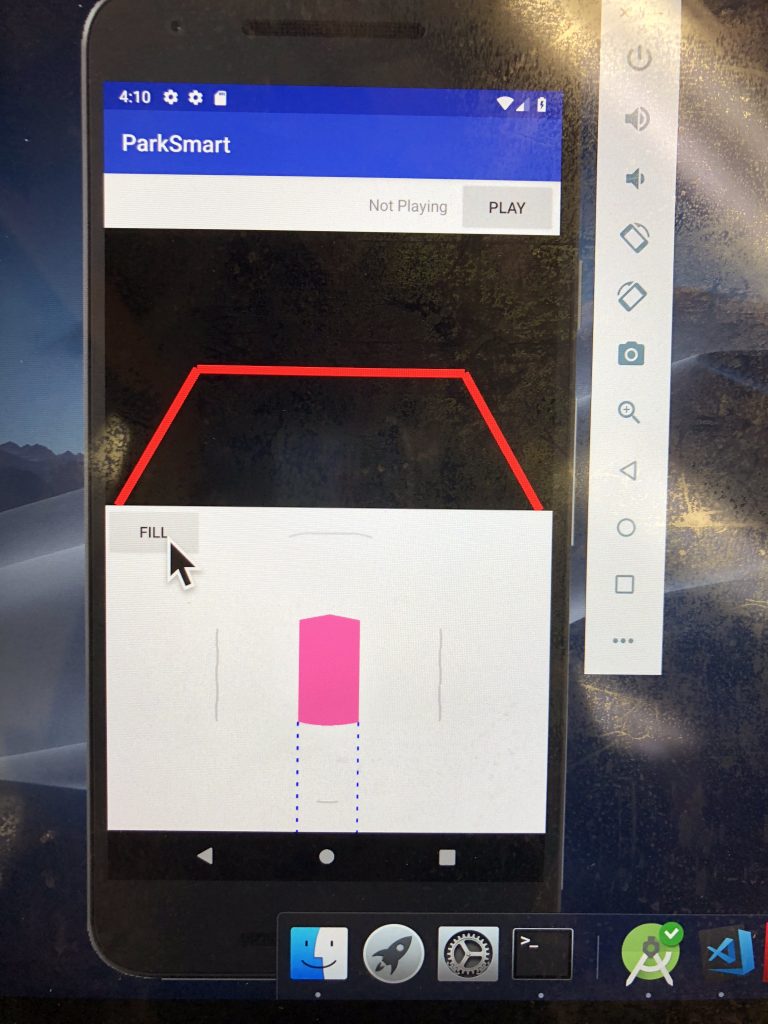

- Feasibility research on an animation that instructs drivers to move closer to or farther from the right

- The animation would be shown during the stage where the driver is trying to pull up to a position parallel with the reference car in front of the parking spot.

Zilei:

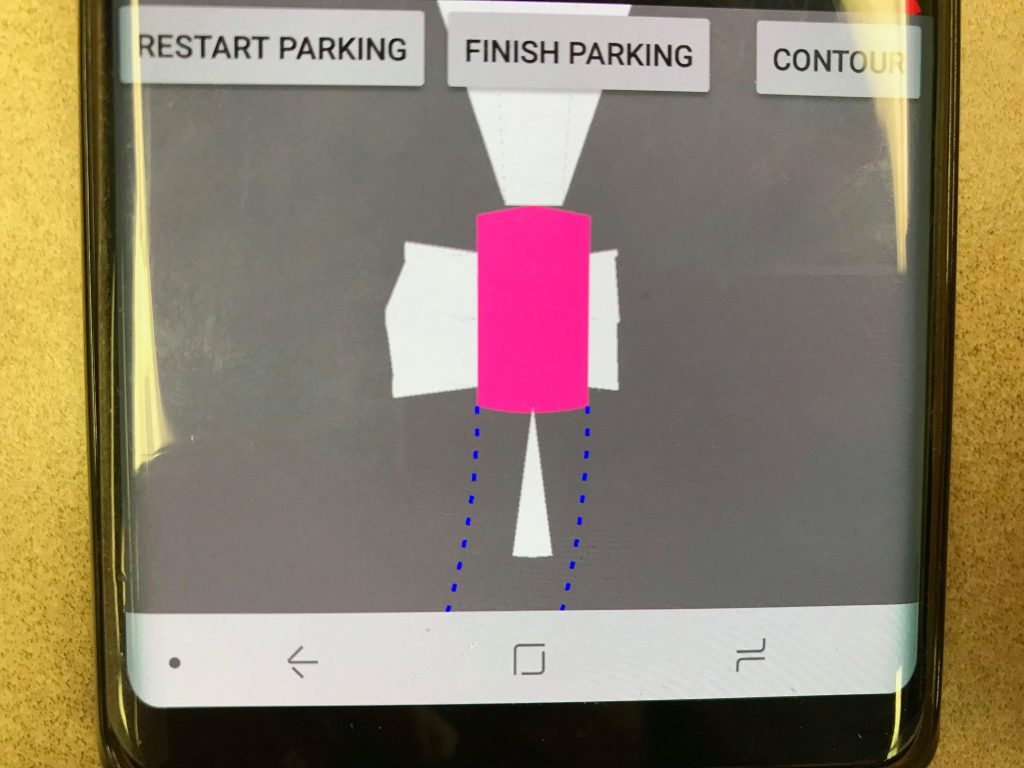

- Progress on the algorithm

- Still using a text overlay for now; integration with the audio is planned for the upcoming week

- Redesign of the different sub-states within a state

- The code is currently messy, but it covers the different scenarios fairly comprehensively

Yuqing:

- Recording of the audio files

- Playing audio files from the Android app