What we did as a team

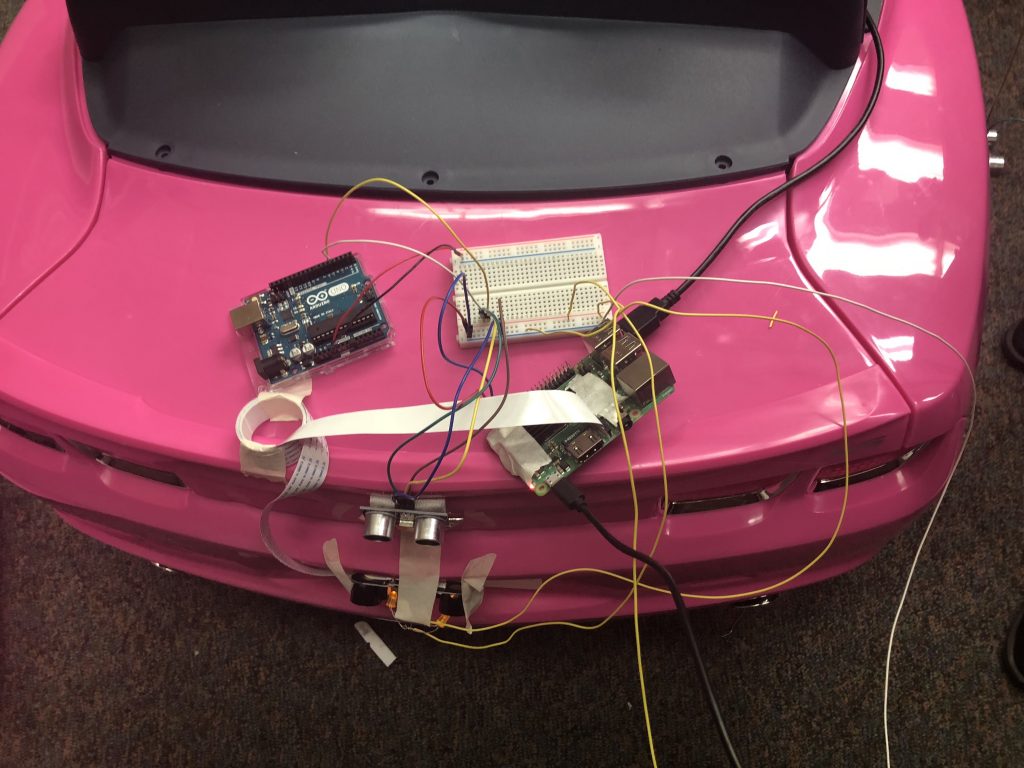

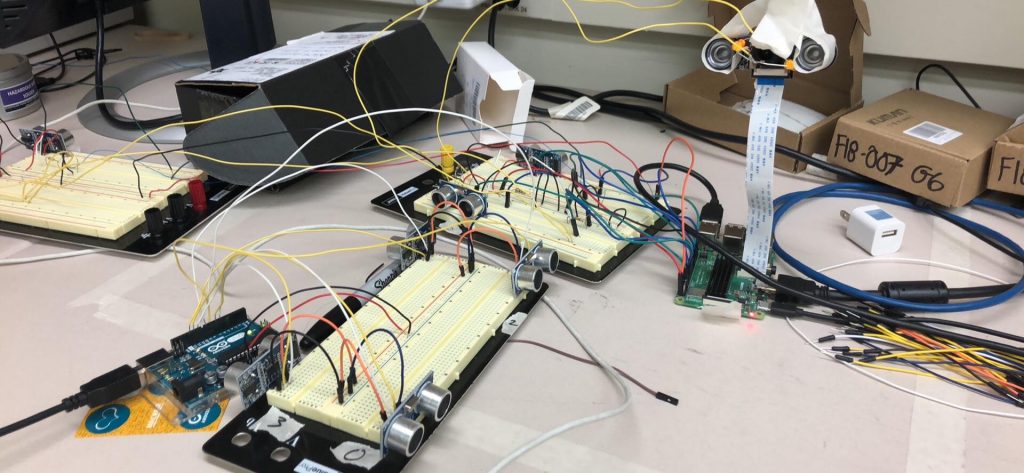

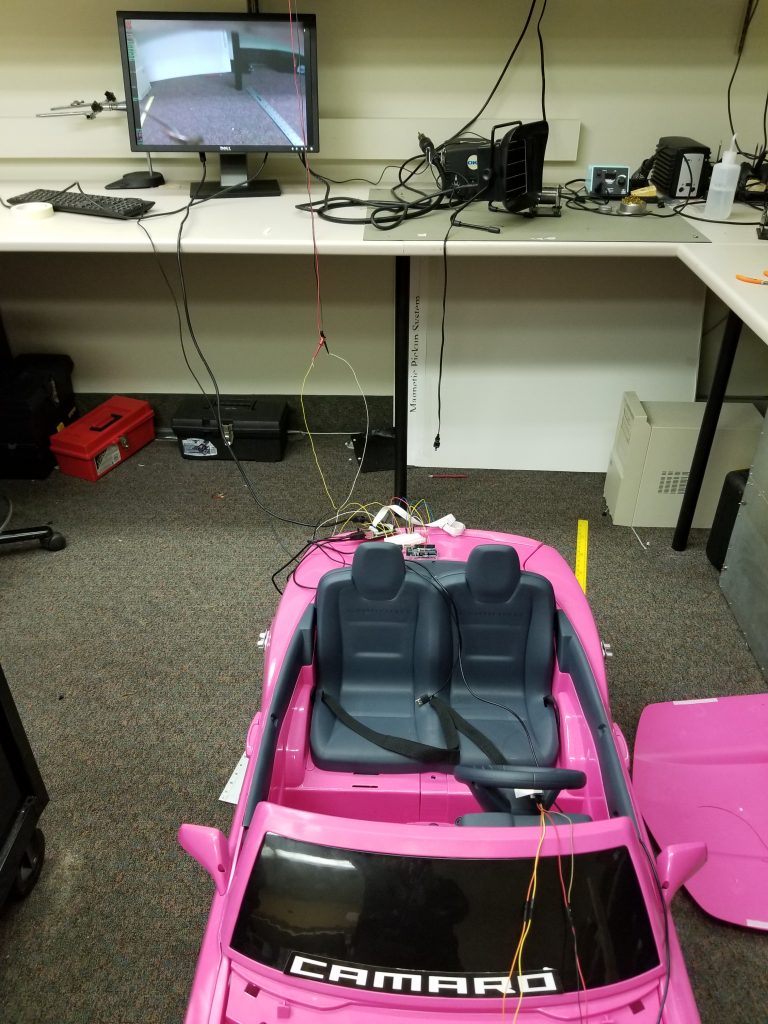

- Attached ultrasonic sensors, camera, and gyroscope to the testing vehicle

- Measured dimensions of the vehicle and discussed solutions for sensor attachment & wiring

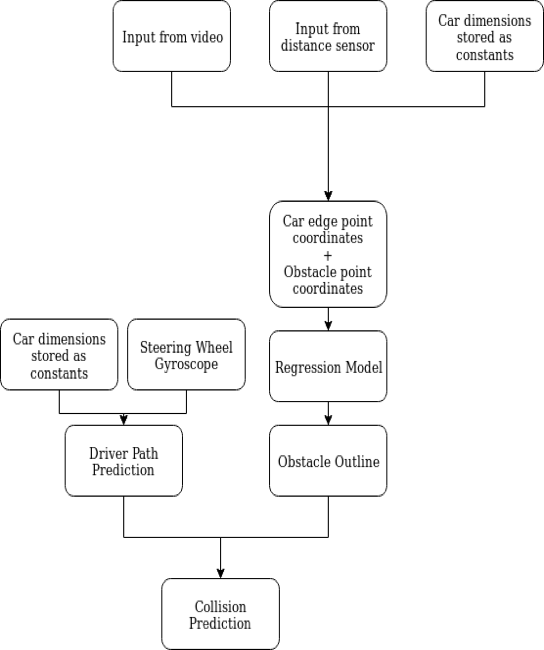

- Obstacle outline with all 9 ultrasonic sensors

- Discussed potential changes to the sensor positions

Hubert

- Attached the camera to the back of the vehicle and connected it to the RPi

- Implemented fast video streaming into a custom view in the Android app

- Added a view container for the path prediction overlay on top of the streamed video

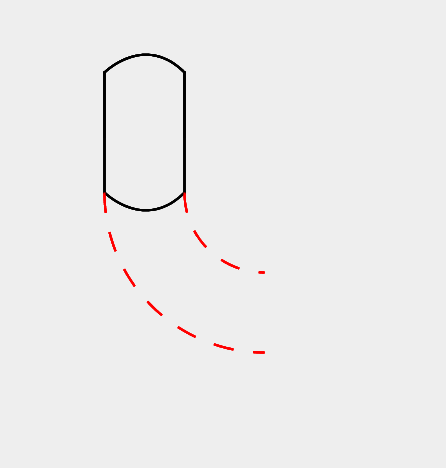

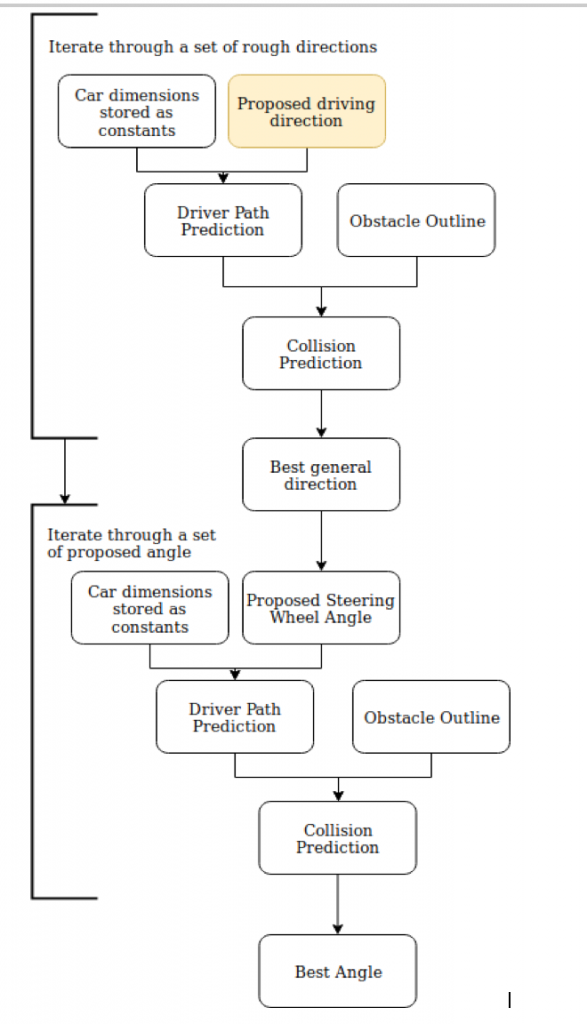

- Path prediction conceptual effect:
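The notes don't say how the overlay path is computed. As a rough sketch only, the snippet below uses the kinematic bicycle model (a swapped-in technique, not necessarily what the app does) to turn a front-wheel angle into rear-axle path points, plus left and right guide lines offset by half the track width. `predict_path`, `offset_path`, `WHEELBASE_M` and `TRACK_M` are hypothetical; the real values would come from the measured car dimension constants, and mapping metres to screen pixels is left out.

```python
import math

# Hypothetical placeholder dimensions (metres); replace with the measured car constants.
WHEELBASE_M = 0.26
TRACK_M = 0.16


def predict_path(steer_deg, wheelbase, distance, steps=20):
    """Return (x, y, heading) samples of the rear-axle centre along a
    kinematic-bicycle-model arc.

    Frame: origin at the rear-axle centre, x to the right, y forward.
    steer_deg > 0 turns the front wheels left; distance < 0 means reversing.
    """
    k = math.tan(math.radians(steer_deg)) / wheelbase  # path curvature, 1/m
    samples = []
    for i in range(steps + 1):
        s = distance * i / steps  # arc length travelled so far
        theta = k * s             # heading change after s metres
        if abs(k) < 1e-9:
            samples.append((0.0, s, 0.0))  # wheels straight: straight line
        else:
            samples.append(((math.cos(theta) - 1.0) / k, math.sin(theta) / k, theta))
    return samples


def offset_path(samples, offset):
    """Shift each sample sideways by `offset` metres (positive = left of the car body)."""
    return [(x - offset * math.cos(t), y - offset * math.sin(t)) for x, y, t in samples]


if __name__ == "__main__":
    centre = predict_path(steer_deg=10.0, wheelbase=WHEELBASE_M, distance=-1.0)
    left_guide = offset_path(centre, TRACK_M / 2)
    right_guide = offset_path(centre, -TRACK_M / 2)
    print(left_guide[-1], right_guide[-1])
```

Every sample lies on a circle of radius `wheelbase / tan(steer)` around the turning centre, so the overlay only needs the current wheel angle and the car dimensions.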

Zilei

- Added car dimension constants to our library

- Finished sensor initialization & indexing for RPISensorAdpator, which initializes all sensor coordinates & types for later use by the drawing tool

- Differentiates between front-left, front-right, left, right, and back ultrasonic sensors so that each sensor's detection angle is determined by its placement type

- Sensor positions & types relative to the car are initialized at compile time
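A minimal Python sketch of the idea above (the actual adaptor is in our Android library, and this is not its code): a fixed sensor table where each placement type carries the direction the sensor faces, so a distance reading can be projected into an outline point. `Placement`, `UltrasonicSensor`, `SENSORS`, `obstacle_point` and all coordinates are hypothetical placeholders; the real table lists all 9 sensors at their measured positions, and treating each sensor as a single ray (rather than a detection cone) is a simplification.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    """Placement type; the value is the heading the sensor faces, in degrees
    clockwise from straight ahead (0 = forward, 90 = right, 180 = back)."""
    FRONT_LEFT = -45.0
    FRONT_RIGHT = 45.0
    LEFT = -90.0
    RIGHT = 90.0
    BACK = 180.0


@dataclass(frozen=True)
class UltrasonicSensor:
    index: int            # position of this sensor's value in each reading list
    placement: Placement
    x: float              # metres to the right of the car centre
    y: float              # metres ahead of the car centre


# Fixed table built once at import time. Placeholder coordinates, one sensor per type.
SENSORS = (
    UltrasonicSensor(0, Placement.FRONT_LEFT, -0.08, 0.20),
    UltrasonicSensor(1, Placement.FRONT_RIGHT, 0.08, 0.20),
    UltrasonicSensor(2, Placement.LEFT, -0.10, 0.0),
    UltrasonicSensor(3, Placement.RIGHT, 0.10, 0.0),
    UltrasonicSensor(4, Placement.BACK, 0.0, -0.20),
)


def obstacle_point(sensor, distance):
    """Project a distance reading along the direction the sensor faces."""
    heading = math.radians(sensor.placement.value)
    return (sensor.x + distance * math.sin(heading),
            sensor.y + distance * math.cos(heading))


def outline(readings):
    """Turn one reading list (indexed like SENSORS) into outline points,
    skipping sensors that reported nothing (None)."""
    return [obstacle_point(s, readings[s.index])
            for s in SENSORS if readings[s.index] is not None]
```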

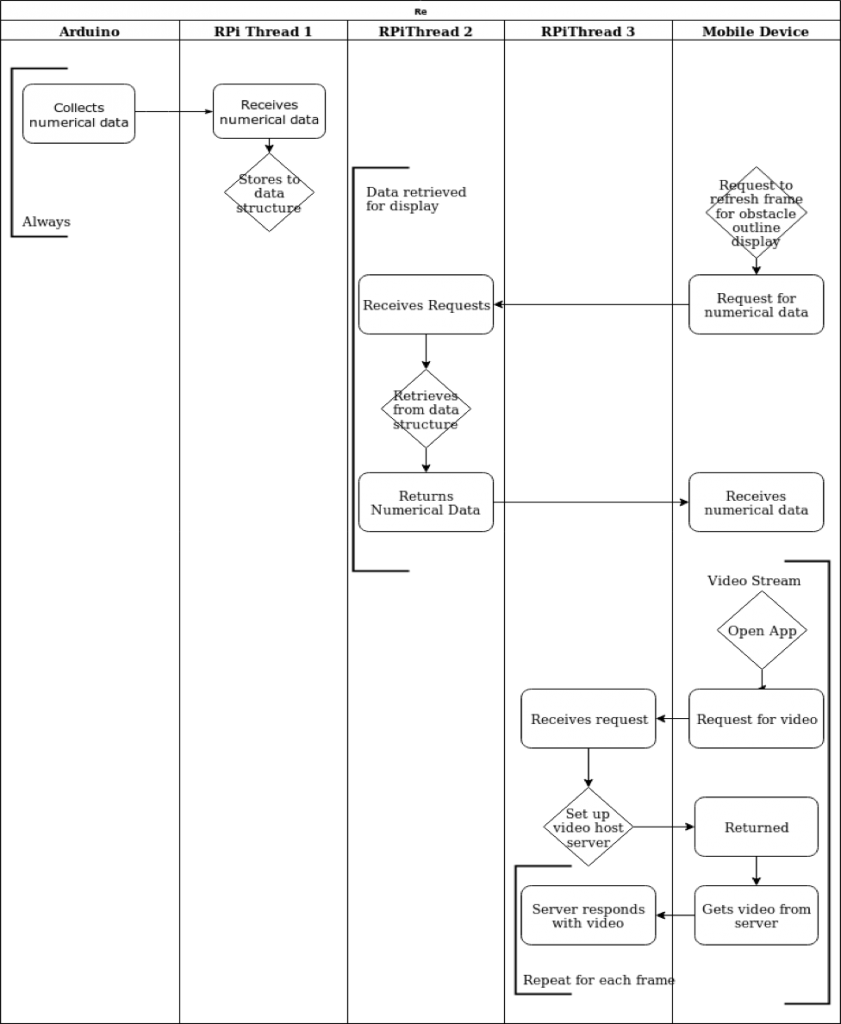

- Finished the RPi-to-Android numerical data parser, which completes the whole numerical data pipeline

- The parser splits each received list into individual readings

- Changed the RPi output format so that only a few digits are transmitted per reading

- Tested drawing with dynamic sensor readings

- Worked on socket networking to transfer the data; as of Sunday, we have a working, dynamically updated obstacle outline
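The notes don't specify the wire format. A minimal sketch, assuming the RPi sends one comma-separated, newline-terminated line per reading cycle over a TCP socket, with each value rounded to a fixed number of decimals to keep messages short. `format_readings`, `parse_readings` and `send_readings` are hypothetical names, and the receiving side is shown in Python only for illustration (our real parser runs in the Android app).

```python
import socket


def format_readings(readings, decimals=1):
    """RPi side: one list of readings -> one compact, newline-terminated line."""
    return ",".join(f"{r:.{decimals}f}" for r in readings) + "\n"


def parse_readings(line):
    """Receiver side: split one line back into a list of floats."""
    return [float(v) for v in line.strip().split(",") if v]


def send_readings(sock, readings, decimals=1):
    """Send one formatted line over a connected stream socket."""
    sock.sendall(format_readings(readings, decimals).encode("ascii"))


if __name__ == "__main__":
    # socketpair() gives two connected sockets in one process,
    # standing in for the RPi -> phone TCP link.
    rpi, phone = socket.socketpair()
    with rpi, phone, phone.makefile("r", encoding="ascii") as stream:
        send_readings(rpi, [12.345, 7.0, 150.06])
        print(parse_readings(stream.readline()))  # [12.3, 7.0, 150.1]
```

Ending each message with a newline gives the receiver a simple frame boundary, since TCP itself can split or merge writes.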

Yuqing

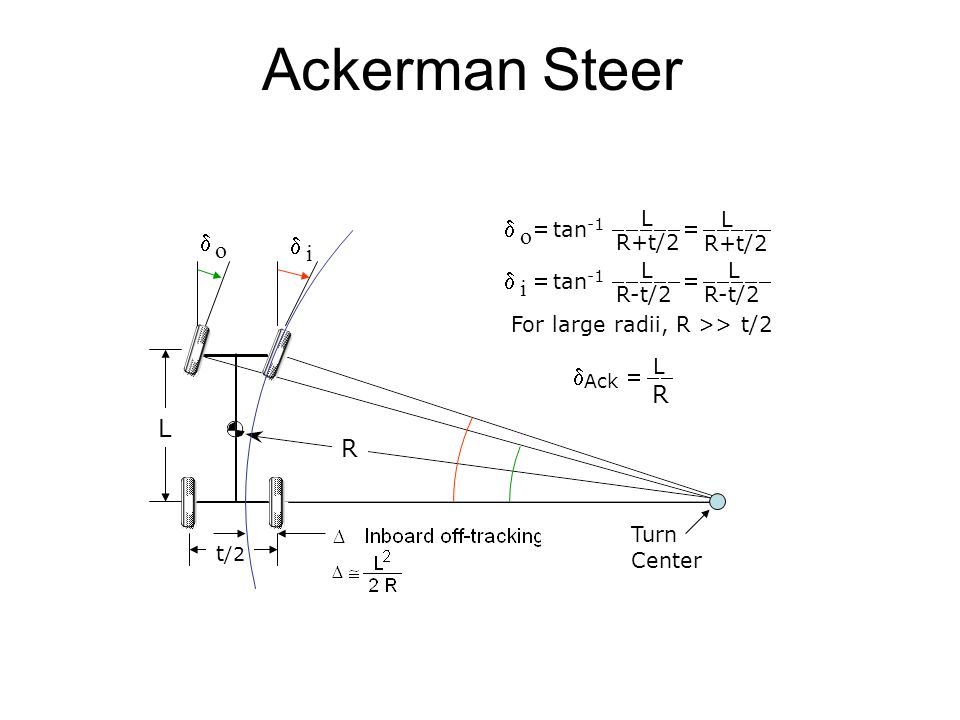

- Experimentally determined the relationship between steering wheel angle and car wheel angle

- The maximum car wheel turn angle is ~20 degrees (measured 19.89°)

- When the car wheel is turned to its maximum angle, the roll calculated from the gyroscope reading is around ±30°

- Hence, we are going to approximate the car wheel angle by multiplying the gyro roll reading by 2/3 (since ~20° / ~30° = 2/3)

- Modified the plotting algorithm using measurements of the test vehicle so that the drawn proportions are more realistic

- Connected 4 ultrasonic sensors and the gyroscope to the front Arduino

- Connected ultrasonic sensors to the back Arduino
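The roll-to-wheel-angle approximation above can be written as a small helper. Clamping the result to the measured 19.89° limit is our own assumption (it is not in the notes), and so is the sign convention, which may need flipping depending on how the gyroscope is mounted. `wheel_angle_from_roll` is a hypothetical name.

```python
MAX_WHEEL_ANGLE_DEG = 19.89   # measured maximum car wheel angle
ROLL_TO_WHEEL = 2.0 / 3.0     # ~20 deg of wheel angle per ~30 deg of gyro roll


def wheel_angle_from_roll(roll_deg):
    """Approximate the car wheel angle (degrees) from the gyroscope roll.

    Linear approximation, clamped to the measured mechanical limit so that
    roll readings beyond about +-30 deg cannot produce an impossible angle.
    """
    angle = roll_deg * ROLL_TO_WHEEL
    return max(-MAX_WHEEL_ANGLE_DEG, min(MAX_WHEEL_ANGLE_DEG, angle))


if __name__ == "__main__":
    for roll in (-45.0, -30.0, 0.0, 15.0, 30.0):
        print(roll, round(wheel_angle_from_roll(roll), 2))
```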