What we did this week as a group:

- Connected multiple ultrasonic sensors to the Raspberry Pi and read distance data from all of them

- Tested the accuracy of the distance data from the ultrasonic sensors, along with the sensors' valid distance range and angle range. What we have learnt:

  - The accuracy of the sensor data fluctuates a lot at the very beginning and then slowly stabilizes

  - Each sensor has a limited range of roughly 2 meters and a limited angle of around 15 degrees

  - Sensors occasionally glitch and return a distance reading that is way off. A filter such as taking the median of 5 readings will be needed

  - Interference between sensors can make both sensors extremely inaccurate. Therefore, sensors need to be far enough apart from each other to give accurate data

  - Interference between sensors gets extremely high if there are no obstacles within 1 m of the sensors
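The median-of-5 idea mentioned above could look roughly like the sketch below. It is only an illustration: the class name `MedianFilter`, the window size default, and the sample readings are assumptions made for this example, not part of our current script.

```python
from collections import deque
from statistics import median


class MedianFilter:
    """Sliding-window median over the most recent `window` readings (hypothetical helper)."""

    def __init__(self, window=5):
        if window < 1:
            raise ValueError("window must be at least 1")
        # deque with maxlen drops the oldest reading automatically
        self._readings = deque(maxlen=window)

    def update(self, distance_m):
        """Store a new reading and return the median of the current window."""
        self._readings.append(distance_m)
        return median(self._readings)


# Made-up readings in meters; 3.90 stands in for a single glitch.
filt = MedianFilter(window=5)
raw = [1.02, 1.01, 3.90, 1.03, 1.00, 0.99]
smoothed = [filt.update(r) for r in raw]
```

With a window of 5, one outlier never reaches the output because it can never be the middle value. The filter does not address the unstable readings right after start-up; those would still need to be discarded or waited out separately.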

- Connected the camera to the Raspberry Pi and enabled the night-vision camera

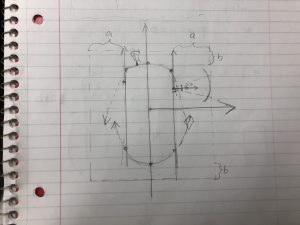

- Devised a baseline algorithm for plotting the surrounding environment based on the distance data

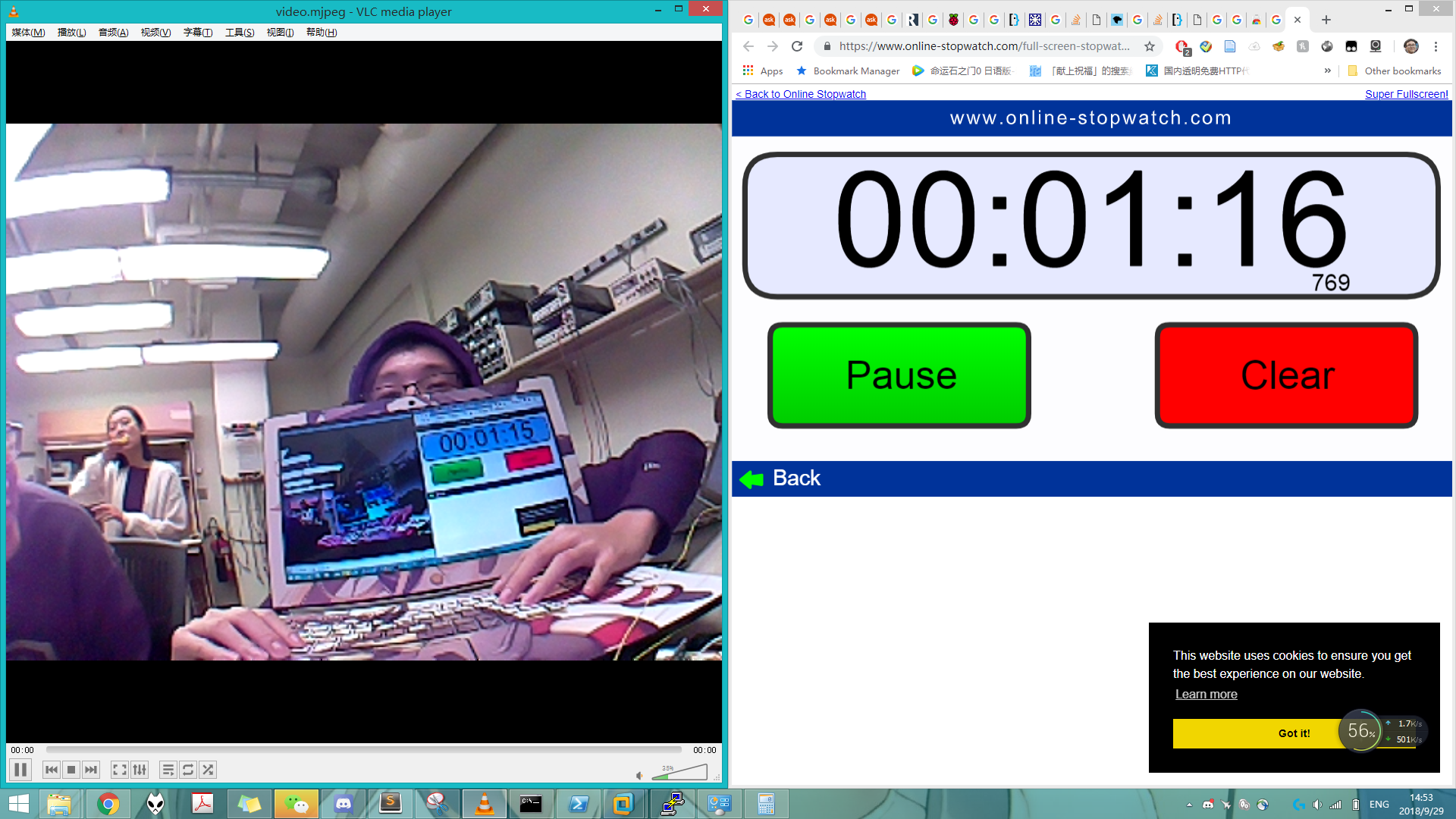

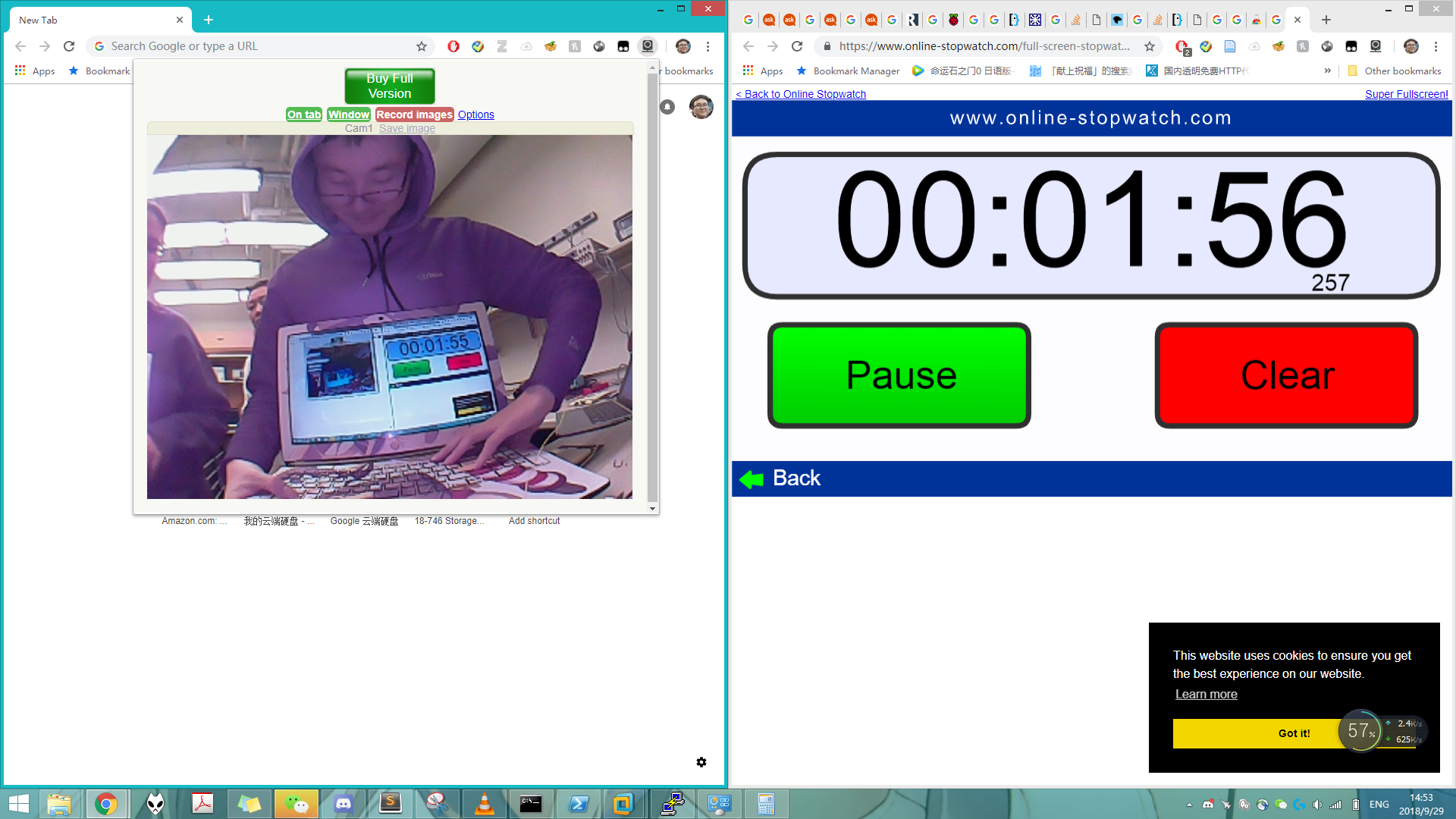

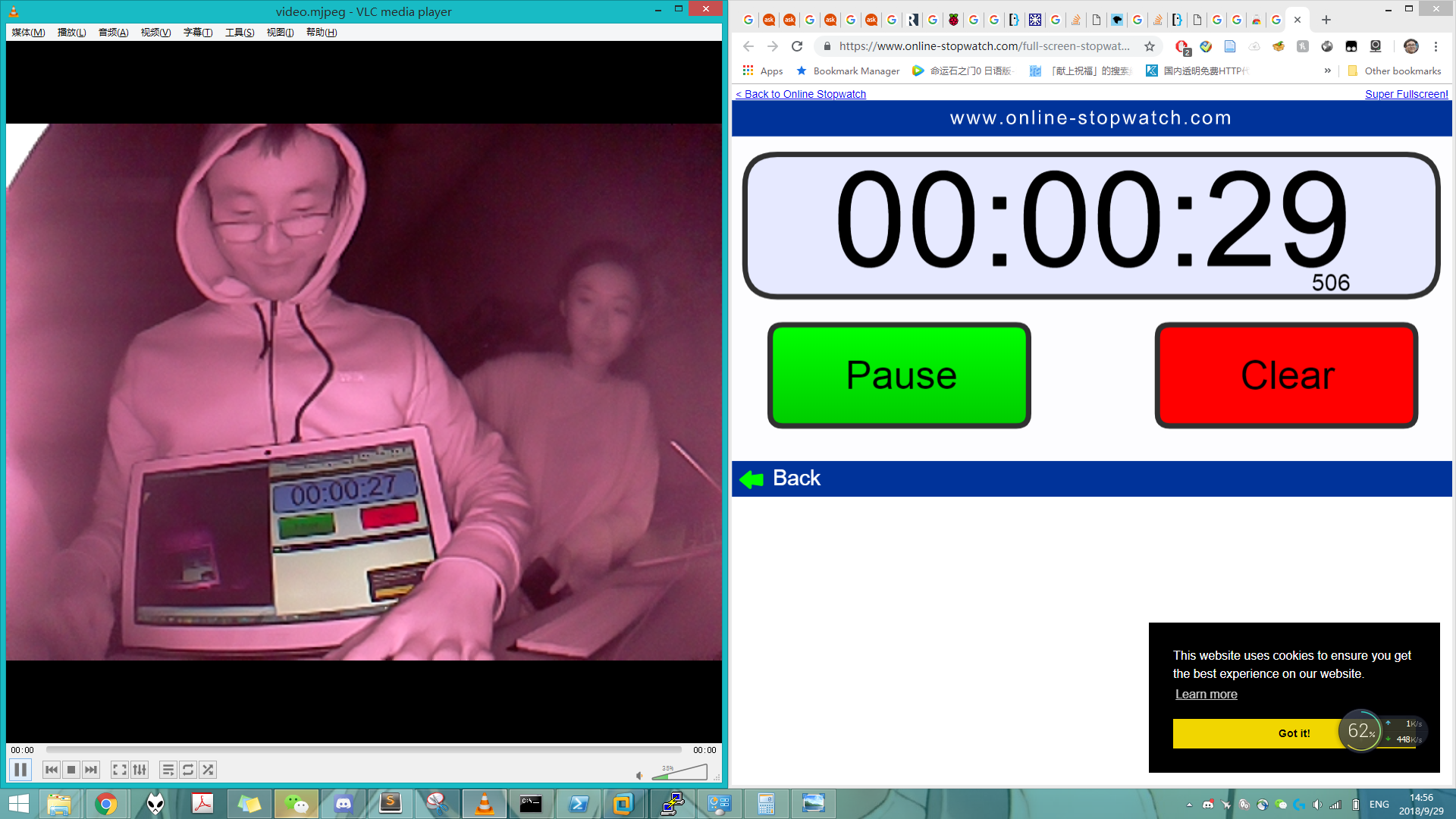

- Tested camera latency

  - Day: at most 2 s

  - Night: at most 3 s

Hubert

- Connected the camera to the Raspberry Pi

- Installed the Raspberry Pi camera module driver

- Installed the uv4l camera video processing and streaming library

- Tested live streaming of the camera video with multiple streaming settings:

  - H264 streaming results in long latency; because of the lack of compression and the bandwidth limit, performance is poor (stutter and heavy lag)

  - MJPEG streaming does not depend on key frames, so a bandwidth bottleneck does not make the stream stutter as badly

  - Achieved a frame rate of about 10 fps, with a latency of at most 2 s in daylight and at most 3 s with night vision

  - H264 can be streamed in VLC player

  - MJPEG can be streamed in a browser and in VLC player

Zilei

- Connected the ultrasonic sensors to the Raspberry Pi

- Tested the range and accuracy of the ultrasonic sensors

- Drafted the baseline algorithm for outlining the surrounding environment based on sensor data

- Refined the Java functions drafted by Yuqing

Yuqing

- Connected the ultrasonic sensors to the Raspberry Pi

- Drafted a Python script that uses the gpiozero module to read data from the ultrasonic sensors

- Drafted Java functions that will be used to plot the position of the vehicle and the outline of the obstacles around it

- Helped devise the baseline algorithm for plotting the outline
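For reference, one simple way to turn distance readings into outline points is sketched below. This is not the baseline algorithm from the figures above and not the Java functions themselves; it is a Python illustration under assumptions. Each sensor is assumed to be mounted at a known bearing relative to the vehicle heading, and readings beyond the roughly 2 m usable range are dropped. The names `reading_to_point`, `outline_points`, and `MAX_RANGE_M` are hypothetical.

```python
import math

MAX_RANGE_M = 2.0  # assumed cutoff, based on the roughly 2 m range we measured


def reading_to_point(x, y, heading_deg, bearing_deg, distance_m):
    """Convert one reading to an (x, y) obstacle point, or None if unusable.

    (x, y) is the vehicle position, heading_deg the vehicle heading, and
    bearing_deg the sensor direction relative to that heading.
    """
    if distance_m is None or distance_m <= 0 or distance_m > MAX_RANGE_M:
        return None
    angle = math.radians(heading_deg + bearing_deg)
    return (x + distance_m * math.cos(angle),
            y + distance_m * math.sin(angle))


def outline_points(pose, readings):
    """Map (bearing_deg, distance_m) pairs to obstacle points, skipping bad ones."""
    x, y, heading_deg = pose
    points = []
    for bearing_deg, distance_m in readings:
        point = reading_to_point(x, y, heading_deg, bearing_deg, distance_m)
        if point is not None:
            points.append(point)
    return points


# Made-up example: vehicle at the origin facing along +x, three sensors.
points = outline_points((0.0, 0.0, 0.0), [(0, 1.0), (90, 0.5), (180, 2.5)])
```

The sketch places each point on the center line of the sensor beam. Since the beam is around 15 degrees wide, the real obstacle can sit anywhere inside that cone, so the plotted outline is only approximate.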