What we did as a team

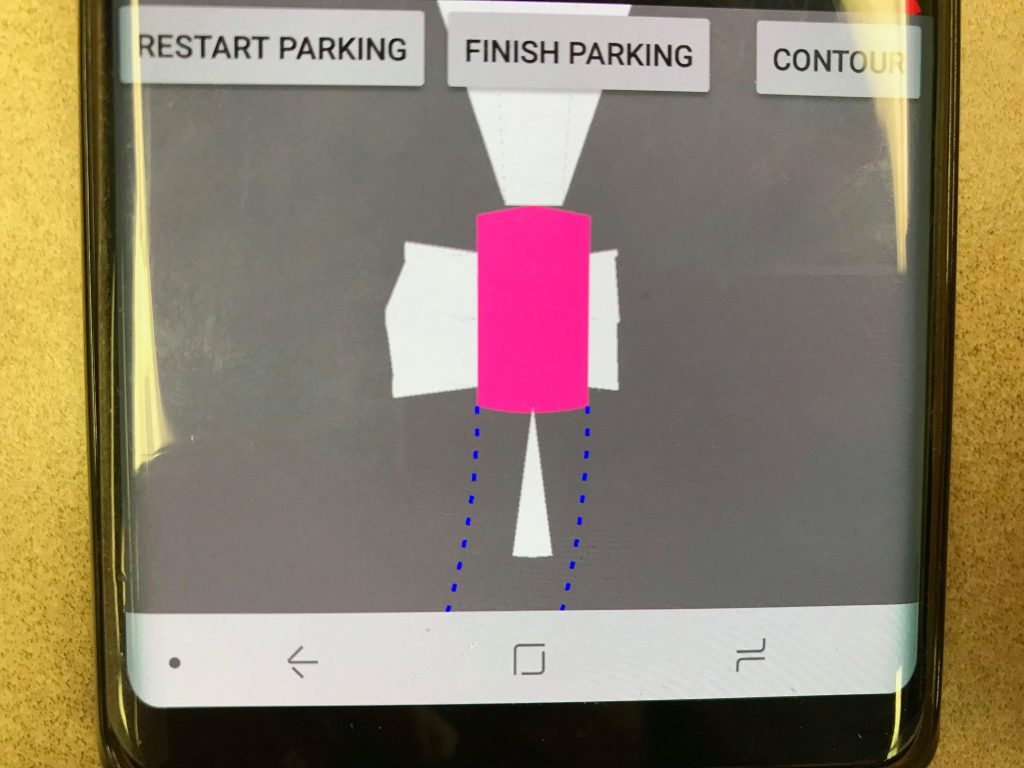

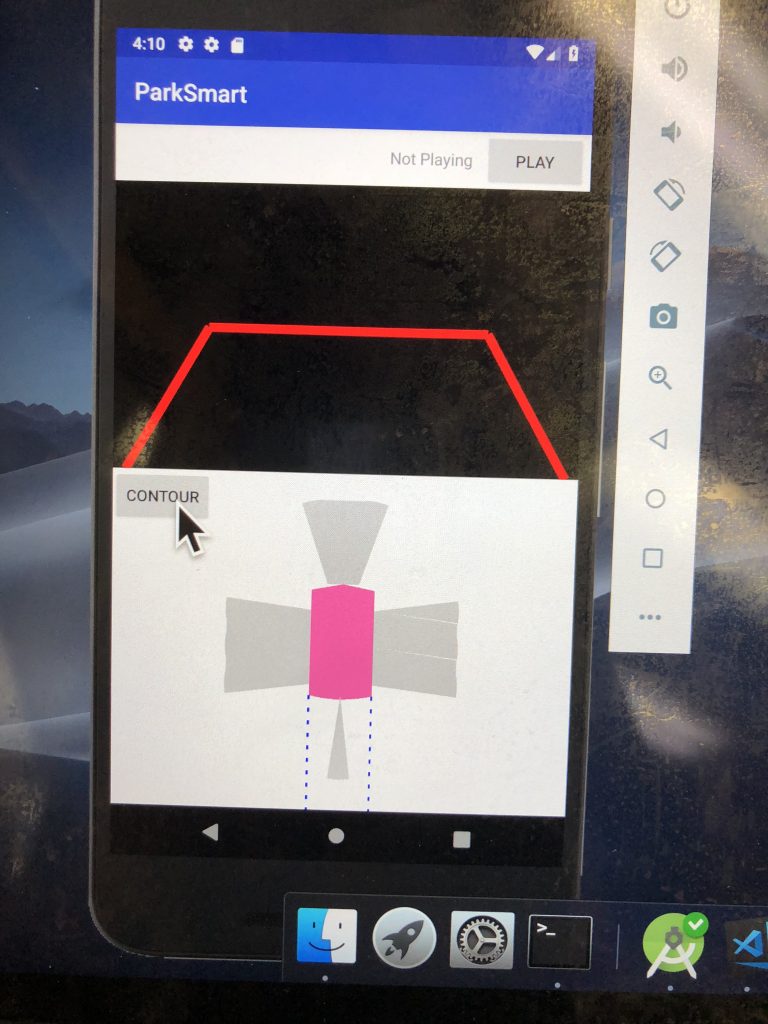

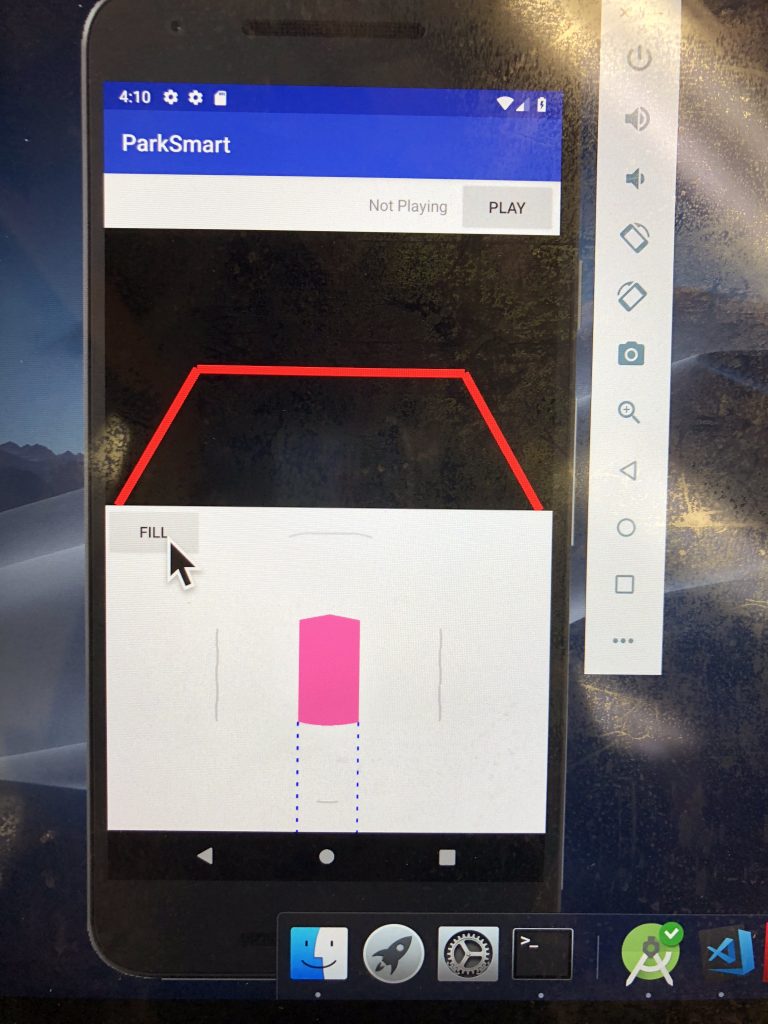

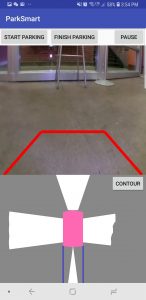

- Integrated the parking algorithm and instruction generation into the Android app

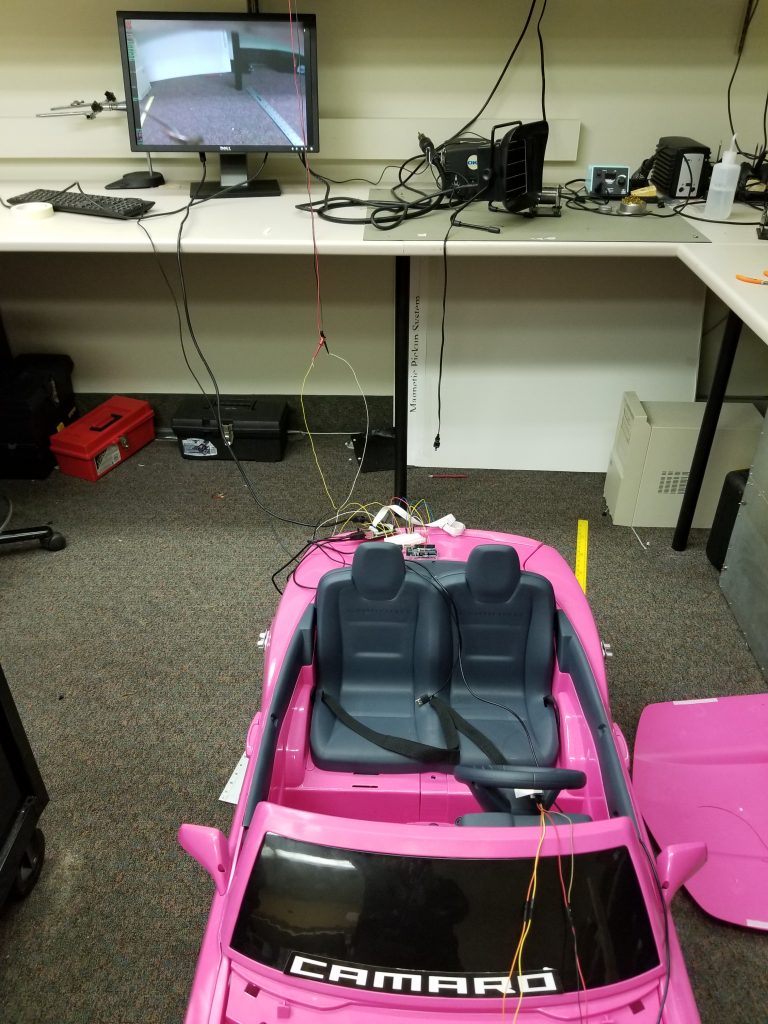

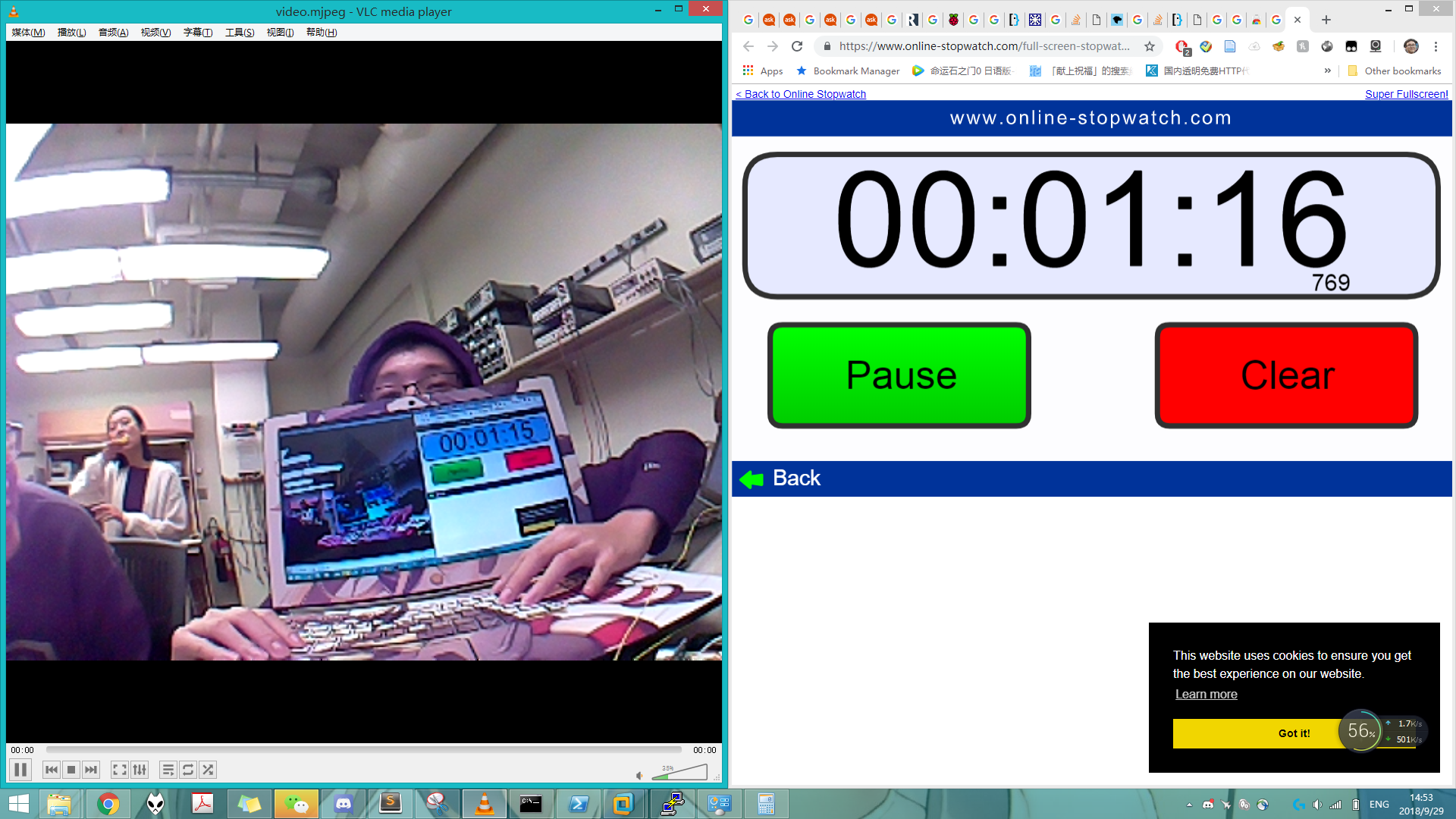

- Conducted various tests

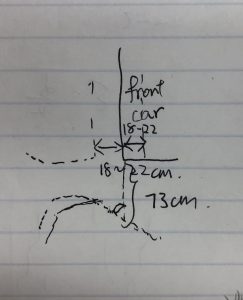

- Distance test for the ultrasonic sensors

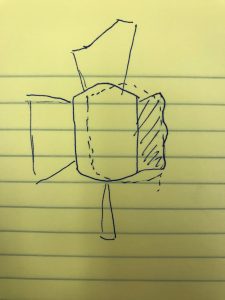

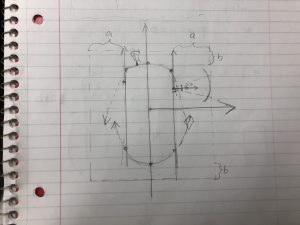

- Predicted path

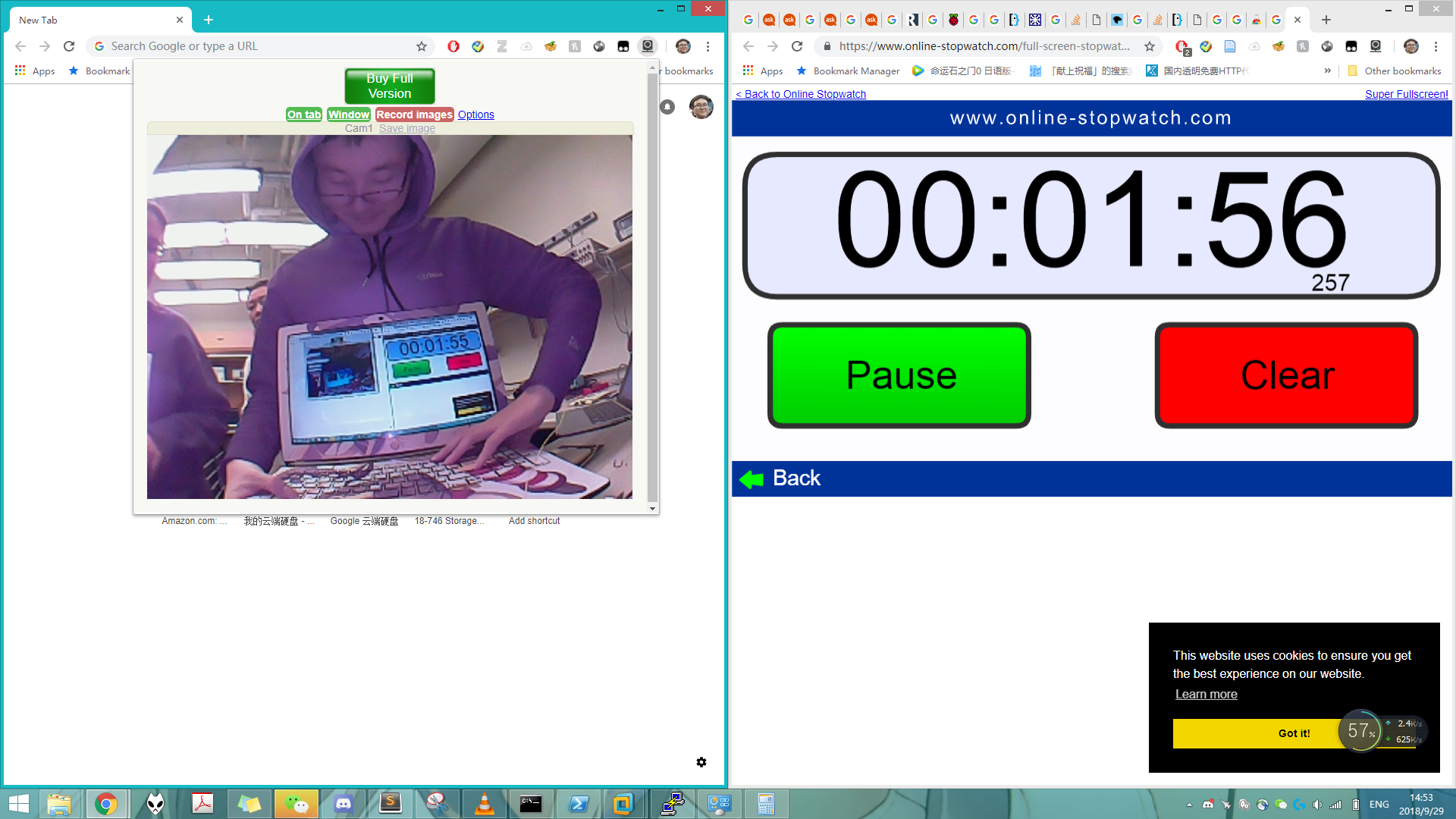

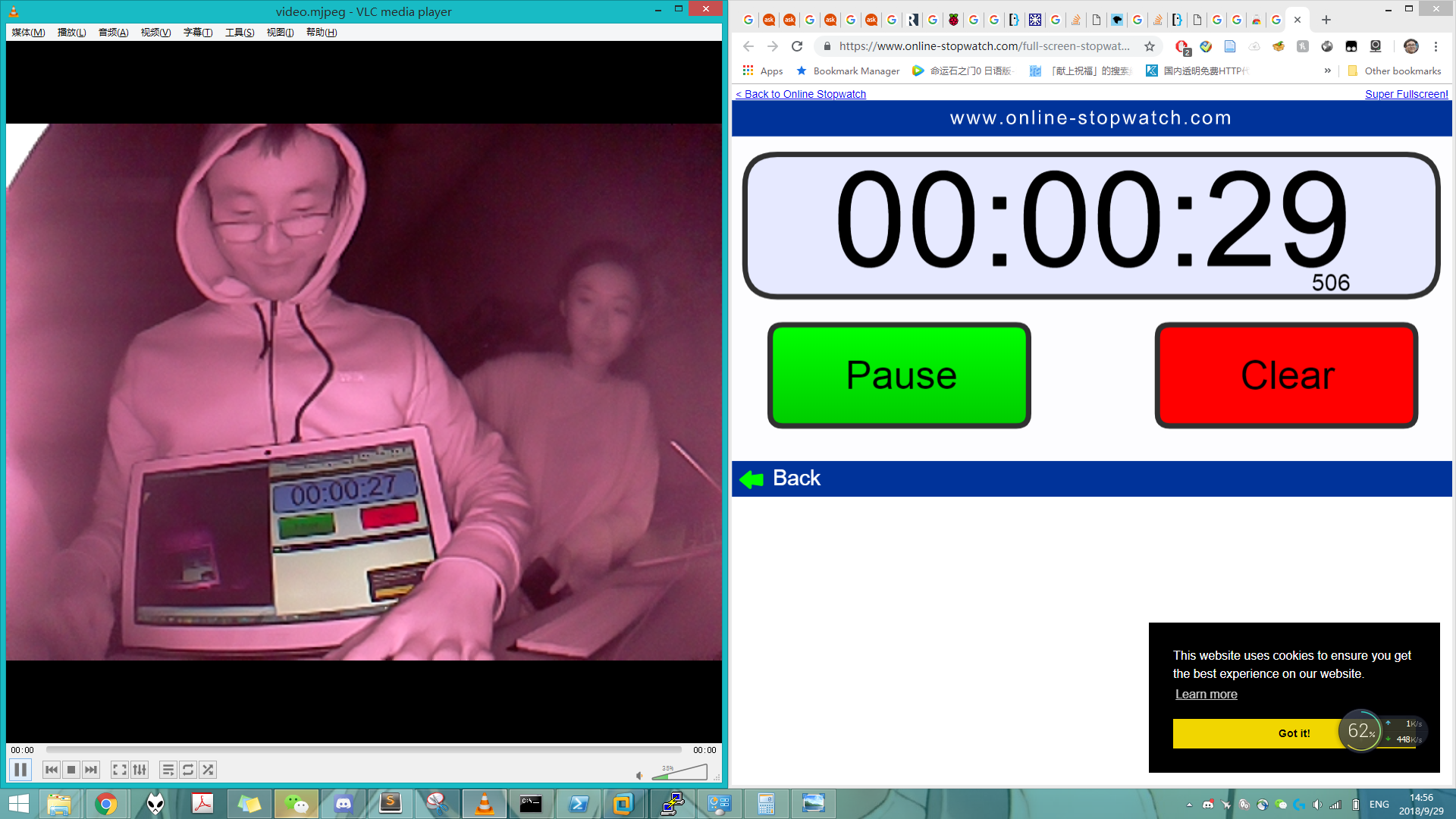

- User testing of the parking algorithm (Yuqing)

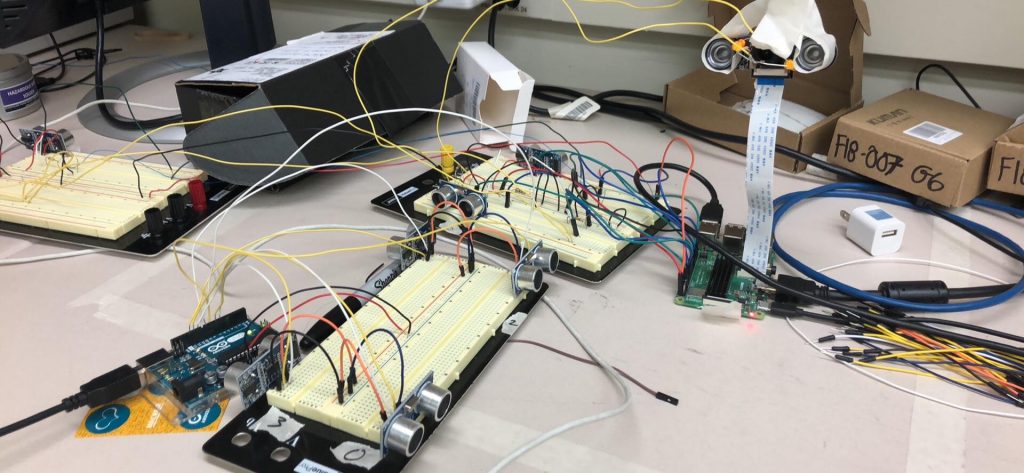

- Setup for testing sensor reading accuracy

Hubert

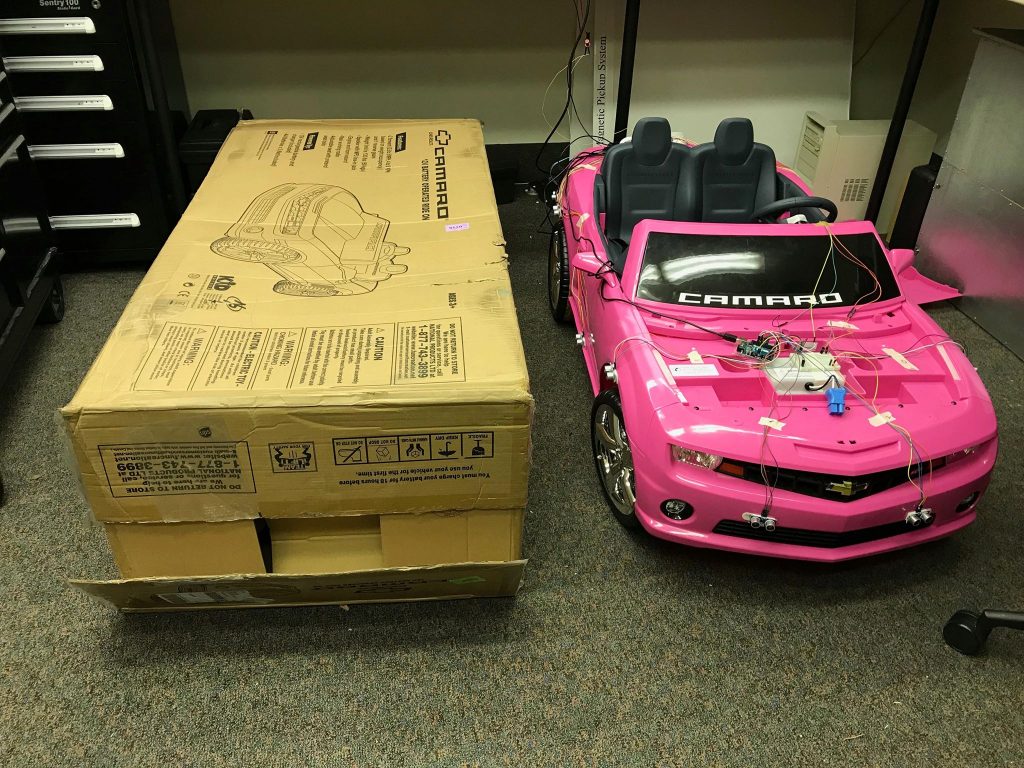

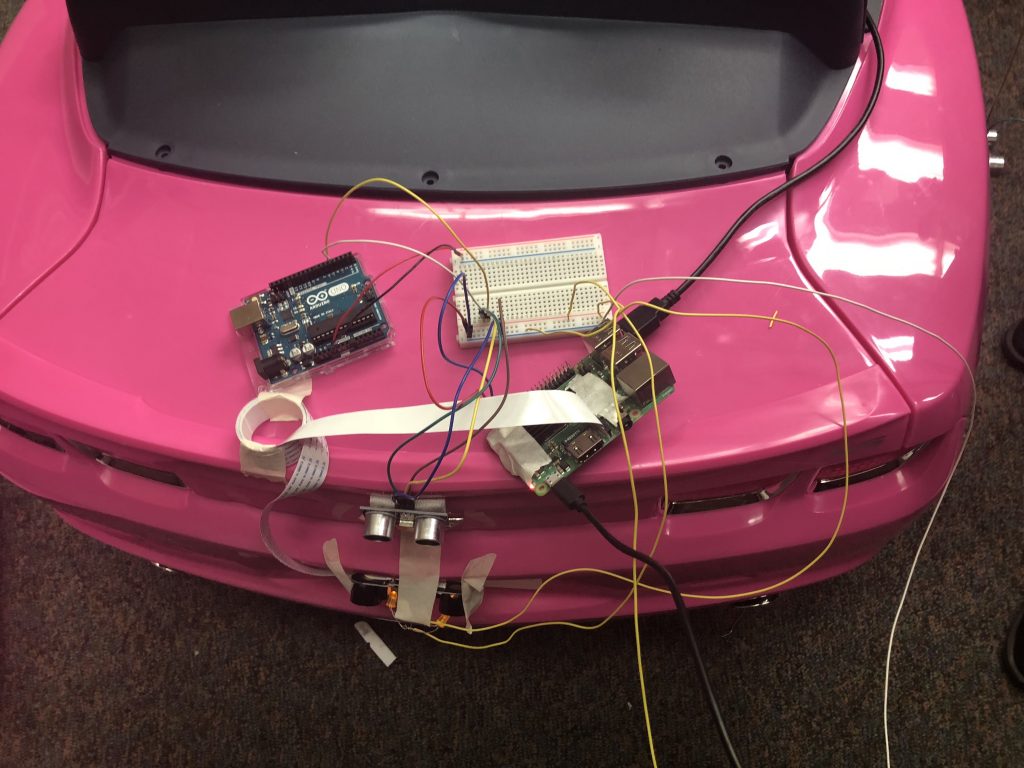

- Rewired the circuits to improve the vehicle's presentation

- Reconfigured the Arduino code to restart automatically after a timeout

Zilei

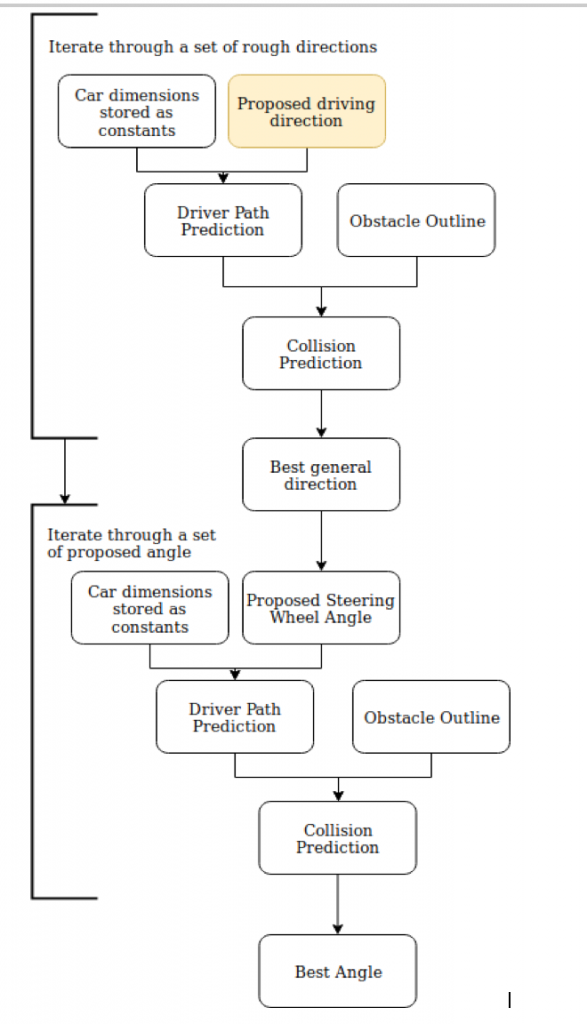

- Finished work on the parking algorithm

- Adjusted the finishing conditions

- Adjusted the verbosity of the instructions during the first phase

- Adjusted the acceptable range of distance to the right during the first phase

- Adjusted the detection mechanism for switching from state 2 to state 3: it previously relied on a single reading point, whose values were unstable, so it now uses two readings
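A two-reading check for the state 2 to state 3 switch could look roughly like the sketch below. It assumes the switching condition can be written as a predicate on a single distance reading. The class name `TwoReadingSwitch`, the 50 cm threshold, and the sample readings are all hypothetical and are not taken from the team's app code.

```python
from collections import deque
from typing import Callable, Deque


class TwoReadingSwitch:
    """Hypothetical sketch: confirm a state 2 -> 3 transition only after
    `required` consecutive readings satisfy `condition`, so a single
    unstable reading cannot trigger the switch on its own."""

    def __init__(self, condition: Callable[[float], bool], required: int = 2) -> None:
        self.condition = condition
        self.required = required
        # Sliding window holding whether each recent reading met the condition.
        self.recent: Deque[bool] = deque(maxlen=required)

    def update(self, reading: float) -> bool:
        # Record whether this reading meets the (assumed) switching condition.
        self.recent.append(self.condition(reading))
        # Switch only once the window is full and every reading in it agrees.
        return len(self.recent) == self.required and all(self.recent)


# Usage with a hypothetical threshold: switch when distance exceeds 50 cm.
switch = TwoReadingSwitch(lambda d: d > 50.0)
decisions = [switch.update(r) for r in [60.0, 20.0, 60.0, 65.0]]
print(decisions)  # [False, False, False, True]
```

The isolated 60 cm reading does not trigger the switch because the next reading (20 cm) disagrees. The switch fires only when two consecutive readings both pass. Raising `required` trades responsiveness for more robustness to noise.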

Yuqing

- Finished the audio instructions for the parking algorithm

- Served as the designated driver